A PyTorch reforged and forked version of the official THoR framework, enhanced and adapted for the SemEval-2024 paper nicolay-r at SemEval-2024 Task 3: Using Flan-T5 for Reasoning Emotion Cause in Conversations with Chain-of-Thought on Emotion States

Update February 23 2025: 🔥 BATCHING MODE SUPPORT. See the 🌌 Flan-T5 provider for the bulk-chain project. A test is available here.

Update 06 June 2024: 🤗 The model, along with its description and usage tutorial, has been uploaded to Hugging Face: 🤗 nicolay-r/flan-t5-emotion-cause-thor-base

Update 06 March 2024: 🔓 `attrdict` was the main obstacle to launching the code in Python 3.10, and has therefore been replaced with `addict` (see Issue #2).
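Both `attrdict` and `addict` let code read nested configuration through attribute access (e.g. `cfg.model.path` instead of `cfg["model"]["path"]`). The sketch below is a minimal, stdlib-only stand-in for that behaviour, written for illustration only. It is not the repository's code, it does not replicate addict's auto-creation of missing keys, and the config keys are hypothetical.

```python
class AttrDict(dict):
    """Hypothetical stand-in for addict.Dict: a dict whose (nested) keys are also attributes."""

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        # Recursively wrap nested plain dicts so that cfg.a.b works.
        for key, value in list(self.items()):
            if isinstance(value, dict) and not isinstance(value, AttrDict):
                self[key] = AttrDict(value)

    def __getattr__(self, name):
        # Only called when normal attribute lookup fails, so dict methods still work.
        try:
            return self[name]
        except KeyError:
            # Unlike addict, a missing key is reported instead of being auto-created.
            raise AttributeError(name) from None

    def __setattr__(self, name, value):
        # Store attributes as keys, wrapping dicts for nested access.
        self[name] = AttrDict(value) if isinstance(value, dict) else value


# Hypothetical config keys, not the repository's actual config.yaml schema.
cfg = AttrDict({"model": {"path": "google/flan-t5-base"}, "bf16": True})
cfg.model.epochs = 1
```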

Update 05 March 2024: Quick breakdowns 🔨 of the arXiv paper are available in a Twitter/X post.

Update 17 February 2024: We support the `--bf16` mode for launching Flan-T5 with the `torch.bfloat16` type; this feature allows training an `xl`-sized model on just a single NVidia-A100 (40GB).
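The bf16 update saves memory because bfloat16 stores each value in 2 bytes instead of float32's 4. It keeps float32's 8-bit exponent, and therefore its range, and gives up mantissa precision (7 stored bits). The stdlib sketch below illustrates this by keeping the top 16 bits of a float32. This is a simplification: real conversions typically round rather than truncate, so it is not how `torch.bfloat16` conversion is implemented. For contrast, float16 has only 5 exponent bits and overflows above 65504.

```python
import struct


def to_bfloat16(x: float) -> float:
    """Round-trip a float through a simplified bfloat16 (truncation, not rounding)."""
    # Reinterpret the IEEE-754 float32 bit pattern as a 32-bit unsigned integer.
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    # bfloat16 = upper 16 bits of float32: 1 sign, 8 exponent, 7 mantissa bits.
    bits &= 0xFFFF0000
    (y,) = struct.unpack("<f", struct.pack("<I", bits))
    return y


def fits_float16(x: float) -> bool:
    """Check whether x can be packed as IEEE half precision (struct format 'e')."""
    try:
        struct.pack("<e", x)
        return True
    except OverflowError:
        return False
```

For example, `to_bfloat16(1e5)` stays finite (it loses low-order bits), while `fits_float16(1e5)` is `False`.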

NOTE: Since the existing fork targets a variety of non-commercial project applications, this repository represents a copy of the originally published code with the following 🔧 enhancements and changes.

NOTE: List of the changes from the original THoR

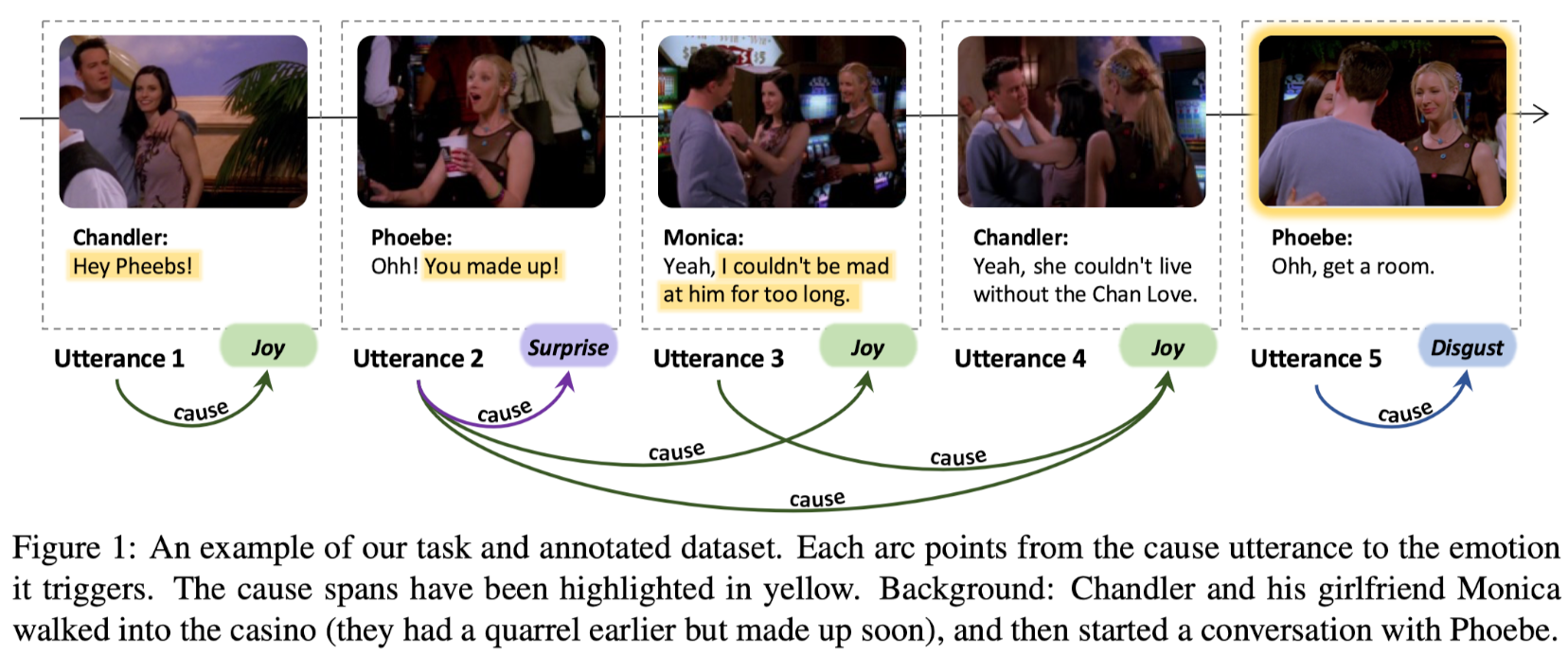

- Input: a conversation containing the speaker and the text of each utterance.

- Output: all emotion-cause pairs, where each pair contains an emotion utterance along with its emotion category and the textual cause span in a specific cause utterance, e.g.:

  - (U3_Joy, U2_“You made up!”)

The complete description of the task is available here.
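To make the input and output format concrete, here is a sketch under assumptions: the class and field names are hypothetical, and the three-utterance conversation is a toy example built around the cause span shown above, not taken from the dataset. It renders a pair in the `U<i>_<Emotion>, U<j>_“<span>”` notation and checks that the cause span actually occurs in the cause utterance.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Utterance:
    index: int   # 1-based position in the conversation (U1, U2, ...)
    speaker: str
    text: str


@dataclass
class EmotionCausePair:
    emotion_utt: int  # index of the utterance expressing the emotion
    emotion: str      # emotion category, e.g. "Joy"
    cause_utt: int    # index of the utterance containing the cause
    cause_span: str   # textual cause span inside the cause utterance

    def render(self) -> str:
        """Format the pair in the notation used in this README."""
        return f"(U{self.emotion_utt}_{self.emotion}, U{self.cause_utt}_“{self.cause_span}”)"

    def is_grounded(self, conversation: List[Utterance]) -> bool:
        """Check that the cause span literally occurs in the cause utterance."""
        texts = {u.index: u.text for u in conversation}
        return self.cause_span in texts.get(self.cause_utt, "")


# Toy conversation with hypothetical speakers and text.
conversation = [
    Utterance(1, "A", "We talked it through last night."),
    Utterance(2, "B", "You made up!"),
    Utterance(3, "A", "We did, and I am so happy."),
]
pair = EmotionCausePair(emotion_utt=3, emotion="Joy", cause_utt=2, cause_span="You made up!")
```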

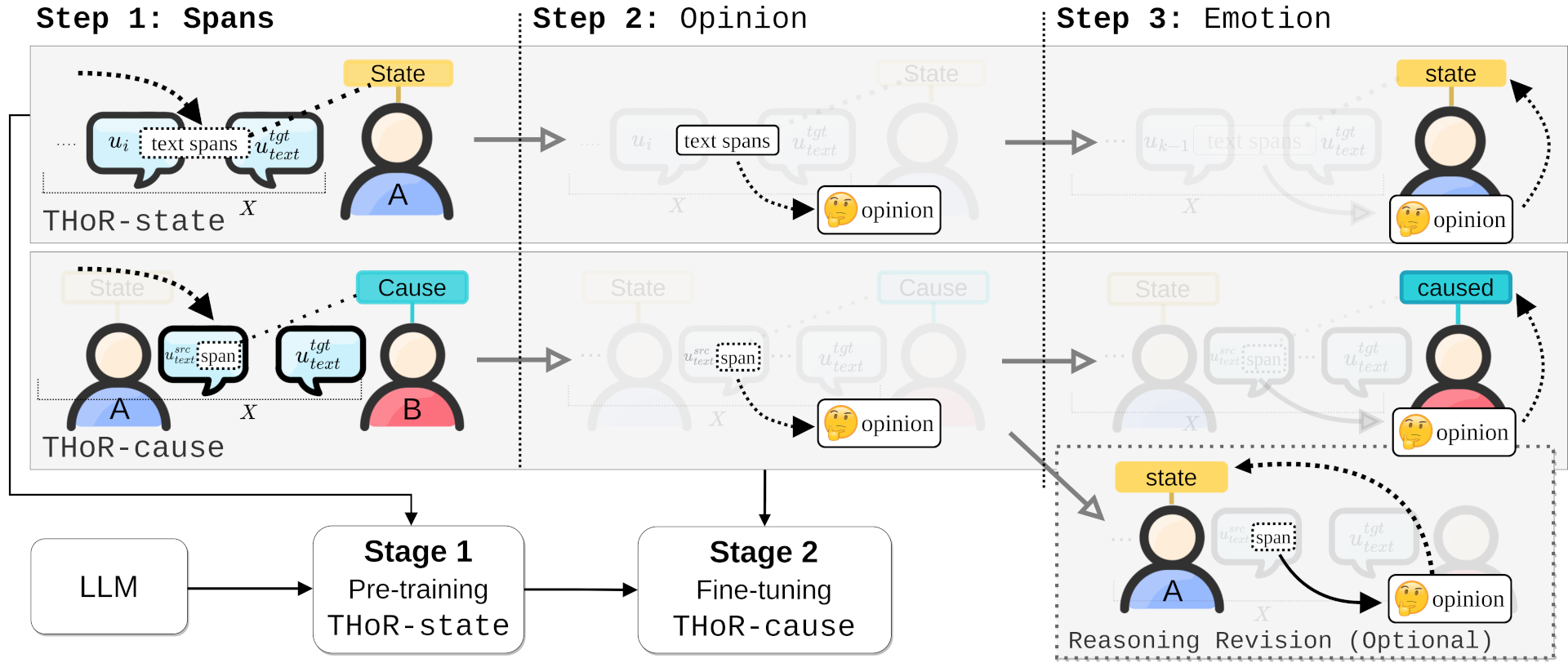

Framework illustration.

We provide a 🔥 notebook for downloading all the necessary data and then launching experiments on an NVidia-V100 or NVidia-A100.

Forming a Codalab submission: please follow this section.

This project has been tested under Python 3.8 and adapted for Python 3.10.

Using pip, you can install the necessary dependencies as follows:

```bash
pip install -r requirements.txt
```

Serialize datasets: we provide the `download_data.py` script for downloading and serializing the manually compiled datasets (D_state and D_cause).

```bash
python download_data.py \
  --cause-test "https://www.dropbox.com/scl/fi/4b2ouqdhgifqy3pmopq08/cause-mult-test.csv?rlkey=tkw0p1e01vezrjbou6v7qh36a&dl=1" \
  --cause-train "https://www.dropbox.com/scl/fi/0tlkwbe5awcss2qmihglf/cause-mult-train.csv?rlkey=x9on1ogzn5kigx7c32waudi21&dl=1" \
  --cause-valid "https://www.dropbox.com/scl/fi/8zjng2uyghbkpbfcogj6o/cause-mult-valid.csv?rlkey=91dgg4ly7p23e3id2230lqsoi&dl=1" \
  --state-train "https://www.dropbox.com/scl/fi/0lokgaeo973wo82ig01hy/state-mult-train.csv?rlkey=tkt1oyo8kwgqs6gp79jn5vbh8&dl=1" \
  --state-valid "https://www.dropbox.com/scl/fi/eu4yuk8n61izygnfncnbo/state-mult-valid.csv?rlkey=tlg8rac4ofkbl9o4ipq6dtyos&dl=1"
```

For reproduction purposes, you may refer to the code of this supplementary repository.
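The download command takes one URL per split. Each URL carries Dropbox's `dl=1` query parameter, which requests the raw file rather than a preview page. The following is a hypothetical sketch, not the actual `download_data.py`. It shows how such per-split flags could be parsed, and how a shared link could be normalised to `dl=1` before downloading. The function names are illustrative assumptions, and nothing is fetched.

```python
import argparse
from typing import Dict, List
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Split names taken from the command-line flags shown above.
SPLITS = ["cause-test", "cause-train", "cause-valid", "state-train", "state-valid"]


def force_direct_download(url: str) -> str:
    """Set dl=1 in a Dropbox shared link, keeping other query parameters (e.g. rlkey)."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    query["dl"] = "1"
    return urlunsplit(parts._replace(query=urlencode(query)))


def parse_sources(argv: List[str]) -> Dict[str, str]:
    """Hypothetical mirror of the download flags: map each split to a direct-download URL."""
    parser = argparse.ArgumentParser(description="Illustrative dataset-source parser")
    for split in SPLITS:
        parser.add_argument(f"--{split}", required=True)
    # argparse turns "--cause-test" into the attribute name "cause_test".
    args = vars(parser.parse_args(argv))
    return {split: force_direct_download(args[split.replace("-", "_")]) for split in SPLITS}
```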

Use Flan-T5 as the backbone LLM reasoner:

NOTE: We set up the `base` reasoner in config.yaml. However, it is highly recommended to choose the largest reasoning model you can afford (`xl` or higher) for fine-tuning.
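One rough way to decide which model you can afford is a back-of-the-envelope memory estimate. In the sketch below, the parameter counts are the approximate sizes commonly reported for the public Flan-T5 checkpoints. The `multiplier` is a hypothetical assumption, not a measured figure: it stands for the memory of gradients and optimizer state relative to the weights. Real usage also depends on batch size, sequence length and the optimizer. Under these assumptions, the helper picks `xl` for a 40 GB budget in bf16 (2 bytes per value) but only `large` in float32 (4 bytes per value).

```python
from typing import Optional

# Approximate parameter counts commonly reported for the Flan-T5 checkpoints.
FLAN_T5_PARAMS = {
    "google/flan-t5-small": 80e6,
    "google/flan-t5-base": 250e6,
    "google/flan-t5-large": 780e6,
    "google/flan-t5-xl": 3e9,
    "google/flan-t5-xxl": 11e9,
}


def training_footprint_gb(n_params: float, bytes_per_value: int, multiplier: float = 4.0) -> float:
    """Crude estimate in GB; `multiplier` is a hypothetical factor for weights + grads + optimizer."""
    return n_params * bytes_per_value * multiplier / 1e9


def largest_affordable(budget_gb: float, bytes_per_value: int, multiplier: float = 4.0) -> Optional[str]:
    """Return the largest checkpoint whose estimated footprint fits the budget, or None."""
    fitting = [
        name
        for name, n in FLAN_T5_PARAMS.items()
        if training_footprint_gb(n, bytes_per_value, multiplier) <= budget_gb
    ]
    return max(fitting, key=FLAN_T5_PARAMS.__getitem__) if fitting else None
```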

We provide separate engines; for each engine, the source of its prompts is as follows:

- `prompt_state`: instruction wrapped into the prompt
- `prompt_cause`: instruction wrapped into the prompt
- `thor_state`: class of the prompts
- `thor_cause`: class of the prompts
- `thor_cause_rr`: class of the prompts, same as `thor_cause`
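The `prompt_*` engines wrap a single instruction into the prompt, while the `thor_*` engines use a class of prompts, i.e. multi-step reasoning. The hedged sketch below shows the two shapes: one-step prompting versus chained prompting, where each hop sees the previous questions and answers. Here `generate` stands in for a Flan-T5 call, and the hop questions are hypothetical placeholders, not the repository's actual prompts.

```python
from typing import Callable, List

Generate = Callable[[str], str]


def one_step(generate: Generate, context: str, instruction: str) -> str:
    """prompt-style engine: a single instruction wrapped around the input."""
    return generate(f"{context}\n{instruction}")


def multi_step(generate: Generate, context: str, hops: List[str]) -> List[str]:
    """THoR-style chain: each hop's prompt includes all previous questions and answers."""
    prompt, answers = context, []
    for question in hops:
        prompt = f"{prompt}\n{question}"
        answer = generate(prompt)
        answers.append(answer)
        prompt = f"{prompt} {answer}"  # feed the answer into the next hop
    return answers


# A fake generator that records the prompts it receives, standing in for the model.
seen_prompts: List[str] = []


def fake_generate(prompt: str) -> str:
    seen_prompts.append(prompt)
    return f"<answer {len(seen_prompts)}>"


answers = multi_step(fake_generate, "U1: hi", ["Q1?", "Q2?"])
```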

Use the main.py script with command-line arguments to run the Flan-T5-based THOR system.

```bash
python main.py -c <CUDA_INDEX> \
  -m "google/flan-t5-base" \
  -r [prompt|thor_state|thor_cause|thor_cause_rr] \
  -d [state_se24|cause_se24] \
  -lf "optional/path/to/the/pretrained/state" \
  -es <EPOCH_SIZE> \
  -bs <BATCH_SIZE> \
  -f <YAML_CONFIG>
```

- `-c`, `--cuda_index`: index of the GPU to use for computation (default: `0`).
- `-d`, `--data_name`: name of the dataset. Choices are `state_se24` or `cause_se24`.
- `-m`, `--model_path`: path to the model on Hugging Face.
- `-r`, `--reasoning`: reasoning mode, either the one-step `prompt` mode or a multi-step `thor` mode.
- `-li`, `--load_iter`: load the state at a specific index from the same `data_name` resource (default: `-1`, i.e. not applicable).
- `-lp`, `--load_path`: load the state from a specific path.
- `-p`, `--instruct`: instructive prompt for the `prompt` training engine; involves the `target` parameter only.
- `-es`, `--epoch_size`: number of training epochs (default: `1`).
- `-bs`, `--batch_size`: batch size (default: `None`).
- `-lr`, `--bert_lr`: learning rate (default: `2e-4`).
- `-t`, `--temperature`: temperature (default: `gen_config.temperature`).
- `-v`, `--validate`: run in zero-shot mode on the `valid` set.
- `-i`, `--infer_iter`: run inference on the `test` dataset to form answers.
- `-f`, `--config`: location of the config.yaml file.
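When scripting several runs, it can help to validate the documented choices before launching. The helper below is a hypothetical sketch (`build_command` is not part of the repository). It assembles a `main.py` command line from the flags listed above, and appends `--bf16` on the assumption that the bf16 mode from the February 2024 update is passed as a `main.py` flag. The batch size in the example is an arbitrary illustrative value.

```python
import shlex

# Choices documented for -r/--reasoning and -d/--data_name.
REASONING_MODES = ("prompt", "thor_state", "thor_cause", "thor_cause_rr")
DATASETS = ("state_se24", "cause_se24")


def build_command(cuda_index: int, model: str, reasoning: str, data: str,
                  epochs: int, batch_size: int, config: str, bf16: bool = False) -> str:
    """Assemble a shell-safe main.py invocation, rejecting undocumented choices."""
    if reasoning not in REASONING_MODES:
        raise ValueError(f"unknown reasoning mode: {reasoning}")
    if data not in DATASETS:
        raise ValueError(f"unknown dataset: {data}")
    argv = ["python", "main.py",
            "-c", str(cuda_index), "-m", model, "-r", reasoning, "-d", data,
            "-es", str(epochs), "-bs", str(batch_size), "-f", config]
    if bf16:
        argv.append("--bf16")  # assumption: bf16 mode is enabled via this flag
    return shlex.join(argv)  # quotes arguments only where the shell needs it


cmd = build_command(0, "google/flan-t5-base", "thor_cause_rr", "cause_se24",
                    epochs=1, batch_size=32, config="config.yaml", bf16=True)
```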

Further parameters can be configured in the config.yaml file.

All the services not related to Codalab are part of another repository (link below 👇).

Once the results have been inferred (see the THOR-cause-rr results example), you may refer to the following code to form a submission:

The original THoR project:

```bibtex
@inproceedings{FeiAcl23THOR,
  title = {Reasoning Implicit Sentiment with Chain-of-Thought Prompting},
  author = {Fei, Hao and Li, Bobo and Liu, Qian and Bing, Lidong and Li, Fei and Chua, Tat-Seng},
  booktitle = {Proceedings of the Annual Meeting of the Association for Computational Linguistics},
  pages = {1171--1182},
  year = {2023}
}
```

You can cite this work as follows:

```bibtex
@article{rusnachenko2024nicolayr,
  title = {nicolay-r at SemEval-2024 Task 3: Using Flan-T5 for Reasoning Emotion Cause in Conversations with Chain-of-Thought on Emotion States},
  booktitle = {Proceedings of the Annual Conference of the North American Chapter of the Association for Computational Linguistics},
  author = {Nicolay Rusnachenko and Huizhi Liang},
  year = {2024},
  month = jun,
  address = {Mexico City, Mexico},
  publisher = {Annual Conference of the North American Chapter of the Association for Computational Linguistics}
}
```

This code draws on the following projects: CoT, Flan-T5, and Transformers.

The code is released under Apache License 2.0 for Noncommercial use only.