Repository of FHIR and annotation resources used to benchmark NLP Sandbox tools

This repository provides a Python-Flask implementation of the NLP Sandbox Data Node. This Data Node relies on a MongoDB instance to store FHIR and annotation resources used to benchmark NLP Sandbox tools.

This Data Node can be used to:

- Create and manage datasets

- Create and manage FHIR stores

- Store and retrieve FHIR patient profiles

- Store and retrieve clinical notes

- Create and manage annotation stores

- Store and retrieve text annotations
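The operations above act on nested resources: stores live inside a dataset, and patients, notes and annotations live inside a store. The sketch below shows how such nested REST paths could be built. The base URL `http://localhost:8080/api/v1` and the path layout (`/datasets/{id}/fhirStores/{id}/fhir/Patient/{id}`, `/fhir/Note/{id}`, `/annotationStores/{id}/annotations/{id}`) are assumptions for illustration only. Check them against the specification of the Data Node API version you are running.

```python
from urllib.parse import quote

# Assumed base URL of a Data Node started with the Python development server.
# Hypothetical: confirm the base path against the Data Node API specification.
BASE_URL = "http://localhost:8080/api/v1"


def _seg(value: str) -> str:
    """Percent-encode one path segment so IDs cannot inject extra '/' parts."""
    return quote(value, safe="")


def dataset_path(dataset_id: str) -> str:
    return f"{BASE_URL}/datasets/{_seg(dataset_id)}"


def fhir_store_path(dataset_id: str, fhir_store_id: str) -> str:
    return f"{dataset_path(dataset_id)}/fhirStores/{_seg(fhir_store_id)}"


def patient_path(dataset_id: str, fhir_store_id: str, patient_id: str) -> str:
    # Assumed layout: patient profiles exposed as FHIR "Patient" resources.
    return f"{fhir_store_path(dataset_id, fhir_store_id)}/fhir/Patient/{_seg(patient_id)}"


def note_path(dataset_id: str, fhir_store_id: str, note_id: str) -> str:
    # Assumed layout: clinical notes exposed as "Note" resources.
    return f"{fhir_store_path(dataset_id, fhir_store_id)}/fhir/Note/{_seg(note_id)}"


def annotation_path(dataset_id: str, annotation_store_id: str, annotation_id: str) -> str:
    return (
        f"{dataset_path(dataset_id)}/annotationStores/{_seg(annotation_store_id)}"
        f"/annotations/{_seg(annotation_id)}"
    )
```

For example, `note_path("example", "evaluation", "note-1")` yields `http://localhost:8080/api/v1/datasets/example/fhirStores/evaluation/fhir/Note/note-1`; the IDs `example`, `evaluation` and `note-1` are hypothetical.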

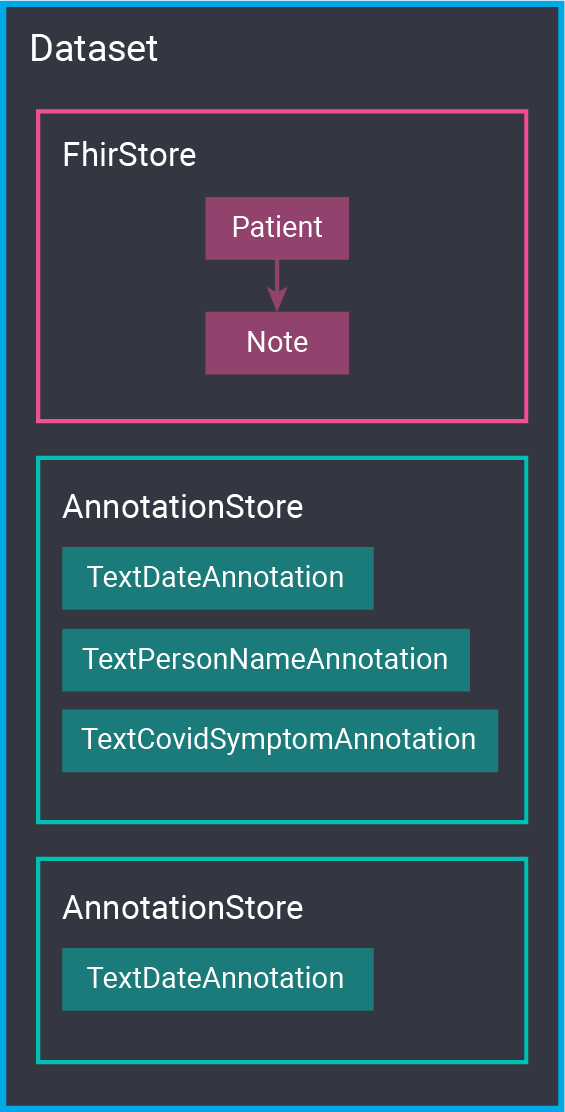

The figure below illustrates the organization of the data. A Dataset can have one or more FhirStores and AnnotationStores. An AnnotationStore can include different types of annotations. In NLPSandbox.io, the gold standard of a dataset is stored in one AnnotationStore. We then use N AnnotationStores to store the predictions generated by N tools contributed to NLPSandbox.io.
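The hierarchy described above can be modeled in memory as a few nested dataclasses. This is a minimal sketch of the relationships only, not the Data Node's actual data model. The store IDs `evaluation`, `goldstandard` and `<tool>-predictions` and the `dict` representation of annotations are hypothetical choices.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FhirStore:
    store_id: str


@dataclass
class AnnotationStore:
    store_id: str
    # An AnnotationStore can hold annotations of different types; here each
    # annotation is a plain dict (hypothetical representation).
    annotations: List[dict] = field(default_factory=list)


@dataclass
class Dataset:
    dataset_id: str
    fhir_stores: List[FhirStore] = field(default_factory=list)
    annotation_stores: List[AnnotationStore] = field(default_factory=list)


def build_benchmark_dataset(dataset_id: str, tool_names: List[str]) -> Dataset:
    """Create one FhirStore, one gold-standard AnnotationStore, and one
    prediction AnnotationStore per tool (N tools -> N prediction stores)."""
    dataset = Dataset(dataset_id)
    dataset.fhir_stores.append(FhirStore("evaluation"))
    dataset.annotation_stores.append(AnnotationStore("goldstandard"))
    for name in tool_names:
        dataset.annotation_stores.append(AnnotationStore(f"{name}-predictions"))
    return dataset
```

With two tools, `build_benchmark_dataset("example", ["tool-a", "tool-b"])` produces one FhirStore and three AnnotationStores: the gold standard followed by one prediction store per tool.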

- Data Node API version: 1.2.0

- Data Node version: 1.2.1

- Docker image: nlpsandbox/data-node

Create the configuration file.

cp .env.example .env

The command below starts the Data Node locally.

docker-compose up --build

Stop the containers with Ctrl+C, then run docker-compose down to remove them.

We recommend using a Conda environment to install and run the Data Node.

conda create --name data-node python=3.9.4

conda activate data-node

Create the configuration file and export its parameters to environment variables.

cp .env.example .env

export $(grep -v '^#' .env | xargs -d '\n')
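The export command above drops lines that start with `#` and passes each remaining `KEY=VALUE` line to `export`. If you launch the Data Node from a Python script instead of a shell, a rough equivalent is sketched below. It is an assumption-laden illustration, not part of the Data Node: it also skips blank lines, splits on the first `=`, and keeps values verbatim (quotes included, as `xargs -d '\n'` does). A Python process can only set variables for itself and its children, never for the parent shell.

```python
import os
from typing import Dict, Iterable


def parse_env_lines(lines: Iterable[str]) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and lines starting with '#'."""
    env: Dict[str, str] = {}
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line or line.startswith("#"):
            continue
        # Split on the first '=' only, so values may themselves contain '='.
        key, sep, value = line.partition("=")
        if sep:  # ignore lines without '='
            env[key] = value
    return env


def load_env_file(path: str = ".env") -> Dict[str, str]:
    """Read the configuration file and export its parameters to os.environ."""
    with open(path, encoding="utf-8") as f:
        env = parse_env_lines(f)
    os.environ.update(env)
    return env
```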

Start the MongoDB instance.

docker-compose up -d db

Install and start the Data Node.

cd server/

pip install -r requirements.txt

python -m openapi_server

The Data Node provides a web interface that you can use to create and manage resources. The address of this interface depends on whether you run the Data Node using Docker (production mode) or the Python development server.

- Using Docker: http://localhost/ui

- Using Python: http://localhost:8080/ui
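To check from a script that the web interface is up, for example right after docker-compose up, you can request the address that matches how you started the Data Node. The sketch below uses only the Python standard library; the mode names `docker` and `python` and the helper names are hypothetical. Any HTTP response, including an error status, counts as reachable, while connection failures and timeouts do not.

```python
import urllib.error
import urllib.request

# UI addresses for the two ways of running the Data Node.
UI_URLS = {
    "docker": "http://localhost/ui",
    "python": "http://localhost:8080/ui",
}


def ui_url(mode: str) -> str:
    """Return the UI address for a run mode ('docker' or 'python')."""
    try:
        return UI_URLS[mode]
    except KeyError:
        raise ValueError(f"unknown mode {mode!r}; expected one of {sorted(UI_URLS)}") from None


def is_reachable(url: str, timeout: float = 2.0) -> bool:
    """Return True if an HTTP GET to `url` receives any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, even if with an error status code.
        return True
    except OSError:
        # URLError (connection refused, DNS failure) and timeouts land here.
        return False
```

For example, `is_reachable(ui_url("python"))` returns `True` once the development server is listening on port 8080.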

Thinking about contributing to this project? Get started by reading our Contributor Guide.