This repository is a JAX implementation of lightweight license plate recognition (LPR) models. The default weights are trained on a Korean license plate dataset, but the model can also be trained on any other license plate dataset.

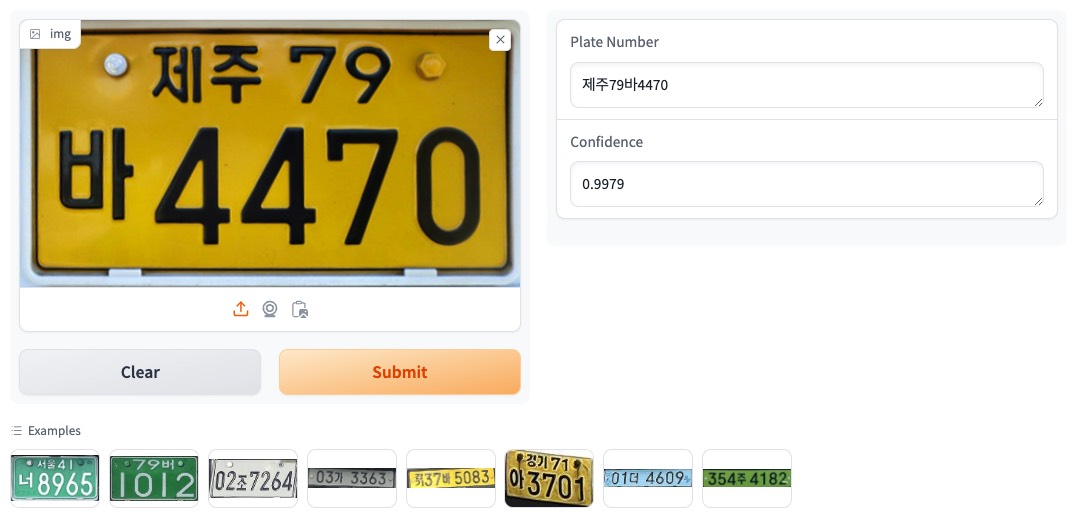

Try the model on Hugging Face Spaces!

You can download any of the files required for deployment, including the model weights, the preprocessing and postprocessing scripts, and the model configuration file, from Hugging Face Spaces and deploy the model in the cloud or on edge devices.

Labeled data is required to train the model. The data should be organized as follows:

- data
  - `labels.names`
  - train
    - `{license_plate_number}_{index}.jpg`
    - `{license_plate_number}_{index}.txt`
    - ...
  - val
    - `{license_plate_number}_{index}.jpg`
    - `{license_plate_number}_{index}.txt`
    - ...

`license_plate_number` is the license plate number. Make sure it is formatted like 12가1234 or 서울12가1234, and prepare a dict that maps every character of the license plate number to an integer. Save this dict as the `labels.names` file. `index` is the image number, which distinguishes multiple images of the same license plate number. Each .txt file contains the bounding boxes of the characters in the license plate number, one box per line. The format of the .txt file is as follows:

x1 y1 x2 y2

...

xn yn xn yn

The order of the bounding boxes should be the same as the order of the characters in the license plate number.
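The naming and label conventions above can be sketched in plain Python. This is only an illustration, not the repository's dataloader: the function names `load_label_map` and `parse_sample` are hypothetical, and the sketch assumes that `labels.names` lists one character per line (with the line index as the integer label) and that each line of the .txt file holds four whitespace-separated numbers.

```python
from pathlib import Path


def load_label_map(names_text):
    """Build {character: integer} from the text of labels.names.

    Assumption: one character per line, label = line index.
    """
    chars = [line.strip() for line in names_text.splitlines() if line.strip()]
    return {ch: i for i, ch in enumerate(chars)}


def parse_sample(image_name, boxes_text, label_map):
    """Split '{license_plate_number}_{index}.jpg' and read its boxes."""
    # Split on the last underscore so the plate number itself is untouched.
    plate, _, index = Path(image_name).stem.rpartition("_")
    labels = [label_map[ch] for ch in plate]

    boxes = []
    for line in boxes_text.splitlines():
        if not line.strip():
            continue
        values = [float(v) for v in line.split()]
        if len(values) != 4:
            raise ValueError(f"expected 4 numbers per box, got: {line!r}")
        boxes.append(tuple(values))

    # One box per character, in the same order as the characters.
    if len(boxes) != len(labels):
        raise ValueError("number of boxes does not match number of characters")
    return plate, int(index), labels, boxes


# Toy label file and box file, made up for this example.
label_map = load_label_map("0\n1\n2\n3\n4\n가\n")
boxes_text = "\n".join(f"{10 * i} 0 {10 * i + 8} 20" for i in range(7))
plate, index, labels, boxes = parse_sample("12가1234_0.jpg", boxes_text, label_map)
print(plate, index, labels)
```

Checking that the number of boxes equals the number of characters catches mislabeled samples before training starts.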

The dataloader parses the data and converts the license plate characters to integers using the `labels.names` file. The license plate images are resized to (96, 192) or any other size you choose. In addition, a mask of the license plate number is created from the bounding boxes and used to calculate the loss.
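As a rough sketch of the mask step, the snippet below rasterizes character boxes into a binary mask at the resized resolution. It is not the repository's implementation. It assumes that each box is given as top-left and bottom-right pixel coordinates in the original image, that (96, 192) means (height, width), and that the mask is binary; the actual dataloader may differ on all three points. The helper name `boxes_to_mask` is hypothetical, and NumPy is used so the example runs without JAX installed.

```python
import numpy as np


def boxes_to_mask(boxes, src_hw, dst_hw=(96, 192)):
    """Rasterize (x1, y1, x2, y2) boxes into a binary mask of shape dst_hw."""
    src_h, src_w = src_hw
    dst_h, dst_w = dst_hw
    # Scale factors from original image coordinates to the resized image.
    scale_y, scale_x = dst_h / src_h, dst_w / src_w

    mask = np.zeros((dst_h, dst_w), dtype=np.float32)
    for x1, y1, x2, y2 in boxes:
        # Round outward and clip so every box stays inside the mask.
        left = max(0, int(np.floor(min(x1, x2) * scale_x)))
        right = min(dst_w, int(np.ceil(max(x1, x2) * scale_x)))
        top = max(0, int(np.floor(min(y1, y2) * scale_y)))
        bottom = min(dst_h, int(np.ceil(max(y1, y2) * scale_y)))
        mask[top:bottom, left:right] = 1.0
    return mask


# Two character boxes on a 48x96 (height x width) image, made up for this example.
mask = boxes_to_mask([(0, 0, 10, 20), (30, 0, 40, 20)], src_hw=(48, 96))
print(mask.shape, mask.sum())
```

Because the boxes are scaled with the same factors as the image, the mask stays aligned with the resized plate regardless of the original resolution.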

The losses of the model are as follows:

For CTC:

For Mask:

| Model | Input Shape | Size | Accuracy | Speed (ms) |
|---|---|---|---|---|
| tinyLPR | (96, 192, 1) | 86 KB | 99.08 % | 0.44 |

The speed was measured on an Apple M2 chip.