A curated list of awesome temporal action segmentation resources. Inspired by awesome-machine-learning-resources.

⭐ Please leave a STAR if you like this project! ⭐

🔥 Hugging Face datasets for three common TAS benchmarks are now available. Check them out here:

Do let us know if there are any IP issues with hosting these datasets on Hugging Face!

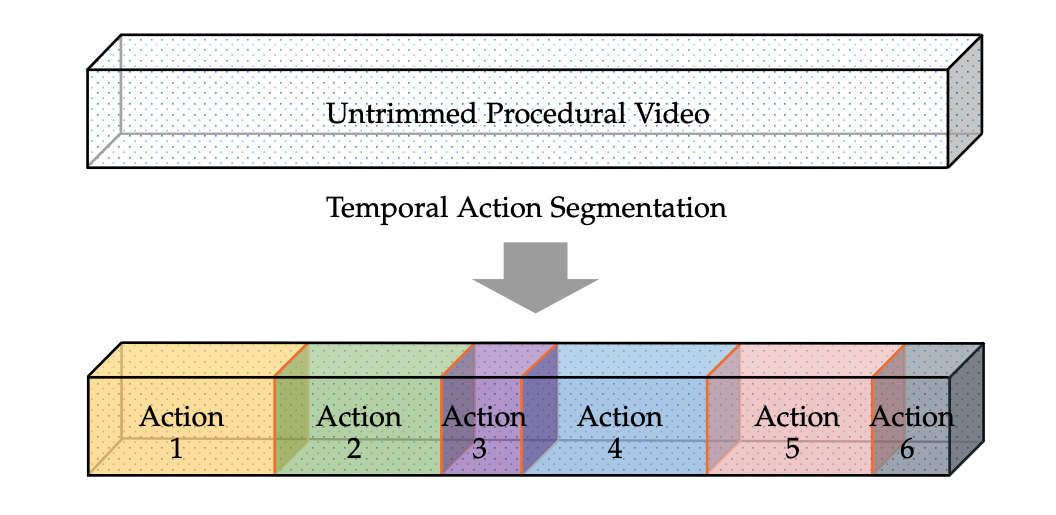

Temporal Action Segmentation takes an untrimmed video sequence as input, segments it along the temporal dimension into clips, and infers the semantics of the action in each clip.

- ATLAS tutorial in conjunction with ECCV 2022. [Tutorial]

- Temporal Action Segmentation: An Analysis of Modern Techniques

[pdf]

- Guodong Ding, Fadime Sener, and Angela Yao, TPAMI 2023.

Multiple datasets have been used to benchmark the performance of temporal action segmentation approaches. The most commonly adopted datasets are as follows:

- The Language of Actions: Recovering the Syntax and Semantics of Goal-Directed Human Activities

[pdf]

- Hilde Kuehne, Ali Arslan, and Thomas Serre, CVPR 2014.

Read Abstract

This paper describes a framework for modeling human activities as temporally structured processes. Our approach is motivated by the inherently hierarchical nature of human activities and the close correspondence between human actions and speech: We model action units using Hidden Markov Models, much like words in speech. These action units then form the building blocks to model complex human activities as sentences using an action grammar. To evaluate our approach, we collected a large dataset of daily cooking activities: The dataset includes a total of 52 participants, each performing a total of 10 cooking activities in multiple real-life kitchens, resulting in over 77 hours of video footage. We evaluate the HTK toolkit, a state-of-the-art speech recognition engine, in combination with multiple video feature descriptors, for both the recognition of cooking activities (e.g., making pancakes) as well as the semantic parsing of videos into action units (e.g., cracking eggs). Our results demonstrate the benefits of structured temporal generative approaches over existing discriminative approaches in coping with the complexity of human daily life activities.

Breakfast targets recording videos "in the wild", in 18 different kitchens. The participants are not given any scripts, and the recordings are unrehearsed and undirected. The dataset covers 10 breakfast-related activities performed by 52 participants and recorded with multiple cameras (between 3 and 5), all from a third-person point of view. Counting each camera view separately, there are 1712 videos.

- Learning to Recognize Objects in Egocentric Activities

[pdf]

- Alireza Fathi, Xiaofeng Ren, and James M. Rehg, CVPR 2011.

Read Abstract

This paper addresses the problem of learning object models from egocentric video of household activities, using extremely weak supervision. For each activity sequence, we know only the names of the objects which are present within it, and have no other knowledge regarding the appearance or location of objects. The key to our approach is a robust, unsupervised bottom up segmentation method, which exploits the structure of the egocentric domain to partition each frame into hand, object, and background categories. By using Multiple Instance Learning to match object instances across sequences, we discover and localize object occurrences. Object representations are refined through transduction and object-level classifiers are trained. We demonstrate encouraging results in detecting novel object instances using models produced by weakly-supervised learning.

GTEA contains 28 videos of seven procedural activities, recorded in a single kitchen. The videos are recorded with a camera mounted on a cap, worn by four participants.

- Combining Embedded Accelerometers with Computer Vision for Recognizing Food Preparation Activities

[pdf]

- Sebastian Stein and Stephen J. McKenna, UbiComp 2013.

50Salads is composed of 50 recorded videos of 25 participants, each making two different mixed salads. The videos are captured by a camera with a top-down view onto the work surface. The participants are provided with recipe steps that are randomly sampled from a statistical recipe model.

- Unsupervised Learning from Narrated Instruction Videos

[pdf]

- Jean-Baptiste Alayrac, Piotr Bojanowski, Nishant Agrawal, Josef Sivic, Ivan Laptev, and Simon Lacoste-Julien, CVPR 2016.

YouTube Instructions is a collection of narrated real-world instruction videos from the Internet. It contains about 800,000 frames covering five different tasks (for example, changing a car tire) that involve complex interactions between people and objects, captured in a variety of indoor and outdoor settings.

- Assembly101: A Large-Scale Multi-View Video Dataset for Understanding Procedural Activities

[pdf]

- Fadime Sener, Dibyadip Chatterjee, Daniel Shelepov, Kun He, Dipika Singhania, Robert Wang, and others, CVPR 2022.

Assembly101 is a recently collected dataset in which 53 participants were asked to disassemble and assemble take-apart toys without being given any instructions, which resulted in realistic sequences with great variation in action ordering. The dataset is annotated with fine-grained hand-object interactions and with coarse action labels, each composed of multiple fine-grained action segments related to attaching or detaching a vehicle part. The authors evaluated temporal action segmentation on their dataset using the coarse labels.

Accuracy, or MoF (mean over frames), is a per-frame measure: the fraction of frames whose predicted label matches the ground truth, $\text{MoF} = \frac{\#\,\text{correctly labeled frames}}{\#\,\text{all frames}}$.
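As an illustration of the per-frame measure, here is a minimal Python sketch. The function name `mof` and the toy label sequences are assumptions made for this example; they are not taken from any benchmark toolkit.

```python
from typing import Sequence


def mof(pred: Sequence[str], gt: Sequence[str]) -> float:
    """Return the fraction of frames whose predicted label equals the ground truth."""
    if len(pred) != len(gt):
        raise ValueError("prediction and ground truth must cover the same frames")
    if len(gt) == 0:
        raise ValueError("cannot score an empty video")
    correct = sum(p == g for p, g in zip(pred, gt))
    return correct / len(gt)


# Toy example (hypothetical labels): 4 of the 6 frames agree.
gt = ["pour", "pour", "pour", "stir", "stir", "stir"]
pred = ["pour", "pour", "stir", "stir", "stir", "pour"]
score = mof(pred, gt)
```

Because every frame counts equally, long actions dominate this score, and a prediction fragmented into many short segments can still score well. That is one reason segment-level measures are usually reported alongside it.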

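The F1-score, or F1@$\tau$, compares the Intersection over Union (IoU) of each predicted segment with the corresponding ground-truth segment. A predicted segment counts as a true positive if its IoU reaches the threshold $\tau$, and F1 is the harmonic mean of the resulting segment-level precision and recall.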
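A segment-level F1 can be sketched as follows. This is a hedged illustration of one common matching convention (each predicted segment is compared with the best-overlapping ground-truth segment of the same label, and each ground-truth segment can be matched at most once). The helper names `to_segments` and `f1_at_tau` are hypothetical, and published evaluation scripts may differ in details.

```python
from typing import List, Sequence, Tuple

Segment = Tuple[str, int, int]  # (label, start frame, end frame exclusive)


def to_segments(frames: Sequence[str]) -> List[Segment]:
    """Group consecutive frames with the same label into segments."""
    segments: List[Segment] = []
    start = 0
    for i in range(1, len(frames) + 1):
        if i == len(frames) or frames[i] != frames[start]:
            segments.append((frames[start], start, i))
            start = i
    return segments


def f1_at_tau(pred: Sequence[str], gt: Sequence[str], tau: float) -> float:
    """Segment-level F1 where a match requires the same label and IoU >= tau."""
    pred_segs, gt_segs = to_segments(pred), to_segments(gt)
    matched = [False] * len(gt_segs)
    tp = fp = 0
    for label, ps, pe in pred_segs:
        best_iou, best_j = 0.0, -1
        for j, (g_label, gs, ge) in enumerate(gt_segs):
            if g_label != label:
                continue
            inter = max(0, min(pe, ge) - max(ps, gs))
            union = max(pe, ge) - min(ps, gs)
            iou = inter / union
            if iou > best_iou:
                best_iou, best_j = iou, j
        if best_j >= 0 and best_iou >= tau and not matched[best_j]:
            tp += 1
            matched[best_j] = True
        else:
            fp += 1
    fn = matched.count(False)  # ground-truth segments nobody matched
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy example (hypothetical labels): the boundary is off by one frame.
gt = ["pour"] * 4 + ["stir"] * 4
pred = ["pour"] * 3 + ["stir"] * 5
loose = f1_at_tau(pred, gt, 0.5)    # both segments pass the threshold
strict = f1_at_tau(pred, gt, 0.78)  # only the "stir" segment passes
```

In the toy example the IoUs are 0.75 for "pour" and 0.8 for "stir", so raising $\tau$ from 0.5 to 0.78 turns one match into a false positive plus a false negative.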

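The Edit Score is computed using the Levenshtein distance between the predicted and ground-truth sequences of segment labels, normalized by the length of the longer sequence. It measures how well the predicted ordering of actions matches the ground truth, independent of segment durations.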
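The sketch below shows one way to compute such a score in Python, assuming the usual normalization to a 0-100 range by the longer label sequence. The function names `collapse`, `levenshtein`, and `edit_score` are illustrative choices, not part of any referenced codebase.

```python
from typing import List, Sequence


def collapse(frames: Sequence[str]) -> List[str]:
    """Reduce per-frame labels to the ordered list of segment labels."""
    labels: List[str] = []
    for label in frames:
        if not labels or labels[-1] != label:
            labels.append(label)
    return labels


def levenshtein(a: Sequence[str], b: Sequence[str]) -> int:
    """Minimum number of insertions, deletions, and substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,              # delete x
                            curr[j - 1] + 1,          # insert y
                            prev[j - 1] + (x != y)))  # substitute or keep
        prev = curr
    return prev[-1]


def edit_score(pred: Sequence[str], gt: Sequence[str]) -> float:
    """Normalized edit similarity between segment label sequences, in [0, 100]."""
    p, g = collapse(pred), collapse(gt)
    longest = max(len(p), len(g))
    if longest == 0:
        return 100.0
    return (1 - levenshtein(p, g) / longest) * 100


# Toy example (hypothetical labels): the prediction is over-segmented.
gt = ["pour", "pour", "stir", "stir", "serve", "serve"]
pred = ["pour", "stir", "pour", "stir", "serve", "serve"]
score = edit_score(pred, gt)
```

Here the ground truth collapses to three segment labels and the prediction to five, so two deletions are needed and the score drops to about 60 even though most frames are labeled correctly.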

- FACT: Frame-Action Cross-Attention Temporal Modeling for Efficient Fully-Supervised Action Segmentation,

[code]

- Zijia Lu and Ehsan Elhamifar, CVPR 2024.

- Activity Grammars for Temporal Action Segmentation,

[pdf]

[code]

- Dayoung Gong, Joonseok Lee, Deunsol Jung, Suha Kwak, and Minsu Cho, NeurIPS 2023.

- Diffusion Action Segmentation,

[pdf]

[code]

- Daochang Liu, Qiyue Li, AnhDung Dinh, Tingting Jiang, Mubarak Shah, and Chang Xu, ICCV 2023.

- How Much Temporal Long-Term Context is Needed for Action Segmentation?,

[pdf]

[code]

- Emad Bahrami, Gianpiero Francesca, and Juergen Gall, ICCV 2023.

- Streaming Video Temporal Action Segmentation In Real Time,

[pdf]

[code]

- Wujun Wen, Yunheng Li, Zhuben Dong, Lin Feng, Wanxiao Yang, and Shenglan Liu, ISKE 2023.

- Don't Pour Cereal into Coffee: Differentiable Temporal Logic for Temporal Action Segmentation,

[pdf]

[code]

- Ziwei Xu, Yogesh S Rawat, Yongkang Wong, Mohan S Kankanhalli, and Mubarak Shah, NeurIPS 2022.

- Maximization and Restoration: Action Segmentation through Dilation Passing and Temporal Reconstruction,

[pdf]

- Junyong Park, Daekyum Kim, Sejoon Huh, and Sungho Jo, PR 2022.

- MCFM: Mutual Cross Fusion Module for Intermediate Fusion-Based Action Segmentation,

[pdf]

- Kenta Ishihara, Gaku Nakano, and Tetsuo Inoshita, ICIP 2022.

- Multistage temporal convolution transformer for action segmentation,

[pdf]

- Nicolas Aziere and Sinisa Todorovic, IVC 2022.

- Semantic2Graph: Graph-based Multi-modal Feature Fusion for Action Segmentation in Videos,

[pdf]

- Junbin Zhang, Pei-Hsuan Tsai, and Meng-Hsun Tsai, Arxiv 2022.

- Uncertainty-Aware Representation Learning for Action Segmentation,

[pdf]

- Lei Chen, Muheng Li, Yueqi Duan, Jie Zhou, and Jiwen Lu, IJCAI 2022.

- Unified Fully and Timestamp Supervised Temporal Action Segmentation via Sequence to Sequence Translation,

[pdf]

- Nadine Behrmann, S. Alireza Golestaneh, Zico Kolter, Juergen Gall, and Mehdi Noroozi, ECCV 2022.

- ASFormer: Transformer for Action Segmentation,

[pdf]

[code]

- Fangqiu Yi, Hongyu Wen, and Tingting Jiang, BMVC 2021.

- Alleviating Over-segmentation Errors by Detecting Action Boundaries,

[pdf]

[code]

- Yuchi Ishikawa, Seito Kasai, Yoshimitsu Aoki, and Hirokatsu Kataoka, WACV 2021.

- Coarse to Fine Multi-Resolution Temporal Convolutional Network,

[pdf]

[code]

- Dipika Singhania, Rahul Rahaman, and Angela Yao, Arxiv 2021.

- FIFA: Fast Inference Approximation for Action Segmentation,

[pdf]

- Yaser Souri, Yazan Abu Farha, Fabien Despinoy, Gianpiero Francesca, and Juergen Gall, GCPR 2021.

- Global2Local: Efficient Structure Search for Video Action Segmentation,

[pdf]

[code]

- Shang-Hua Gao, Qi Han, Zhong-Yu Li, Pai Peng, Liang Wang, and Ming-Ming Cheng, CVPR 2021.

- Action Segmentation with Mixed Temporal Domain Adaptation,

[pdf]

- Min-Hung Chen, Baopu Li, Yingze Bao, and Ghassan Alregib, WACV 2020.

- Boundary-Aware Cascade Networks for Temporal Action Segmentation,

[pdf]

[code]

- Zhenzhi Wang, Ziteng Gao, Limin Wang, Zhifeng Li, and Gangshan Wu, ECCV 2020.

- Improving Action Segmentation via Graph Based Temporal Reasoning,

[pdf]

- Yifei Huang, Yusuke Sugano, and Yoichi Sato, CVPR 2020.

- Temporal Aggregate Representations for Long Term Video Understanding,

[pdf]

- Fadime Sener, Dipika Singhania, and Angela Yao, ECCV 2020.

- MS-TCN: Multi-Stage Temporal Convolutional Network for Action Segmentation,

[pdf]

[code]

- Yazan Abu Farha and Juergen Gall, CVPR 2019.

- Temporal Deformable Residual Networks for Action Segmentation in Videos,

[pdf]

- Peng Lei and Sinisa Todorovic, CVPR 2018.

- Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset,

[pdf]

[code]

- João Carreira and Andrew Zisserman, CVPR 2017.

- Temporal Convolutional Networks for Action Segmentation and Detection,

[pdf]

[code]

- Colin Lea, Michael D. Flynn, René Vidal, Austin Reiter, and Gregory D. Hager, CVPR 2017.

- An end-to-end generative framework for video segmentation and recognition,

[pdf]

- Hilde Kuehne, Juergen Gall, and Thomas Serre, WACV 2016.

- Temporal Action Detection Using a Statistical Language Model,

[pdf]

[code]

- Alexander Richard and Juergen Gall, CVPR 2016.

- Efficient and Effective Weakly-Supervised Action Segmentation via Action-Transition-Aware Boundary Alignment,

- Angchi Xu and Wei-Shi Zheng, CVPR 2024.

- Is Weakly-supervised Action Segmentation Ready For Human-Robot Interaction? No, Let's Improve It With Action-union Learning,

[pdf]

- Fan Yang, Shigeyuki Odashima, Shoichi Masui, and Shan Jiang, IROS 2023.

- Reducing the Label Bias for Timestamp Supervised Temporal Action Segmentation,

[pdf]

- Kaiyuan Liu, Yunheng Li, Shenglan Liu, Chenwei Tan, and Zihang Shao, CVPR 2023.

- Timestamp-Supervised Action Segmentation in the Perspective of Clustering,

[pdf]

[code]

- Dazhao Du, Enhan Li, Lingyu Si, Fanjiang Xu, and Fuchun Sun, IJCAI 2023.

- A Generalized & Robust Framework For Timestamp Supervision in Temporal Action Segmentation,

[pdf]

[code]

- Rahul Rahaman, Dipika Singhania, Alexandre Thiery, and Angela Yao, ECCV 2022.

- Hierarchical Modeling for Task Recognition and Action Segmentation in Weakly-Labeled Instructional Videos,

[pdf]

[code]

- Reza Ghoddoosian, Saif Sayed, and Vassilis Athitsos, WACV 2022.

- Robust Action Segmentation from Timestamp Supervision,

[pdf]

- Yaser Souri, Yazan Abu Farha, Emad Bahrami, Gianpiero Francesca, and Juergen Gall, BMVC 2022.

- Semi-Weakly-Supervised Learning of Complex Actions from Instructional Task Videos,

[pdf]

[code]

- Yuhan Shen and Ehsan Elhamifar, CVPR 2022.

- Set-Supervised Action Learning in Procedural Task Videos via Pairwise Order Consistency,

[pdf]

[code]

- Zijia Lu and Ehsan Elhamifar, CVPR 2022.

- Temporal Action Segmentation with High-level Complex Activity Labels,

[pdf]

- Guodong Ding and Angela Yao, TMM 2022.

- Timestamp-Supervised Action Segmentation with Graph Convolutional Networks,

[pdf]

- Hamza Khan, Sanjay Haresh, Awais Ahmed, Shakeeb Siddiqui, Andrey Konin, M. Zeeshan Zia, and Quoc Huy Tran, IROS 2022.

- Turning to a Teacher for Timestamp Supervised Temporal Action Segmentation,

[pdf]

- Yang Zhao and Yan Song, ICME 2022.

- Unified Fully and Timestamp Supervised Temporal Action Segmentation via Sequence to Sequence Translation,

[pdf]

- Nadine Behrmann, S. Alireza Golestaneh, Zico Kolter, Juergen Gall, and Mehdi Noroozi, ECCV 2022.

- Weakly-Supervised Online Action Segmentation in Multi-View Instructional Videos,

[pdf]

[code]

- Reza Ghoddoosian, Isht Dwivedi, Nakul Agarwal, Chiho Choi, and Behzad Dariush, CVPR 2022.

- Anchor-Constrained Viterbi for Set-Supervised Action Segmentation,

[pdf]

- Jun Li and Sinisa Todorovic, CVPR 2021.

- Fast Weakly Supervised Action Segmentation Using Mutual Consistency,

[pdf]

[code]

- Yaser Souri, Mohsen Fayyaz, Luca Minciullo, Gianpiero Francesca, and Juergen Gall, TPAMI 2021.

- Learning Discriminative Prototypes with Dynamic Time Warping,

[pdf]

- Xiaobin Chang, Frederick Tung, and Greg Mori, CVPR 2021.

- Temporal Action Segmentation from Timestamp Supervision,

[pdf]

[code]

- Zhe Li, Yazan Abu Farha, and Juergen Gall, CVPR 2021.

- Weakly-Supervised Action Segmentation and Alignment via Transcript-Aware Union-of-Subspaces Learning,

[pdf]

[code]

- Zijia Lu and Ehsan Elhamifar, ICCV 2021.

- Weakly-supervised Temporal Action Localization by Uncertainty Modeling,

[pdf]

- Pilhyeon Lee, Jinglu Wang, Yan Lu, and Hyeran Byun, AAAI 2021.

- Fast Weakly Supervised Action Segmentation Using Mutual Consistency,

[pdf]

- Yaser Souri, Mohsen Fayyaz, Luca Minciullo, Gianpiero Francesca, and Juergen Gall, ECCV 2020.

- Procedure Completion by Learning from Partial Summaries,

[pdf]

- Zwe Naing and Ehsan Elhamifar, BMVC 2020.

- SCT: Set Constrained Temporal Transformer for Set Supervised Action Segmentation,

[pdf]

[code]

- Mohsen Fayyaz and Juergen Gall, CVPR 2020.

- Set-Constrained Viterbi for Set-Supervised Action Segmentation,

[pdf]

- Jun Li and Sinisa Todorovic, CVPR 2020.

- D3TW: Discriminative differentiable dynamic time warping for weakly supervised action alignment and segmentation,

[pdf]

[code]

- Chien Yi Chang, De An Huang, Yanan Sui, Fei-Fei Li, and Juan Carlos Niebles, CVPR 2019.

- Weakly Supervised Energy-Based Learning for Action Segmentation,

[pdf]

[code]

- Jun Li, Peng Lei, and Sinisa Todorovic, ICCV 2019.

- A Hybrid RNN-HMM Approach for Weakly Supervised Temporal Action Segmentation,

[pdf]

- Hilde Kuehne, Alexander Richard, and Juergen Gall, TPAMI 2018.

- Action Sets: Weakly Supervised Action Segmentation Without Ordering Constraints,

[pdf]

[code]

- Alexander Richard, Hilde Kuehne, and Juergen Gall, CVPR 2018.

- NeuralNetwork-Viterbi: A Framework for Weakly Supervised Video Learning,

[pdf]

[code]

- Alexander Richard, Hilde Kuehne, Ahsan Iqbal, and Juergen Gall, CVPR 2018.

- Weakly-Supervised Action Segmentation with Iterative Soft Boundary Assignment,

[pdf]

[code]

- Li Ding and Chenliang Xu, CVPR 2018.

- Weakly supervised action learning with RNN based fine-to-coarse modeling,

[pdf]

[code]

- Alexander Richard, Hilde Kuehne, and Juergen Gall, CVPR 2017.

- Weakly supervised learning of actions from transcripts,

[pdf]

- Hilde Kuehne, Alexander Richard, and Juergen Gall, CVIU 2017.

- Connectionist temporal modeling for weakly supervised action labeling,

[pdf]

[code]

- De An Huang, Fei-Fei Li, and Juan Carlos Niebles, ECCV 2016.

- Temporally Consistent Unbalanced Optimal Transport for Unsupervised Action Segmentation,

- Ming Xu and Stephen Gould, CVPR 2024.

- OTAS: Unsupervised Boundary Detection for Object-Centric Temporal Action Segmentation,

[pdf]

[code]

- Yuerong Li, Zhengrong Xue, and Huazhe Xu, WACV 2024.

- Leveraging triplet loss for unsupervised action segmentation,

[pdf]

[code]

- Elena Belen Bueno-Benito, Biel Tura Vecino, and Mariella Dimiccoli, CVPRW 2023.

- TAEC: Unsupervised action segmentation with temporal-Aware embedding and clustering,

[pdf]

- Wei Lin, Anna Kukleva, Horst Possegger, Hilde Kuehne, and Horst Bischof, CEURW 2023.

- Fast and Unsupervised Action Boundary Detection for Action Segmentation,

[pdf]

- Zexing Du, Xue Wang, Guoqing Zhou, and Qing Wang, CVPR 2022.

- My View is the Best View: Procedure Learning from Egocentric Videos,

[pdf]

[code]

- Siddhant Bansal, Chetan Arora, and C V Jawahar, ECCV 2022.

- Temporal Action Segmentation with High-level Complex Activity Labels,

[pdf]

- Guodong Ding and Angela Yao, TMM 2022.

- Unsupervised Action Segmentation by Joint Representation Learning and Online Clustering,

[pdf]

[code]

- Sateesh Kumar, Sanjay Haresh, Awais Ahmed, Andrey Konin, M. Zeeshan Zia, and Quoc Huy Tran, CVPR 2022.

- Action Shuffle Alternating Learning for Unsupervised Action Segmentation,

[pdf]

- Jun Li and Sinisa Todorovic, CVPR 2021.

- Joint Visual-Temporal Embedding for Unsupervised Learning of Actions in Untrimmed Sequences,

[pdf]

- Rosaura G. VidalMata, Walter J. Scheirer, Anna Kukleva, David Cox, and Hilde Kuehne, WACV 2021.

- Temporally-Weighted Hierarchical Clustering for Unsupervised Action Segmentation,

[pdf]

[code]

- M Saquib Sarfraz, Naila Murray, Vivek Sharma, Ali Diba, Luc Van Gool, and Rainer Stiefelhagen, CVPR 2021.

- Action Segmentation with Joint Self-Supervised Temporal Domain Adaptation,

[pdf]

[code]

- Min-Hung Chen, Baopu Li, Yingze Bao, Ghassan AlRegib, and Zsolt Kira, CVPR 2020.

Read Abstract

Despite the recent progress of fully-supervised action segmentation techniques, the performance is still not fully satisfactory. One main challenge is the problem of spatiotemporal variations (e.g. different people may perform the same activity in various ways). Therefore, we exploit unlabeled videos to address this problem by reformulating the action segmentation task as a cross-domain problem with domain discrepancy caused by spatio-temporal variations. To reduce the discrepancy, we propose Self-Supervised Temporal Domain Adaptation (SSTDA), which contains two self-supervised auxiliary tasks (binary and sequential domain prediction) to jointly align cross-domain feature spaces embedded with local and global temporal dynamics, achieving better performance than other Domain Adaptation (DA) approaches. On three challenging benchmark datasets (GTEA, 50Salads, and Breakfast), SSTDA outperforms the current state-of-the-art method by large margins (e.g. for the F1@25 score, from 59.6% to 69.1% on Breakfast, from 73.4% to 81.5% on 50Salads, and from 83.6% to 89.1% on GTEA), and requires only 65% of the labeled training data for comparable performance, demonstrating the usefulness of adapting to unlabeled target videos across variations. The source code is available at https://github.com/cmhungsteve/SSTDA.

- Unsupervised learning of action classes with continuous temporal embedding,

[pdf]

[code]

- Anna Kukleva, Hilde Kuehne, Fadime Sener, and Juergen Gall, CVPR 2019.

Read Abstract

The task of temporally detecting and segmenting actions in untrimmed videos has seen an increased attention recently. One problem in this context arises from the need to define and label action boundaries to create annotations for training which is very time and cost intensive. To address this issue, we propose an unsupervised approach for learning action classes from untrimmed video sequences. To this end, we use a continuous temporal embedding of framewise features to benefit from the sequential nature of activities. Based on the latent space created by the embedding, we identify clusters of temporal segments across all videos that correspond to semantic meaningful action classes. The approach is evaluated on three challenging datasets, namely the Breakfast dataset, YouTube Instructions, and the 50Salads dataset. While previous works assumed that the videos contain the same high level activity, we furthermore show that the proposed approach can also be applied to a more general setting where the content of the videos is unknown.

- Unsupervised Procedure Learning via Joint Dynamic Summarization,

[pdf]

- Ehsan Elhamifar and Zwe Naing, ICCV 2019.

Read Abstract

We address the problem of unsupervised procedure learning from unconstrained instructional videos. Our goal is to produce a summary of the procedure key-steps and their ordering needed to perform a given task, as well as localization of the key-steps in videos. We develop a collaborative sequential subset selection framework, where we build a dynamic model on videos by learning states and transitions between them, where states correspond to different subactivities, including background and procedure steps. To extract procedure key-steps, we develop an optimization framework that finds a sequence of a small number of states that well represents all videos and is compatible with the state transition model. Given that our proposed optimization is non-convex and NP-hard, we develop a fast greedy algorithm whose complexity is linear in the length of the videos and the number of states of the dynamic model, hence, scales to large datasets. Under appropriate conditions on the transition model, our proposed formulation is approximately submodular, hence, comes with performance guarantees. We also present ProceL, a new multimodal dataset of 47.3 hours of videos and their transcripts from diverse tasks, for procedure learning evaluation. By extensive experiments, we show that our framework significantly improves the state of the art performance.

- Unsupervised Learning and Segmentation of Complex Activities from Video,

[pdf]

[code]

- Fadime Sener and Angela Yao, CVPR 2018.

Read Abstract

This paper presents a new method for unsupervised segmentation of complex activities from video into multiple steps, or sub-activities, without any textual input. We propose an iterative discriminative-generative approach which alternates between discriminatively learning the appearance of sub-activities from the videos' visual features to sub-activity labels and generatively modelling the temporal structure of sub-activities using a Generalized Mallows Model. In addition, we introduce a model for background to account for frames unrelated to the actual activities. Our approach is validated on the challenging Breakfast Actions and Inria Instructional Videos datasets and outperforms both unsupervised and weakly-supervised state of the art.

- Unsupervised learning from narrated instruction videos,

[pdf]

- Jean-Baptiste Alayrac, Piotr Bojanowski, Nishant Agrawal, Josef Sivic, Ivan Laptev, and Simon Lacoste-Julien, CVPR 2016.

Read Abstract

We address the problem of automatically learning the main steps to complete a certain task, such as changing a car tire, from a set of narrated instruction videos. The contributions of this paper are three-fold. First, we develop a new unsupervised learning approach that takes advantage of the complementary nature of the input video and the associated narration. The method solves two clustering problems, one in text and one in video, applied one after each other and linked by joint constraints to obtain a single coherent sequence of steps in both modalities. Second, we collect and annotate a new challenging dataset of real-world instruction videos from the Internet. The dataset contains about 800,000 frames for five different tasks that include complex interactions between people and objects, and are captured in a variety of indoor and outdoor settings. Third, we experimentally demonstrate that the proposed method can automatically discover, in an unsupervised manner, the main steps to achieve the task and locate the steps in the input videos.

- Unsupervised Semantic Parsing of Video Collections,

[pdf]

- Ozan Sener, Amir R. Zamir, Silvio Savarese, and Ashutosh Saxena, ICCV 2015.

Read Abstract

Human communication typically has an underlying structure. This is reflected in the fact that in many user generated videos, a starting point, ending, and certain objective steps between these two can be identified. In this paper, we propose a method for parsing a video into such semantic steps in an unsupervised way. The proposed method is capable of providing a semantic "storyline" of the video composed of its objective steps. We accomplish this using both visual and language cues in a joint generative model. The proposed method can also provide a textual description for each of the identified semantic steps and video segments. We evaluate this method on a large number of complex YouTube videos and show results of unprecedented quality for this intricate and impactful problem.

- Iterative Contrast-Classify for Semi-supervised Temporal Action Segmentation,

[pdf]

[code]

- Dipika Singhania, Rahul Rahaman, and Angela Yao, AAAI 2022.

Read Abstract

Temporal action segmentation classifies the action of each frame in (long) video sequences. Due to the high cost of frame-wise labeling, we propose the first semi-supervised method for temporal action segmentation. Our method hinges on unsupervised representation learning, which, for temporal action segmentation, poses unique challenges. Actions in untrimmed videos vary in length and have unknown labels and start/end times. Ordering of actions across videos may also vary. We propose a novel way to learn frame-wise representations from temporal convolutional networks (TCNs) by clustering input features with added time-proximity conditions and multi-resolution similarity. By merging representation learning with conventional supervised learning, we develop an "Iterative Contrast-Classify (ICC)" semi-supervised learning scheme. With more labelled data, ICC progressively improves in performance; ICC semi-supervised learning, with 40% labelled videos, performs similarly to fully-supervised counterparts. Our ICC improves MoF by +1.8, +5.6, +2.5% on Breakfast, 50Salads, and GTEA respectively for 100% labelled videos.

- Leveraging Action Affinity and Continuity for Semi-supervised Temporal Action Segmentation,

[pdf]

[code]

- Guodong Ding and Angela Yao, ECCV 2022.

Read Abstract

We present a semi-supervised learning approach to the temporal action segmentation task. The goal of the task is to temporally detect and segment actions in long, untrimmed procedural videos, where only a small set of videos are densely labelled, and a large collection of videos are unlabelled. To this end, we propose two novel loss functions for the unlabelled data: an action affinity loss and an action continuity loss. The action affinity loss guides the unlabelled samples learning by imposing the action priors induced from the labelled set. Action continuity loss enforces the temporal continuity of actions, which also provides frame-wise classification supervision. In addition, we propose an Adaptive Boundary Smoothing (ABS) approach to build coarser action boundaries for more robust and reliable learning. The proposed loss functions and ABS were evaluated on three benchmarks. Results show that they significantly improved action segmentation performance with a low amount (5% and 10%) of labelled data and achieved comparable results to full supervision with 50% labelled data. Furthermore, ABS succeeded in boosting performance when integrated into fully-supervised learning.

- Progress-Aware Online Action Segmentation for Egocentric Procedural Task Videos,

- Yuhan Shen and Ehsan Elhamifar, CVPR 2024.

- Coherent Temporal Synthesis for Incremental Action Segmentation,

[pdf]

- Guodong Ding, Hans Golong, and Angela Yao, CVPR 2024.

Read Abstract

Data replay is a successful incremental learning technique for images. It prevents catastrophic forgetting by keeping a reservoir of previous data, original or synthesized, to ensure the model retains past knowledge while adapting to novel concepts. However, its application in the video domain is rudimentary, as it simply stores frame exemplars for action recognition. This paper presents the first exploration of video data replay techniques for incremental action segmentation, focusing on action temporal modeling. We propose a Temporally Coherent Action (TCA) model, which represents actions using a generative model instead of storing individual frames. The integration of a conditioning variable that captures temporal coherence allows our model to understand the evolution of action features over time. Therefore, action segments generated by TCA for replay are diverse and temporally coherent. In a 10-task incremental setup on the Breakfast dataset, our approach achieves significant increases in accuracy for up to 22% compared to the baselines.

If you find this repository helpful in your research, please consider citing our survey as:

    @article{ding2023temporal,
      title={Temporal action segmentation: An analysis of modern techniques},
      author={Ding, Guodong and Sener, Fadime and Yao, Angela},
      journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},
      year={2023},
      publisher={IEEE}
    }