Step-by-step guide for deploying an image classification model on an MCU-based system. A balanced image classification model is developed using the eIQ Portal to classify 5 types of dry beans.

This balanced image classification model was trained with the eIQ Portal on 5 types of dry beans: black beans, garbanzo beans, green split peas, small red beans and yellow split peas. The provided model was trained using an input size of 128x128x3 and over 25 epochs. The model was then exported from the eIQ Portal as an int8 quantized TensorFlow Lite (.tflite) model.
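To make the "int8 quantized" and "128x128x3" figures concrete, here is a minimal sketch of the affine mapping that TensorFlow Lite uses for int8 tensors, `real = scale * (q - zero_point)`. The `SCALE` and `ZERO_POINT` values are assumptions chosen for illustration; the actual values are stored in the exported model and are not given in this guide.

```python
# Affine int8 quantization: real_value = scale * (quantized_value - zero_point)
# SCALE and ZERO_POINT are hypothetical, not taken from the provided model.

INPUT_SHAPE = (128, 128, 3)  # height, width, channels, as stated above
SCALE = 1.0 / 255.0          # assumed: maps the real range [0, 1] onto 256 steps
ZERO_POINT = -128            # assumed: real 0.0 maps to int8 -128


def quantize(real_value, scale=SCALE, zero_point=ZERO_POINT):
    """Map a real value to int8, clamping to the representable range."""
    q = round(real_value / scale) + zero_point
    return max(-128, min(127, q))


def dequantize(q, scale=SCALE, zero_point=ZERO_POINT):
    """Map an int8 value back to the real value it represents."""
    return scale * (q - zero_point)


def input_tensor_bytes(shape=INPUT_SHAPE):
    """Size of one input tensor in bytes (one byte per int8 element)."""
    n = 1
    for dim in shape:
        n *= dim
    return n
```

With these assumed parameters, a single 128x128x3 int8 input occupies 49152 bytes, which is a useful figure to keep in mind when budgeting MCU memory.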

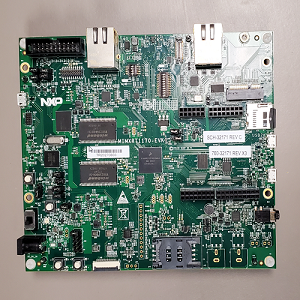

The image classification model is then deployed on the RT1170-EVK, an evaluation board equipped with a 1 GHz Arm Cortex-M7 core and a 400 MHz Arm Cortex-M4 core that is useful for evaluation and prototyping using the MCUXpresso suite. The RT1170-EVK board memory consists of a 512 Mbit SDRAM, a 512 Mbit Octal Flash, a 128 Mbit QSPI Flash, a 2 Gbit Raw NAND Flash, a 64 Mbit LPSPI Flash and a TF socket for an SD card.

- Software

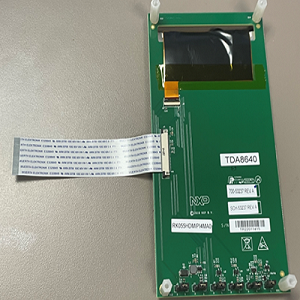

- Hardware

- Model Generation using eIQ Portal

- Software Setup

- Hardware Setup

- Results

- FAQs

- Support

- Release Notes

- Download and install MCUXpresso IDE v11.8.0+.

- Download and install eIQ Toolkit v1.8.0.

- Download the software package in this repository.

- (Optional) Install a terminal program such as Tera Term.

- Purchase supported board:

- OV5640 MIPI camera module (comes with EVK board)

- Purchase supported display:

Open the eIQ Portal and follow the sections listed below from the eIQ Toolkit User Guide (Rev 1.8.0), found in the documentation section of the eIQ Toolkit page.

Follow Section 3.2 and Section 3.3.2 to import the dataset and create a new project. The dataset folder in this repository follows the naming convention of the structured folders dataset cited in Section 3.3.2.

Follow Section 3.5.2 to create a train-test split for the dataset.
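The eIQ Portal performs the split itself, so no code is needed for this step. For readers who want to see the idea, the following is a minimal sketch of a reproducible per-class split. The function names, the 20% test fraction and the seed are all assumptions made for illustration and are not taken from the eIQ Toolkit.

```python
import random


def train_test_split(items, test_fraction=0.2, seed=0):
    """Shuffle a copy of items reproducibly and return (train, test)."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    # Keep at least one sample in the test set.
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def split_per_class(files_by_class, test_fraction=0.2, seed=0):
    """Split each class separately so both subsets contain every class."""
    return {
        label: train_test_split(files, test_fraction, seed)
        for label, files in files_by_class.items()
    }
```

Splitting each class on its own keeps all 5 bean types represented in both the training and the test subsets.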

Follow Section 3.6.1 to select the best-suited model for the target. The model used in this software package is a balanced classification MobileNet V2 model for MCU.

Follow Section 3.7 to train the selected model by adjusting the training parameters.

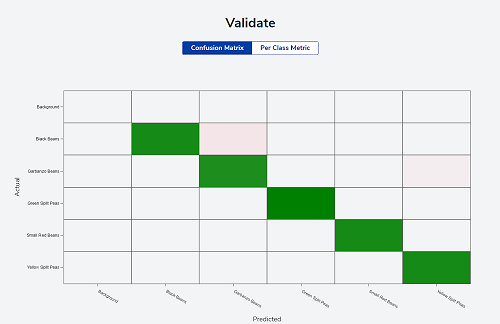

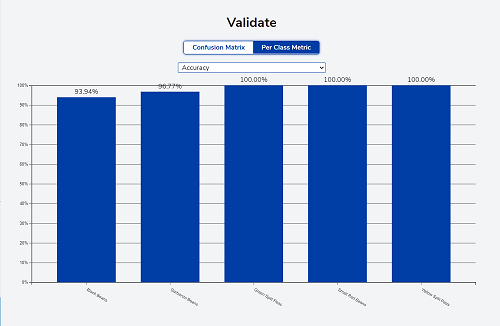

Follow Section 3.8 to validate the model. The confusion matrix and the per-class matrix from the validation process for the model used in this software package are shown below:
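As a rough illustration of how a confusion matrix and per-class figures are derived from validation predictions, consider the sketch below. It is not the eIQ Portal's implementation; the function names and the choice of precision and recall as the per-class figures are assumptions.

```python
def confusion_matrix(actual, predicted, labels):
    """Rows are actual classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for a, p in zip(actual, predicted):
        matrix[index[a]][index[p]] += 1
    return matrix


def per_class_metrics(matrix, labels):
    """Precision and recall for each class, computed from the matrix."""
    metrics = {}
    for i, label in enumerate(labels):
        true_positives = matrix[i][i]
        actual_total = sum(matrix[i])                    # row sum
        predicted_total = sum(row[i] for row in matrix)  # column sum
        metrics[label] = {
            "precision": true_positives / predicted_total if predicted_total else 0.0,
            "recall": true_positives / actual_total if actual_total else 0.0,
        }
    return metrics
```

Off-diagonal entries show which bean types the model confuses with each other, which helps decide whether more images of a particular class are needed.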

Follow Section 3.9 to export the model from the eIQ Portal. The model used in this software package is an int8 quantized TensorFlow Lite (.tflite) model.

Follow Section 3.10 to quantize the trained model to decrease its size and improve its inference time.

To deploy the generated model in the embedded project, convert the tflite model to C++ format by following Section 7.2 of this ReadMe.
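Converting a model to C++ format generally means embedding the file's bytes in a source array, similar in spirit to what `xxd -i` produces. The sketch below shows that idea only and is not the procedure from Section 7.2. The function names, the array name `model_data` and the file paths passed in are hypothetical.

```python
def bytes_to_c_array(data, name="model_data", per_line=12):
    """Render raw bytes as a C/C++ array definition plus a length constant."""
    lines = []
    for start in range(0, len(data), per_line):
        chunk = data[start:start + per_line]
        lines.append("    " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"const unsigned char {name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {name}_len = {len(data)};\n"
    )


def convert_file(model_path, header_path, name="model_data"):
    """Read a binary model file and write it out as a C/C++ header."""
    with open(model_path, "rb") as f:
        data = f.read()
    with open(header_path, "w") as f:
        f.write(bytes_to_c_array(data, name))
```

Embedded projects often also add an alignment attribute to the array so the inference runtime can read it directly from flash; that detail is omitted here.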

To run the software package given in this repository, open MCUXpresso IDE v11.8.0+ and follow the sections listed below from the MCUXpresso IDE User Guide (Rev 11.8.0), found in the documentation section of the MCUXpresso IDE page.

Follow Section 10.3 to import this project into MCUXpresso IDE.

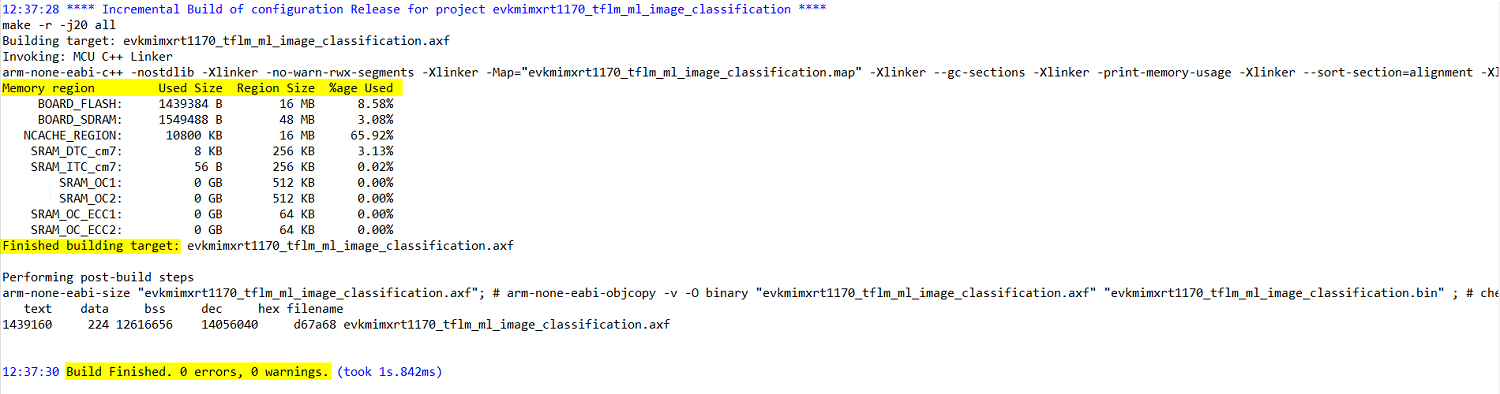

Follow Section 21.2 to change the build configuration to release build. Then follow Section 5.2 to build the project. After building the project, the console should display the following:

Follow Section 13 to debug the project.

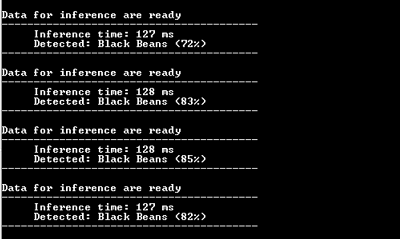

To see the inference results from the camera input, open a terminal program such as Tera Term, or follow Section 21.8 to use the IDE's terminal view for UART communication with the target. Connect to the COM port that the board enumerated as and use 115200 baud, 1 stop bit and no parity.

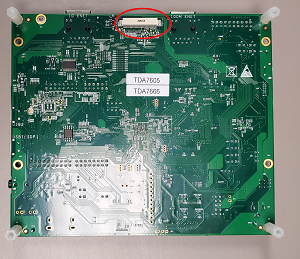

Attach the LCD to the J48 port on the back of the board as shown in the image below.

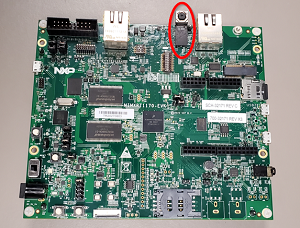

Attach the camera to the J2 port as shown in the image below.

The inferencing results from the MIPI camera input should be displayed on the terminal and LCD as shown below. Sample images of the 5 classes of dry beans are also shown below.

Question: Why do I get a blank LCD screen while running this example?

Answer: If you have an RK055HDMIPI4MA0 LCD, then in the source/video/eiq_display_config.h file, change line 41 to "#define DEMO_PANEL DEMO_PANEL_RK055MHD091". If you are using the old LCD display panel - RK055HDMIPI4M, then change line 41 to "#define DEMO_PANEL DEMO_PANEL_RK055AHD091".

Question: I want to run an image classification model I generated using the eIQ portal for the given dry beans dataset and not the model used in this software package. How do I go about this?

Answer: If you would like to run your own image classification model that you have generated using the eIQ Portal instead of the model used in this software package, then please follow Section 4 and Section 5 of the eIQ TensorFlow Lite for Microcontrollers Lab for i.MX RT1170 - With Camera.pdf - Revision 5, August 2023.

Questions regarding the content/correctness of this example can be entered as Issues within this GitHub repository.

Warning: For more general technical questions regarding NXP Microcontrollers and the difference in expected functionality, enter your questions on the NXP Community Forum.

| Version | Description / Update | Date |
|---|---|---|
| 1.0 | Initial release on Application Code Hub | September 18th 2023 |