Author: Paul Lindquist

Electronic dance music is overwhelmingly created with computers and plugins rather than instruments and live recordings. It's typically distributed digitally as high-quality .wav, .aiff and .mp3 files rather than on CDs and vinyl. As a result, thousands of new songs are released every day. This presents a challenge for any DJ or music curator seeking to keep their library current without drowning in new releases. To complicate matters further, the music isn't centralized. Releases are sold on numerous online stores like Beatport, Juno Download, Traxsource and Bandcamp or given away for free on platforms like SoundCloud. Crate digging has long since moved to the digital space and it's easy to get overwhelmed.

Like any other genre of music, electronic dance music has historically been categorized by sub-genres. Generally, those sub-genres are defined by features like tempo (beats per minute/BPM), mood and the types of instrument samples used. For example, Trance rarely dips below 122 BPM and saw synths aren't heavily used in Deep House. But as the music has evolved and artists have blended elements from different sub-genres, classifying songs by sub-genre has become both cumbersome and antiquated. There needs to be a more efficient way to analyze songs irrespective of sub-genre. By the early 2010s, a leader emerged in that space.

Spotify acquired that leader, the music intelligence and data platform The Echo Nest, in 2014. Shortly thereafter, Spotify began integrating The Echo Nest's algorithm-based audio analysis and tagging songs with audio features such as valence, energy and danceability. Through its Web API, Spotify permits the extraction of these features when building out datasets. Along with the additionally tagged sub-genres, this allows for a more detailed categorization and comparison of songs.

This project leverages those audio features and focuses solely on electronic dance music within Spotify's database. Common sub-genres categorized by both Every Noise at Once and Spotify's own genre seeds are used to narrow the final dataset.

Spotify is chosen as the singular data-gathering platform for a few reasons:

- It's the world's most popular music streaming service with a database of over 70 million songs

- The algorithm-based audio analysis (song features) provides unmatched opportunity for song comparison and recommendation

- Their Web API is accessible and well-documented

- Songs are tagged with identifiers like ID and URI for organized tracking

The following Spotify audio features are extracted and used in determining similarity for recommendation. Refer to the documentation for an in-depth explanation of each:

- Acousticness, danceability, energy, instrumentalness, liveness, loudness, speechiness, tempo (BPM), valence

Musical features like key and mode are purposefully omitted because there are separate third-party applications (e.g. Mixed in Key) that can hard-tag songs with that information. Many DJs do this and will already have it available during song selection. Also, and perhaps more importantly, making recommendations based on a song's key can lead to a string of songs that sound too familiar. Over time, this creates a tired listening experience.
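The nine features listed above are not on a common scale. Most are reported by Spotify as values between 0 and 1, while loudness is in decibels (typically between -60 and 0) and tempo is in BPM. Distance-based comparisons are sensitive to that difference, so rescaling is a common preparatory step. This README doesn't state how the project handles it; the following is a minimal, dependency-free sketch of min-max scaling under that assumption. The `songs` rows and the `min_max_scale` helper are hypothetical and the values are invented for illustration.

```python
# Hypothetical toy rows; values are made up, not taken from the project's data.
songs = [
    {"name": "song_a", "energy": 0.90, "loudness": -5.0, "tempo": 128.0},
    {"name": "song_b", "energy": 0.60, "loudness": -11.0, "tempo": 122.0},
    {"name": "song_c", "energy": 0.75, "loudness": -8.0, "tempo": 140.0},
]


def min_max_scale(rows, columns):
    """Return copies of rows with each listed column rescaled to [0, 1]."""
    scaled = [dict(row) for row in rows]  # copy so the input is not mutated
    for col in columns:
        values = [row[col] for row in rows]
        low, high = min(values), max(values)
        span = high - low
        for row in scaled:
            # A constant column carries no information; map it to 0.0.
            row[col] = (row[col] - low) / span if span else 0.0
    return scaled


# Only loudness and tempo are rescaled; energy is already between 0 and 1.
scaled_songs = min_max_scale(songs, ["loudness", "tempo"])
```

After scaling, a 6 dB gap in loudness or an 18 BPM gap in tempo no longer outweighs differences in features like energy or valence.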

This project sets out to make a recommendation system for electronic dance music, specifically with live DJ'ing in mind. It can assist DJs with tracklist preparation or live DJ performance. Often only 1 song is needed to fill out a set, create a bridge between 2 songs or inspire a line of thinking that allows the DJ to come up with the next song on their own. This recommendation system can assist that process.

Sometimes a DJ wants variety and would like to play a song that's categorized by a different sub-genre than the song currently being played. The final model function gives the option to provide recommendations within the same sub-genre or across all sub-genres.
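The same-sub-genre versus all-sub-genres option can be sketched as a single boolean flag that filters the candidate pool before ranking. This is an illustration only, not the project's final model function: the `CATALOG` rows, the `recommend` name and its `same_subgenre` parameter are all hypothetical, and the ranking here uses plain cosine similarity on invented feature vectors.

```python
import math

# Hypothetical catalog: names, sub-genres and feature values are invented.
CATALOG = [
    {"name": "ref", "subgenre": "trance", "features": [0.9, 0.6, 0.3]},
    {"name": "t1", "subgenre": "trance", "features": [0.8, 0.6, 0.4]},
    {"name": "t2", "subgenre": "trance", "features": [0.2, 0.9, 0.9]},
    {"name": "h1", "subgenre": "deep house", "features": [0.9, 0.6, 0.3]},
]


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recommend(catalog, reference_name, n=2, same_subgenre=True):
    """Return the names of the n songs most similar to the reference song."""
    reference = next(s for s in catalog if s["name"] == reference_name)
    # Exclude the reference itself and, optionally, other sub-genres.
    candidates = [
        s for s in catalog
        if s["name"] != reference_name
        and (not same_subgenre or s["subgenre"] == reference["subgenre"])
    ]
    ranked = sorted(
        candidates,
        key=lambda s: cosine(reference["features"], s["features"]),
        reverse=True,
    )
    return [s["name"] for s in ranked[:n]]


within = recommend(CATALOG, "ref", same_subgenre=True)
across = recommend(CATALOG, "ref", same_subgenre=False)
```

With the flag off, the Deep House song `h1` becomes eligible and ranks first because its invented features match the reference exactly; with the flag on, only the two Trance songs are considered.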

I serve as my own stakeholder for this project. Personal domain knowledge is used to determine validity of the recommendations. I've DJ'ed electronic dance music for 13 years and have played countless live mixes. From 2013 to 2019, I co-founded and operated an electronic music podcast and served as its resident DJ, amassing over 72,000 subscribers. As a casual listener, I've been a fan of the genre since the 90s.

Data is aggregated from multiple sources:

- API calls of Spotify's Web API using their genre seeds

- A raw dataset of approx. 500k songs referencing Every Noise at Once's The Sound of Everything Spotify playlist

- Multiple Kaggle datasets that contain the necessary sub-genre tagging and audio feature listings (energy, danceability, etc.)

This project exclusively uses content-based filtering to build a recommendation system. Similarity is calculated using K-Nearest Neighbors (KNN), cosine similarity and sigmoid kernel. Exploratory data analysis and visualizations are conducted on the final, cleaned data.
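To make the three measures concrete, here is a minimal, dependency-free sketch on invented three-feature vectors. It is not the project's code. In practice, scikit-learn provides `cosine_similarity` and `sigmoid_kernel` in `sklearn.metrics.pairwise` and `NearestNeighbors` in `sklearn.neighbors`. The sigmoid kernel defaults below (`gamma = 1 / n_features`, `coef0 = 1`) mirror scikit-learn's defaults, and Euclidean distance is assumed for the nearest-neighbor search; whether the project used those settings is an assumption.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Cosine of the angle between a and b; ignores vector magnitude."""
    norm = math.sqrt(dot(a, a)) * math.sqrt(dot(b, b))
    return dot(a, b) / norm if norm else 0.0


def sigmoid_kernel(a, b, gamma=None, coef0=1.0):
    """tanh(gamma * <a, b> + coef0); depends on the raw dot product."""
    if gamma is None:
        gamma = 1.0 / len(a)  # assumed default: 1 / number of features
    return math.tanh(gamma * dot(a, b) + coef0)


def k_nearest(reference, candidates, k=2):
    """Indices of the k candidates closest to reference (Euclidean)."""
    distances = [(math.dist(reference, c), i) for i, c in enumerate(candidates)]
    return [i for _, i in sorted(distances)[:k]]


# Invented feature vectors, e.g. (energy, danceability, valence).
reference = [0.9, 0.7, 0.2]
candidates = [
    [0.8, 0.7, 0.3],
    [0.1, 0.2, 0.9],
    [0.9, 0.6, 0.2],
]

cos_scores = [cosine_similarity(reference, c) for c in candidates]
sig_scores = [sigmoid_kernel(reference, c) for c in candidates]
neighbors = k_nearest(reference, candidates, k=2)
```

Cosine similarity compares direction only, while the sigmoid kernel works on the unnormalized dot product, so the two can order candidates differently. That may be one reason the sigmoid kernel surfaces somewhat more varied recommendations.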

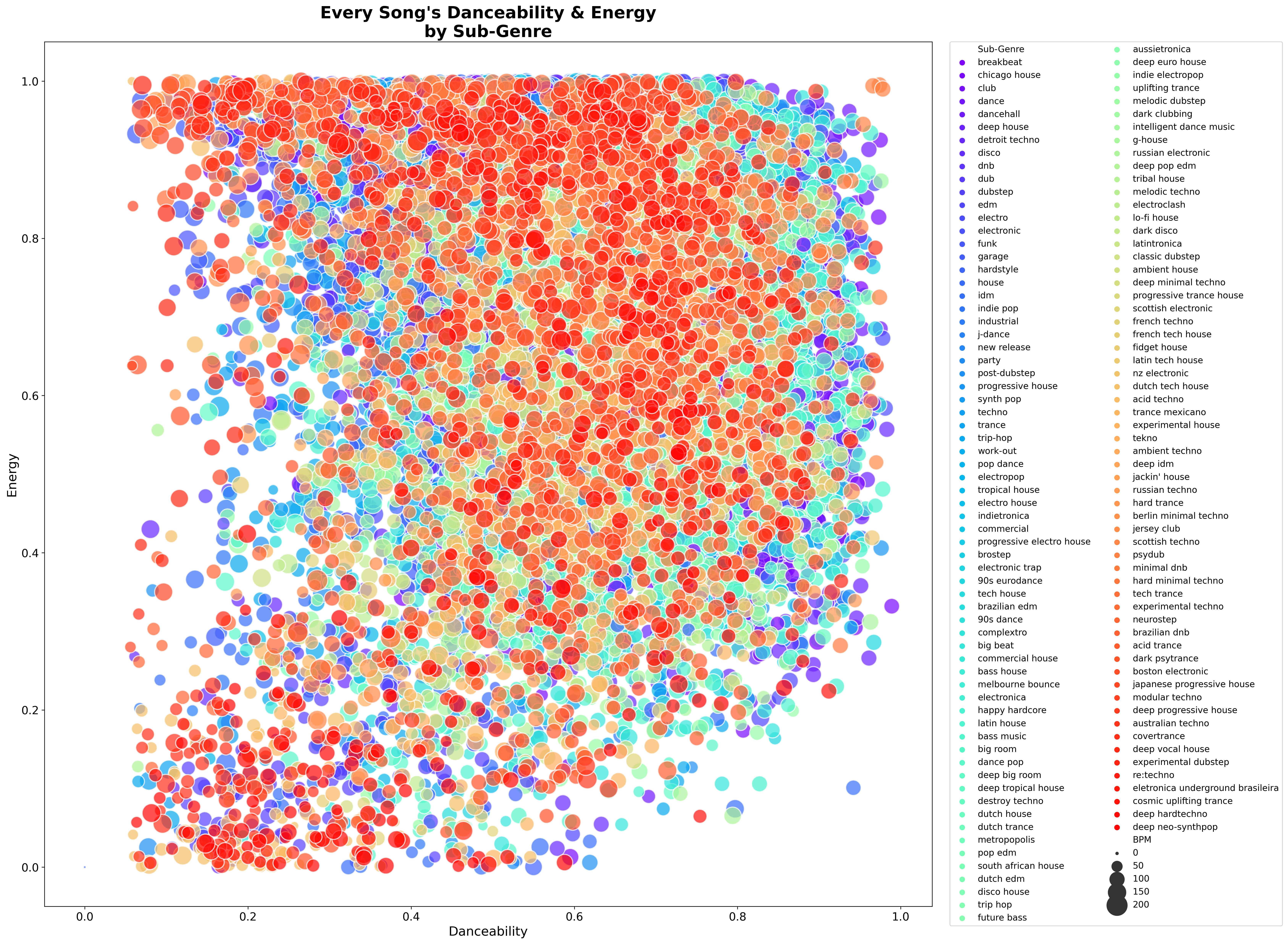

The final dataset has just under 23,000 songs spanning 128 sub-genres. It comes as no surprise that the majority of songs within the dataset score high in both energy and danceability. Both features somewhat define electronic dance music.

Recommendations are made by testing numerous modeling algorithms and functions, all similarity- and distance-based. Cosine similarity and K-Nearest Neighbors yield the most consistent results, while sigmoid kernel provides valid recommendations with a bit of variety. The other kernel functions (linear, polynomial, chi-squared, etc.) produce recommendations that differ little from those of cosine similarity or sigmoid kernel, so they aren't used in the final model function.

While the scores of each algorithm/function are used to create the "mathematically most similar" recommendations, what really matters is how the songs sound when compared to the provided reference song. As such, the validity of the results is somewhat subjective.

Please note: In the video comparisons below, the reference song is repeatedly played before each recommendation. This is so the ear can make a direct comparison with the reference song rather than the other recommendations. After all, live DJs are only looking for a next-song recommendation while another song is currently playing.

- The first recommended song is so similar to the reference that DJs have made mashups of the 2 songs. From the similar instrumentation to the nearly-identical syncopated arpeggio, everything about this recommendation is spot-on.

- The second recommended song captures the essence and energy of the reference without sounding exactly the same.

- Interestingly, the third recommendation is a song from the same artist and released in the same year as the reference. Release year and artist are not a part of the modeled data so the similarity is being identified within the audio features.

Reference_Song_vs_Recommendations_1.mp4

A slightly different approach is used for this next batch of recommendations. To test robustness, the final model function is set to recommend songs across all sub-genres. Notice the reference song has a piano-heavy, Disco-infused, almost throwback feel to it. Its energy is upbeat and its valence is positive.

- The first recommendation carries that same positivity and upbeat energy. Like the reference song, it also has vocals and a Disco-inspired bassline.

- The second recommendation sounds the most dissimilar of all the recommendations. It has a few filtered instrumentation elements like the reference song but it's hard to describe its energy as upbeat or its valence as positive.

- The third recommendation also comes from quite a different sub-genre but its sound is energetic, positive and it too features vocals. It would be a nice change of pace from the reference song without sounding like there was no connection.

Reference_Song_vs_Recommendations_2.mp4

Judged by ear, the recommendation system performs well. It's able to provide a variety of recommendations that include both songs that sound nearly identical to the reference and songs with similar energy, danceability and valence. Given the number of results within the scope of the provided reference song, there's a strong likelihood a DJ would be able to select a song from the list of recommendations. Remember, it only takes 1 usable song for the recommendation system to successfully achieve its objective.

There are a few limitations with this project:

- The data relies heavily on the original tagging of sub-genre and audio features for making recommendations. If, for whatever reason, a song's sub-genre, danceability, etc. wasn't initially tagged properly, the recommendations will reflect those deficiencies.

- The final dataset includes just under 23,000 songs. This is likely a severe underrepresentation of the electronic dance music in Spotify's database of over 70 million songs.

- The final dataset is also static, in that it includes data from other raw datasets and isn't exclusively derived from Spotify API calls. Over time, the data will become outdated and new releases won't be represented in the data, both for reference and recommendation.

- Recommendations for sub-genres like Tech House and Techno, where audio features vary and songs are harder to classify, aren't as consistent. It's unsurprising given the ambiguity of the sub-genres' features but it still yields weaker recommendations.

Should this project be continued, the following next steps could be explored:

- Expanding the data through additional Spotify API calls

- Importing new data, through API calls or web scraping, using an electronic music-specific store like Beatport, Juno Download, Traxsource, etc.

- Deploying the final model function to a mobile app, or at least a mobile-responsive application like Streamlit, so DJs can access recommendations on their phones while mixing

Please review the full analysis in my Jupyter Notebook or presentation deck.

For additional questions, feel free to contact me.

├── data <- Source data .csv files

├── images <- Exported Notebook visualizations

├── README.md <- Top-level README for reviewers of this project

├── environment.yml <- Environment .yml file for reproducibility

├── main_notebook.ipynb <- Technical and narrative documentation in Jupyter Notebook

├── project_presentation.pdf <- PDF version of project presentation

├── requirements.txt <- Requirements .txt file for reproducibility

└── spotify_authorization.py <- Spotify authorization function to call in Main Notebook