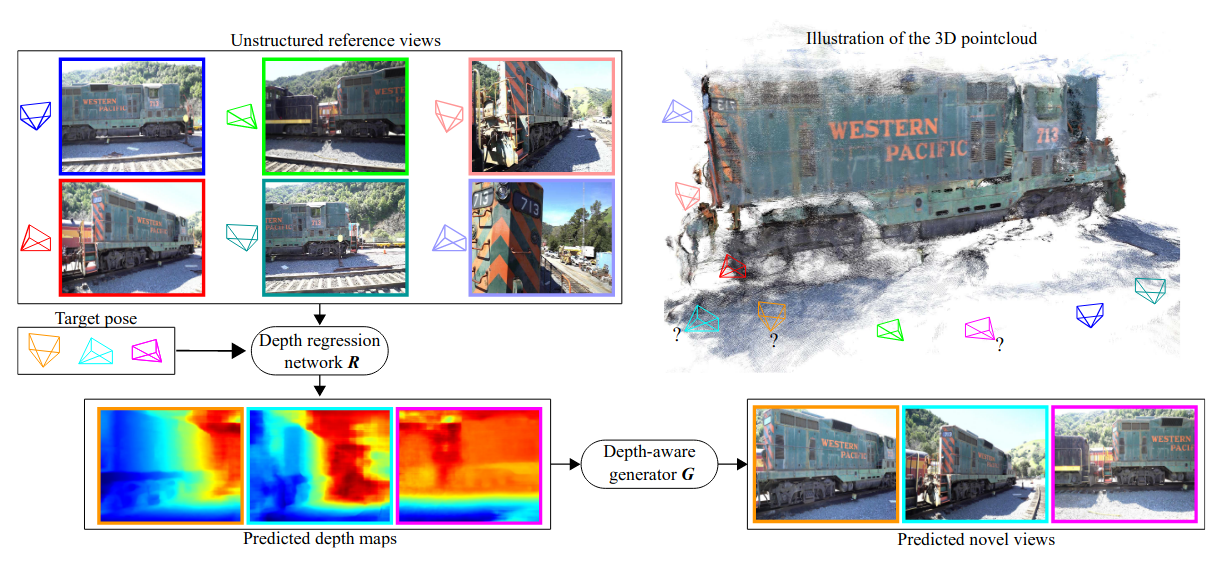

This repository contains a PyTorch Lightning implementation of the 3DV 2021 paper RGBD-Net. We propose a new cascaded architecture for novel view synthesis, called RGBD-Net, which consists of two core components: a hierarchical depth regression network and a depth-aware generator network. The former predicts depth maps for the target views using adaptive depth scaling, while the latter leverages the predicted depths to render spatially and temporally consistent target images.
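The README does not spell out how the depth regression network turns its scores into a depth value. As a hedged illustration only, the sketch below shows soft-argmin regression, a common way learned multi-view stereo methods (such as the MVSNet family, from which this code partially borrows via CasMVSNet_pl) regress a continuous depth from scores over discrete depth hypotheses. The function `soft_argmin_depth` and its inputs are hypothetical and not part of this repository.

```python
import math


def soft_argmin_depth(scores, hypotheses):
    """Expected depth under softmax(scores) over a set of depth hypotheses.

    scores: per-hypothesis matching scores for one pixel (higher = more likely).
    hypotheses: candidate depths, same length as scores.
    """
    if not scores or len(scores) != len(hypotheses):
        raise ValueError("scores and hypotheses must be non-empty and equally long")
    m = max(scores)  # subtract the max before exponentiating for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    # Probability-weighted average of the candidate depths.
    return sum(w * d for w, d in zip(weights, hypotheses)) / total


if __name__ == "__main__":
    # Symmetric scores around the middle hypothesis: the estimate stays at 600.
    print(soft_argmin_depth([0.0, 2.0, 0.0], [550.0, 600.0, 650.0]))
    # A higher score on 650 pulls the estimate between 600 and 650.
    print(soft_argmin_depth([0.0, 2.0, 1.0], [550.0, 600.0, 650.0]))
```

Because the result is a weighted average, it can fall between hypotheses, which is what lets a coarse set of candidates yield a finer-grained estimate.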

Install the environment:

pip install pytorch-lightning inplace_abn

pip install imageio pillow scikit-image opencv-python configargparse lpips
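Several of these packages are imported under a different name than the one passed to pip. A small, hypothetical helper like the one below (standard library only) can confirm that everything is importable before training; the module list is our assumption of the import names for the packages above.

```python
import importlib.util

# Import names for the packages installed above (pip name in the comment where it differs).
MODULES = [
    "torch",              # installed as a dependency of pytorch-lightning
    "pytorch_lightning",  # pip: pytorch-lightning
    "inplace_abn",
    "imageio",
    "PIL",                # pip: pillow
    "skimage",            # pip: scikit-image
    "cv2",                # pip: opencv-python
    "configargparse",     # pip: ConfigArgParse
    "lpips",
]


def missing_packages(modules=MODULES):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    print("All packages found." if not missing else "Missing: " + ", ".join(missing))
```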

Download the preprocessed DTU training data and Depth_raw from the original MVSNet repository and unzip them. We provide a DTU example; please follow its folder structure.
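Since the data loader expects the same layout as the provided DTU example, one quick way to catch mistakes after unzipping is to compare your dataset's folder tree against the example's. The `compare_layout` helper below is a hypothetical sketch, not part of the repository; it only compares directory names up to `max_depth` levels and does not check file contents.

```python
from pathlib import Path


def dir_tree(root, max_depth=1):
    """Relative paths of all sub-directories of `root`, down to `max_depth` levels."""
    root = Path(root)
    found = set()

    def walk(path, depth):
        if depth > max_depth:
            return
        for child in path.iterdir():
            if child.is_dir():
                found.add(child.relative_to(root).as_posix())
                walk(child, depth + 1)

    walk(root, 1)
    return found


def compare_layout(example_root, data_root, max_depth=1):
    """Return (missing, extra): folders only in the example, and folders only in your data."""
    example = dir_tree(example_root, max_depth)
    data = dir_tree(data_root, max_depth)
    return sorted(example - data), sorted(data - example)


if __name__ == "__main__":
    # Hypothetical paths: point these at the provided example and your unzipped data.
    missing, extra = compare_layout("./dtu_example", "./dataset_path")
    print("missing:", missing)
    print("extra:", extra)
```

An empty `missing` list is what matters; `extra` folders (for example, additional scans) are usually harmless.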

With depth supervision

python train.py --root_dir dataset_path --num_epochs 32 \
--batch_size 4 --depth_interval 2.65 --n_depths 8 32 48 --interval_ratios 1.0 2.0 4.0 \
--optimizer adam --lr 1e-3 --lr_scheduler cosine --num_gpus 4 --loss_type sup --exp_name sup \
--ckpt_dir ./ckpts --log_dir ./logs
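The README does not document how `--depth_interval`, `--n_depths` and `--interval_ratios` interact. Because the code partially borrows from CasMVSNet_pl, the sketch below assumes that project's convention: the two lists are ordered from the finest to the coarsest stage, the coarsest stage runs first, and each stage places `n_depths` hypotheses spaced by `depth_interval * interval_ratio`, centred on the previous stage's estimate. Treat `cascade_plan` and `stage_hypotheses` as hypothetical helpers for reasoning about the flags, not as the repository's implementation.

```python
def cascade_plan(depth_interval, n_depths, interval_ratios):
    """Return (n_depths, interval, span) per stage, from the first (coarsest) stage to the last.

    ASSUMPTION (CasMVSNet_pl convention): both lists are given fine -> coarse,
    so the last entry describes the coarsest stage, which runs first.
    `span` is the distance between the first and last hypothesis of a stage.
    """
    if len(n_depths) != len(interval_ratios):
        raise ValueError("n_depths and interval_ratios must have the same length")
    plan = []
    for n, ratio in reversed(list(zip(n_depths, interval_ratios))):
        interval = depth_interval * ratio
        plan.append((n, interval, (n - 1) * interval))
    return plan


def stage_hypotheses(center, n, interval):
    """n evenly spaced depth hypotheses, `interval` apart, centred on `center`
    (e.g. the depth estimated by the previous, coarser stage)."""
    start = center - (n - 1) / 2 * interval
    return [start + i * interval for i in range(n)]


if __name__ == "__main__":
    # Flag values taken from the training commands in this README.
    for n, interval, span in cascade_plan(2.65, [8, 32, 48], [1.0, 2.0, 4.0]):
        print(f"{n:2d} hypotheses, interval {interval:.2f}, span {span:.2f}")
```

Under these assumptions, the flags above give a first stage of 48 hypotheses at an interval of 10.6, then 32 at 5.3, then 8 at 2.65, so the search range shrinks at every stage while the spacing becomes finer.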

Without depth supervision

python train.py --root_dir dataset_path --num_epochs 32 \
--batch_size 4 --depth_interval 2.65 --n_depths 8 32 48 --interval_ratios 1.0 2.0 4.0 \
--optimizer adam --lr 1e-3 --lr_scheduler cosine --num_gpus 4 --loss_type unsup --exp_name unsup \
--ckpt_dir ./ckpts --log_dir ./logs

To test, change the weight path in test.py to point to your trained model, then run:

python test.py --root_dir dataset_path
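Instead of looking up the weight path by hand after each run, you could locate the newest checkpoint programmatically. The sketch below assumes checkpoints are written with PyTorch Lightning's default `.ckpt` extension somewhere under `--ckpt_dir` (optionally in a sub-folder named after `--exp_name`, which is an assumption). `latest_checkpoint` is a hypothetical helper; you would still copy its result into the weight path in test.py yourself.

```python
from pathlib import Path


def latest_checkpoint(ckpt_dir="./ckpts", exp_name=None):
    """Most recently modified .ckpt file under ckpt_dir (or ckpt_dir/exp_name), or None."""
    root = Path(ckpt_dir) / exp_name if exp_name else Path(ckpt_dir)
    if not root.is_dir():
        return None
    # Search recursively so the exact sub-folder layout does not matter.
    ckpts = list(root.rglob("*.ckpt"))
    return max(ckpts, key=lambda p: p.stat().st_mtime) if ckpts else None


if __name__ == "__main__":
    print(latest_checkpoint("./ckpts", "sup"))
```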

If you find our code or paper helpful, please consider citing:

@inproceedings{nguyen2021rgbd,
  title={RGBD-Net: Predicting color and depth images for novel views synthesis},
  author={Nguyen-Ha, Phong and Karnewar, Animesh and Huynh, Lam and Rahtu, Esa and Heikkila, Janne},
  booktitle={Proceedings of the International Conference on 3D Vision},
  year={2021}
}

Big thanks to CasMVSNet_pl, from which parts of our code are borrowed.