Johan Edstedt · Georg Bökman · Mårten Wadenbäck · Michael Felsberg

The DeDoDe detector learns to detect 3D-consistent, repeatable keypoints, which the DeDoDe descriptor learns to match. The result is a powerful decoupled local feature matcher.

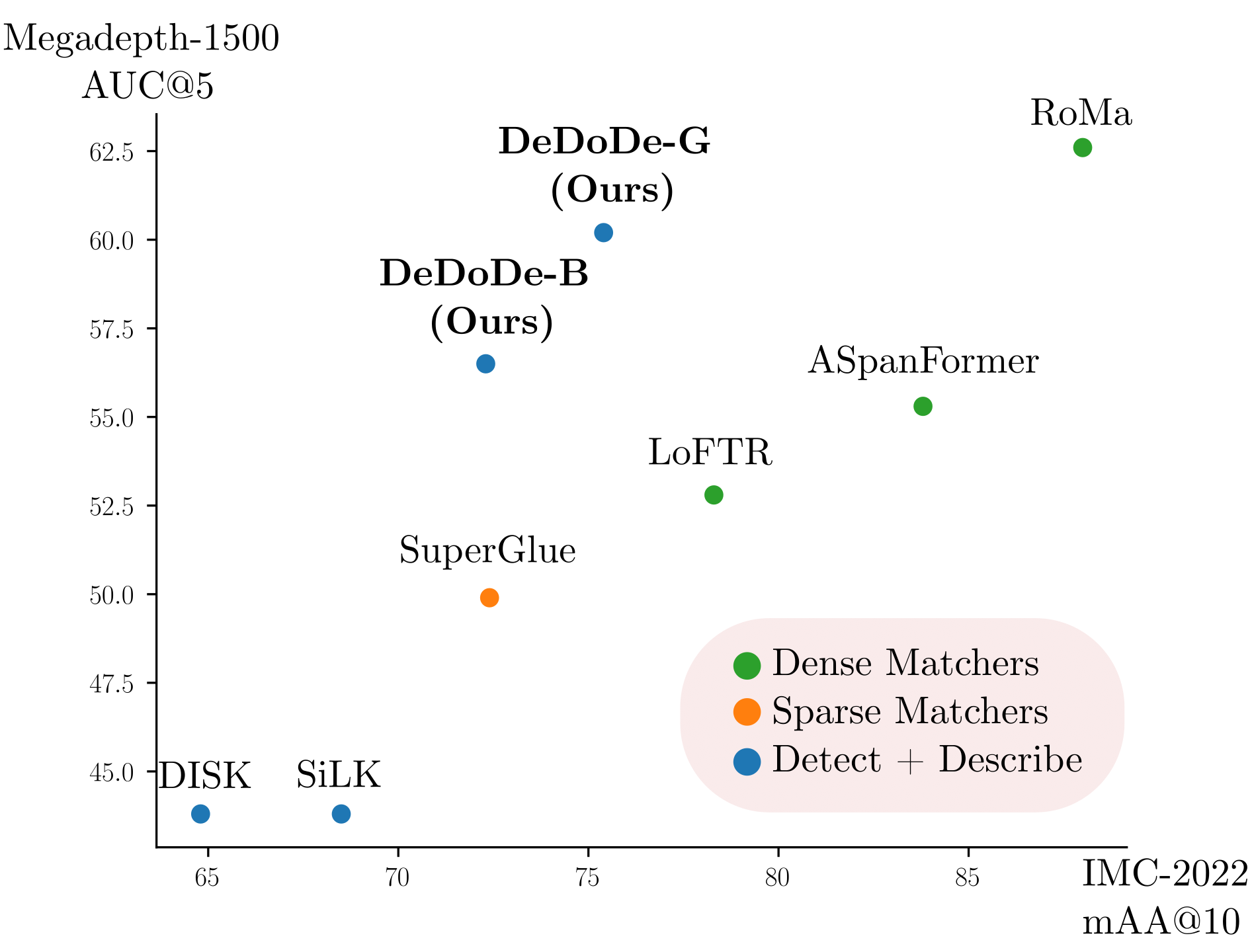

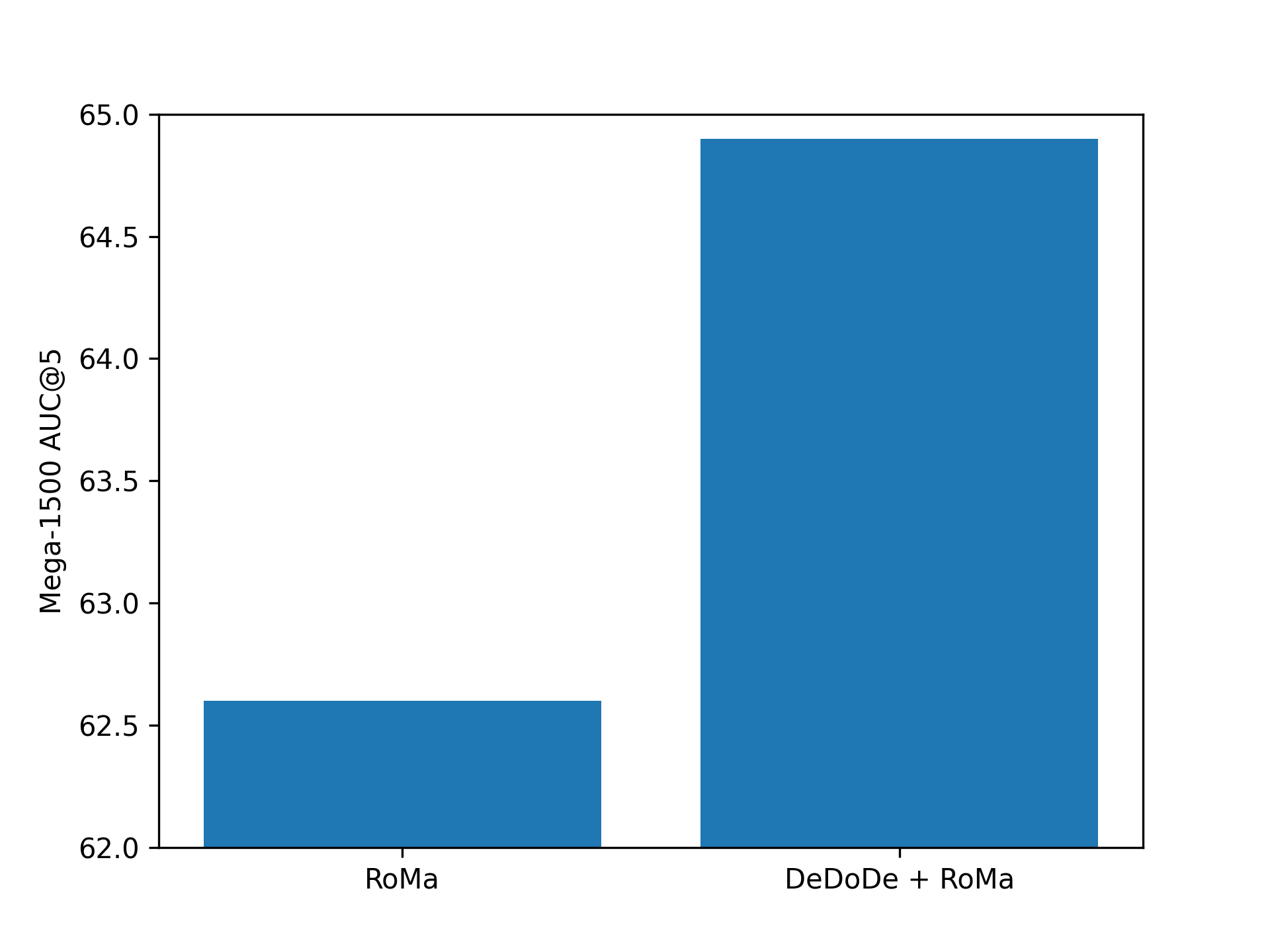

We experimentally find that DeDoDe significantly closes the performance gap between detector + descriptor models and fully-fledged matchers. The potential of DeDoDe is not limited to local feature matching: we also find that we can improve state-of-the-art matchers by incorporating DeDoDe keypoints.

Below we show how DeDoDe can be run; you can also check out the demos.

```python
import torch
from PIL import Image

from DeDoDe import dedode_detector_L, dedode_descriptor_B, dedode_descriptor_G
from DeDoDe.matchers.dual_softmax_matcher import DualSoftMaxMatcher

detector = dedode_detector_L(weights = torch.load("dedode_detector_L.pth"))

# Choose either the smaller descriptor (B)...
descriptor = dedode_descriptor_B(weights = torch.load("dedode_descriptor_B.pth"))
# ...or the larger one (G), which overrides the line above
descriptor = dedode_descriptor_G(weights = torch.load("dedode_descriptor_G.pth"),
                                 dinov2_weights = None) # You can manually load dinov2 weights, or we'll pull from facebook

matcher = DualSoftMaxMatcher()

im_A_path = "assets/im_A.jpg"
im_B_path = "assets/im_B.jpg"
im_A = Image.open(im_A_path)
im_B = Image.open(im_B_path)
W_A, H_A = im_A.size
W_B, H_B = im_B.size

# Detect keypoints (and their confidences) in each image
detections_A = detector.detect_from_path(im_A_path, num_keypoints = 10_000)
keypoints_A, P_A = detections_A["keypoints"], detections_A["confidence"]
detections_B = detector.detect_from_path(im_B_path, num_keypoints = 10_000)
keypoints_B, P_B = detections_B["keypoints"], detections_B["confidence"]

# Describe the detected keypoints
description_A = descriptor.describe_keypoints_from_path(im_A_path, keypoints_A)["descriptions"]
description_B = descriptor.describe_keypoints_from_path(im_B_path, keypoints_B)["descriptions"]

# Match the descriptions with the dual-softmax matcher
matches_A, matches_B, batch_ids = matcher.match(keypoints_A, description_A,
                                                keypoints_B, description_B,
                                                P_A = P_A, P_B = P_B,
                                                normalize = True, inv_temp=20, threshold = 0.1) # Increasing threshold -> fewer matches, fewer outliers

# Convert the matched keypoints to pixel coordinates
matches_A, matches_B = matcher.to_pixel_coords(matches_A, matches_B, H_A, W_A, H_B, W_B)
```

The pretrained weights (e.g. `dedode_detector_L.pth` above) can currently be found here: https://github.com/Parskatt/DeDoDe/releases/tag/dedode_pretrained_models. We will probably add automatic loading in the near future.
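To make the `inv_temp` and `threshold` arguments and the final coordinate conversion in the example above more concrete, here is a minimal pure-Python sketch of dual-softmax matching. It is not DeDoDe's `DualSoftMaxMatcher`, which works on batched torch tensors and also accepts `P_A`/`P_B`. The helpers `dual_softmax_match` and `to_pixel_coords` below are hypothetical, and the sketch assumes that a match is a mutual maximum of the dual-softmax score that exceeds `threshold`, and that keypoints are expressed in normalized [-1, 1] image coordinates.

```python
import math


def l2_normalize(rows):
    """Scale each descriptor (a list of floats) to unit L2 norm."""
    out = []
    for r in rows:
        n = math.sqrt(sum(v * v for v in r)) or 1.0
        out.append([v / n for v in r])
    return out


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def dual_softmax_match(desc_A, desc_B, inv_temp=20.0, threshold=0.1, normalize=True):
    """Hypothetical sketch: return index pairs (i, j) that are mutual maxima of
    P[i][j] = softmax_over_B(S)[i][j] * softmax_over_A(S)[i][j], with
    S = inv_temp * A @ B^T, and whose score P[i][j] exceeds `threshold`."""
    if not desc_A or not desc_B:
        return []
    if normalize:
        desc_A, desc_B = l2_normalize(desc_A), l2_normalize(desc_B)
    # Scaled similarity matrix S (len(desc_A) x len(desc_B)).
    S = [[inv_temp * sum(a * b for a, b in zip(da, db)) for db in desc_B]
         for da in desc_A]
    row_sm = [softmax(row) for row in S]  # row_sm[i][j]: softmax over B for A_i
    col_sm = [softmax([S[i][j] for i in range(len(S))])  # col_sm[j][i]: softmax over A for B_j
              for j in range(len(desc_B))]
    P = [[row_sm[i][j] * col_sm[j][i] for j in range(len(desc_B))]
         for i in range(len(desc_A))]
    matches = []
    for i, row in enumerate(P):
        j = max(range(len(row)), key=row.__getitem__)         # best B for A_i
        best_i = max(range(len(P)), key=lambda k: P[k][j])    # best A for B_j
        if best_i == i and row[j] > threshold:                # mutual and confident
            matches.append((i, j))
    return matches


def to_pixel_coords(x, y, H, W):
    """Map normalized coordinates in [-1, 1] to pixel coordinates
    (assumed convention: -1 -> 0 and +1 -> W or H)."""
    return W / 2 * (x + 1), H / 2 * (y + 1)
```

For example, `dual_softmax_match([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.1]])` pairs each descriptor with its clear counterpart, giving `[(0, 1), (1, 0)]`. An ambiguous descriptor such as `[1.0, 1.0]` compared against `[[1.0, 0.0], [0.0, 1.0]]` only reaches a score of 0.5, so it is kept at `threshold=0.1` but rejected at `threshold=0.6`. This illustrates why raising the threshold yields fewer matches and fewer outliers.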

- fabio-sim/DeDoDe-ONNX-TensorRT: Deploy DeDoDe with ONNX and TensorRT 🚀

- Image Matching WebUI: a web GUI to easily compare different matchers, including DeDoDe 🤗

All code and models except DINOv2 (used by descriptor-G) are MIT licensed (do whatever you want). DINOv2 has a non-commercial license (see LICENSE_DINOv2).

```bibtex
@article{edstedt2023dedode,
  title={{DeDoDe: Detect, Don't Describe -- Describe, Don't Detect for Local Feature Matching}},
  author={Johan Edstedt and Georg Bökman and Mårten Wadenbäck and Michael Felsberg},
  year={2023},
  eprint={2308.08479},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```