Crowd Analyzer is a Python application designed to analyze pedestrian and crowd mobility patterns using computer vision and machine learning techniques. It incorporates YOLO for object detection, Kalman filters for tracking, and various methods for density and speed estimation.

The application also utilizes PedPy to process pedestrian trajectories and LlamaVision 90B via the Groq API for real-time interpretation of PedPy output plots.

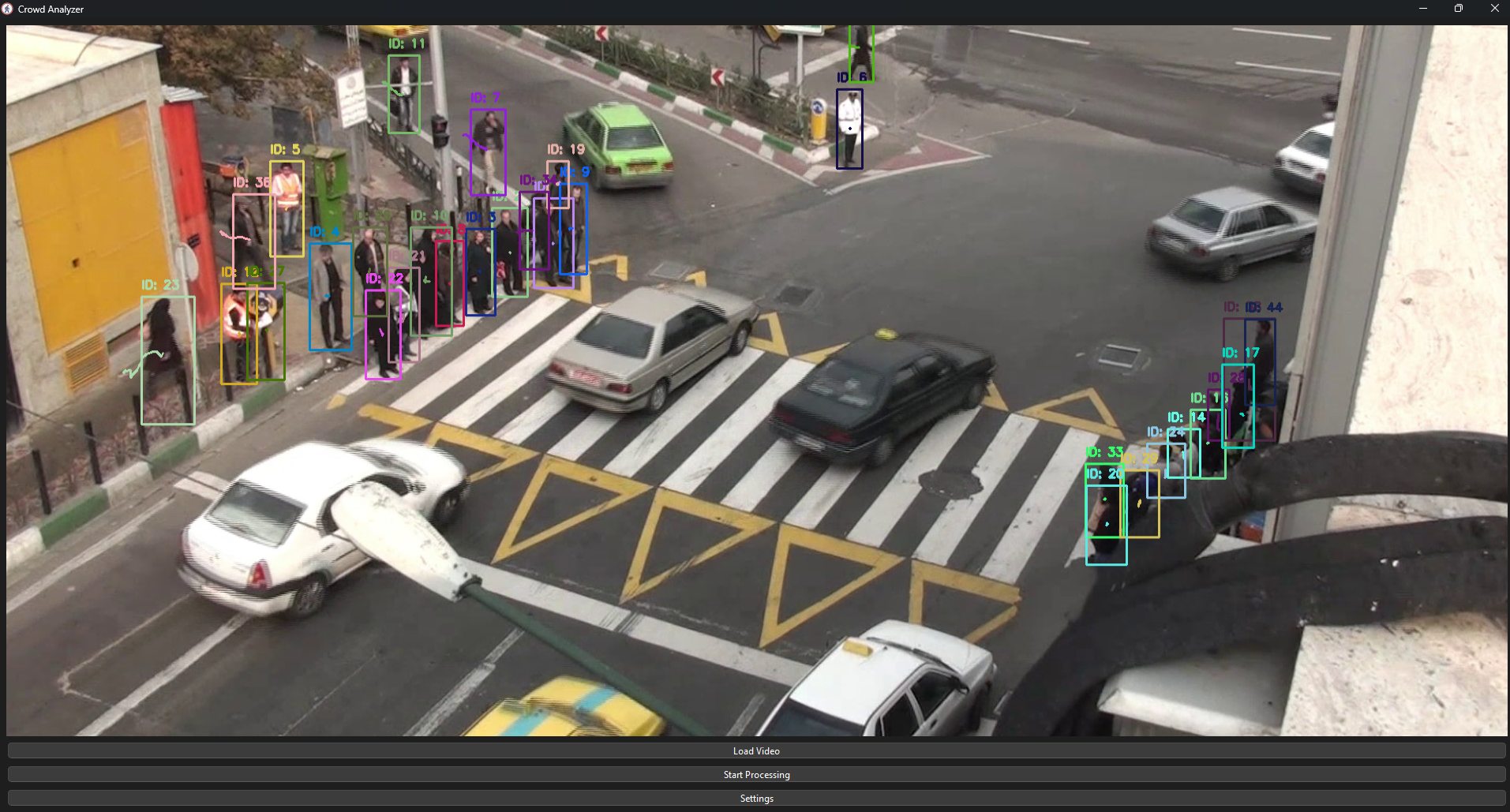

- Object detection using YOLO

- Kalman filter-based tracking

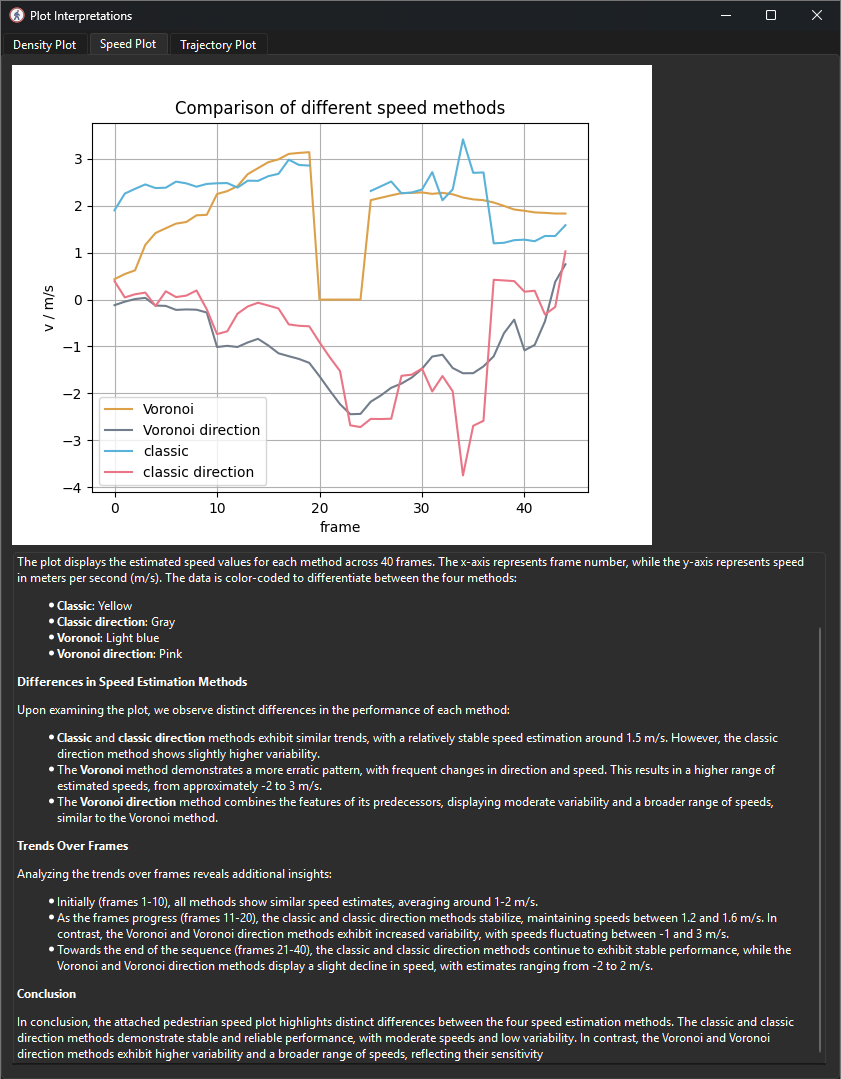

- Density and speed estimation using Voronoi and classic methods

- Interactive point selection for homography transformation

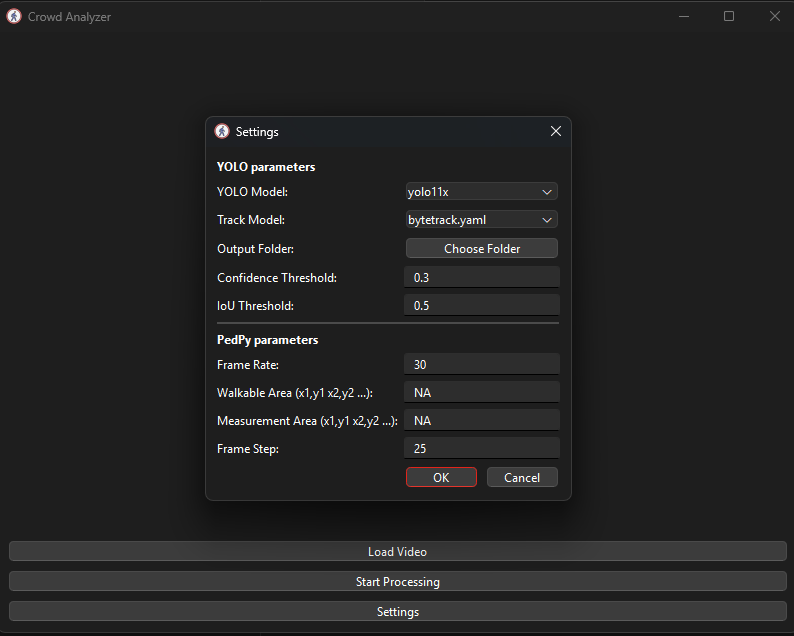

- Graphical user interface (GUI) using PyQt6

- Visualization of trajectories, density, and speed plots using PedPy

- Real-time plot interpretation using LlamaVision AI
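To make the "classic" density and speed estimates listed above concrete, here is a minimal sketch in plain Python. It is not the code used by Crowd Analyzer or PedPy. The names `classic_density` and `mean_speed`, the track layout (a dict from frame index to position), and the rectangular measurement area are assumptions chosen for illustration. Positions are assumed to be in metres, i.e. already mapped from pixels through the homography.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]   # (x, y) in metres, assumed already homography-corrected
Track = Dict[int, Point]      # frame index -> position of one pedestrian


def classic_density(tracks: List[Track], frame: int,
                    area: Tuple[float, float, float, float]) -> float:
    """Pedestrians per square metre inside an axis-aligned rectangle.

    `area` is (x_min, y_min, x_max, y_max) in metres.
    """
    x_min, y_min, x_max, y_max = area
    inside = sum(
        1
        for track in tracks
        if frame in track
        and x_min <= track[frame][0] <= x_max
        and y_min <= track[frame][1] <= y_max
    )
    return inside / ((x_max - x_min) * (y_max - y_min))


def mean_speed(track: Track, frame: int, window: int, fps: float) -> float:
    """Speed in m/s from the displacement between frame - window and frame + window."""
    x0, y0 = track[frame - window]
    x1, y1 = track[frame + window]
    distance = ((x1 - x0) ** 2 + (y1 - y0) ** 2) ** 0.5
    elapsed = 2 * window / fps   # seconds covered by the frame window
    return distance / elapsed
```

The classic density simply counts heads per unit area, so it jumps whenever a pedestrian crosses the boundary of the measurement area. The Voronoi method smooths this by assigning each pedestrian a cell and using the inverse cell area instead.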

Sample_1080.mp4

Demo.mp4

Video processing and tracking visualization

Density, speed, and trajectory analysis plots using PedPy and LlamaVision

- Clone the repository:

      git clone https://github.com/yourusername/crowd-analyzer.git
      cd crowd-analyzer

- Create a virtual environment and activate it:

      python -m venv venv
      source venv/bin/activate  # On Windows use `venv\Scripts\activate`

- Install the required dependencies:

      pip install -r requirements.txt

- Set up the environment variables:

  - Create a `.env` file in the project root directory.
  - Add your Groq API key to the `.env` file: `GROQ_API_KEY=your_groq_api_key`

- Run the application:

      python CrowdAnalyzer.py

- Load a video file using the "Load Video" button.

- Configure settings using the "Settings" button.

- Start processing the video using the "Start Processing" button.

- View the results and plots after processing is complete.
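The `.env` step in the setup instructions can be illustrated with a small standard-library sketch. How Crowd Analyzer actually reads the key is not stated here (a package such as python-dotenv is a common choice), so treat this as an assumption. The helper names `load_env_file` and `get_groq_api_key` are hypothetical.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict:
    """Parse KEY=VALUE lines from a .env file, skipping blank lines and comments."""
    values = {}
    env_path = Path(path)
    if not env_path.is_file():
        return values
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def get_groq_api_key(path: str = ".env") -> str:
    """Prefer an exported variable, fall back to the .env file, otherwise fail loudly."""
    key = os.environ.get("GROQ_API_KEY") or load_env_file(path).get("GROQ_API_KEY")
    if not key:
        raise RuntimeError("GROQ_API_KEY is not set; add it to .env or export it")
    return key
```

Failing early with a clear message is usually friendlier than letting the first API request fail with an authentication error after a long video has already been processed.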

Project structure:

- `CrowdAnalyzer.py`: Main application file containing the GUI and core functionality.
- `Tracker.py`: Contains the tracking and density estimation logic.
- `requirements.txt`: Lists the required Python packages.
- `.env`: Environment variables file for storing sensitive information like API keys.

Contributions are welcome! Please fork the repository and submit a pull request for any improvements or bug fixes.

This project is licensed under the MIT License.