This is the official implementation of the paper Initiative Defense against Voice Conversion through Generative Adversarial Network.

The left part of the figure represents the normal voice conversion process, while the right part illustrates the intention of our work. We introduce perturbations to the mel spectrogram of the target audio to prevent the voice conversion model from generating the intended output.

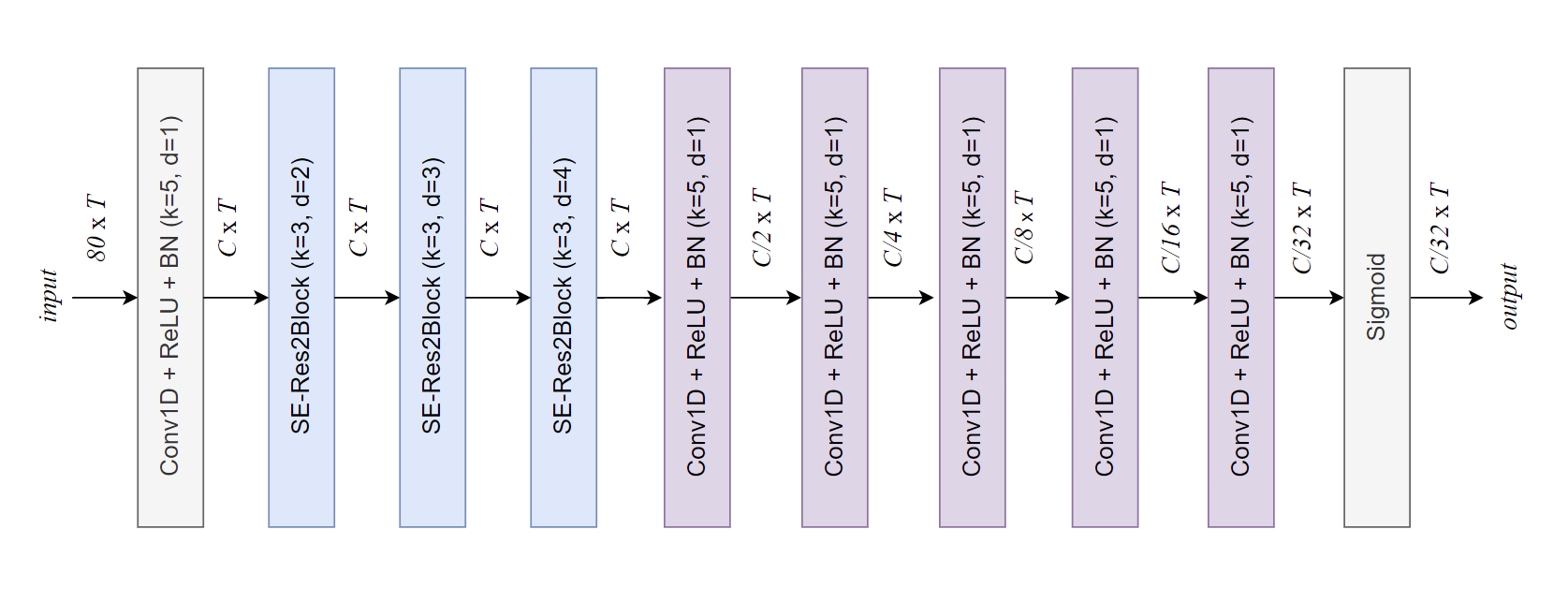

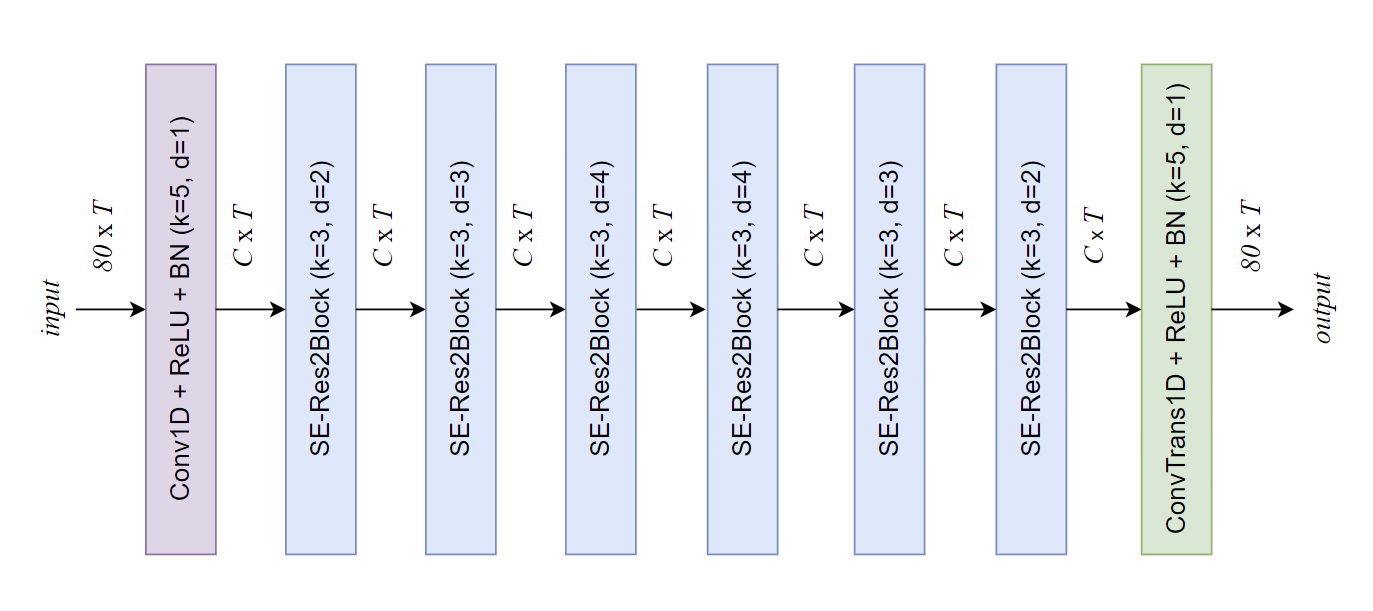

The SE-ResNet blocks were proposed in ECAPA-TDNN; their details can be found in the original ECAPA-TDNN paper.

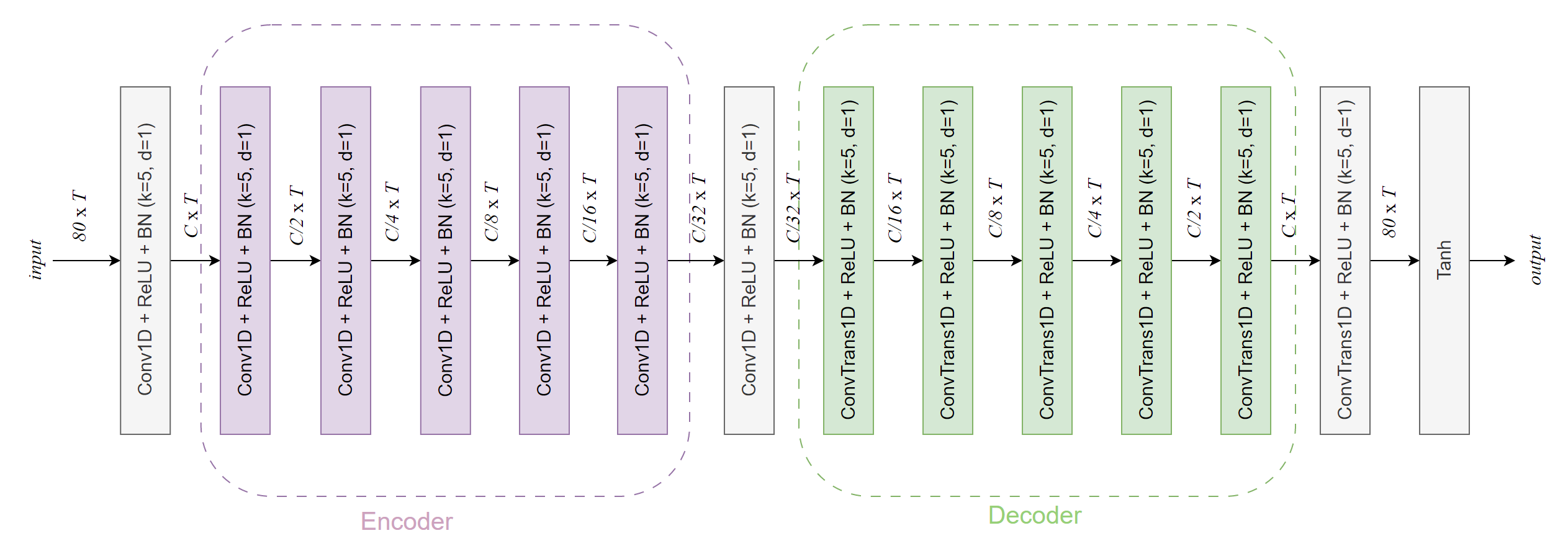

In the architecture diagram below, "Conv1D + ReLU + BN" represents a 1D convolutional block consisting of 1D convolutional layers, a ReLU activation function, and batch normalization. "ConvTrans1D" denotes a 1D transposed convolution layer. "k" indicates the kernel size, "d" the dilation, "C" the number of channels, and "T" the relative time length of the input mel-spectrogram.
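As a rough aid for reading the "k", "d", and "T" annotations, the sketch below computes how a 1D convolution or a 1D transposed convolution changes the time length. The formulas are the standard ones documented for PyTorch's `nn.Conv1d` and `nn.ConvTranspose1d`. The stride and padding values in the example are assumptions chosen for illustration, not values taken from this repository, and the helper names are hypothetical.

```python
def conv1d_out_len(t, k, d=1, stride=1, padding=0):
    """Time length after a 1D convolution with kernel size k and dilation d."""
    return (t + 2 * padding - d * (k - 1) - 1) // stride + 1


def conv_trans1d_out_len(t, k, d=1, stride=1, padding=0, output_padding=0):
    """Time length after a 1D transposed convolution."""
    return (t - 1) * stride - 2 * padding + d * (k - 1) + output_padding + 1


def same_padding(k, d=1):
    """Padding that keeps the length unchanged for stride 1 and odd k."""
    return d * (k - 1) // 2


if __name__ == "__main__":
    T = 128  # assumed number of mel frames, for illustration only

    # A dilated block (k=3, d=2) with "same" padding keeps the length at T.
    print(conv1d_out_len(T, k=3, d=2, padding=same_padding(3, 2)))

    # An assumed stride-2 convolution halves the length to T/2 ...
    half = conv1d_out_len(T, k=4, stride=2, padding=1)
    print(half)

    # ... and a matching transposed convolution restores it to T.
    print(conv_trans1d_out_len(half, k=4, stride=2, padding=1))
```

With these assumed settings, a label such as "T/2" on a layer corresponds to the output of a stride-2 convolution, and a "ConvTrans1D" layer with the same kernel, stride, and padding maps it back to "T".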

- AdaIN-VC: https://github.com/cyhuang-tw/AdaIN-VC

- VQVC+: https://github.com/ericwudayi/SkipVQVC

- AGAIN-VC: https://github.com/KimythAnly/AGAIN-VC

- TriAAN-VC: https://github.com/winddori2002/TriAAN-VC

Use `conda env create -f cc.yaml` to create the conda environment.

MelGAN: https://github.com/descriptinc/melgan-neurips

Resemblyzer: https://github.com/resemble-ai/Resemblyzer

- VCTK: https://datashare.ed.ac.uk/handle/10283/2950.

- Experimental samples can be found in the 'samples' folder under this repository.

The `checkpoints/netG` folder contains the weights of the generator, and `checkpoints/swcsm` contains the weights of the swcsm.

All hyper-parameter settings are in the file `hyperparameter.py`. You can adjust them according to your needs.

- Set the field `self.project_root` to the path of this repository. For steps 2-7, you can place all the data under the `data` folder within this repository.

- Set the field `self.vctk_48k` to the path of the VCTK dataset, e.g. `/you/path/to/the/VCTK-Corpus-0.92/wav48_silence_trimmed`, or put the dataset under the `data` folder of this repo.

- Set the field `self.vctk_22k` to the path where you want to place the resampled waveforms.

- Set the field `self.vctk_mels` to the path where you want to store the processed mels.

- Set the field `self.vctk_speaker_info` to the path of the VCTK `speaker-info.txt` file.

- Set the field `self.metadata` to the path where you place `metadata.json`.

- Set the field `self.vctk_rec_mels` to the path where you want to store the reconstructed mels, i.e. mels that are converted to waveforms and then re-extracted with the MelGAN preprocessing.

- Run `prepare_data.py` to 1) downsample the VCTK dataset, 2) extract mel spectrograms from the VCTK dataset, and 3) generate the metadata.

- Run `train_swcsm.py` to train the swcsm.

- Run `trainer.py` to train the framework.
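The path settings in the steps above can be sketched as follows. This is a minimal illustration, not the actual contents of `hyperparameter.py`: the class name `HyperParameter`, the sub-folder names under `data`, and the helper `missing_inputs` are all assumptions made for the example. Only the field names come from the steps above.

```python
import os


class HyperParameter:
    """Hypothetical stand-in for the settings object in hyperparameter.py."""

    def __init__(self, project_root):
        self.project_root = project_root
        data = os.path.join(project_root, "data")  # optional data folder of the repo

        # Inputs that must already exist (sub-folder names are assumptions).
        self.vctk_48k = os.path.join(data, "wav48_silence_trimmed")
        self.vctk_speaker_info = os.path.join(data, "speaker-info.txt")

        # Outputs written during data preparation (names are assumptions).
        self.vctk_22k = os.path.join(data, "vctk_22k")
        self.vctk_mels = os.path.join(data, "vctk_mels")
        self.metadata = os.path.join(data, "metadata.json")
        self.vctk_rec_mels = os.path.join(data, "vctk_rec_mels")

    def missing_inputs(self):
        """Return the required input paths that do not exist yet."""
        required = [self.vctk_48k, self.vctk_speaker_info]
        return [p for p in required if not os.path.exists(p)]


if __name__ == "__main__":
    hp = HyperParameter(os.getcwd())
    # Check the inputs before running prepare_data.py.
    for path in hp.missing_inputs():
        print("missing:", path)
```

A check like `missing_inputs` before running `prepare_data.py` surfaces a wrong dataset path early, rather than partway through resampling.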

Our implementation is heavily influenced by the repositories mentioned above and by the following repositories; we benefited greatly from their code and papers.