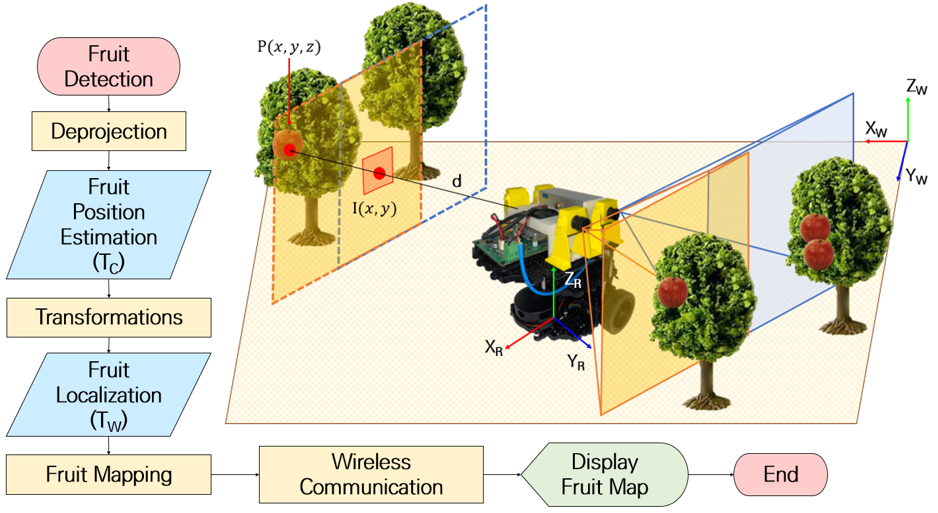

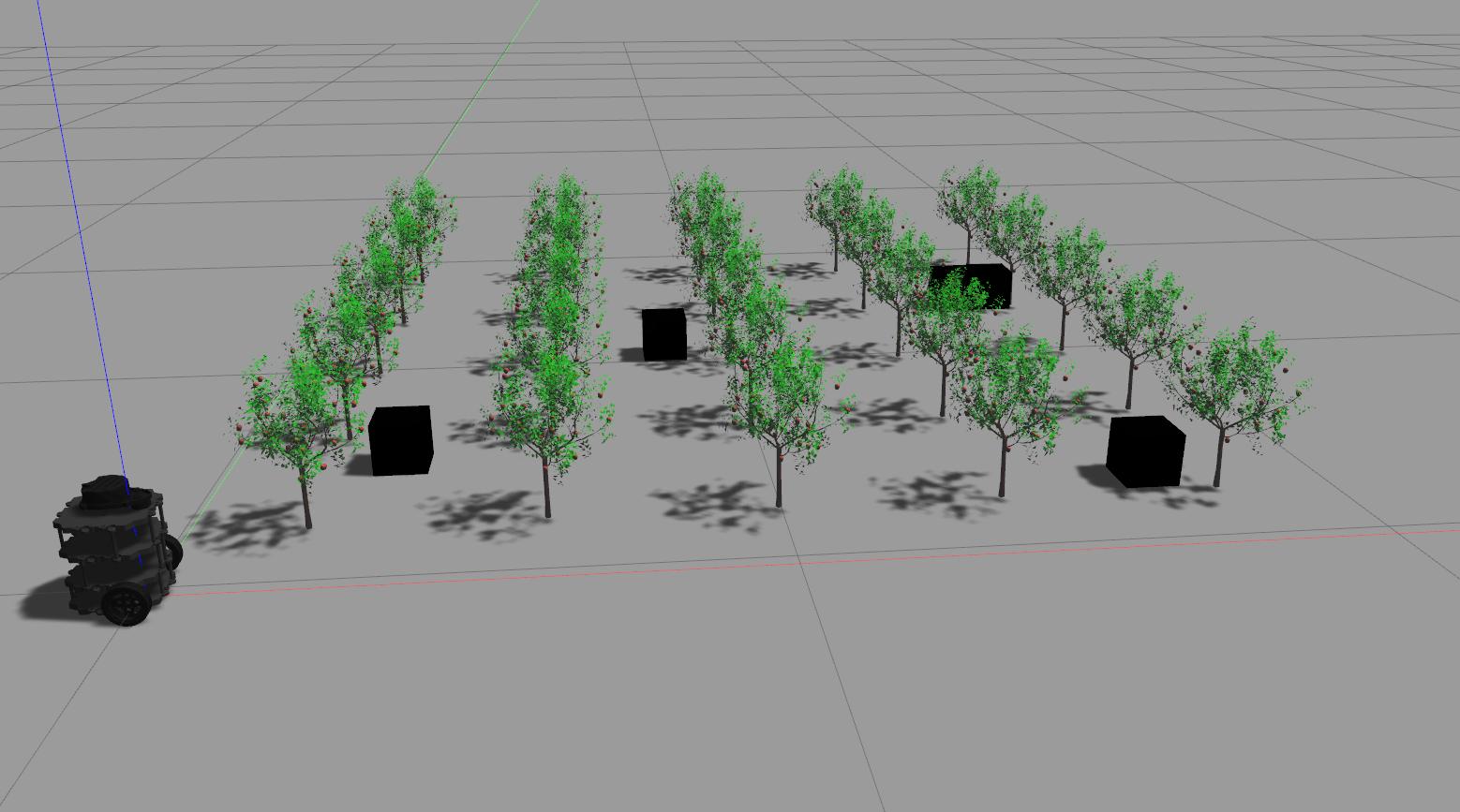

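Robot mission: Using the robot you built, drive from the start point to the finish point of an arena that simulates an orchard while detecting, classifying, and counting the fruit hanging on the model fruit trees (time limit for the robot mission: 5 minutes).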

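Driving: The robot must recognize the tree rows and drive between them without collisions.

- Each team may build a map during the preliminary trial allotted to it.

- Driving time is measured as the time the robot takes to travel from the start point, finishing fruit detection on every tree along the way, to the finish point.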
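Recognizing the tree rows can be illustrated with a simple lidar clustering step. The sketch below is an assumption for illustration and is not the code used in this repository. It takes the ranges, angle_min and angle_increment fields of a sensor_msgs/LaserScan message as plain Python values. It then groups consecutive returns into clusters that are likely to be tree trunks and returns their centroids. The 3 m range cutoff, 0.15 m gap and two-point minimum are hypothetical values, not tuned parameters.

```python
import math


def cluster_scan(ranges, angle_min, angle_increment,
                 max_range=3.0, gap=0.15, min_points=2):
    """Group consecutive lidar returns into clusters and return their centroids.

    ranges, angle_min and angle_increment mirror the sensor_msgs/LaserScan
    fields. Consecutive valid points closer than `gap` metres join the same
    cluster; clusters with fewer than `min_points` points are treated as noise.
    Returns a list of (x, y) centroids in the sensor frame, in scan order.
    """
    clusters, current, prev = [], [], None
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r <= 0.0 or r > max_range:
            # An invalid or too-distant return closes the current cluster.
            if current:
                clusters.append(current)
                current = []
            prev = None
            continue
        angle = angle_min + i * angle_increment
        point = (r * math.cos(angle), r * math.sin(angle))
        # A jump larger than `gap` between neighbouring points starts a new cluster.
        # math.dist requires Python 3.8 or newer.
        if prev is not None and math.dist(point, prev) > gap:
            clusters.append(current)
            current = []
        current.append(point)
        prev = point
    if current:
        clusters.append(current)
    # Average the points of each sufficiently large cluster.
    return [
        (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c))
        for c in clusters
        if len(c) >= min_points
    ]
```

With a full 360° scan, the first and last clusters may belong to the same object. A fuller implementation would therefore also merge clusters across the wrap-around point before grouping the trunk centroids into rows.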

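Avoidance: While driving, the robot must avoid the given obstacle models without colliding with them.

- The obstacles are rearranged randomly on the day of the main competition.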
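The avoidance requirement can be illustrated with a basic check on a single lidar scan. This is a hypothetical sketch, not code from this repository; in the repository, obstacle handling is done through the navigation stack and its DWA or TEB planner. The function takes LaserScan-style values as plain Python numbers and reports whether any valid return inside a forward sector is closer than a stop distance. The 20° half-width and 0.35 m stop distance are assumed thresholds.

```python
import math


def obstacle_ahead(ranges, angle_min, angle_increment,
                   half_width_rad=math.radians(20), stop_dist=0.35):
    """Return True if a valid lidar return within +/- half_width_rad of the
    robot's heading is closer than stop_dist (metres), otherwise False."""
    for i, r in enumerate(ranges):
        angle = angle_min + i * angle_increment
        # Wrap into [-pi, pi) so scans that run from 0 to 2*pi work as well.
        angle = (angle + math.pi) % (2 * math.pi) - math.pi
        # Skip inf/NaN and zero readings, which LaserScan uses for "no return".
        if abs(angle) <= half_width_rad and math.isfinite(r) and 0.0 < r < stop_dist:
            return True
    return False
```

A single-scan check like this has no memory. A real avoidance behaviour would feed such obstacles into the costmap or planner rather than simply stopping.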

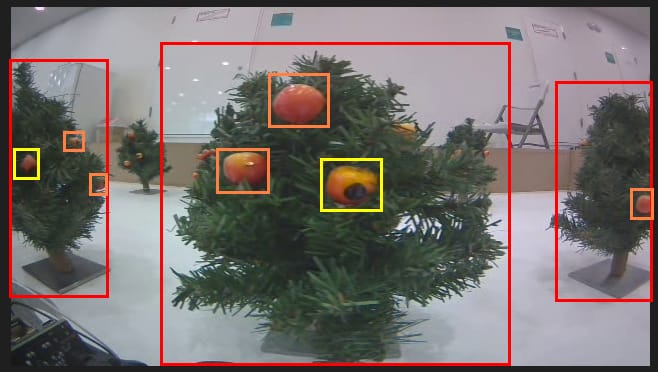

Detection: The fruit on the model trees must be classified as healthy or diseased and counted.
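The counting requirement amounts to tallying detections per tree and per class. The sketch below is illustrative only and is not the logic of digiag_1st.py. It assumes detections arrive as hypothetical (tree_id, class_name, confidence) tuples, and the class names "healthy" and "diseased" are placeholders that may differ from the trained model's labels. The 0.25 threshold matches the --conf value used in the commands further down.

```python
from collections import Counter, defaultdict

# Hypothetical class names; the labels of the trained model may differ.
HEALTHY, DISEASED = "healthy", "diseased"


def tally_fruit(detections, conf_threshold=0.25):
    """Count detections per tree and per class.

    detections: iterable of (tree_id, class_name, confidence) tuples.
    Returns {tree_id: Counter({class_name: count})}.
    """
    per_tree = defaultdict(Counter)
    for tree_id, cls, conf in detections:
        # Ignore low-confidence detections.
        if conf >= conf_threshold:
            per_tree[tree_id][cls] += 1
    return dict(per_tree)


dets = [(1, HEALTHY, 0.9), (1, DISEASED, 0.8), (1, HEALTHY, 0.1), (2, HEALTHY, 0.6)]
counts = tally_fruit(dets)
print(counts[1][HEALTHY], counts[1][DISEASED], counts[2][HEALTHY])  # 1 1 1
```

This assumes each fruit is detected exactly once. When counting from video, the same fruit appears in many frames, so some form of tracking or de-duplication would be needed before tallying.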

sudo apt-get install ros-noetic-slam-karto

sudo apt-get install ros-noetic-teb-local-planner

# Turtlebot

sudo apt install ros-noetic-turtlebot3-msgs

sudo apt install ros-noetic-turtlebot3

sudo apt install ros-noetic-dynamixel-sdk

To install this repository in your home folder:

cd ~

git clone git@github.com:pvela2017/KSAM-2022-Robotic-Competition.git

cd KSAM-2022-Robotic-Competition/ros

catkin_make

Before running the repository, the Gazebo model path needs to be set up:

echo 'export GAZEBO_MODEL_PATH=~/KSAM-2022-Robotic-Competition/ros/src/robot_gazebo/models:${GAZEBO_MODEL_PATH}' >> ~/.bashrc

source ~/.bashrc

To launch the Gazebo simulation:

source ./devel/setup.bash

roslaunch robot_gazebo scenario_1_world.launch

In another terminal:

cd ~/KSAM-2022-Robotic-Competition/ros

source ./devel/setup.bash

Karto SLAM:

roslaunch robot_slam robot_slam_simulation.launch slam_method:=karto

Gmapping SLAM:

roslaunch robot_slam robot_slam_simulation.launch slam_method:=gmapping

In another terminal:

cd ~/KSAM-2022-Robotic-Competition/ros

source ./devel/setup.bash

DWA planner:

roslaunch turtlebot3_navigation turtlebot3_navigation_simulation.launch planner:=dwa

TEB planner:

roslaunch turtlebot3_navigation turtlebot3_navigation_simulation.launch planner:=teb

To run on the real robot:

cd ~/KSAM-2022-Robotic-Competition/ros

source ./devel/setup.bash

roslaunch turtlebot_bringup turtlebot_robot

In another terminal:

cd ~/KSAM-2022-Robotic-Competition/ros

source ./devel/setup.bash

Karto SLAM:

roslaunch robot_slam robot_slam_real.launch slam_method:=karto

Gmapping SLAM:

roslaunch robot_slam robot_slam_real.launch slam_method:=gmapping

In another terminal:

cd ~/KSAM-2022-Robotic-Competition/ros

source ./devel/setup.bash

DWA planner:

roslaunch turtlebot3_navigation turtlebot3_navigation_real.launch planner:=dwa

TEB planner:

roslaunch turtlebot3_navigation turtlebot3_navigation_real.launch planner:=teb

In another terminal:

cd ~/KSAM-2022-Robotic-Competition/ros

source ./devel/setup.bash

roslaunch turtlebot3_teleop turtlebot3_teleop_key.launch

To start the waypoint navigation:

cd ~/KSAM-2022-Robotic-Competition/ros

source ./devel/setup.bash

rosrun turtlebot3_navigation goals.py

To reset the robot position in the simulation:

cd ~/KSAM-2022-Robotic-Competition/ros

source ./devel/setup.bash

rosrun turtlebot3_navigation reset.py

To launch the obstacle LED and camera servo:

cd ~/KSAM-2022-Robotic-Competition/ros

source ./devel/setup.bash

rosrun robot_competition leds_servos.py

To launch tree identification using the lidar:

cd ~/KSAM-2022-Robotic-Competition/ros

source ./devel/setup.bash

rosrun robot_competition tree_labels.py

YOLOX is a single-stage object detector that makes several modifications to YOLOv3 with a DarkNet53 backbone. We chose YOLOX because it is a lightweight model that can run on a Jetson Nano, and because we were already familiar with other YOLO networks.
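As a single-stage detector, YOLOX predicts many candidate boxes per image. The --conf and --nms options in the commands below set the score threshold and the IoU threshold used to prune those candidates. The plain-Python sketch below only illustrates that idea with greedy, class-agnostic non-maximum suppression. It is not YOLOX's own post-processing, which operates on PyTorch tensors and differs in detail. Boxes are assumed to be (x1, y1, x2, y2) tuples.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_and_nms(boxes, scores, conf_thre=0.25, nms_thre=0.45):
    """Drop boxes scoring below conf_thre, then greedily suppress any box whose
    IoU with an already kept box exceeds nms_thre.
    Returns the indices of the kept boxes, highest score first."""
    order = sorted((i for i, s in enumerate(scores) if s >= conf_thre),
                   key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= nms_thre for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (0, 0, 5, 5)]
scores = [0.9, 0.8, 0.7, 0.1]
print(filter_and_nms(boxes, scores))  # [0, 2]
```

In this example, the second box overlaps the first with an IoU of about 0.68 and is suppressed. The last box falls below the 0.25 score threshold and is discarded.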

To install the YOLO requirements:

cd ./YOLOX

conda create -n yolox python=3.7

conda activate yolox

conda install pytorch==1.8.0 torchvision==0.9.0 torchaudio==0.8.0 cudatoolkit=11.1 -c pytorch -c conda-forge

pip3 install -r requirements.txt

pip3 install -v -e .

PC test:

python3 tools/demo.py video -f ./nano.py -c ./best_ckpt.pth --path ./1_2.mp4 --conf 0.25 --nms 0.45 --tsize 320 --save_result --device [cpu/gpu]

To count the apples:

python3 digiag_1st.py capture -f ./nano.py -c ./best_ckpt.pth --conf 0.25 --nms 0.45 --tsize 320 --device gpu --save_result

Then, to save the results to the USB drive:

sudo fdisk -l

sudo mount ~/media/myusb2

If the camera drivers produce errors, use this workaround:

rm ~/.cache/gstreamer-1.0/registry.aarch64.bin

export LD_PRELOAD=/usr/lib/aarch64-linux-gnu/libgomp.so.1

sudo service nvargus-daemon restart

To train the model, please refer to the guidelines provided by YOLOX.