This is the official codebase of the paper "Assessing and Enhancing Large Language Models in Rare Disease Question-answering".

🌟 Please star our repo to follow the latest updates on ReDis-QA-Bench!

📣 We have released our paper and source code of ReDis-QA-Bench!

📙 We have released our benchmark dataset ReDis-QA!

📕 We have released ReCOP, our corpus for retrieval-augmented generation (RAG)!

📘 The baseline corpora are PubMed, Textbooks, Wikipedia, and StatPearls!

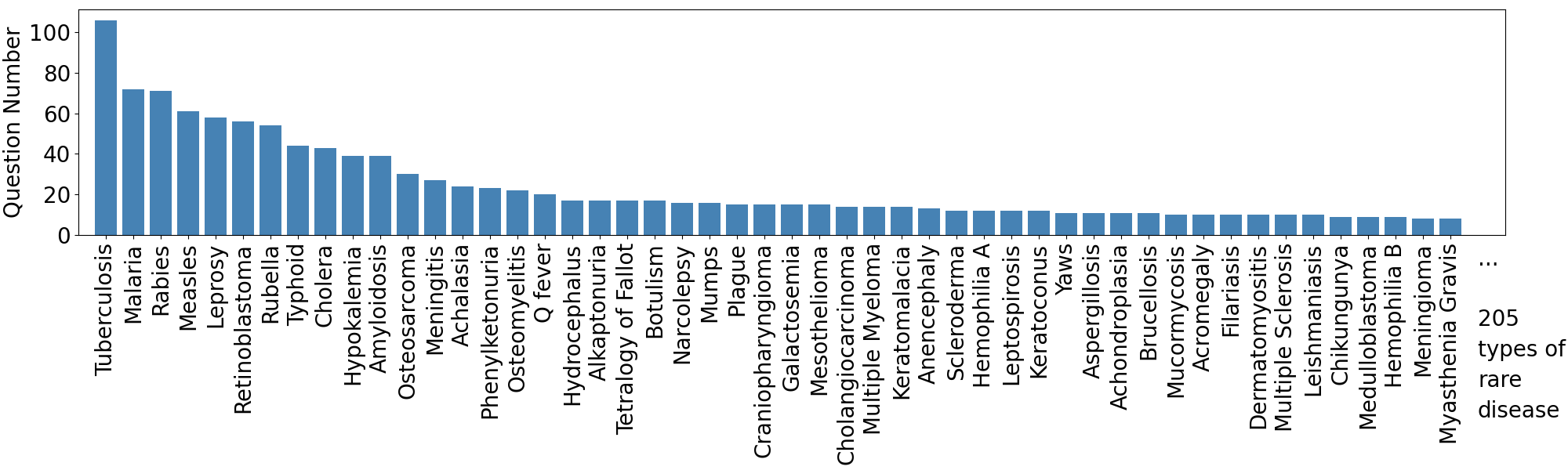

The ReDis-QA dataset covers 205 types of rare diseases; the most frequent disease has over 100 questions.

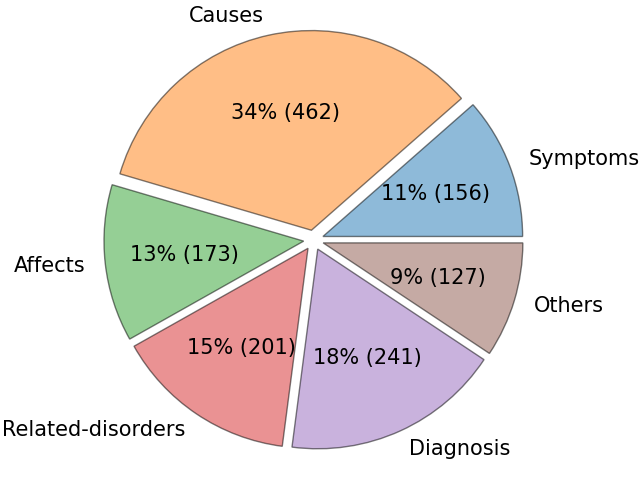

In the ReDis-QA dataset, 11%, 33%, 13%, 15%, and 18% of the questions concern the symptoms, causes, affects, related disorders, and diagnosis of rare diseases, respectively. The remaining 9% of the questions address other properties of the diseases.
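Statistics like these are plain frequency counts over per-question labels. The following is a minimal sketch of how such percentages, and the most frequent disease, could be computed from ordinary Python lists. The helper names are hypothetical, and building the label lists from ReDis-QA assumes you know which dataset fields hold each question's disease name and property type (not specified here).

```python
from collections import Counter


def property_percentages(labels):
    """Map each label to its share of all questions, in percent (rounded to 1 decimal)."""
    counts = Counter(labels)
    total = sum(counts.values())
    # An empty input yields an empty dict, so no division by zero occurs.
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


def most_frequent(labels):
    """Return (label, count) for the most common label; raises IndexError on empty input."""
    return Counter(labels).most_common(1)[0]


if __name__ == "__main__":
    # Toy labels for illustration only; these are not real ReDis-QA statistics.
    toy_properties = ["causes", "causes", "symptoms", "diagnosis"]
    toy_diseases = ["Fabry disease", "Gaucher disease", "Fabry disease"]
    print(property_percentages(toy_properties))
    print(most_frequent(toy_diseases))
```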

- Python Environment:
  - Create a virtual environment using Python 3.10.0.
- PyTorch Installation:
  - Install the version of PyTorch that is compatible with your system's CUDA version (e.g., PyTorch 2.4.0+cu121).
- Additional Libraries:
  - Install the remaining required libraries by running:

    ```bash
    pip install -r requirements.txt
    ```
- Git Large File Storage (Git LFS):
  - Git LFS is required to download and load the large corpora (Textbooks, Wikipedia, and PubMed) for the first time. Downloading ReCOP does not require Git LFS.
- Java:
  - Ensure Java is installed; the BM25 retriever requires it.
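Before running the benchmark scripts, it may help to confirm that these prerequisites are in place. Below is a minimal sketch that is not part of the repo: it uses only the standard library plus an optional PyTorch import. The helper name `check_environment` and its report keys are hypothetical, and it only checks that `java` and `git-lfs` executables are on `PATH`, not their versions.

```python
import shutil
import sys


def check_environment() -> dict:
    """Report whether the prerequisites listed above appear to be present (hypothetical helper)."""
    report = {
        # The README recommends Python 3.10.
        "python_3_10": sys.version_info[:2] == (3, 10),
        # Java is needed by the BM25 retriever.
        "java_on_path": shutil.which("java") is not None,
        # Git LFS is needed for the Textbooks, Wikipedia, and PubMed corpora.
        "git_lfs_on_path": shutil.which("git-lfs") is not None,
    }
    try:
        import torch  # installed separately to match your CUDA version

        report["torch_version"] = torch.__version__
        report["cuda_available"] = torch.cuda.is_available()
    except ImportError:
        report["torch_version"] = None
        report["cuda_available"] = False
    return report


if __name__ == "__main__":
    for name, value in check_environment().items():
        print(f"{name}: {value}")
```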

Loading ReDis-QA Dataset:

```python
from datasets import load_dataset

eval_dataset = load_dataset("guan-wang/ReDis-QA")['test']
```

Loading ReCOP Corpus:

```python
from datasets import load_dataset

corpus = load_dataset("guan-wang/ReCOP")['train']
```

Run LLMs without RAG on the ReDis-QA dataset:

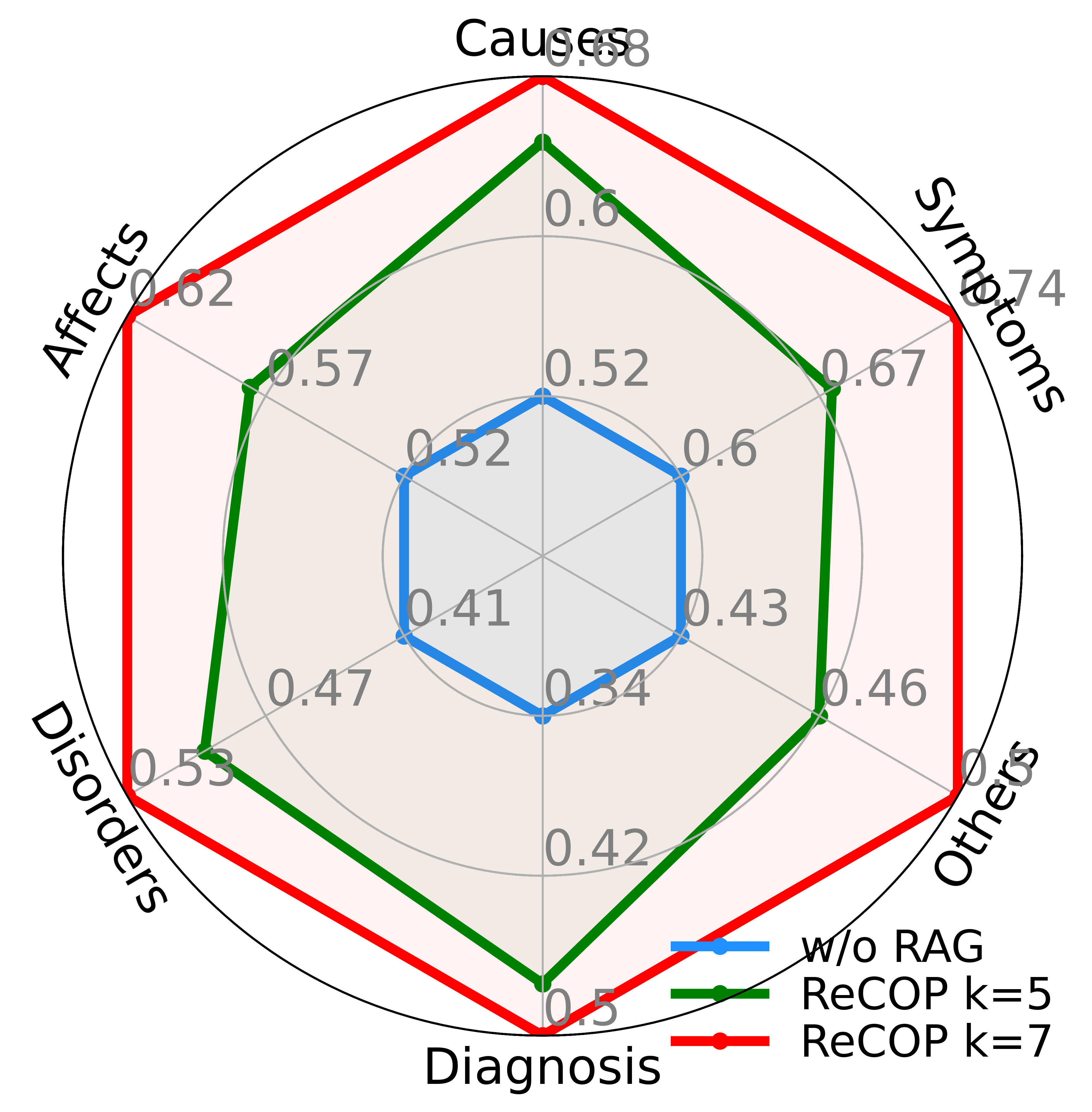

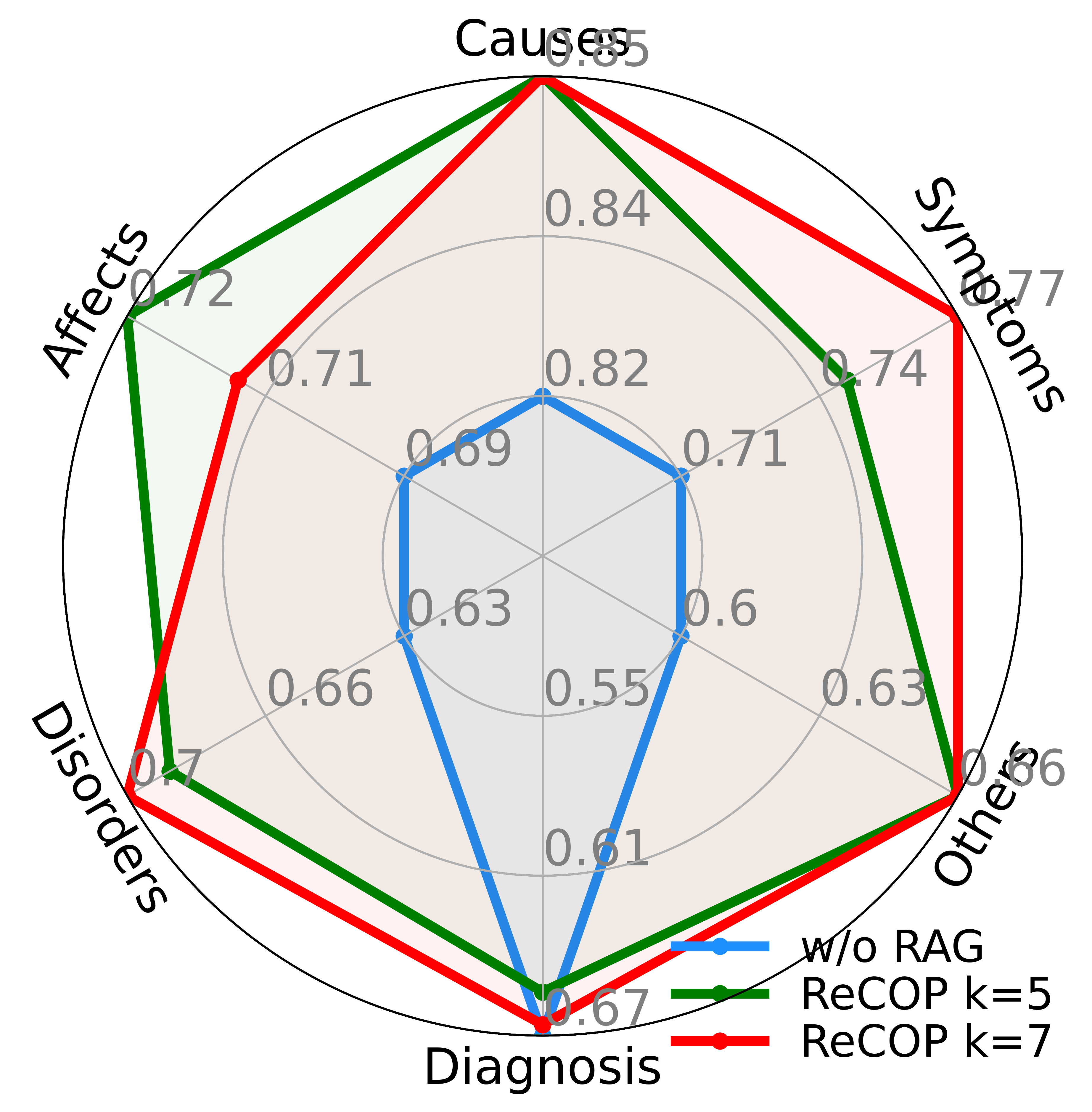

bash zero-shot-bench/scripts/run_exp.shThe accuracy of LLMs on each subset of properties is shown as follows:
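A per-property accuracy breakdown amounts to grouping predictions by the property each question targets and computing accuracy within each group. The following is a minimal sketch, assuming each record is a dict with the hypothetical keys `property`, `pred`, and `answer`; these names are illustrative only and are not the ReDis-QA schema or the output format of `run_exp.sh`.

```python
from collections import defaultdict


def accuracy_by_property(records):
    """Return {property: accuracy}; record keys 'property', 'pred', 'answer' are hypothetical."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for rec in records:
        prop = rec["property"]
        total[prop] += 1
        # Count an exact match between the predicted and gold option as correct.
        correct[prop] += int(rec["pred"] == rec["answer"])
    return {prop: correct[prop] / total[prop] for prop in total}


if __name__ == "__main__":
    # Made-up predictions for illustration.
    toy = [
        {"property": "symptoms", "pred": "A", "answer": "A"},
        {"property": "symptoms", "pred": "B", "answer": "A"},
        {"property": "causes", "pred": "C", "answer": "C"},
    ]
    print(accuracy_by_property(toy))
```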

Run RAG with ReCOP corpus using the meta-data retriever on the ResDis-QA dataset:

bash meta-data-bench/scripts/run_exp.shRun RAG with ReCOP and baseline corpora using MedCPT/BM25 retriever on the ResDis-QA dataset:

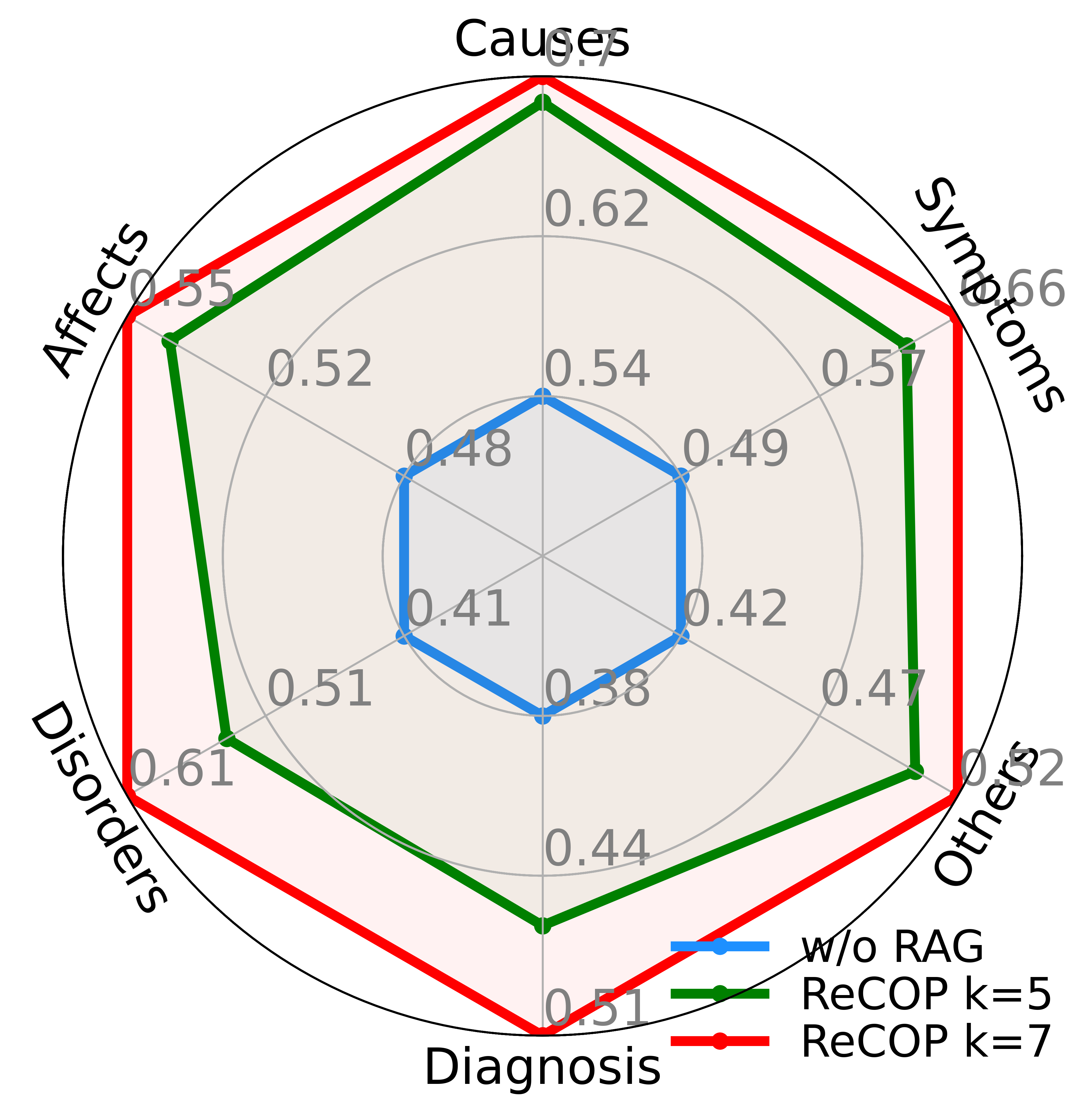

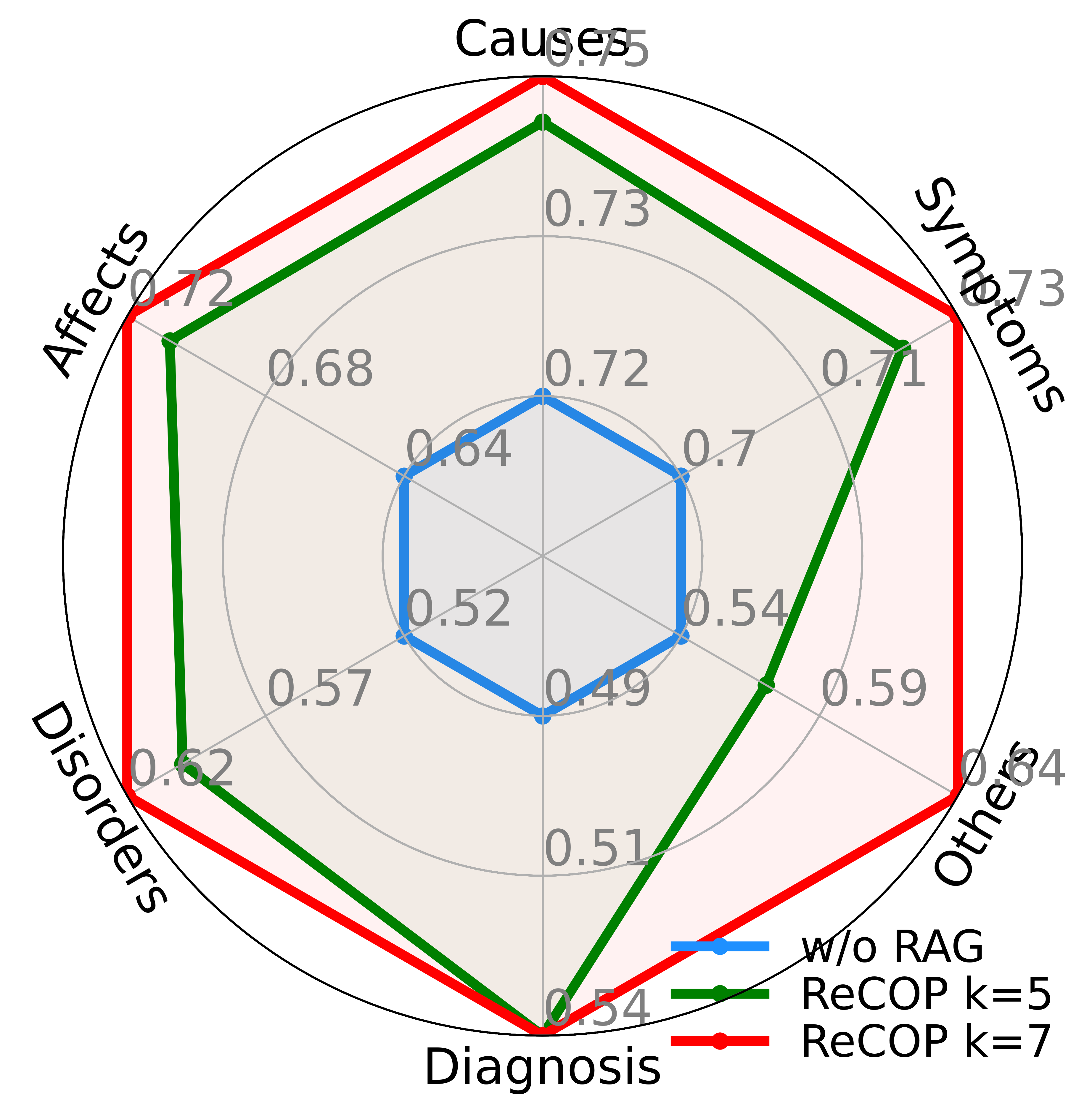

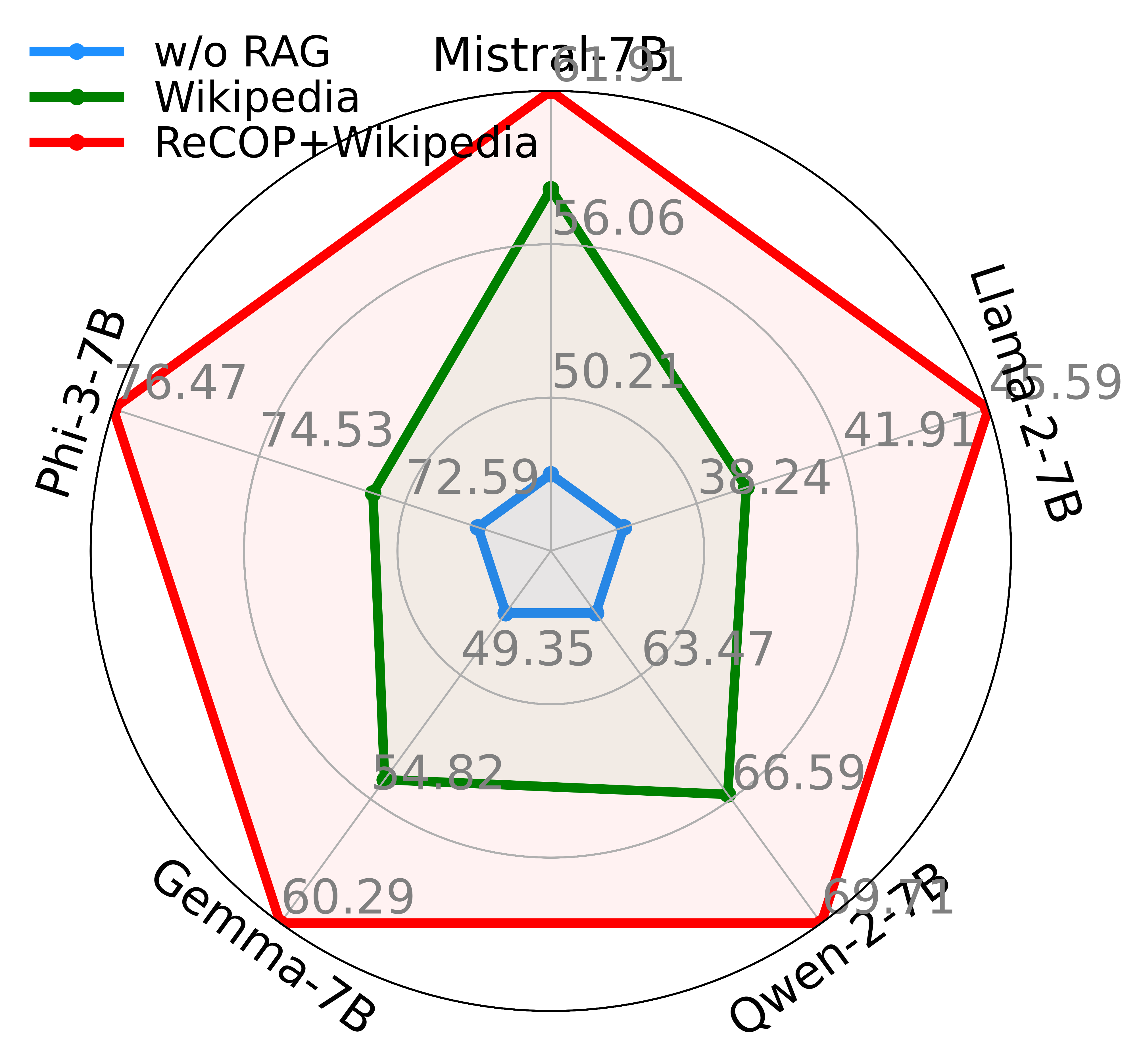

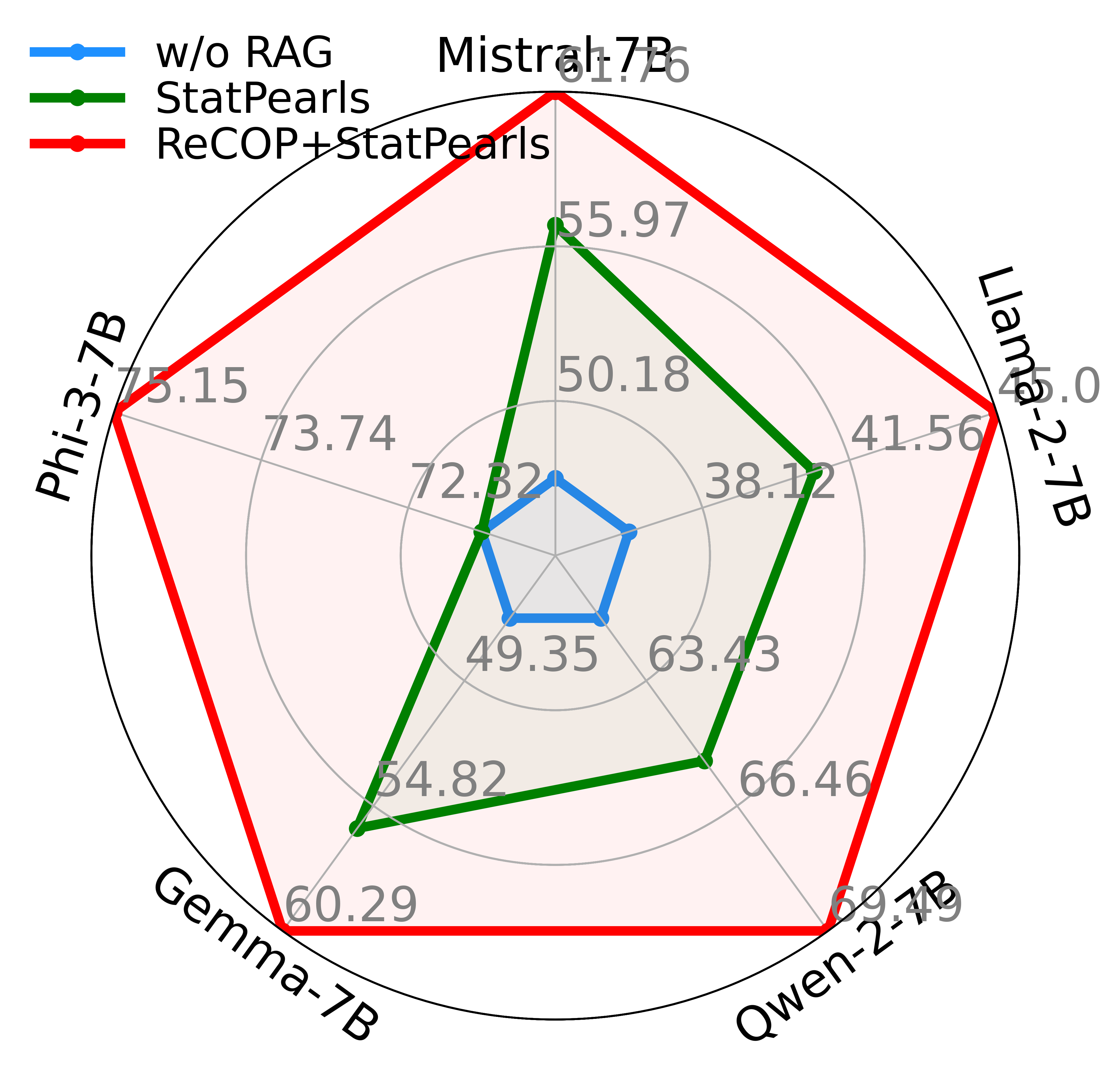

bash rag-bench/scripts/run_exp.shThe accuracy of RAG with ReCOP corpus is shown as follows:
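The BM25 retriever used here comes from MedRAG (which is why Java is required). To illustrate what BM25 ranking computes, here is a minimal, self-contained Okapi BM25 sketch in pure Python. It is not the MedRAG or repo implementation: tokenization is naive lowercase whitespace splitting, `k1=1.2` and `b=0.75` are common textbook defaults, and the example documents are made up.

```python
import math
from collections import Counter


class BM25:
    """Minimal Okapi BM25 over lowercased, whitespace-tokenized documents (illustrative only)."""

    def __init__(self, docs, k1=1.2, b=0.75):
        self.k1, self.b = k1, b
        self.docs = [doc.lower().split() for doc in docs]
        self.doc_len = [len(d) for d in self.docs]
        self.avgdl = sum(self.doc_len) / len(self.docs)
        self.tf = [Counter(d) for d in self.docs]  # term frequencies per document
        df = Counter(term for d in self.docs for term in set(d))  # document frequencies
        n = len(self.docs)
        # Smoothed IDF variant that stays positive even for very common terms.
        self.idf = {t: math.log(1 + (n - f + 0.5) / (f + 0.5)) for t, f in df.items()}

    def score(self, query, i):
        """BM25 score of document i for the query."""
        s = 0.0
        for term in query.lower().split():
            f = self.tf[i].get(term, 0)
            if f == 0:
                continue  # terms absent from the document contribute nothing
            norm = self.k1 * (1 - self.b + self.b * self.doc_len[i] / self.avgdl)
            s += self.idf[term] * f * (self.k1 + 1) / (f + norm)
        return s

    def top_k(self, query, k=3):
        """Return up to k (doc_index, score) pairs, highest score first."""
        scored = [(i, self.score(query, i)) for i in range(len(self.docs))]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


if __name__ == "__main__":
    docs = [
        "fabry disease is a lysosomal storage disorder",
        "cystic fibrosis affects the lungs",
        "gaucher disease is also a lysosomal disorder",
    ]
    print(BM25(docs).top_k("lysosomal storage", k=2))
```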

Run RAG with baseline corpus and combine with ReCOP on the ResDis-QA dataset:

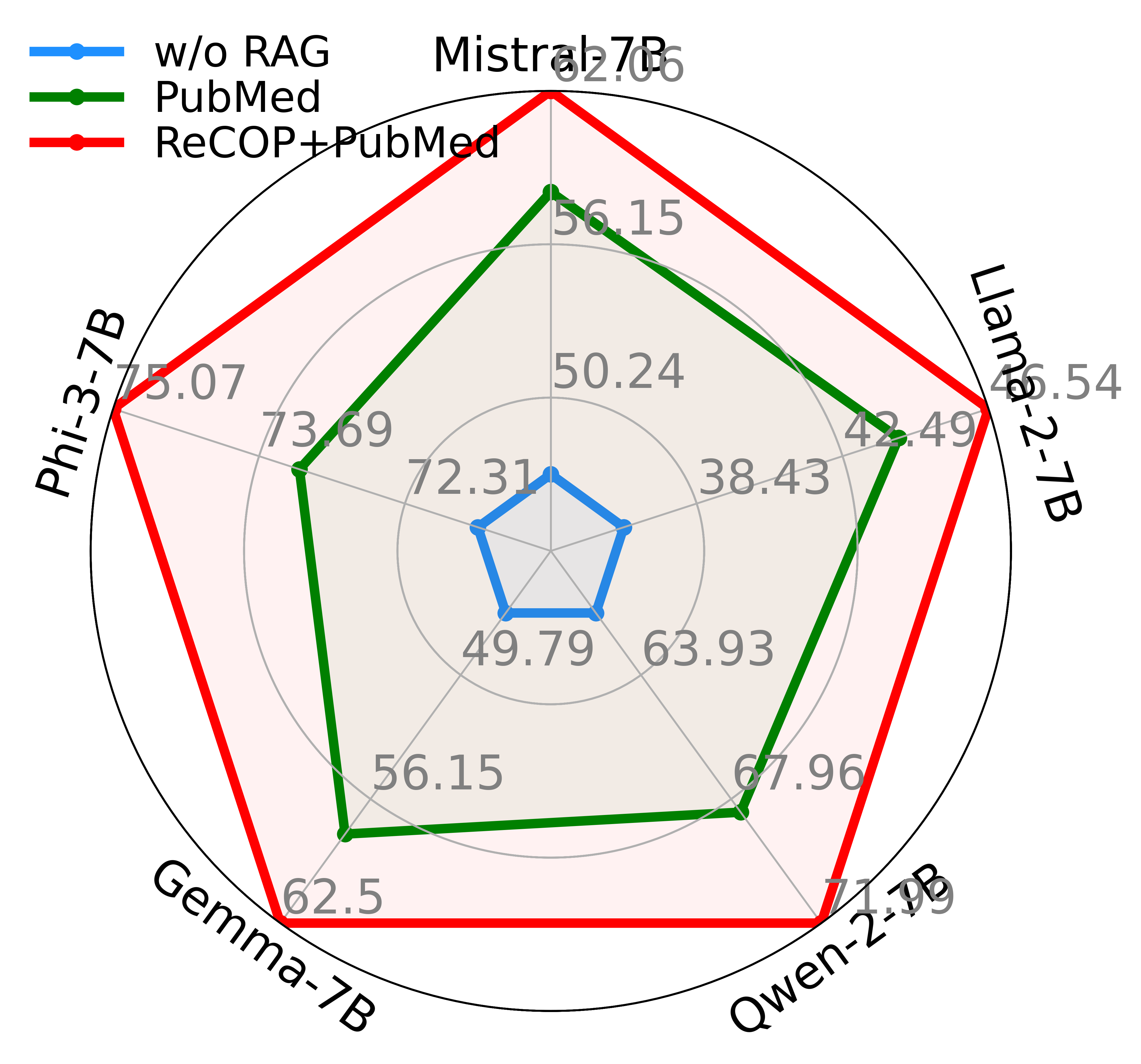

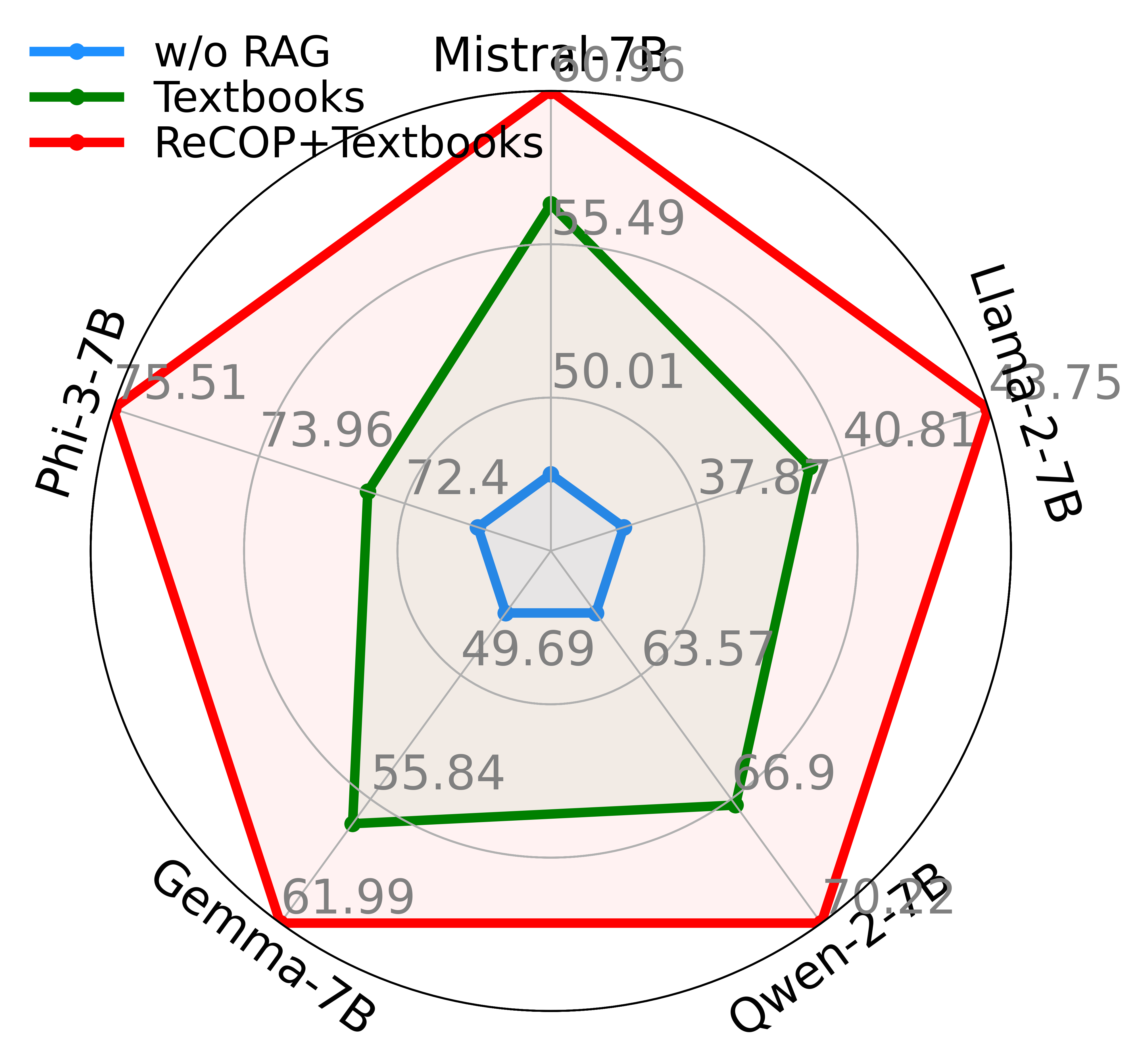

bash combine-corpora-bench/scripts/run_exp.shThe MedCPT, BM25 retrievers, and baseline corpus are sourced from the opensource repo MedRAG. Thanks to their contributions to the community!

If you find this work useful, please cite it:

```bibtex
@article{wang2024assessing,
  title={Assessing and Enhancing Large Language Models in Rare Disease Question-answering},
  author={Wang, Guanchu and Ran, Junhao and Tang, Ruixiang and Chang, Chia-Yuan and Chuang, Yu-Neng and Liu, Zirui and Braverman, Vladimir and Liu, Zhandong and Hu, Xia},
  journal={arXiv preprint arXiv:2408.08422},
  year={2024}
}
```