Welcome to the ultimate hub for on-device Large Language Models (LLMs)! This repository is your go-to resource for all things related to LLMs designed for on-device deployment. Whether you're a seasoned researcher, an innovative developer, or an enthusiastic learner, this comprehensive collection of cutting-edge knowledge is your gateway to understanding, leveraging, and contributing to the exciting world of on-device LLMs.

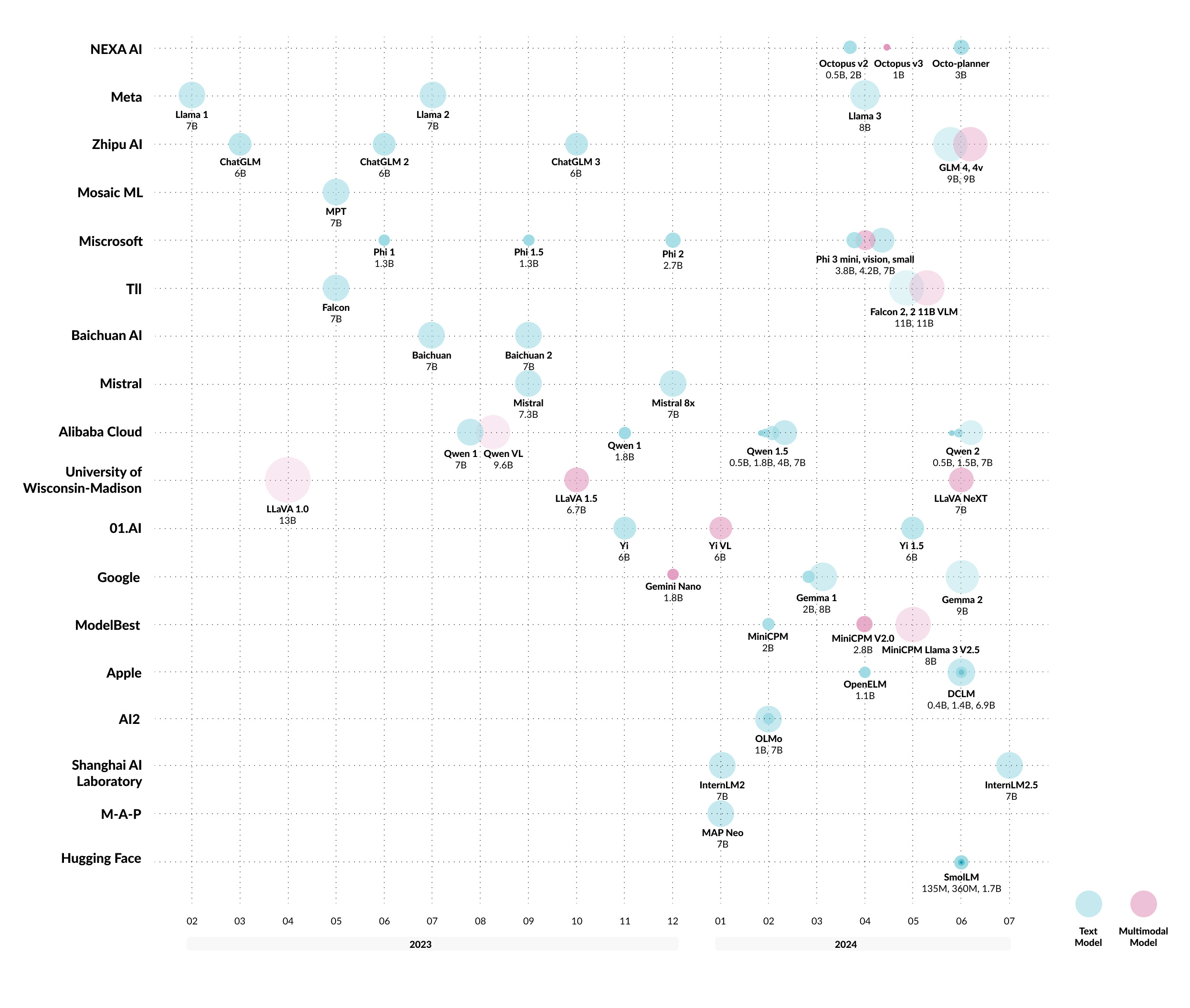

- 📊 Comprehensive overview of on-device LLM evolution with easy-to-understand visualizations

- 🧠 In-depth analysis of groundbreaking architectures and optimization techniques

- 📱 Curated list of state-of-the-art models and frameworks ready for on-device deployment

- 💡 Practical examples and case studies to inspire your next project

- 🔄 Regular updates to keep you at the forefront of rapid advancements in the field

- 🤝 Active community of researchers and practitioners sharing insights and experiences

Awesome LLMs on Device: A Comprehensive Survey

- TinyLlama: An open-source small language model
  arXiv 2024 [Paper] [Github]

- MobileVLM V2: Faster and Stronger Baseline for Vision Language Model
  arXiv 2024 [Paper] [Github]

- MobileAIBench: Benchmarking LLMs and LMMs for On-Device Use Cases
  arXiv 2024 [Paper]

- Octopus series papers
  arXiv 2024 [Octopus] [Octopus v2] [Octopus v3] [Octopus v4] [Github]

- The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits
  arXiv 2024 [Paper]

- AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration
  arXiv 2023 [Paper] [Github]

- The case for 4-bit precision: k-bit inference scaling laws
  ICML 2023 [Paper]

- Challenges and applications of large language models
  arXiv 2023 [Paper]

- MiniLLM: Knowledge distillation of large language models
  ICLR 2024 [Paper] [Github]

- GPTQ: Accurate post-training quantization for generative pre-trained transformers
  ICLR 2023 [Paper] [Github]

- GPT3.int8(): 8-bit matrix multiplication for transformers at scale
  NeurIPS 2022 [Paper]
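The GPT3.int8() entry above pairs vector-wise int8 quantization with a full-precision path for outlier features. The following is a minimal pure-Python sketch of that idea, not the bitsandbytes implementation: the function names are hypothetical, and the outlier threshold default of 6.0 is an assumption you should treat as tunable.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def quantize_absmax(vec: List[float]) -> Tuple[List[int], float]:
    """Symmetric int8 quantization of one vector: the largest |v| maps to 127."""
    amax = max((abs(v) for v in vec), default=0.0)
    scale = amax / 127 if amax > 0 else 1.0
    return [round(v / scale) for v in vec], scale


def int8_matmul_with_outliers(x: Matrix, w: Matrix, threshold: float = 6.0) -> Matrix:
    """Sketch of a mixed-precision matmul X (n x d) @ W (d x m).

    Hidden dimensions where any |x| >= threshold (hypothetical default) are
    treated as outliers and multiplied in full precision. The remaining
    dimensions use row-wise absmax quantization for X, column-wise for W,
    and integer accumulation.
    """
    n, d, m = len(x), len(w), len(w[0])
    outlier = [any(abs(row[j]) >= threshold for row in x) for j in range(d)]
    regular = [j for j in range(d) if not outlier[j]]
    # Quantize each row of X and each column of W over the regular dimensions.
    xq = [quantize_absmax([row[j] for j in regular]) for row in x]
    wq = [quantize_absmax([w[j][c] for j in regular]) for c in range(m)]
    out = [[0.0] * m for _ in range(n)]
    for i in range(n):
        qi, si = xq[i]
        for c in range(m):
            qc, sc = wq[c]
            acc = sum(a * b for a, b in zip(qi, qc))  # integer dot product
            # Outlier dimensions bypass quantization entirely.
            fp = sum(x[i][j] * w[j][c] for j in range(d) if outlier[j])
            out[i][c] = acc * si * sc + fp  # dequantize, then add the fp part
    return out
```

A real kernel runs the int8 dot products on integer hardware units; the nested loops here only make the arithmetic visible.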

- OpenELM: An Efficient Language Model Family with Open Training and Inference Framework
  ICML 2024 [Paper] [Github]

- Ferret-v2: An Improved Baseline for Referring and Grounding with Large Language Models
  arXiv 2024 [Paper]

- Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone
  arXiv 2024 [Paper]

- Exploring post-training quantization in LLMs from comprehensive study to low rank compensation
  AAAI 2024 [Paper]

- Matrix compression via randomized low rank and low precision factorization
  NeurIPS 2023 [Paper] [Github]

- MNN: A lightweight deep neural network inference engine
  2024 [Github]

- PowerInfer-2: Fast Large Language Model Inference on a Smartphone
  arXiv 2024 [Paper] [Github]

- llama.cpp: A lightweight library for efficient LLM inference on various hardware with minimal setup
  2023 [Github]

- PowerInfer: Fast large language model serving with a consumer-grade GPU
  arXiv 2023 [Paper] [Github]

- mllm: Fast and lightweight multimodal LLM inference engine for mobile and edge devices
  2023 [Github]

| Model | Performance | Computational Efficiency | Memory Requirements |
|---|---|---|---|
| MobileLLM | High accuracy, optimized for sub-billion parameter models | Embedding sharing, grouped-query attention | Reduced model size due to deep and thin structures |
| EdgeShard | Up to 50% latency reduction, 2× throughput improvement | Collaborative edge-cloud computing, optimal shard placement | Distributed model components reduce individual device load |
| LLMCad | Up to 9.3× speedup in token generation | Generate-then-verify, token tree generation | Smaller LLM for token generation, larger LLM for verification |
| Any-Precision LLM | Supports multiple precisions efficiently | Post-training quantization, memory-efficient design | Substantial memory savings with versatile model precisions |
| Breakthrough Memory | Up to 4.5× performance improvement | PIM and PNM technologies enhance memory processing | Enhanced memory bandwidth and capacity |
| MELTing Point | Provides systematic performance evaluation | Analyzes impacts of quantization, efficient model evaluation | Evaluates memory and computational efficiency trade-offs |
| LLMaaS on device | Significantly reduces context-switching latency | Stateful execution, fine-grained KV cache compression | Efficient memory management with tolerance-aware compression and swapping |
| LocMoE | Reduces training time per epoch by up to 22.24% | Orthogonal gating weights, locality-based expert regularization | Minimizes communication overhead with group-wise All-to-All and recompute pipeline |
| EdgeMoE | Significant performance improvements on edge devices | Expert-wise bitwidth adaptation, preloading experts | Efficient memory management through expert-by-expert computation reordering |
| JetMoE | Outperforms Llama2-7B and Llama2-13B-Chat with fewer active parameters | Reduces inference computation by 70% using sparse activation | 8B total parameters, only 2B activated per input token |
| Pangu- | Neural architecture, parameter initialization, and optimization strategy for billion-level parameter models | Embedding sharing, tokenizer compression | Reduced model size via architecture tweaking |

- AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration
  arXiv 2024 [Paper] [Github]

- MobileLLM: Optimizing Sub-billion Parameter Language Models for On-Device Use Cases
  arXiv 2024 [Paper] [Github]

- EdgeShard: Efficient LLM Inference via Collaborative Edge Computing
  arXiv 2024 [Paper]

- LLMCad: Fast and scalable on-device large language model inference
  arXiv 2023 [Paper]
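LLMCad's generate-then-verify scheme (summarized in the table above) lets a smaller LLM draft tokens that a larger LLM then checks. The sketch below shows only the simplest greedy form of that idea, which is essentially speculative decoding; it omits LLMCad's token-tree generation. The "models" are hypothetical callables that return the next token id for a prefix, and `generate_then_verify` is an illustrative name, not an API from the paper.

```python
from typing import Callable, List

# Hypothetical stand-in for a language model: maps a token prefix to the
# greedily chosen next token id. Real models return probability distributions.
NextToken = Callable[[List[int]], int]


def generate_then_verify(draft: NextToken, target: NextToken,
                         prompt: List[int], num_new: int, k: int = 4) -> List[int]:
    """Greedy draft-and-verify decoding (simplified speculative-decoding sketch).

    The small `draft` model proposes up to `k` tokens; the large `target` model
    keeps the longest prefix it agrees with, then contributes one token itself.
    The result equals greedy decoding with `target` alone.
    """
    seq = list(prompt)
    goal = len(prompt) + num_new
    while len(seq) < goal:
        # 1. Draft: the cheap model proposes tokens autoregressively.
        proposal: List[int] = []
        for _ in range(min(k, goal - len(seq))):
            proposal.append(draft(seq + proposal))
        # 2. Verify: the expensive model checks each proposed position.
        #    (A real system scores all positions in one batched forward pass.)
        accepted: List[int] = []
        for tok in proposal:
            expected = target(seq + accepted)
            if tok != expected:
                # First disagreement: keep the target's token and stop.
                accepted.append(expected)
                break
            accepted.append(tok)
        else:
            # Every draft was accepted; the target adds a bonus token if room remains.
            if len(seq) + len(accepted) < goal:
                accepted.append(target(seq + accepted))
        seq.extend(accepted)
    return seq[:goal]
```

Each loop iteration always commits at least one token, so decoding makes progress even when the draft model is always wrong; the speedup comes from the cases where several drafted tokens are accepted per verification step.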

- The Breakthrough Memory Solutions for Improved Performance on LLM Inference
  IEEE Micro 2024 [Paper]

- MELTing point: Mobile Evaluation of Language Transformers
  arXiv 2024 [Paper] [Github]

- LLM as a system service on mobile devices
  arXiv 2024 [Paper]

- LocMoE: A low-overhead MoE for large language model training
  arXiv 2024 [Paper]

- EdgeMoE: Fast on-device inference of MoE-based large language models
  arXiv 2023 [Paper]
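LocMoE, EdgeMoE, and the JetMoE row in the table above all build on sparsely activated mixture-of-experts (MoE) layers, where a router sends each token to only a few experts. A minimal, framework-free sketch of top-k routing for a single token follows; the choice of k=2, the linear router, and the toy experts are illustrative assumptions, not the exact design of any listed paper.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical expert: in a real model this is a feed-forward sub-network.
Expert = Callable[[List[float]], List[float]]


def top_k_moe(x: List[float], router_weights: Sequence[Sequence[float]],
              experts: Sequence[Expert], k: int = 2) -> List[float]:
    """Sparse MoE layer for one token vector `x` (illustrative sketch).

    Only the k highest-scoring experts execute, so per-token compute scales
    with k rather than with the total number of experts.
    """
    # Router: one logit per expert (dot product of token vector and router row).
    logits = [sum(w_i * x_i for w_i, x_i in zip(row, x)) for row in router_weights]
    # Keep the k experts with the largest logits.
    top = sorted(range(len(experts)), key=lambda e: logits[e], reverse=True)[:k]
    # Softmax over the selected logits only (max subtracted for stability).
    m = max(logits[e] for e in top)
    exps = {e: math.exp(logits[e] - m) for e in top}
    total = sum(exps.values())
    # Gate-weighted sum of the selected experts; the others are never called.
    out = [0.0] * len(x)
    for e in top:
        y = experts[e](x)
        gate = exps[e] / total
        out = [o + gate * y_i for o, y_i in zip(out, y)]
    return out
```

Using the JetMoE figures from the table (8B total parameters, 2B activated per input token), about 25% of the parameters take part in each token's computation, which is the source of the compute savings attributed to sparse activation.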

- Any-Precision LLM: Low-Cost Deployment of Multiple, Different-Sized LLMs
  arXiv 2024 [Paper] [Github]

- On the viability of using LLMs for SW/HW co-design: An example in designing CiM DNN accelerators
  IEEE SOCC 2023 [Paper]

- The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits
  arXiv 2024 [Paper]

- AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration
  arXiv 2024 [Paper] [Github]

- GPTQ: Accurate post-training quantization for generative pre-trained transformers
  ICLR 2023 [Paper] [Github]

- GPT3.int8(): 8-bit matrix multiplication for transformers at scale
  NeurIPS 2022 [Paper]
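The "1.58 bits" in the first title above comes from ternary weights: each weight takes one of three values {-1, 0, +1}, and log2(3) ≈ 1.58. The sketch below follows the absmean quantization described for BitNet b1.58 (scale by the mean absolute weight, round, clip to [-1, 1]). It is a per-tensor illustration in plain Python; the function names are hypothetical and it assumes a non-empty weight list.

```python
import math
from typing import List, Tuple


def absmean_ternary(weights: List[float], eps: float = 1e-8) -> Tuple[List[int], float]:
    """Quantize a weight tensor to {-1, 0, +1} with a single absmean scale.

    gamma = mean(|w|); q = clip(round(w / (gamma + eps)), -1, 1).
    Note: Python's round() uses round-half-to-even on exact ties.
    """
    gamma = sum(abs(w) for w in weights) / len(weights)
    q = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return q, gamma


def dequantize(q: List[int], gamma: float) -> List[float]:
    """Approximate reconstruction of the original weights: q * gamma."""
    return [v * gamma for v in q]


# Each ternary weight carries log2(3) bits of information, hence "1.58 bits".
BITS_PER_WEIGHT = math.log2(3)
```

Because the quantized weights are only -1, 0, or +1, a matrix-vector product with them reduces to additions and subtractions followed by one multiplication by the scale, which is what makes this format attractive for on-device inference.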

- Challenges and applications of large language models

arXiv 2023 [Paper]

- MiniLLM: Knowledge distillation of large language models

ICLR 2024 [Paper]

- Exploring post-training quantization in LLMs from comprehensive study to low rank compensation
  AAAI 2024 [Paper]

- Matrix compression via randomized low rank and low precision factorization
  NeurIPS 2023 [Paper] [Github]

- llama.cpp: A lightweight library for efficient LLM inference on various hardware with minimal setup. [Github]

- MNN: A blazing fast, lightweight deep learning framework. [Github]

- PowerInfer: A CPU/GPU LLM inference engine leveraging activation locality for your device. [Github]

- ExecuTorch: A platform for On-device AI across mobile, embedded and edge for PyTorch. [Github]

- MediaPipe: A suite of tools and libraries that enables quick application of AI and ML techniques. [Github]

- MLC-LLM: A machine learning compiler and high-performance deployment engine for large language models. [Github]

- vLLM: A fast and easy-to-use library for LLM inference and serving. [Github]

- OpenLLM: An open platform for operating large language models (LLMs) in production. [Github]

- The Breakthrough Memory Solutions for Improved Performance on LLM Inference
  IEEE Micro 2024 [Paper]

- Aquabolt-XL: Samsung HBM2-PIM with in-memory processing for ML accelerators and beyond
  IEEE Hot Chips 2021 [Paper]

- Text Generation for Messaging: Gboard Smart Reply

- Translation: LLMCad

- Meeting Summarization

- Healthcare application: BioMistral-7B, HuatuoGPT

- Research Support

- Companion Robot

- Disability Support: Octopus v3, Talkback with Gemini Nano

- Autonomous Vehicles: DriveVLM

| Model | Institute | Paper |
|---|---|---|
| Gemini Nano | Google | Gemini: A Family of Highly Capable Multimodal Models |
| Octopus series model | Nexa AI | Octopus v2: On-device language model for super agent; Octopus v3: Technical Report for On-device Sub-billion Multimodal AI Agent; Octopus v4: Graph of language models; Octopus: On-device language model for function calling of software APIs |
| OpenELM and Ferret-v2 | Apple | OpenELM is an efficient open language model family with an open training and inference framework. Ferret-v2 significantly improves upon its predecessor, introducing enhanced visual processing capabilities and an advanced training regimen. |
| Phi series | Microsoft | Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone |
| MiniCPM | Tsinghua University | A GPT-4V Level Multimodal LLM on Your Phone |
| Gemma2-9B | Google | Gemma 2: Improving Open Language Models at a Practical Size |
| Qwen2-0.5B | Alibaba Group | Qwen Technical Report |

- MIT: TinyML and Efficient Deep Learning Computing

- Harvard: Machine Learning Systems

- DeepLearning.AI: Introduction to On-Device AI

We believe in the power of community! If you're passionate about on-device AI and want to contribute to this ever-growing knowledge hub, here's how you can get involved:

- Fork the repository

- Create a new branch for your brilliant additions

- Make your updates and push your changes

- Submit a pull request and become part of the on-device LLM movement

If our hub fuels your research or powers your projects, we'd be thrilled if you could cite our paper here:

@article{xu2024device,
  title={On-Device Language Models: A Comprehensive Review},
  author={Xu, Jiajun and Li, Zhiyuan and Chen, Wei and Wang, Qun and Gao, Xin and Cai, Qi and Ling, Ziyuan},
  journal={arXiv preprint arXiv:2409.00088},
  year={2024}
}

This project is open-source and available under the MIT License. See the LICENSE file for more details.

Don't just read about the future of AI – be part of it. Star this repo, spread the word, and let's push the boundaries of on-device LLMs together! 🚀🌟