This is the official PyTorch implementation code for NeWCRFs. For technical details, please refer to:

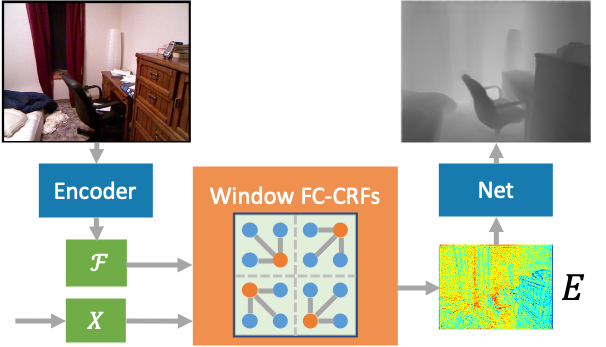

NeW CRFs: Neural Window Fully-connected CRFs for Monocular Depth Estimation

Weihao Yuan, Xiaodong Gu, Zuozhuo Dai, Siyu Zhu, Ping Tan

CVPR 2022

[Project Page] | [Paper]

If you find this code useful in your research, please cite:

@inproceedings{yuan2022newcrfs,
  title={NeWCRFs: Neural Window Fully-connected CRFs for Monocular Depth Estimation},
  author={Yuan, Weihao and Gu, Xiaodong and Dai, Zuozhuo and Zhu, Siyu and Tan, Ping},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  pages={},
  year={2022}
}

conda create -n newcrfs python=3.8

conda activate newcrfs

conda install pytorch=1.10.0 torchvision cudatoolkit=11.1

pip install matplotlib tqdm tensorboardX timm mmcv
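After installation, it can help to confirm that the packages are visible to the interpreter before starting a long run. The following is a minimal sketch that is not part of the repository; it assumes the import names `torch`, `torchvision`, `matplotlib`, `tqdm`, `tensorboardX`, `timm`, and `mmcv`, and uses only the standard library.

```python
import importlib.util

# Import names assumed to correspond to the packages installed above.
REQUIRED = ["torch", "torchvision", "matplotlib", "tqdm", "tensorboardX", "timm", "mmcv"]


def missing_packages(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages are importable.")
```

Run it inside the activated `newcrfs` environment; any name it reports can then be installed individually.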

Prepare the KITTI and NYUv2 datasets according to the instructions here, then set the data paths in the config files to your dataset locations.

First download the pretrained encoder backbone from here, and then modify the pretrain path in the config files.
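Editing the paths by hand works fine, but a small helper can be convenient when several config files need the same change. The sketch below assumes that each config file is plain text with one `--option value` pair per line; the option names in the demonstration (`--dataset`, `--data_path`) and the paths are hypothetical, so check the actual files under `configs/` before adapting it.

```python
import tempfile
from pathlib import Path


def set_config_values(config_file, updates):
    """Rewrite `--option value` lines in place; return the options that were changed."""
    path = Path(config_file)
    changed = []
    new_lines = []
    for line in path.read_text().splitlines():
        parts = line.split(maxsplit=1)
        if parts and parts[0] in updates:
            # Replace the whole line with the option and its new value.
            new_lines.append(f"{parts[0]} {updates[parts[0]]}")
            changed.append(parts[0])
        else:
            new_lines.append(line)
    path.write_text("\n".join(new_lines) + "\n")
    return changed


# Demonstration on a throwaway file with hypothetical option names and paths.
with tempfile.TemporaryDirectory() as tmp:
    demo = Path(tmp) / "arguments_demo.txt"
    demo.write_text("--dataset nyu\n--data_path /old/path\n")
    changed = set_config_values(demo, {"--data_path": "/new/path"})
    result = demo.read_text()
```

Options that are not present in the file are left out of the returned list, which makes a misspelled option name easy to spot.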

Train the NYUv2 model:

python newcrfs/train.py configs/arguments_train_nyu.txt

Train the KITTI model:

python newcrfs/train.py configs/arguments_train_kittieigen.txt
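Both commands hand the script a single text file of options. One common way to consume such a file with the standard library is `argparse` with `fromfile_prefix_chars`; the sketch below illustrates that mechanism only. It is not taken from `train.py`, it assumes one `--option value` pair per line, and the options it defines are illustrative.

```python
import argparse
import tempfile
from pathlib import Path


class ArgsFileParser(argparse.ArgumentParser):
    """ArgumentParser that accepts `--option value` on a single line of an args file."""

    def convert_arg_line_to_args(self, arg_line):
        # Split each line on whitespace; skip blank lines and '#' comments.
        stripped = arg_line.strip()
        if not stripped or stripped.startswith("#"):
            return []
        return stripped.split()


def build_parser():
    parser = ArgsFileParser(fromfile_prefix_chars="@")
    # Illustrative options only; the real scripts define their own.
    parser.add_argument("--dataset", type=str, default="nyu")
    parser.add_argument("--max_depth", type=float, default=10.0)
    parser.add_argument("--batch_size", type=int, default=8)
    return parser


with tempfile.TemporaryDirectory() as tmp:
    args_file = Path(tmp) / "arguments_demo.txt"
    args_file.write_text("# demo config\n--dataset kitti\n--max_depth 80\n")
    # Prefixing the file name with '@' tells argparse to read arguments from it.
    args = build_parser().parse_args([f"@{args_file}"])
```

Options missing from the file fall back to their defaults, so a config file only needs to list the values that differ.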

Evaluate the NYUv2 model:

python newcrfs/eval.py configs/arguments_eval_nyu.txt

Evaluate the KITTI model:

python newcrfs/eval.py configs/arguments_eval_kittieigen.txt

| Model | Abs.Rel. | Sqr.Rel. | RMSE | RMSE log | a1 | a2 | a3 | SILog |
|---|---|---|---|---|---|---|---|---|
| NYUv2 | 0.0952 | 0.0443 | 0.3310 | 0.1185 | 0.923 | 0.992 | 0.998 | 9.1023 |
| KITTI_Eigen | 0.0520 | 0.1482 | 2.0716 | 0.0780 | 0.975 | 0.997 | 0.999 | 6.9859 |
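For readers unfamiliar with the column names, the sketch below computes the metrics using the definitions commonly found in the monocular depth estimation literature. It assumes that `eval.py` follows these same definitions, which this README does not state; the function name `depth_metrics` is hypothetical, and the inputs are plain lists of positive depth values.

```python
import math


def depth_metrics(gt, pred):
    """Compute common depth-estimation metrics for paired positive depth values."""
    n = len(gt)
    ratios = [max(g / p, p / g) for g, p in zip(gt, pred)]
    log_err = [math.log(p) - math.log(g) for g, p in zip(gt, pred)]
    sq_err = [(g - p) ** 2 for g, p in zip(gt, pred)]

    mean_log = sum(log_err) / n
    mean_log_sq = sum(e * e for e in log_err) / n
    return {
        "abs_rel": sum(abs(g - p) / g for g, p in zip(gt, pred)) / n,
        "sq_rel": sum(s / g for s, g in zip(sq_err, gt)) / n,
        "rmse": math.sqrt(sum(sq_err) / n),
        "rmse_log": math.sqrt(mean_log_sq),
        # Fraction of pixels whose ratio max(gt/pred, pred/gt) is below 1.25^k.
        "a1": sum(r < 1.25 for r in ratios) / n,
        "a2": sum(r < 1.25 ** 2 for r in ratios) / n,
        "a3": sum(r < 1.25 ** 3 for r in ratios) / n,
        # Scale-invariant log error times 100; the clamp guards against rounding.
        "silog": math.sqrt(max(mean_log_sq - mean_log ** 2, 0.0)) * 100,
    }


metrics = depth_metrics(gt=[1.0, 2.0], pred=[2.0, 2.0])
perfect = depth_metrics(gt=[1.0, 2.0, 3.0], pred=[1.0, 2.0, 3.0])
```

Lower is better for the error columns (Abs.Rel., Sqr.Rel., RMSE, RMSE log, SILog), while a1 to a3 are accuracies where higher is better.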

Test images with the indoor model:

python newcrfs/test.py --data_path datasets/test_data --dataset nyu --filenames_file data_splits/test_list.txt --checkpoint_path model_nyu.ckpt --max_depth 10 --save_viz

Play with the live demo from a video or your webcam:

python newcrfs/demo.py --dataset nyu --checkpoint_path model_zoo/model_nyu.ckpt --max_depth 10 --video video.mp4

Thanks to Jin Han Lee for open-sourcing the excellent work BTS. Thanks to Microsoft Research Asia for open-sourcing the excellent work Swin Transformer.