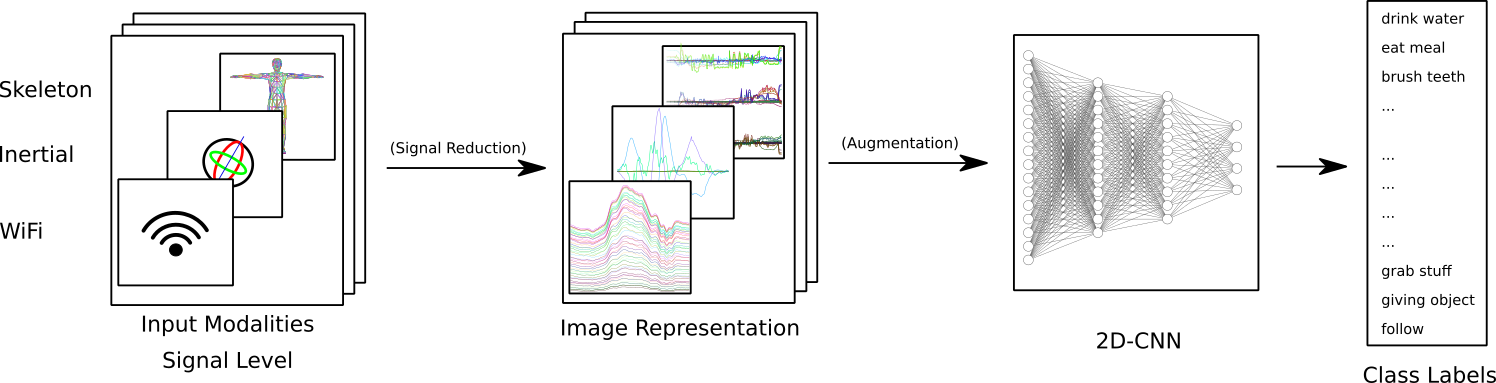

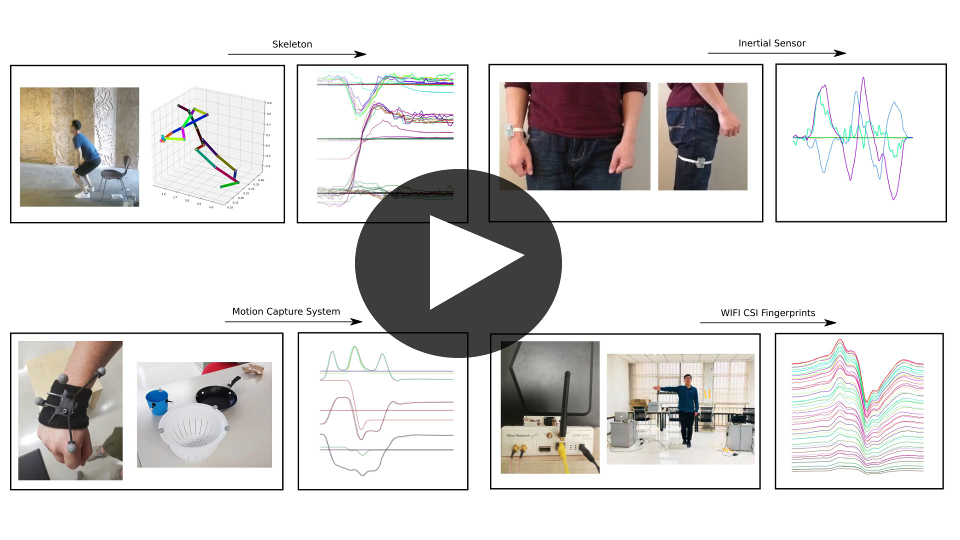

This repository contains the action recognition approach as presented in the Gimme Signals paper.
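The approach encodes multivariate signal sequences, such as skeleton joint trajectories, as images that an image classifier then learns from. The pure-Python sketch below illustrates that idea in simplified form and is not the repository's encoding. It assumes that each channel is min-max normalized, resampled to the image width by nearest-neighbour indexing, and drawn as a connected line whose pixels store a per-channel color index. The function name `encode_signals` is hypothetical.

```python
# Simplified sketch (assumptions, not this repository's code): encode a
# multivariate signal sequence as a grid of color indices, one line per channel.
from typing import List, Sequence


def encode_signals(signals: Sequence[Sequence[float]], width: int, height: int) -> List[List[int]]:
    """Return a height x width grid; 0 is background, k + 1 marks channel k."""
    image = [[0] * width for _ in range(height)]
    for k, channel in enumerate(signals):
        lo, hi = min(channel), max(channel)
        span = (hi - lo) or 1.0  # avoid division by zero for constant signals
        prev = None
        for x in range(width):
            # Nearest-neighbour resampling of the time axis onto the image width.
            t = round(x * (len(channel) - 1) / max(width - 1, 1))
            y = round((channel[t] - lo) / span * (height - 1))
            row = height - 1 - y  # larger values are drawn closer to the top
            if prev is not None:
                # Fill the vertical gap to the previous column so the line stays connected.
                step = 1 if row >= prev else -1
                for r in range(prev, row + step, step):
                    image[r][x] = k + 1
            image[row][x] = k + 1
            prev = row
    return image
```

For example, `encode_signals([[0, 1, 2, 3], [3, 2, 1, 0]], width=4, height=4)` draws a rising line with index 1 and a falling line with index 2. Where the lines cross, the later channel overwrites the earlier one; a fuller implementation might instead use distinct RGB colors or blending.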

In case the video does not play, you can download it here.

@inproceedings{Memmesheimer2020GSD,
  author = {Memmesheimer, Raphael and Theisen, Nick and Paulus, Dietrich},
  title = {Gimme Signals: Discriminative signal encoding for multimodal activity recognition},
  year = {2020},
  booktitle = {2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  address = {Las Vegas, NV, USA},
  publisher = {IEEE},
  doi = {10.1109/IROS45743.2020.9341699},
  isbn = {978-1-7281-6213-3},
}

- pytorch, torchvision, pytorch-lightning, hydra-core

pip install -r requirements.txt

If you run into a CUDA kernel error, the following command installs a supported combination of torch and torchvision (CUDA 11.3 builds):

pip install torch==1.11.0+cu113 torchvision==0.12.0+cu113 -f https://download.pytorch.org/whl/torch_stable.html

Example code to generate representations for the NTU dataset:

python generate_representation_ntu.py <ntu_skeleton_dir> $DATASET_FOLDER <split>

where `<split>` is one of cross_subject, cross_setup, or one_shot.
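To generate representations for several splits in one go, the command above can be wrapped in a small Python helper. This is a sketch under assumptions: `representation_command` and `generate_all` are hypothetical names that are not part of the repository, and the helper only builds the same positional arguments shown above (`<ntu_skeleton_dir> $DATASET_FOLDER <split>`) and runs them with `subprocess.run`.

```python
# Hypothetical helper (not part of the repository): build and run the
# representation-generation command for one or more NTU splits.
import os
import subprocess
import sys
from typing import Iterable, List

SPLITS = ("cross_subject", "cross_setup", "one_shot")


def representation_command(skeleton_dir: str, dataset_folder: str, split: str) -> List[str]:
    """Return the argv for generate_representation_ntu.py for a single split."""
    if split not in SPLITS:
        raise ValueError(f"unknown split {split!r}; expected one of {SPLITS}")
    return [sys.executable, "generate_representation_ntu.py", skeleton_dir, dataset_folder, split]


def generate_all(skeleton_dir: str, splits: Iterable[str] = SPLITS) -> None:
    # Reads the output folder from $DATASET_FOLDER, like the shell command above.
    dataset_folder = os.environ["DATASET_FOLDER"]
    for split in splits:
        # check=True stops at the first split whose generation fails.
        subprocess.run(representation_command(skeleton_dir, dataset_folder, split), check=True)
```

Validating the split name before launching avoids starting a long-running job with a typo such as `cross_view`.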

Representations must be placed inside the directory that the $DATASET_FOLDER environment variable points to.
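A script that reads or writes representations can resolve this directory once and fail early if it is misconfigured. The following is a minimal sketch assuming only that `DATASET_FOLDER` holds a directory path (for example set with `export DATASET_FOLDER=/path/to/representations` in the shell); the function name `dataset_folder` is hypothetical.

```python
# Minimal sketch: resolve the representation directory from $DATASET_FOLDER.
import os
from pathlib import Path


def dataset_folder() -> Path:
    """Return the directory named by $DATASET_FOLDER, failing early if unset or missing."""
    value = os.environ.get("DATASET_FOLDER")
    if not value:
        raise RuntimeError("DATASET_FOLDER is not set; export it before generating or training")
    path = Path(value).expanduser()  # allow values such as ~/datasets
    if not path.is_dir():
        raise FileNotFoundError(f"DATASET_FOLDER points to {path}, which is not a directory")
    return path
```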

We provide precalculated representations for reproducing intermediate results:

Example:

python train.py dataset=simitate model_name=efficientnet learning_rate=0.1 net="efficientnet"

This command trains on the Simitate dataset using the EfficientNet network with a learning rate of 0.1.
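The `key=value` arguments look like Hydra command-line overrides, so runs with different settings can be scripted. The sketch below is an illustration under that assumption: `train_command` and `sweep_learning_rates` are hypothetical helpers, and they only assemble the same kind of arguments as the example above and run them one after another. If `train.py` is a standard Hydra application, Hydra's own `--multirun` (`-m`) flag with comma-separated values is an alternative.

```python
# Hypothetical helpers: compose key=value overrides for train.py and run a small sweep.
import subprocess
import sys
from typing import Dict, List


def train_command(overrides: Dict[str, object]) -> List[str]:
    """Build an argv list such as: python train.py dataset=simitate learning_rate=0.1"""
    return [sys.executable, "train.py", *(f"{key}={value}" for key, value in overrides.items())]


def sweep_learning_rates(base: Dict[str, object], rates: List[float]) -> None:
    # One training job per learning rate, run sequentially; stops on the first failure.
    for lr in rates:
        subprocess.run(train_command({**base, "learning_rate": lr}), check=True)
```

Because `subprocess.run` receives a list and no shell is involved, `net=efficientnet` is passed without the quotes that the shell would otherwise strip from `net="efficientnet"`.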