This repository is the official implementation of the paper:

Efficient Learning of Urban Driving Policies Using Bird's-Eye-View State Representations

Trumpp, Raphael, Martin Buechner, Abhinav Valada, and Marco Caccamo.

The paper will be presented at the IEEE International Conference on Intelligent Transportation Systems 2023. If you find our work useful, please consider citing it.

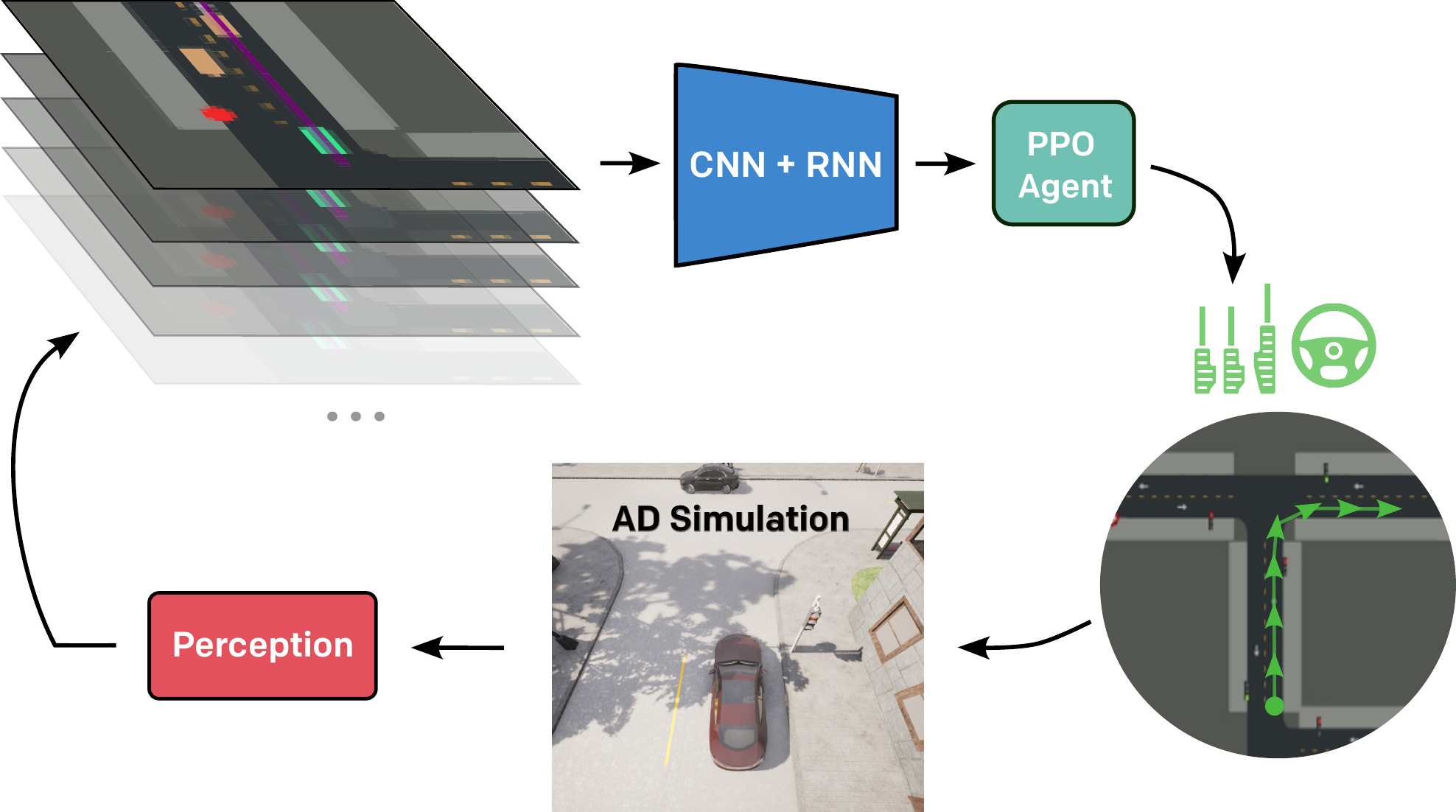

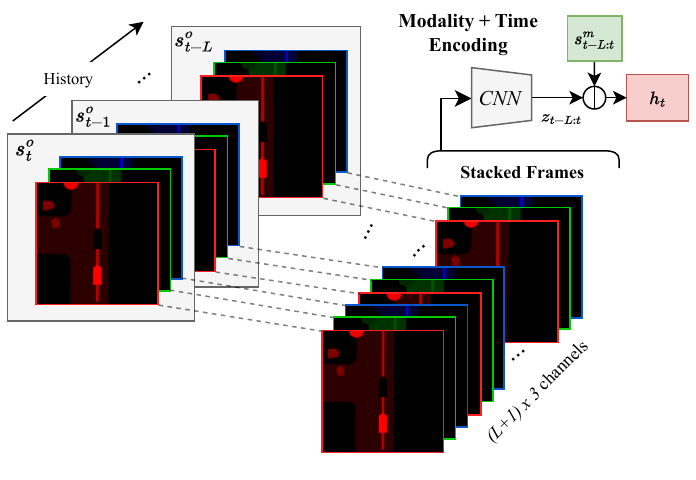

Autonomous driving involves complex decision-making in highly interactive environments, requiring thoughtful negotiation with other traffic participants. While reinforcement learning provides a way to learn such interaction behavior, efficient learning critically depends on scalable state representations. Unlike in imitation learning, high-dimensional state representations still constitute a major bottleneck for deep reinforcement learning methods in autonomous driving. In this paper, we study the challenges of constructing bird's-eye-view representations for autonomous driving and propose a recurrent learning architecture for long-horizon driving. Our PPO-based approach, called RecurrDriveNet, is demonstrated on a simulated autonomous driving task in CARLA, where it outperforms traditional frame-stacking methods while requiring only one million experiences for efficient training. RecurrDriveNet causes fewer than one infraction per driven kilometer by interacting safely with other road users.
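To make the idea of a bird's-eye-view state concrete, here is a minimal pure-Python sketch. It is not the representation used in this repository. It rasterizes a few object classes into an ego-centred, multi-channel grid indexed as (channel, row, column). The function name `rasterize_bev`, the channel names, the 64 x 64 grid size, and the 0.5 m resolution are illustrative assumptions. The sketch also marks only single cells for point objects, whereas a real renderer would draw road polygons and vehicle bounding boxes.

```python
from typing import Dict, List, Tuple

# Hypothetical semantic channels; the channels used in the paper may differ.
CHANNELS = ("road", "vehicles", "pedestrians", "red_lights")

# A BEV state as nested lists indexed [channel][row][col].
Grid = List[List[List[float]]]


def rasterize_bev(
    objects: Dict[str, List[Tuple[float, float]]],
    size: int = 64,
    resolution: float = 0.5,
) -> Grid:
    """Rasterize ego-relative points (x forward, y left, in metres) into a
    multi-channel bird's-eye-view grid centred on the ego vehicle."""
    grid = [[[0.0] * size for _ in range(size)] for _ in CHANNELS]
    half = size // 2
    for c, name in enumerate(CHANNELS):
        for x, y in objects.get(name, []):
            # Forward (+x) maps towards the top row, left (+y) towards the first column.
            row = half - round(x / resolution)
            col = half - round(y / resolution)
            # Points outside the grid extent (about 16 m each way by default) are dropped.
            if 0 <= row < size and 0 <= col < size:
                grid[c][row][col] = 1.0
    return grid


# Example: one vehicle 10 m ahead; a red light 100 m ahead lies outside the grid.
bev = rasterize_bev({"vehicles": [(10.0, 0.0)], "red_lights": [(100.0, 0.0)]})
print(bev[CHANNELS.index("vehicles")][12][32])  # 1.0
```

The grid extent and resolution trade off against each other. A finer resolution or a larger field of view directly increases the input dimensionality the policy has to process, and this is the scalability issue raised in the abstract.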

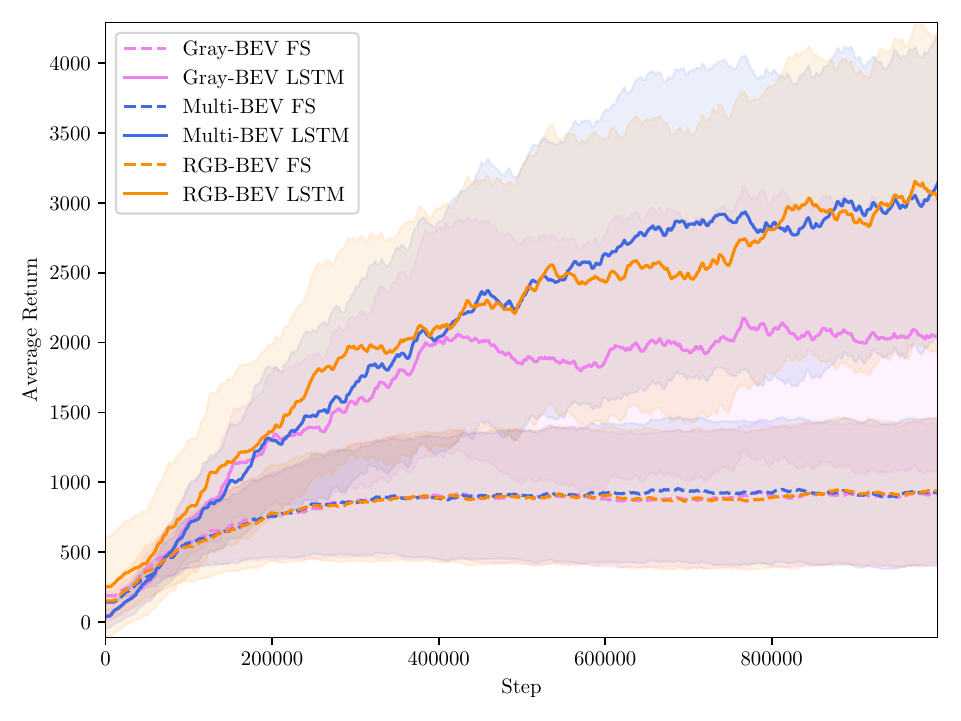

Current reinforcement learning approaches for learning driving policies face a dimensionality bottleneck. In this paper, we evaluate the efficiency of various bird's-eye-view representations used to describe the state of the driving scene. In addition, we propose a novel LSTM-based scheme that efficiently encodes the bird's-eye-view state representation across the full trajectory of states within a reinforcement learning framework. This removes the need for conventional frame-stacking methods and enables further research on long-horizon driving.
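The difference between frame stacking and a recurrent encoder can be sketched in pure Python. This is an illustrative toy, not RecurrDriveNet itself. `frame_stack` and `TinyLSTMCell` are hypothetical names, the weights are random and untrained, and the short per-frame vectors stand in for whatever encoder output the actual model consumes. Frame stacking concatenates a fixed window of the last k frames, so the input grows with k and anything older is discarded. The LSTM instead carries a fixed-size hidden state that is updated once per step over the whole trajectory.

```python
import math
import random
from collections import deque
from typing import Deque, List, Tuple

Vector = List[float]


def frame_stack(history: Deque[Vector], frame: Vector) -> Vector:
    """Frame stacking: concatenate the last k frames (k = history.maxlen)."""
    history.append(frame)  # the deque drops the oldest frame once full
    frames = list(history)
    # At the start of an episode, pad by repeating the oldest available frame.
    while len(frames) < history.maxlen:
        frames.insert(0, frames[0])
    return [v for f in frames for v in f]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class TinyLSTMCell:
    """Minimal, untrained LSTM cell (input, forget, candidate, output gates)."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        n_in = input_size + hidden_size  # each gate acts on the concatenation [x, h_prev]
        self.w = {
            gate: [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(hidden_size)]
            for gate in "ifgo"
        }
        self.b = {gate: [0.0] * hidden_size for gate in "ifgo"}

    def _affine(self, gate: str, z: Vector) -> Vector:
        return [sum(w * v for w, v in zip(row, z)) + b for row, b in zip(self.w[gate], self.b[gate])]

    def step(self, x: Vector, state: Tuple[Vector, Vector]) -> Tuple[Vector, Vector]:
        h_prev, c_prev = state
        z = x + h_prev  # list concatenation [x, h_prev]
        i = [sigmoid(a) for a in self._affine("i", z)]
        f = [sigmoid(a) for a in self._affine("f", z)]
        g = [math.tanh(a) for a in self._affine("g", z)]
        o = [sigmoid(a) for a in self._affine("o", z)]
        c = [fi * cp + ii * gi for fi, cp, ii, gi in zip(f, c_prev, i, g)]
        h = [oi * math.tanh(ci) for oi, ci in zip(o, c)]
        return h, c


# Hypothetical per-frame BEV features (e.g. the output of a CNN encoder).
frames = [[0.1 * t, 1.0 - 0.1 * t, 0.5] for t in range(20)]

stack: Deque[Vector] = deque(maxlen=4)
cell = TinyLSTMCell(input_size=3, hidden_size=8)
state = ([0.0] * 8, [0.0] * 8)
for frame in frames:
    stacked = frame_stack(stack, frame)
    state = cell.step(frame, state)

print(len(stacked))   # 12: k * feature size, covering only the last 4 frames
print(len(state[0]))  # 8: fixed size, but updated at every one of the 20 steps
```

In a full recurrent PPO setup, the hidden state would additionally have to be reset at episode boundaries and stored alongside the rollouts for the policy update. The toy above omits that.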

Using this LSTM-based encoding of bird's-eye-view representations, we achieve significantly higher average returns and fewer infractions while driving than with frame-stacking methods. The encoding also enables robust stopping at red traffic lights.

- We recommend using a virtual environment for the installation:

python -m venv learning2drive
source learning2drive/bin/activate

- With the environment activated, install the following packages:

pip install torch
TBD
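Before installing packages, you may want to confirm that the `learning2drive` environment is actually active. The following optional check is not part of this repository, and the helper name `in_virtualenv` is our own. It compares `sys.prefix` with `sys.base_prefix`, which differ when Python runs inside an environment created by `venv`:

```python
import sys


def in_virtualenv() -> bool:
    """Return True if this interpreter runs inside an environment created by venv."""
    # Inside a venv, sys.prefix points at the environment directory, while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
```

After running `source learning2drive/bin/activate`, the script should report `True`.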

Most of the code is documented with automatically generated docstrings; please use them with caution.

TBD

If you find our work useful, please consider citing our paper:

@article{trumpp2023efficient,
  title={Efficient Learning of Urban Driving Policies Using Bird's-Eye-View State Representations},
  author={Trumpp, Raphael and B{\"u}chner, Martin and Valada, Abhinav and Caccamo, Marco},
  journal={arXiv preprint arXiv:2305.19904},
  year={2023}
}

GNU General Public License v3.0 only (GPL-3.0) © raphajaner