Zhenjia Xu¹, Zhou Xian², Xingyu Lin³, Cheng Chi¹, Zhiao Huang⁴, Chuang Gan⁵†, Shuran Song¹†

¹Columbia University, ²CMU, ³UC Berkeley, ⁴UC San Diego, ⁵UMass Amherst & MIT-IBM Lab

RSS 2023

Project Page | Video | arXiv

This repository contains code for training and evaluating RoboNinja in both simulation and real-world settings.

We recommend Mambaforge instead of the standard Anaconda distribution for faster installation:

$ mamba env create -f environment.yml

but you can use conda as well:

$ conda env create -f environment.yml

Activate the conda environment and log in to wandb:

$ conda activate roboninja

$ wandb login

Generate cores with in-distribution geometries (300 train + 50 eval):

$ python roboninja/workspace/bone_generation_workspace.py

Generate cores with out-of-distribution geometries (50 eval):

$ python roboninja/workspace/bone_generation_ood_workspace.py

simulation_example.ipynb provides a quick example of the simulation. It first creates a scene and renders an image. It then runs a forward pass and a backward pass using the initial action trajectory. Finally, it executes an optimized action trajectory.
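The forward pass, backward pass, and trajectory update that the notebook steps through follow the usual pattern of gradient-based trajectory optimization. The sketch below shows that loop on a toy 1-D point mass whose gradient is written out by hand. It is not RoboNinja's Taichi simulator, and `rollout`, `loss_and_grad`, and `optimize` are hypothetical names chosen for illustration.

```python
def rollout(actions, x0=0.0):
    """Forward pass: integrate x_{t+1} = x_t + a_t and return all states."""
    states = [x0]
    for a in actions:
        states.append(states[-1] + a)
    return states


def loss_and_grad(actions, target, reg=0.01, x0=0.0):
    """Loss = (x_T - target)^2 + reg * sum(a_t^2), together with its gradient."""
    final = rollout(actions, x0)[-1]
    err = final - target
    loss = err ** 2 + reg * sum(a * a for a in actions)
    # Backward pass: x_T = x0 + sum(a_t), so d x_T / d a_t = 1 for every t,
    # which gives dL/da_t = 2 * err + 2 * reg * a_t.
    grad = [2.0 * err + 2.0 * reg * a for a in actions]
    return loss, grad


def optimize(actions, target, lr=0.05, steps=200, reg=0.01):
    """Plain gradient descent on the whole action trajectory."""
    actions = list(actions)
    for _ in range(steps):
        _, grad = loss_and_grad(actions, target, reg)
        actions = [a - lr * g for a, g in zip(actions, grad)]
    return actions


initial = [0.0] * 10
optimized = optimize(initial, target=1.0)
print(loss_and_grad(initial, 1.0)[0], loss_and_grad(optimized, 1.0)[0])
```

In a differentiable simulator the hand-written gradient is replaced by automatic differentiation (Taichi provides autodiff), which is presumably what lets the same loop scale to a full cutting scene.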

If you get an error related to rendering, here are some potential solutions:

- Make sure Vulkan is installed.

- Check whether TI_VISIBLE_DEVICE is set correctly in roboninja/env/tc_env.py (L17). The Vulkan device index is not necessarily aligned with the CUDA device index, which is why there is a function called get_vulkan_offset(). Change this function's implementation to match your setup.
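One way to picture the index mismatch: if Vulkan enumerates one extra device (for example an integrated GPU) ahead of the CUDA GPUs, each Vulkan index is the CUDA index plus a constant offset. The sketch below assumes exactly that constant-offset model; `vulkan_index_for` and `set_ti_visible_device` are hypothetical helpers, not the code in tc_env.py. Comparing the output of `nvidia-smi -L` with `vulkaninfo --summary` shows the actual ordering on a given machine.

```python
import os


def vulkan_index_for(cuda_index: int, offset: int) -> int:
    """Map a CUDA device index to a Vulkan device index.

    Assumes a constant offset between the two enumerations, which holds when
    all extra Vulkan devices are listed before the CUDA GPUs.
    """
    if cuda_index < 0 or cuda_index + offset < 0:
        raise ValueError("device indices must be non-negative")
    return cuda_index + offset


def set_ti_visible_device(cuda_index: int, offset: int) -> str:
    """Set TI_VISIBLE_DEVICE and return its value.

    Assumed to be called before Taichi initializes its Vulkan backend.
    """
    value = str(vulkan_index_for(cuda_index, offset))
    os.environ["TI_VISIBLE_DEVICE"] = value
    return value


# Hypothetical machine: Vulkan lists one integrated GPU before the CUDA GPU.
print(set_ti_visible_device(cuda_index=0, offset=1))  # prints 1
```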

$ python roboninja/workspace/optimization_workspace.py name={NAME}

Here {NAME} is typically expert_{X}, where X is the index of the rigid core. Loss curves are logged to wandb as roboninja/{NAME}, along with visualizations of intermediate results. The final result is saved in data/optimization/{NAME}. Configurations are stored in roboninja/config/optimization_workspace.yaml.
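Because each run optimizes a single rigid core, collecting trajectories for many cores amounts to launching one run per core index. The sketch below builds the command lines and expected output folders from the expert_{X} naming convention; the helper names are hypothetical, and the indices 0 to 2 in the example are an arbitrary choice for illustration.

```python
import subprocess
from pathlib import Path

SCRIPT = "roboninja/workspace/optimization_workspace.py"


def optimization_jobs(core_indices):
    """Yield (command, output_dir) pairs following the expert_{X} naming convention."""
    for idx in core_indices:
        name = f"expert_{idx}"
        command = ["python", SCRIPT, f"name={name}"]
        yield command, Path("data/optimization") / name


def run_all(core_indices, dry_run=True):
    """Run optimization for each core sequentially, or only print the commands."""
    for command, out_dir in optimization_jobs(core_indices):
        print(" ".join(command), "->", out_dir)
        if not dry_run:
            subprocess.run(command, check=True)  # stop on the first failing run


run_all(range(3))  # dry run: prints three commands without executing them
```

Running the cores one after another keeps GPU memory use predictable; spreading them across several GPUs would also require a separate TI_VISIBLE_DEVICE per process.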

$ python roboninja/workspace/state_estimation_workspace.py

Loss curves are logged to wandb as roboninja/state_estimation, along with visualizations on both the training and test sets. Checkpoints are saved in data/state_estimation. Configurations are stored in roboninja/config/state_estimation_workspace.yaml.

$ python roboninja/workspace/close_loop_policy_workspace.py dataset.expert_dir=data/expert

This trains the closed-loop policy on the provided expert demonstrations stored in data/expert. You can also point dataset.expert_dir to the trajectories you collected with trajectory optimization. Loss curves are logged to wandb as roboninja/close_loop_policy, along with visualizations for different tolerance values. Checkpoints are saved in data/close_loop_policy. Configurations are stored in roboninja/config/close_loop_policy_workspace.yaml.
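The `dataset.expert_dir=...` argument is a dotted `key=value` override. This style matches Hydra's command-line overrides, although that the workspaces are built on Hydra is an assumption here. The sketch mimics how such an override replaces one nested config entry while leaving the rest untouched; `apply_override` is a hypothetical helper, the example config keys are made up, and values are kept as strings for simplicity.

```python
import copy


def apply_override(config: dict, override: str) -> dict:
    """Return a copy of `config` with one dotted `key=value` override applied."""
    key, sep, value = override.partition("=")
    if not sep:
        raise ValueError(f"expected key=value, got {override!r}")
    updated = copy.deepcopy(config)  # never mutate the caller's config
    node = updated
    *parents, leaf = key.split(".")
    for part in parents:
        node = node[part]  # raises KeyError if the path does not exist
    if leaf not in node:
        raise KeyError(f"unknown config key: {key}")
    node[leaf] = value
    return updated


# Hypothetical config contents, for illustration only.
base = {"dataset": {"expert_dir": "data/expert", "batch_size": "64"}}
print(apply_override(base, "dataset.expert_dir=data/my_trajectories"))
```

Rejecting unknown keys catches typos such as `dataset.expertdir=...` early instead of silently adding an unused entry.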

Pretrained models can be downloaded with:

wget https://roboninja.cs.columbia.edu/download/roboninja-pretrined.zip

Unzip the archive, then update state_estimation_path and close_loop_policy_path in roboninja/configs/eval_workspace.yaml to point to the extracted models.

We provide two types of simulation environments for evaluation:

- sim: a geometry-based simulation with collision detection, designed to compute the cut mass and the number of collisions. It is very fast but cannot estimate energy consumption.

- taichi: the physics-based simulation implemented in Taichi and used for trajectory optimization. It is slower than the sim environment but supports energy consumption estimation. The simulation results in our paper are evaluated in this environment.
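To make the idea of a geometry-only evaluator concrete, the toy sketch below counts collisions as the number of times a sampled knife-tip path enters a rigid core. It models cores as 2-D circles, a simplification that is not how the sim environment represents geometry, and `count_collisions` is a hypothetical name. A coarsely sampled path can step over a thin core without registering a hit, so the sampling density matters.

```python
import math


def count_collisions(trajectory, cores):
    """Count entries of a knife-tip path into any rigid core.

    trajectory: list of (x, y) knife-tip positions, in order.
    cores: list of (cx, cy, r) circles (a hypothetical core model).
    A collision is counted each time the tip moves from outside to inside.
    """
    def inside(point):
        x, y = point
        return any(math.hypot(x - cx, y - cy) < r for cx, cy, r in cores)

    collisions = 0
    was_inside = False
    for point in trajectory:
        now_inside = inside(point)
        if now_inside and not was_inside:
            collisions += 1
        was_inside = now_inside
    return collisions


# Straight downward cut through a single core centered at the origin.
path = [(0.0, 1.0 - 0.1 * i) for i in range(21)]
print(count_collisions(path, [(0.0, 0.0, 0.3)]))  # prints 1
```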

Here is the command using the taichi environment:

$ python roboninja/workspace/eval_workspace.py type=taichi

Results will be saved in data/eval.

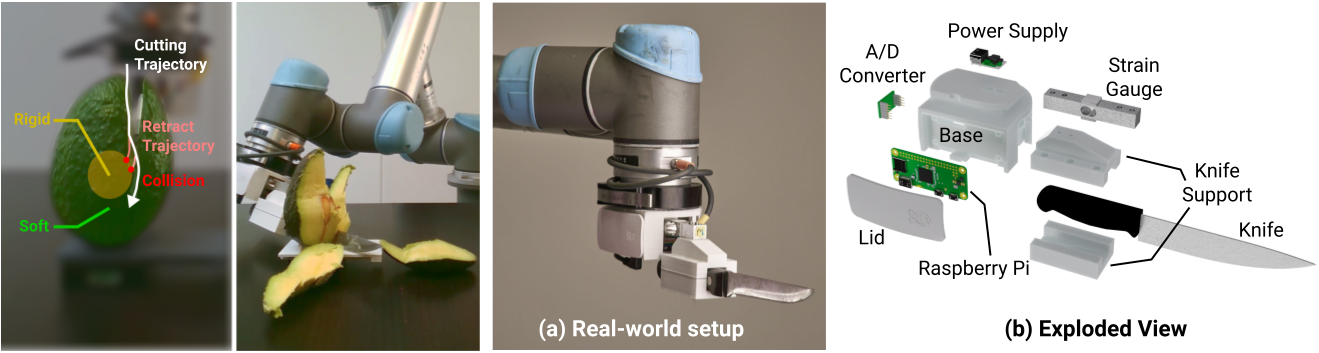

- UR5-CB3 or UR5e (RTDE Interface is required)

- Millibar Robotics Manual Tool Changer (only need robot side)

- Kinetic Sand

- Digital load cell and HX711 A/D converter (available on Amazon)

- Raspberry Pi Zero 2 W

- 3D printed container for assembly.

- Knife

Relevant tutorial: https://tutorials-raspberrypi.com/digital-raspberry-pi-scale-weight-sensor-hx711/
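A load cell read through an HX711 reports raw counts, which are usually converted to weight by taring with an empty cell and measuring a known reference weight. The two-point linear conversion is sketched below; the raw readings are made-up numbers, and `calibrate` and `counts_to_grams` are hypothetical names, not functions from server_udp.py.

```python
from statistics import median


def calibrate(empty_samples, loaded_samples, known_grams):
    """Two-point calibration: return (offset, counts_per_gram).

    empty_samples: raw HX711 readings with nothing on the load cell.
    loaded_samples: raw readings with a reference weight of known_grams.
    Taking the median of each group suppresses occasional noisy readings.
    """
    offset = median(empty_samples)
    counts_per_gram = (median(loaded_samples) - offset) / known_grams
    if counts_per_gram == 0:
        raise ValueError("loaded and empty readings are identical")
    return offset, counts_per_gram


def counts_to_grams(raw, offset, counts_per_gram):
    """Convert one raw HX711 reading to grams."""
    return (raw - offset) / counts_per_gram


# Made-up readings: empty cell around 8000 counts, 100 g reference around 50000.
offset, scale = calibrate([7990, 8000, 8010], [49990, 50000, 50020], 100.0)
print(counts_to_grams(29000, offset, scale))  # prints 50.0
```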

Copy roboninja/real_world/server_udp.py to the Raspberry Pi and run:

python server_udp.py
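On the workstation side, the evaluation code reads the force measurements from this server over UDP. The message format of server_udp.py is not documented here, so the sketch below assumes a hypothetical one: the client sends a short request datagram and the server replies with one reading as ASCII text. A toy server thread on localhost stands in for the Raspberry Pi so the round trip can run on a single machine.

```python
import socket
import threading


def serve_once(sock: socket.socket, reading: float) -> None:
    """Toy stand-in for the Pi: answer a single request with one reading."""
    _, client_addr = sock.recvfrom(1024)
    sock.sendto(f"{reading:.3f}".encode("ascii"), client_addr)


def request_reading(host: str, port: int, timeout: float = 1.0) -> float:
    """Send one request datagram and parse the reply as a float."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as client:
        client.settimeout(timeout)  # UDP is unreliable, so never block forever
        client.sendto(b"read", (host, port))
        data, _ = client.recvfrom(1024)
    return float(data.decode("ascii"))


def demo_roundtrip(reading: float) -> float:
    """Run the toy server and client on localhost and return what the client got."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        server.settimeout(2.0)  # so the server thread cannot hang forever
        port = server.getsockname()[1]
        worker = threading.Thread(target=serve_once, args=(server, reading))
        worker.start()
        try:
            return request_reading("127.0.0.1", port)
        finally:
            worker.join()


print(demo_roundtrip(12.5))  # prints 12.5
```

Against the real setup, the client would target the Pi's IP address configured in real_env.yaml instead of 127.0.0.1.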

Update the IP address and other settings in roboninja/configs/real_env.yaml, then run:

python roboninja/workspace/eval_workspace.py type=real bone_idx={INDEX}

@inproceedings{xu2023roboninja,
  title={RoboNinja: Learning an Adaptive Cutting Policy for Multi-Material Objects},
  author={Xu, Zhenjia and Xian, Zhou and Lin, Xingyu and Chi, Cheng and Huang, Zhiao and Gan, Chuang and Song, Shuran},
  booktitle={Proceedings of Robotics: Science and Systems (RSS)},
  year={2023}
}

This repository is released under the MIT license. See LICENSE for additional details.

- Physics simulator is adapted from FluidLab.

- UR5 controller is adapted from Diffusion Policy.

- Force sensor is inspired by this tutorial.