This repository is the official implementation of the paper:

CoDEPS: Online Continual Learning for Depth Estimation and Panoptic Segmentation

Niclas Vödisch, Kürsat Petek, Wolfram Burgard, and Abhinav Valada.

Robotics: Science and Systems (RSS), 2023

If you find our work useful, please consider citing our paper:

    @article{voedisch23codeps,
      title={CoDEPS: Online Continual Learning for Depth Estimation and Panoptic Segmentation},
      author={Vödisch, Niclas and Petek, Kürsat and Burgard, Wolfram and Valada, Abhinav},
      journal={Robotics: Science and Systems (RSS)},
      year={2023}
    }

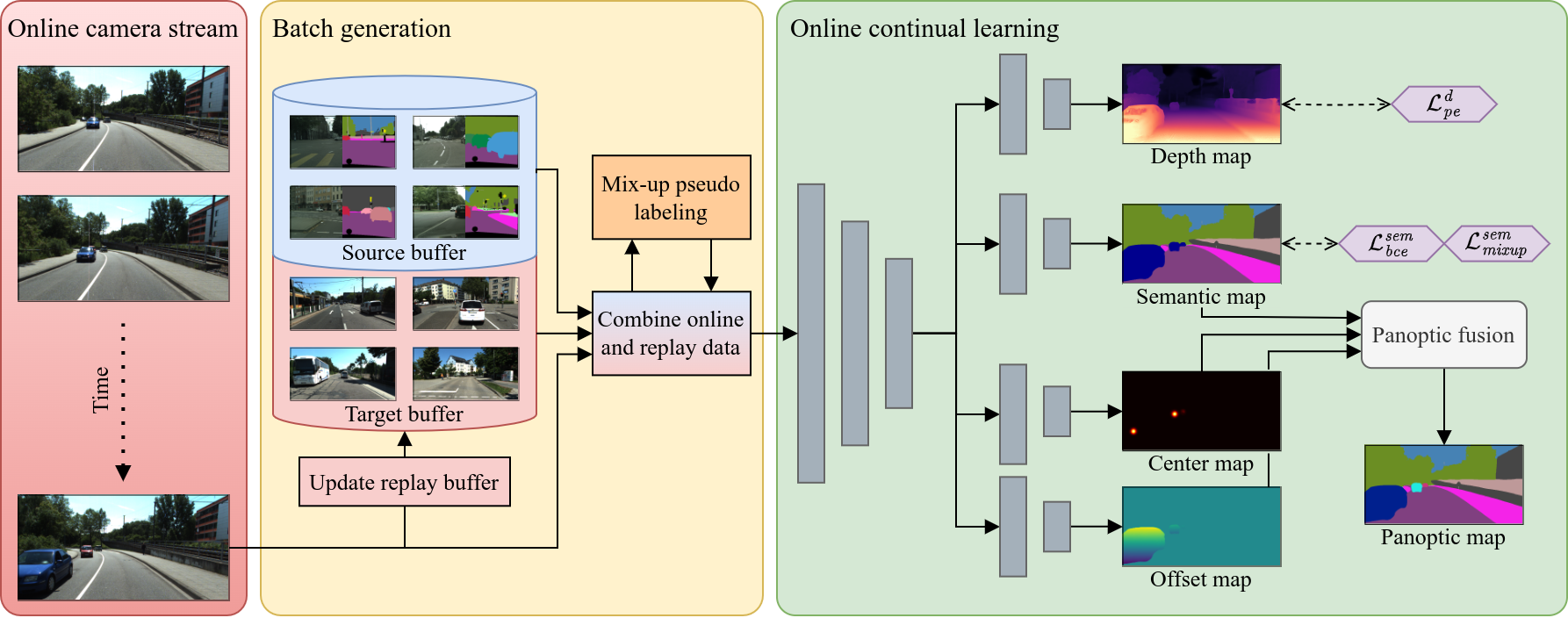

Operating a robot in the open world requires a high level of robustness with respect to previously unseen environments. Optimally, the robot is able to adapt by itself to new conditions without human supervision, e.g., automatically adjusting its perception system to changing lighting conditions. In this work, we address the task of continual learning for deep learning-based monocular depth estimation and panoptic segmentation in new environments in an online manner. We introduce CoDEPS to perform continual learning involving multiple real-world domains while mitigating catastrophic forgetting by leveraging experience replay. In particular, we propose a novel domain-mixing strategy to generate pseudo-labels to adapt panoptic segmentation. Furthermore, we explicitly address the limited storage capacity of robotic systems by proposing sampling strategies for constructing a fixed-size replay buffer based on rare semantic class sampling and image diversity. We perform extensive evaluations of CoDEPS on various real-world datasets demonstrating that it successfully adapts to unseen environments without sacrificing performance on previous domains while achieving state-of-the-art results.
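As a rough illustration of the fixed-size replay buffer idea mentioned above, the sketch below keeps a bounded set of feature vectors and, once full, discards the most redundant one according to cosine similarity. This is not the CoDEPS implementation: the class name `DiversityReplayBuffer`, the use of plain feature vectors, and the replacement rule are assumptions made purely for illustration, and rare semantic class sampling is not covered.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equally sized vectors (0.0 if one is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


class DiversityReplayBuffer:
    """Hypothetical fixed-size buffer that prefers to keep dissimilar samples."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self.items: List[Sequence[float]] = []

    @staticmethod
    def _redundancy(index: int, pool: List[Sequence[float]]) -> float:
        # Highest similarity between pool[index] and any other element of the pool.
        return max(
            cosine_similarity(pool[index], other)
            for j, other in enumerate(pool)
            if j != index
        )

    def add(self, feature: Sequence[float]) -> None:
        # While there is room, simply store the new sample.
        if len(self.items) < self.capacity:
            self.items.append(feature)
            return
        # Otherwise consider stored samples plus the candidate and drop the
        # element that is most similar to some other element.
        pool = self.items + [feature]
        drop = max(range(len(pool)), key=lambda i: self._redundancy(i, pool))
        pool.pop(drop)
        self.items = pool
```

With a capacity of two, adding two orthogonal vectors followed by a near-duplicate of the first leaves the buffer holding one vector per direction, so the stored set stays diverse without growing.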

- Create conda environment:

conda create --name codeps python=3.8

- Activate environment:

conda activate codeps

- Install dependencies:

pip install -r requirements.txt

The script file to pretrain CoDEPS can be found in scripts/train.sh. Before executing the script, make sure to adapt all parameters.

Additionally, ensure that the dataset path is set correctly in the corresponding config file, e.g., cfg/train_cityscapes.yaml.

Note: We also provide the pretrained model weights at this link: https://drive.google.com/file/d/1KlIbYrzS6p8ymcuAJXy-DdHF8CqL2REc/view?usp=sharing

To perform online continual learning, execute scripts/adapt.sh. Similar to the pretraining script, make sure to adapt all parameters before executing the script.

Additionally, ensure that the dataset path is set correctly in the corresponding config file, e.g., cfg/adapt_cityscapes_kitti_360.yaml.

Download the following files from the Cityscapes dataset:

- leftImg8bit_sequence_trainvaltest.zip (324GB)

- gtFine_trainvaltest.zip (241MB)

- camera_trainvaltest.zip (2MB)

- disparity_sequence_trainvaltest.zip (106GB) (optional)
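Since the archives listed above are large, it can help to check the available disk space before downloading. The following is a minimal sketch using the archive sizes from the list; the function names are hypothetical, and the estimate covers only the zip files themselves, not the additional space needed for extraction.

```python
import shutil

# Archive sizes in GB as listed above (241 MB and 2 MB converted to GB).
ARCHIVES_GB = {
    "leftImg8bit_sequence_trainvaltest.zip": 324.0,
    "gtFine_trainvaltest.zip": 0.241,
    "camera_trainvaltest.zip": 0.002,
}
OPTIONAL_ARCHIVES_GB = {
    "disparity_sequence_trainvaltest.zip": 106.0,
}


def required_gb(include_optional: bool = True) -> float:
    """Total size of the archives to download, in GB."""
    total = sum(ARCHIVES_GB.values())
    if include_optional:
        total += sum(OPTIONAL_ARCHIVES_GB.values())
    return total


def has_enough_space(path: str, include_optional: bool = True) -> bool:
    """Compare the free space at `path` against the archive sizes only."""
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= required_gb(include_optional)
```

For example, `has_enough_space("/data")` could be called with the intended download location, where `/data` is a placeholder path.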

Run cp cityscapes_class_distribution.pkl CITYSCAPES_ROOT/class_distribution.pkl, where CITYSCAPES_ROOT is the path to the extracted Cityscapes dataset.

After extraction, one should obtain the following file structure:

    ── cityscapes
       ├── camera
       │   └── ...
       ├── disparity_sequence
       │   └── ...
       ├── gtFine
       │   └── ...
       └── leftImg8bit_sequence
           └── ...
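To catch a misplaced folder early, the Cityscapes layout shown above can be checked with a few lines of Python. This is only a sketch: the function name `find_missing_entries` is hypothetical, and `disparity_sequence` is deliberately not checked because that download is optional.

```python
from pathlib import Path
from typing import List

# Folder names taken from the file structure above.
REQUIRED_DIRS = ("camera", "gtFine", "leftImg8bit_sequence")
# File copied into the dataset root in the step above.
CLASS_DISTRIBUTION_FILE = "class_distribution.pkl"


def find_missing_entries(cityscapes_root: str) -> List[str]:
    """Return the names of expected folders or files that are absent."""
    root = Path(cityscapes_root)
    missing = [name for name in REQUIRED_DIRS if not (root / name).is_dir()]
    if not (root / CLASS_DISTRIBUTION_FILE).is_file():
        missing.append(CLASS_DISTRIBUTION_FILE)
    return missing
```

An empty list means that all required entries were found under the given root.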

Download the following files from the KITTI-360 dataset:

- Perspective Images for Train & Val (128G): You can remove "01" in line 12 of download_2d_perspective.sh to only download the relevant images.

- Test Semantic (1.5G)

- Semantics (1.8G)

- Calibrations (3K)

After extraction and copying of the perspective images, one should obtain the following file structure:

    ── kitti_360
       ├── calibration
       │   ├── calib_cam_to_pose.txt
       │   └── ...
       ├── data_2d_raw
       │   ├── 2013_05_28_drive_0000_sync
       │   └── ...
       ├── data_2d_semantics
       │   └── train
       │       ├── 2013_05_28_drive_0000_sync
       │       └── ...
       └── data_2d_test
           ├── 2013_05_28_drive_0008_sync
           └── 2013_05_28_drive_0018_sync
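After copying the perspective images, one possible sanity check is to compare the drive folders in `data_2d_raw` against those in `data_2d_semantics/train`. The sketch below assumes that every raw sequence should have a semantics folder of the same name, which may not hold for every setup; the function name is hypothetical.

```python
from pathlib import Path
from typing import List, Set


def _subfolder_names(directory: Path) -> Set[str]:
    """Names of the immediate subfolders, or an empty set if the folder is absent."""
    if not directory.is_dir():
        return set()
    return {p.name for p in directory.iterdir() if p.is_dir()}


def sequences_without_semantics(kitti_360_root: str) -> List[str]:
    """List raw sequences that have no matching folder under data_2d_semantics/train."""
    root = Path(kitti_360_root)
    raw = _subfolder_names(root / "data_2d_raw")
    semantics = _subfolder_names(root / "data_2d_semantics" / "train")
    return sorted(raw - semantics)
```

An empty result indicates that the two folders list the same drives, at least by name.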

- Download the annotations using the link from semkitti-dvps.

- Download the KITTI odometry color images (65GB) and extract them to the same folder.

- Execute our script to organize the data in accordance with the KITTI dataset:

python scripts/prepare_sem_kitti_dvps.py --in_path IN_PATH --out_path OUT_PATH

Afterwards, one should obtain the following file structure:

    ── sem_kitti_dvps
       ├── data_2d_raw
       │   ├── 01
       │   │   ├── image_2
       │   │   │   └── ...
       │   │   ├── calib.txt
       │   │   └── times.txt
       │   └── ...
       ├── data_2d_semantics
       │   ├── 01
       │   └── ...
       └── data_2d_depth
           ├── 01
           └── ...
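Once the preparation script has finished, each sequence folder under `data_2d_raw` should contain `image_2`, `calib.txt`, and `times.txt`, as in the structure above. The following sketch reports sequences where one of these entries is missing; the function name is hypothetical and the check does not inspect file contents.

```python
from pathlib import Path
from typing import Dict, List

# Entries expected inside every sequence folder, per the structure above.
EXPECTED_ENTRIES = ("image_2", "calib.txt", "times.txt")


def incomplete_sequences(sem_kitti_root: str) -> Dict[str, List[str]]:
    """Map each incomplete sequence name to the entries it is missing."""
    raw_dir = Path(sem_kitti_root) / "data_2d_raw"
    report: Dict[str, List[str]] = {}
    for seq in sorted(p for p in raw_dir.iterdir() if p.is_dir()):
        missing = [e for e in EXPECTED_ENTRIES if not (seq / e).exists()]
        if missing:
            report[seq.name] = missing
    return report
```

Here `sem_kitti_root` would correspond to the `OUT_PATH` passed to the preparation script.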

For academic usage, the code is released under the GPLv3 license. For any commercial purpose, please contact the authors.

This work was partly funded by the European Union’s Horizon 2020 research and innovation program under grant agreement No 871449-OpenDR and the Bundesministerium für Bildung und Forschung (BMBF) under grant agreement No FKZ 16ME0027.