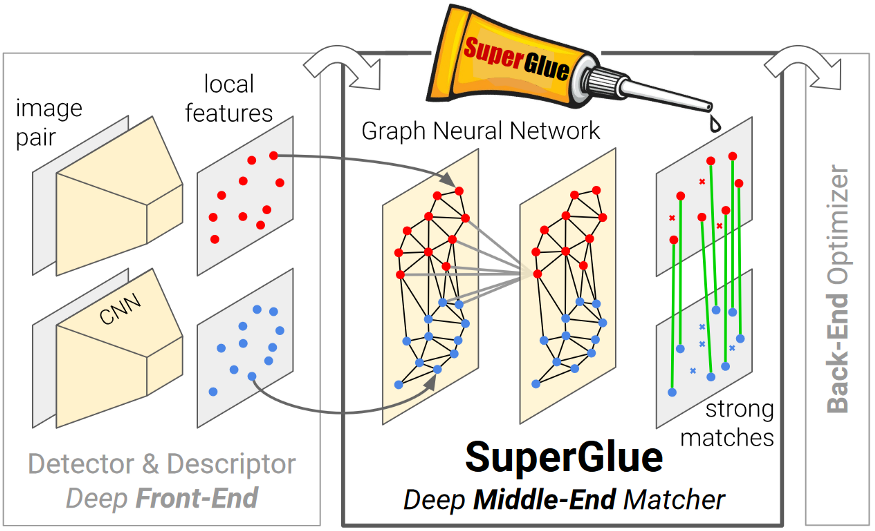

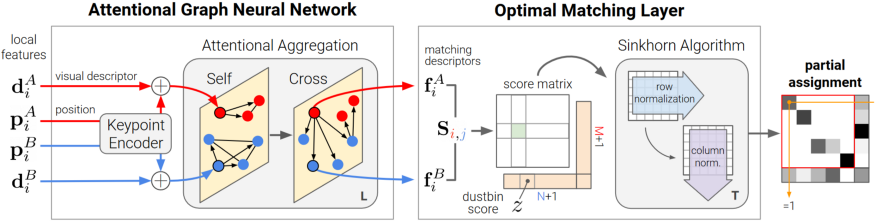

The SuperGlue network is a Graph Neural Network combined with an Optimal Matching layer that is trained to perform matching on two sets of sparse image features. SuperGlue operates as a "middle-end," performing context aggregation, matching, and filtering in a single end-to-end architecture.
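The "context aggregation" step can be illustrated with a minimal NumPy sketch of attentional message passing in the spirit of the paper. Each keypoint descriptor is updated residually as x + MLP([x || m]). The message m is a softmax-attention average over keypoints in the same image (self edges) or in the other image (cross edges), and the layers alternate between the two kinds of edges. This is an illustration, not this repo's PyTorch module. The weights are random (a trained model learns them), attention is single-head, and SuperGlue's keypoint positional encoding and final projection are omitted. All function names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_message(x, source, wq, wk, wv):
    """Single-head attention: every row of x aggregates values from source."""
    q, k, v = x @ wq, source @ wk, source @ wv
    weights = softmax(q @ k.T / np.sqrt(q.shape[1]), axis=1)  # (N_x, N_source)
    return weights @ v                                          # (N_x, d)

def propagate(x, source, params):
    """Residual update x + MLP([x || message]) with a two-layer ReLU MLP."""
    wq, wk, wv, w1, w2 = params
    message = attention_message(x, source, wq, wk, wv)
    hidden = np.maximum(np.concatenate([x, message], axis=1) @ w1, 0.0)
    return x + hidden @ w2

def init_params(d, rng):
    """Hypothetical random weights; a trained model would learn these."""
    s = 1.0 / np.sqrt(d)
    return (rng.normal(0, s, (d, d)), rng.normal(0, s, (d, d)),
            rng.normal(0, s, (d, d)), rng.normal(0, s, (2 * d, 2 * d)),
            rng.normal(0, s, (2 * d, d)))

def attentional_gnn(desc_a, desc_b, num_layers=4, seed=0):
    """Alternate self and cross layers; both images share each layer's weights."""
    rng = np.random.default_rng(seed)
    a, b = desc_a, desc_b
    for layer in range(num_layers):
        params = init_params(a.shape[1], rng)
        if layer % 2 == 0:  # self edges: attend within the same image
            a, b = propagate(a, a, params), propagate(b, b, params)
        else:               # cross edges: attend to the other image
            a, b = propagate(a, b, params), propagate(b, a, params)
    return a, b

rng = np.random.default_rng(1)
desc_a, desc_b = rng.normal(size=(5, 32)), rng.normal(size=(7, 32))
fa, fb = attentional_gnn(desc_a, desc_b)
# Inner products give the (5, 7) score matrix for the matching layer
# (the paper first applies a learned linear projection, omitted here).
scores = fa @ fb.T
```

The tuple assignment in each layer computes both updates from the previous layer's descriptors, so the two images are updated simultaneously rather than one after the other.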

Correspondences between two images are subject to two constraints:

- A keypoint can have at most one correspondence in the other image.

- Some keypoints will remain unmatched because of occlusion or detector failure.
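Together, these constraints define a partial one-to-one assignment. One common way to store it, assumed here, is an index array per image in which `-1` marks an unmatched keypoint. The sketch below fills such arrays using mutual nearest neighbours plus a score threshold. This is the classical heuristic that SuperGlue is designed to replace, and it appears here only to illustrate the constraints. The function names are hypothetical, and the score matrix is assumed to be non-empty.

```python
import numpy as np

def mutual_nn_matches(scores, threshold):
    """Return matches0 (len N) and matches1 (len M); -1 marks an unmatched keypoint."""
    n, m = scores.shape
    best_for_a = scores.argmax(axis=1)  # best candidate in B for each keypoint in A
    best_for_b = scores.argmax(axis=0)  # best candidate in A for each keypoint in B
    matches0 = np.full(n, -1, dtype=int)
    matches1 = np.full(m, -1, dtype=int)
    for i in range(n):
        j = best_for_a[i]
        # Keep the pair only if the choice is mutual and confident enough.
        if best_for_b[j] == i and scores[i, j] > threshold:
            matches0[i] = j
            matches1[j] = i
    return matches0, matches1

def is_one_to_one(matches0, matches1):
    """Check that both index arrays describe the same one-to-one assignment."""
    ok0 = all(j < 0 or matches1[j] == i for i, j in enumerate(matches0))
    ok1 = all(i < 0 or matches0[i] == j for j, i in enumerate(matches1))
    return ok0 and ok1

scores = np.array([[0.9, 0.1, 0.0],
                   [0.8, 0.2, 0.1]])
matches0, matches1 = mutual_nn_matches(scores, threshold=0.5)
```

In this example, both keypoints in the first image prefer keypoint 0 in the second image. Only the mutual pair survives, so the second keypoint stays unmatched (`-1`), and neither constraint is violated.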

SuperGlue aims to find all correspondences between reprojections of the same 3D points and to identify keypoints that have no match. Its architecture has two main components: an Attentional Graph Neural Network and an Optimal Matching Layer.
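The Optimal Matching Layer can be sketched as follows, assuming the dustbin formulation described in the SuperGlue paper. The score matrix is augmented with an extra row and an extra column (the "dustbins"), both filled with a single scalar `alpha`. Each keypoint gets unit mass, while each dustbin can absorb as many points as the other image has. The soft assignment is then computed with Sinkhorn iterations in the log domain. This NumPy version fixes `alpha` and the iteration count by hand, whereas the model learns `alpha`. The function names are hypothetical.

```python
import numpy as np

def logsumexp(x, axis):
    """Numerically stable log(sum(exp(x))) along one axis."""
    m = x.max(axis=axis, keepdims=True)
    return (m + np.log(np.exp(x - m).sum(axis=axis, keepdims=True))).squeeze(axis)

def log_sinkhorn_with_dustbin(scores, alpha, iters=100):
    """Return the log of the (M+1) x (N+1) soft assignment, dustbins included."""
    m, n = scores.shape
    # Augment the scores with a dustbin row and column, all set to alpha.
    couplings = np.full((m + 1, n + 1), alpha, dtype=float)
    couplings[:m, :n] = scores
    # Marginals: unit mass per keypoint; dustbins absorb up to n (row) or m (column).
    log_mu = np.log(np.concatenate([np.ones(m), [n]]))
    log_nu = np.log(np.concatenate([np.ones(n), [m]]))
    u, v = np.zeros(m + 1), np.zeros(n + 1)
    for _ in range(iters):
        u = log_mu - logsumexp(couplings + v[None, :], axis=1)  # fit row sums
        v = log_nu - logsumexp(couplings + u[:, None], axis=0)  # fit column sums
    return couplings + u[:, None] + v[None, :]

def extract_matches(log_p, threshold=0.2):
    """Mutual argmax on the non-dustbin block; -1 marks an unmatched keypoint."""
    p = np.exp(log_p[:-1, :-1])
    best01, best10 = p.argmax(axis=1), p.argmax(axis=0)
    matches0 = np.full(p.shape[0], -1, dtype=int)
    for i, j in enumerate(best01):
        if best10[j] == i and p[i, j] > threshold:
            matches0[i] = j
    return matches0

# Three clear matches plus a fourth keypoint in image A with no counterpart.
scores = np.full((4, 3), -5.0)
scores[np.arange(3), np.arange(3)] = 5.0
matches0 = extract_matches(log_sinkhorn_with_dustbin(scores, alpha=0.0))
```

The fourth keypoint scores poorly against every candidate, so its mass flows into the dustbin column and it is reported as unmatched instead of being forced onto a wrong partner.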

This repo includes PyTorch code for training the SuperGlue matching network on top of SIFT keypoints and descriptors. For more details, please see:

- Full paper PDF: SuperGlue: Learning Feature Matching with Graph Neural Networks.

The code has the following dependencies:

- Python 3

- PyTorch >= 1.1

- OpenCV >= 3.4 (4.1.2.30 recommended for best GUI keyboard interaction, see this note)

- Matplotlib >= 3.1

- NumPy >= 1.18

To install the dependencies with pip, run: `pip3 install numpy opencv-python torch matplotlib`

Alternatively, create a conda environment with `conda create --name myenv --file superglue.txt`
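To confirm that an existing environment meets the minimum versions listed above, you can use a small standard-library sketch that queries installed package metadata. It requires Python 3.8+ for `importlib.metadata`. The distribution names are assumptions. If OpenCV was installed as `opencv-contrib-python` or through conda, it may be registered under a different name and will then be reported as missing (`None`).

```python
import re
from importlib import metadata

# Minimum versions from the dependency list; distribution names are assumptions.
MINIMUMS = {"torch": "1.1", "opencv-python": "3.4",
            "matplotlib": "3.1", "numpy": "1.18"}

def version_tuple(version):
    """Keep the leading numeric part: '2.1.0+cu118' -> (2, 1, 0)."""
    match = re.match(r"\d+(?:\.\d+)*", version)
    return tuple(int(p) for p in match.group(0).split(".")) if match else ()

def meets_minimum(installed, minimum):
    return version_tuple(installed) >= version_tuple(minimum)

def check_environment(minimums=MINIMUMS):
    """Map each package to True/False (version check) or None (not installed)."""
    report = {}
    for package, minimum in minimums.items():
        try:
            report[package] = meets_minimum(metadata.version(package), minimum)
        except metadata.PackageNotFoundError:
            report[package] = None
    return report
```

Calling `print(check_environment())` in the target environment shows at a glance which packages need upgrading.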

There are two main top-level scripts in this repo:

- `train.py`: trains the SuperGlue model.
- `load_data.py`: reads images from files and creates image pairs. It generates the keypoints, descriptors, and ground-truth matches used in training.
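This README does not describe how `load_data.py` builds ground-truth matches. The sketch below assumes that each training pair is an image plus a copy warped by a known homography `H`, which is a common setup for self-supervised training on COCO. Under that assumption, keypoint `i` in the first image is labelled as matching keypoint `j` in the second image when `H` maps it within a pixel threshold of `j` and the two are mutual nearest neighbours. Every other keypoint gets `-1`. The function names and the 3-pixel threshold are hypothetical, and both keypoint sets are assumed to be non-empty.

```python
import numpy as np

def project(points, H):
    """Apply a 3x3 homography to (K, 2) pixel coordinates."""
    homog = np.hstack([points, np.ones((len(points), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def ground_truth_matches(kpts0, kpts1, H, max_dist=3.0):
    """matches0[i] = j if H maps keypoint i near keypoint j (mutually), else -1."""
    warped = project(kpts0, H)                                              # (N, 2)
    dist = np.linalg.norm(warped[:, None, :] - kpts1[None, :, :], axis=2)  # (N, M)
    nn01, nn10 = dist.argmin(axis=1), dist.argmin(axis=0)
    matches0 = np.full(len(kpts0), -1, dtype=int)
    for i, j in enumerate(nn01):
        if nn10[j] == i and dist[i, j] < max_dist:
            matches0[i] = j
    return matches0

H = np.array([[1.0, 0.0, 5.0],   # pure translation by (+5, -2) pixels
              [0.0, 1.0, -2.0],
              [0.0, 0.0, 1.0]])
kpts0 = np.array([[10.0, 10.0], [50.0, 20.0], [30.0, 40.0]])
kpts1 = np.array([[55.0, 18.0], [15.0, 8.0], [100.0, 100.0]])
matches0 = ground_truth_matches(kpts0, kpts1, H)
```

In this example, the first two keypoints land exactly on detections in the second image. The third has no nearby counterpart and is labelled `-1`, which gives the network supervision for the "unmatched" case as well.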

Download the COCO 2014 training images:

`wget http://images.cocodataset.org/zips/train2014.zip`

Download the validation set:

`wget http://images.cocodataset.org/zips/val2014.zip`

Download the test set:

`wget http://images.cocodataset.org/zips/test2014.zip`
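The same downloads can also be scripted in Python. This hypothetical helper builds the three URLs listed above, downloads an archive only if it is not already present, and unpacks it. It assumes that each archive contains a top-level folder named after its split (for example `train2014/`). The training archive is several gigabytes, so expect a long download.

```python
import os
import urllib.request
import zipfile

COCO_BASE_URL = "http://images.cocodataset.org/zips/"
SPLITS = ("train2014", "val2014", "test2014")

def coco_zip_urls(splits=SPLITS):
    """Map each COCO 2014 split name to its archive URL."""
    return {split: COCO_BASE_URL + split + ".zip" for split in splits}

def download_and_extract(split, dest_dir="."):
    """Fetch one split (skipped if the zip exists) and unzip it into dest_dir."""
    zip_path = os.path.join(dest_dir, split + ".zip")
    if not os.path.exists(zip_path):
        urllib.request.urlretrieve(coco_zip_urls((split,))[split], zip_path)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest_dir)
    # Assumes the archive's top-level folder is named after the split.
    return os.path.join(dest_dir, split)
```

The returned directory, for example the result of `download_and_extract("train2014")`, is the kind of path that `--train_path` expects.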

To train SuperGlue with the default parameters, run:

`python train.py`

- Use `--epoch` to set the number of epochs (default: `20`).
- Use `--train_path` to set the path to the directory of training images.
- Use `--eval_output_dir` to set the directory in which the visualizations are written (default: `dump_match_pairs/`).
- Use `--show_keypoints` to visualize the detected keypoints (default: `False`).
- Use `--viz_extension` to set the visualization file extension (default: `png`). Use `pdf` for the highest quality.
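For reference, the documented flags can be mirrored in an `argparse` parser. This is a hypothetical sketch, not the parser in `train.py`. The flag names and stated defaults come from the list above. The following details are assumptions: the argument types, the `store_true` behaviour of `--show_keypoints`, the `png`/`pdf` choices, and the `None` default for `--train_path`.

```python
import argparse

def build_parser():
    """Hypothetical mirror of the documented train.py flags (not the real parser)."""
    parser = argparse.ArgumentParser(description="Train SuperGlue on SIFT features")
    parser.add_argument("--epoch", type=int, default=20,
                        help="number of training epochs")
    parser.add_argument("--train_path", type=str, default=None,
                        help="directory of training images (default is an assumption)")
    parser.add_argument("--eval_output_dir", type=str, default="dump_match_pairs/",
                        help="directory where match visualizations are written")
    parser.add_argument("--show_keypoints", action="store_true",
                        help="also draw the detected keypoints")
    parser.add_argument("--viz_extension", type=str, default="png",
                        choices=["png", "pdf"],
                        help="visualization file format; pdf gives the highest quality")
    return parser
```

For example, `python train.py --epoch 50 --train_path train2014/ --viz_extension pdf` would train for 50 epochs on the images in `train2014/` (an assumed location) and write PDF visualizations.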

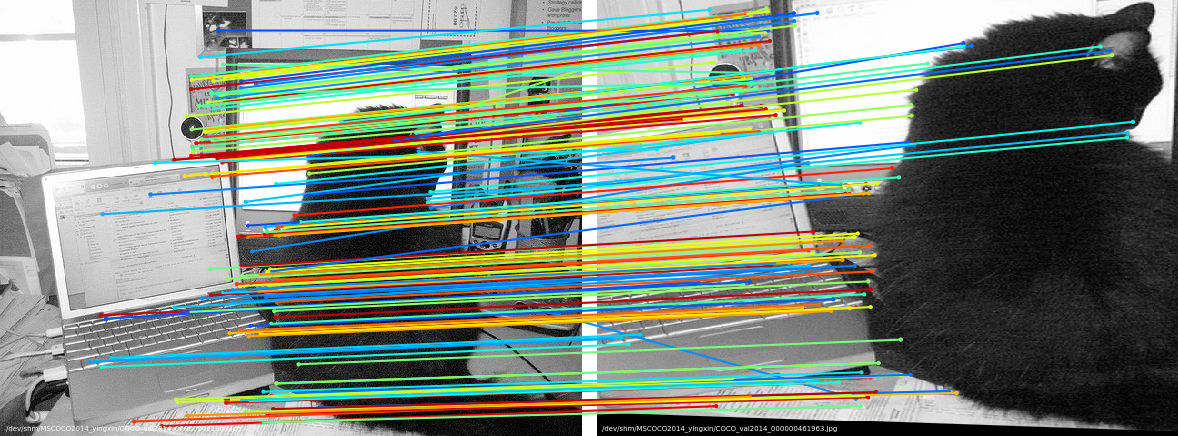

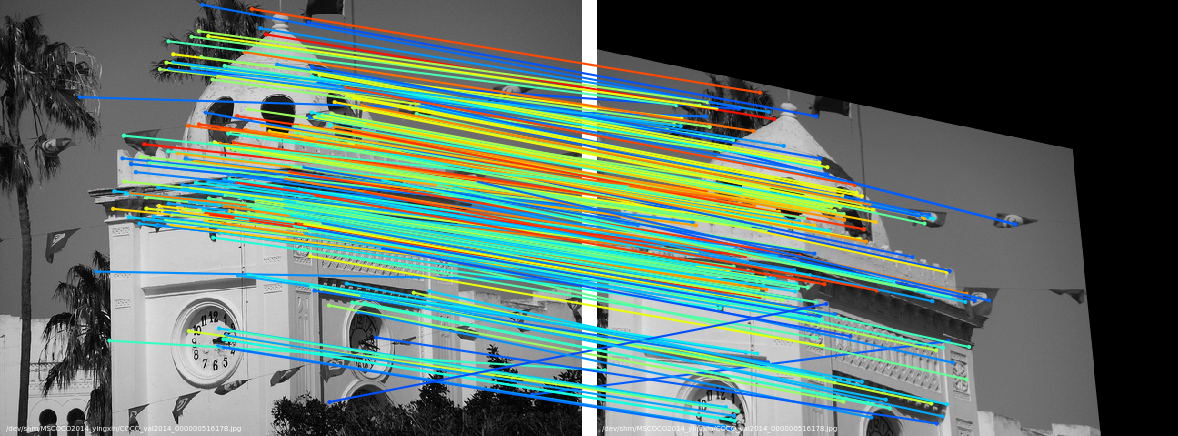

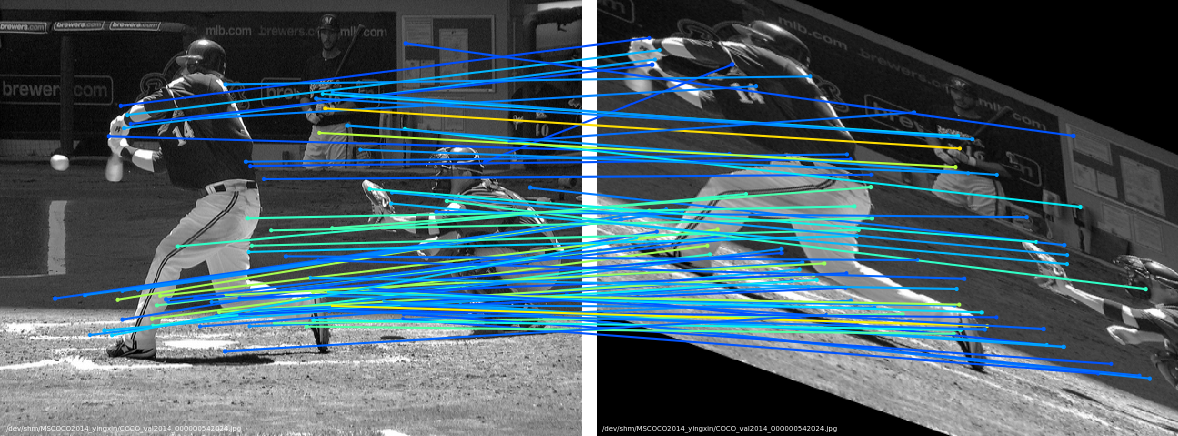

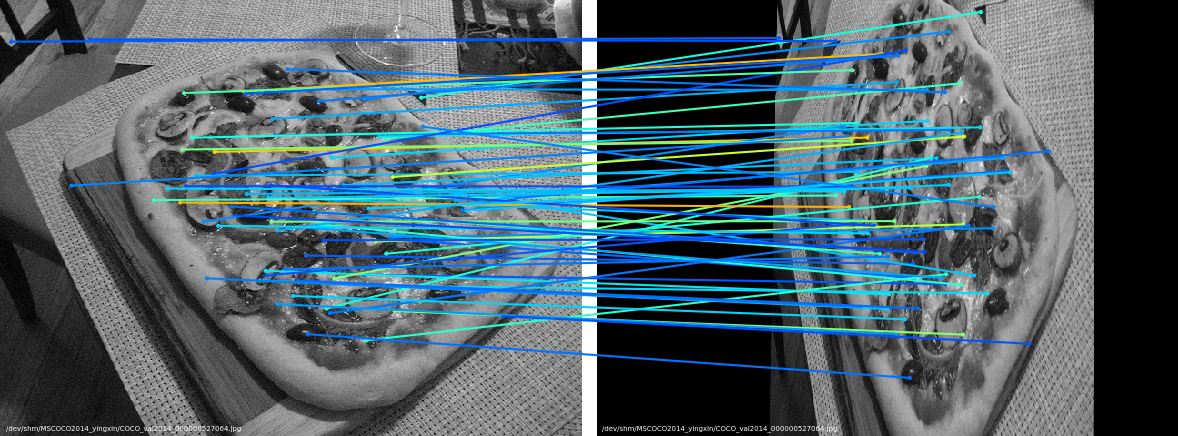

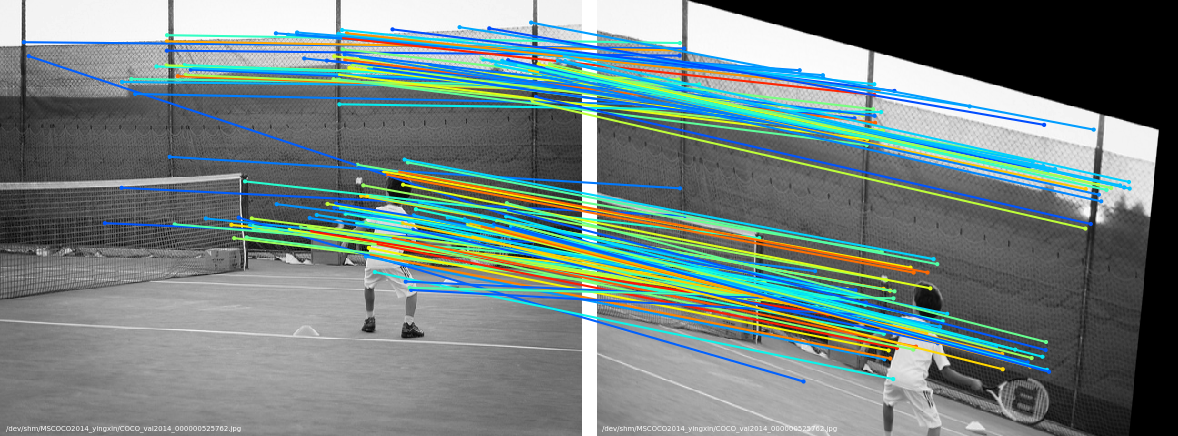

The matches are colored by their predicted confidence in a jet colormap (Red: more confident, Blue: less confident).

Visualizations like the ones above are written to `dump_match_pairs/` by default.
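The following minimal sketch produces this kind of visualization. It assumes grayscale NumPy images and matched keypoint coordinates. Each confidence is mapped through Matplotlib's `jet` colormap, so 0 is dark blue and 1 is dark red, and the two images are drawn side by side with one coloured line per match. It is not the repo's plotting code, and `match_colors` and `plot_matches` are hypothetical names.

```python
import numpy as np
import matplotlib
matplotlib.use("Agg")  # render off-screen so no display is needed
import matplotlib.pyplot as plt
from matplotlib import cm

def match_colors(confidence):
    """Map confidences in [0, 1] to RGBA colours: low -> blue, high -> red."""
    conf = np.clip(np.asarray(confidence, dtype=float), 0.0, 1.0)
    return cm.jet(conf)  # shape (K, 4)

def plot_matches(img0, img1, kpts0, kpts1, confidence, out_path):
    """Draw both grayscale images side by side with jet-coloured match lines."""
    h = max(img0.shape[0], img1.shape[0])
    canvas = np.zeros((h, img0.shape[1] + img1.shape[1]))
    canvas[:img0.shape[0], :img0.shape[1]] = img0
    canvas[:img1.shape[0], img0.shape[1]:] = img1
    fig, ax = plt.subplots()
    ax.imshow(canvas, cmap="gray")
    for (x0, y0), (x1, y1), color in zip(kpts0, kpts1, match_colors(confidence)):
        # Shift the second image's x-coordinates by the width of the first image.
        ax.plot([x0, x1 + img0.shape[1]], [y0, y1], color=color, linewidth=1)
    ax.axis("off")
    fig.savefig(out_path, bbox_inches="tight")
    plt.close(fig)
```

To mimic the output location described above, save to a path such as `dump_match_pairs/pair.png`, with the extension matching `--viz_extension`.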