This package is what the name suggests: Meta's segment-anything wrapped in a ROS 2 node. The wrapper offers:

- ROS 2 services for segmenting images using point and box queries.

- A Python client which handles the serialization of queries.

Installation is easy:

- Start by cloning this package into your ROS 2 environment and build it via:

colcon build --symlink-install

- Install SAM by running:

pip install git+https://github.com/facebookresearch/segment-anything.git

Run the SAM ROS 2 node using:

ros2 launch ros2_sam server.launch.py # will download SAM models if not already downloaded

The node has three parameters:

- checkpoint_dir: directory containing SAM model checkpoints. If empty, models will be downloaded automatically.

- model_type: SAM model to use, defaults to vit_h. Check the SAM documentation for options.

- device: whether to use CUDA and which device, defaults to cuda. Use cpu if you have no CUDA. To use a specific GPU, set something like cuda:1.
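The three forms of the device parameter described above can be illustrated with a small check. This is only a sketch: parse_device is a hypothetical helper that is not part of ros2_sam, and it assumes the parameter uses exactly the strings named here (cpu, cuda, or cuda:N).

```python
import re
from typing import Optional, Tuple


def parse_device(device: str) -> Tuple[str, Optional[int]]:
    """Split a device string into (kind, GPU index or None).

    Hypothetical helper, not part of ros2_sam. It only accepts the three
    forms mentioned in the parameter description: cpu, cuda, cuda:N.
    """
    text = device.strip()
    if text == "cpu":
        return "cpu", None
    match = re.fullmatch(r"cuda(?::(\d+))?", text)
    if match is None:
        raise ValueError(f"unsupported device string: {device!r}")
    index = match.group(1)
    return "cuda", (int(index) if index is not None else None)
```

For example, parse_device("cuda:1") yields the kind "cuda" and the index 1, while a string such as "gpu" raises a ValueError.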

The node currently offers a single service ~/segment, which can be called to segment an image.

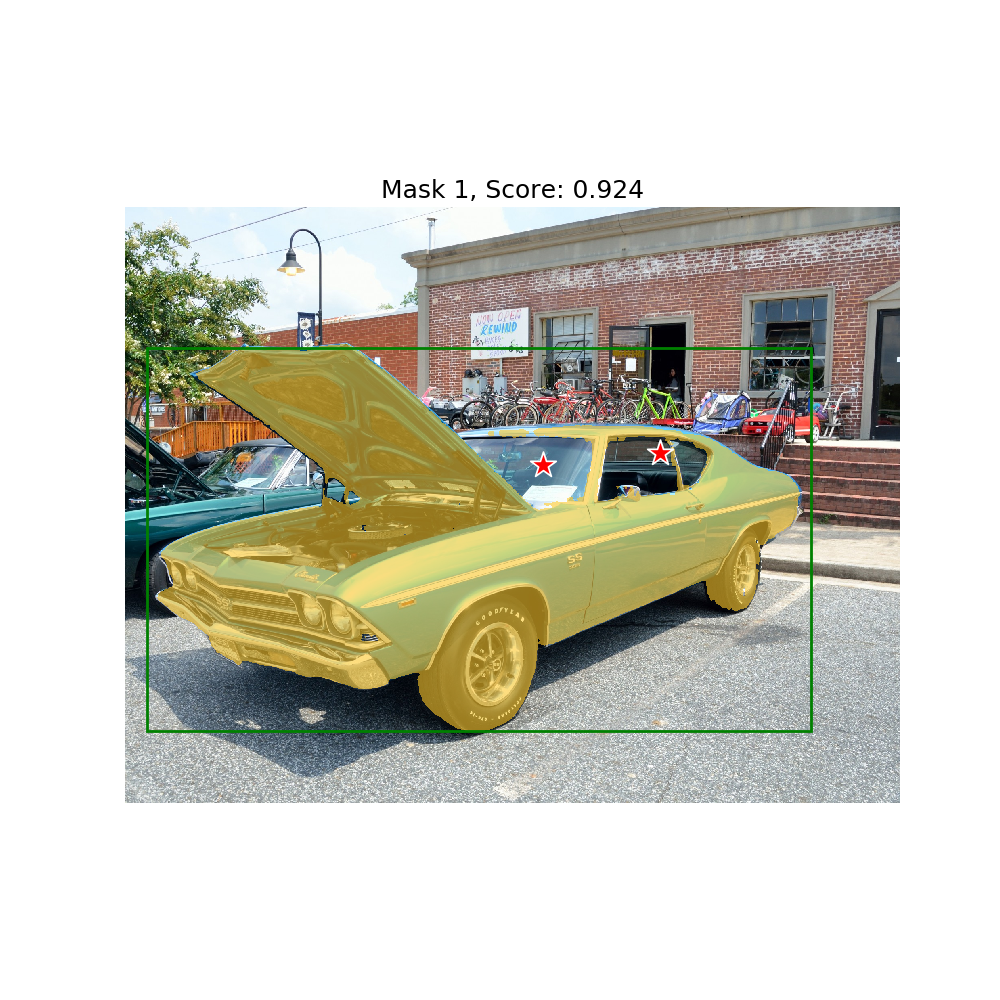

You can test SAM by starting the node and then running ros2 run ros2_sam sam_client_node. This should yield the following result:

ros2_sam offers a single service ~/segment of the type ros2_sam_msgs/srv/Segmentation. The service definition is

sensor_msgs/Image image # Image to segment

geometry_msgs/Point[] query_points # Points to start segmentation from

int32[] query_labels # Mark points as positive or negative samples

std_msgs/Int32MultiArray boxes # Boxes can only be positive samples

bool multimask # Generate multiple masks

bool logits # Send back logits

---

sensor_msgs/Image[] masks # Masks generated for the query

float32[] scores # Scores for the masks

sensor_msgs/Image[] logits # Logit activations of the masks

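The service request takes the input image, the point prompts with their corresponding labels, and the box prompts, plus two flags: multimask asks for multiple masks and logits asks for the logit activations to be sent back. The service response contains the segmentation masks, their confidence scores and the logit activations of the masks.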
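Because points and labels travel as two parallel arrays, it can help to check them before building a request. The following is a minimal sketch under stated assumptions: check_point_prompts and flatten_boxes are hypothetical helpers, not part of ros2_sam. Labels follow the SAM convention (1 for a positive point, 0 for a negative one). The box layout is an assumption: each box is taken to be (x_min, y_min, x_max, y_max), flattened row by row, which may differ from how the package actually fills the Int32MultiArray.

```python
from typing import List, Sequence


def check_point_prompts(points: Sequence[Sequence[float]],
                        labels: Sequence[int]) -> None:
    """Raise ValueError if points and labels do not form a valid prompt."""
    if len(points) != len(labels):
        raise ValueError(f"{len(points)} points but {len(labels)} labels")
    for point in points:
        if len(point) != 2:
            raise ValueError(f"expected an (x, y) pair, got {point!r}")
    for label in labels:
        if label not in (0, 1):
            raise ValueError(f"label must be 0 or 1, got {label!r}")


def flatten_boxes(boxes: Sequence[Sequence[int]]) -> List[int]:
    """Flatten (x_min, y_min, x_max, y_max) boxes into one list of ints.

    The flat row-by-row layout is an assumption made for illustration.
    """
    flat: List[int] = []
    for box in boxes:
        if len(box) != 4:
            raise ValueError(f"expected 4 box coordinates, got {box!r}")
        x_min, y_min, x_max, y_max = box
        if x_max <= x_min or y_max <= y_min:
            raise ValueError(f"box has no area: {box!r}")
        flat.extend(int(value) for value in box)
    return flat
```

Running such checks on the caller side surfaces a mismatched prompt before the image is serialized and sent to the node.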

To learn more about the types and use of different queries, please refer to the original SAM tutorial.

If you don't feel like assembling the service calls yourself, you can use the ROS 2 SAM client, which conveniently wraps them.

Initialize the client with the service name of the SAM segmentation service:

from ros2_sam import SAMClient

sam_client = SAMClient("sam_client", service_name="sam_server/segment")

Call the segment method with the input image, the input prompt points and their corresponding labels. This returns 3 segmentation masks for the object and their corresponding confidence scores:

import cv2

import numpy as np

img = cv2.imread('path/to/image.png')

points = np.array([[100, 100], [200, 200], [300, 300]])

labels = [1, 1, 0]  # 1 marks a positive sample, 0 a negative one

masks, scores = sam_client.sync_segment_request(img, points, labels)

Additional utilities for visualizing segmentation masks and input prompts:

import matplotlib.pyplot as plt

from ros2_sam import show_mask, show_points

show_mask(masks[0], plt.gca())

show_points(points, np.asarray(labels), plt.gca())
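Since the client returns several masks along with their scores, a common next step is to keep only the highest-scoring one. A minimal sketch, assuming scores is an indexable sequence of numbers aligned with masks. best_mask_index is a hypothetical helper and not part of ros2_sam:

```python
from typing import Sequence


def best_mask_index(scores: Sequence[float]) -> int:
    """Return the index of the highest score (first one wins on ties)."""
    if len(scores) == 0:
        raise ValueError("no scores returned")
    return max(range(len(scores)), key=lambda i: float(scores[i]))
```

With the variables from the client example, masks[best_mask_index(scores)] would then select the mask to pass to show_mask.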