Energy-based Potential Games for Joint Motion Forecasting and Control

Christopher Diehl1, Tobias Klosek1, Martin Krüger1, Nils Murzyn2, Timo Osterburg1 and Torsten Bertram1

1 Technical University Dortmund, 2 ZF Friedrichshafen AG, Artificial Intelligence Lab

Conference on Robot Learning (CoRL), 2023

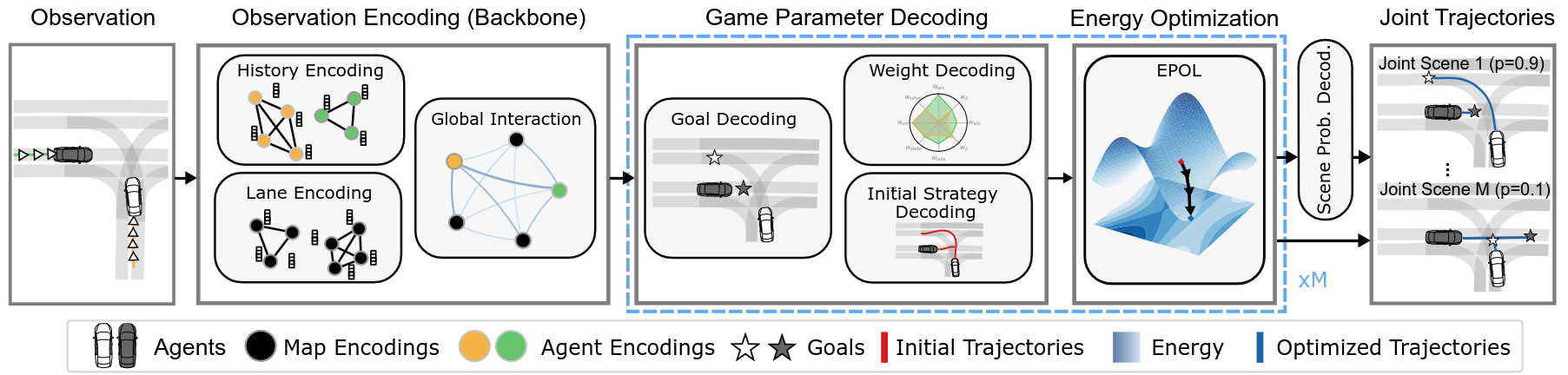

- Abstract: This work uses game theory as a mathematical framework to address interaction modeling in multi-agent motion forecasting and control. Despite its interpretability, applying game theory to real-world robotics, like automated driving, faces challenges such as unknown game parameters. To tackle these, we establish a connection between differential games, optimal control, and energy-based models, demonstrating how existing approaches can be unified under our proposed Energy-based Potential Game formulation. Building upon this, we introduce a new end-to-end learning application that combines neural networks for game-parameter inference with a differentiable game-theoretic optimization layer, acting as an inductive bias.

- This repository includes the code for training and evaluating the proposed Energy-based Potential Game approach (EPO) and another joint prediction baseline (see +SC in the paper) on the Waymo Open Motion Dataset.

We decompose the modeling of multi-agent interactions into two subtasks: (1) A neural network encodes the scene (agent histories and map information) and models global interactions. The resulting encoding is used to decode the game parameters and initial control sequences of all agents. (2) We construct an energy (joint cost) from the inferred game parameters and minimize it with gradient-based optimization, starting from the predicted initialization. We run one energy optimization per mode in parallel, which yields different future scene evolutions.
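A toy, self-contained sketch of step (2) follows. It is not the repository's implementation: the game parameters and initial controls are hand-set here instead of being decoded by a network, the agents are hypothetical 1D single integrators, the cost terms (control effort, goal reaching, and a shared Gaussian proximity penalty) are illustrative assumptions, and gradients come from central finite differences rather than automatic differentiation. It only mirrors the structure described above: an energy built from game parameters, minimized by gradient descent from a given initialization, once per mode.

```python
import math
from typing import Callable, List, Sequence, Tuple

Controls = List[List[float]]  # controls[i][t]: velocity command of agent i at step t


def rollout(x0: float, u: Sequence[float], dt: float) -> List[float]:
    """Integrate 1D single-integrator dynamics x_{t+1} = x_t + u_t * dt."""
    xs = [x0]
    for ut in u:
        xs.append(xs[-1] + ut * dt)
    return xs


def joint_energy(controls: Controls, x0s: Sequence[float], goals: Sequence[float],
                 theta: Sequence[Tuple[float, float]], dt: float = 0.1,
                 w_int: float = 1.0, sigma: float = 1.0) -> float:
    """Potential-game energy: per-agent costs weighted by game parameters plus a shared term."""
    trajs = [rollout(x0, u, dt) for x0, u in zip(x0s, controls)]
    total = 0.0
    for i, (xs, u) in enumerate(zip(trajs, controls)):
        w_effort, w_goal = theta[i]  # hypothetical per-agent game parameters
        total += w_effort * sum(ut * ut for ut in u)  # control effort
        total += w_goal * (xs[-1] - goals[i]) ** 2    # terminal goal reaching
    # Symmetric proximity penalty shared by every agent's cost; sharing it is what
    # allows the whole game to be summarized by this single potential function.
    for i in range(len(trajs)):
        for j in range(i + 1, len(trajs)):
            for xi, xj in zip(trajs[i][1:], trajs[j][1:]):
                total += w_int * math.exp(-((xi - xj) ** 2) / sigma ** 2)
    return total


def minimize(energy_fn: Callable[[Controls], float], init: Controls,
             lr: float = 0.05, steps: int = 200, eps: float = 1e-5) -> Controls:
    """Gradient descent on the joint controls using central finite differences."""
    u = [list(row) for row in init]  # copy so the initialization stays untouched
    for _ in range(steps):
        grad = [[0.0] * len(row) for row in u]
        for i, row in enumerate(u):
            for t, value in enumerate(row):
                row[t] = value + eps
                e_plus = energy_fn(u)
                row[t] = value - eps
                e_minus = energy_fn(u)
                row[t] = value  # restore the original entry
                grad[i][t] = (e_plus - e_minus) / (2 * eps)
        for i, row in enumerate(u):
            for t in range(len(row)):
                row[t] -= lr * grad[i][t]
    return u


# Toy scene: two agents on a line, 5-step horizon, two modes with different initializations.
x0s, goals = [0.0, 3.0], [2.0, 1.0]
theta = [(0.1, 10.0), (0.1, 10.0)]


def energy(controls: Controls) -> float:
    return joint_energy(controls, x0s, goals, theta)


mode_inits = [[[0.0] * 5, [0.0] * 5], [[4.0] * 5, [-4.0] * 5]]
solutions = [minimize(energy, init) for init in mode_inits]  # one optimization per mode
initial_energies = [energy(init) for init in mode_inits]
final_energies = [energy(sol) for sol in solutions]
```

In a real model the per-mode optimizations would be batched on the GPU rather than looped over sequentially; the loop here only keeps the sketch dependency-free.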

For installation, please follow these steps:

- Download the Waymo Open Motion Dataset (version 1.2.0) from the official Waymo Open Dataset website.

- Clone the repository

git clone https://github.com/rst-tu-dortmund/diff_epo_planner.git

- Create a new conda environment from the provided environment file (epo_waymo.yml)

conda env create -f epo_waymo.yml

- Activate the environment.

- Install Theseus (Version 0.1.4)

pip install theseus-ai==0.1.4

The environment was tested on machines running Ubuntu 18.04 with an NVIDIA RTX 3090 and Ubuntu 22.04 with an NVIDIA RTX 2080 Super.
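After activating the environment, a quick sanity check can confirm the pinned Theseus version and GPU visibility. This is a hypothetical helper script, not part of the repository; it assumes Python 3.8+ (for `importlib.metadata`) and that PyTorch is provided by epo_waymo.yml, since Theseus builds on PyTorch.

```python
import importlib.metadata
import platform
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return importlib.metadata.version(package)
    except importlib.metadata.PackageNotFoundError:
        return None


def check_environment(expected_theseus: str = "0.1.4") -> dict:
    """Collect version and GPU information relevant to this setup."""
    report = {
        "python": platform.python_version(),
        "theseus-ai": installed_version("theseus-ai"),
        "torch": installed_version("torch"),
        "cuda_available": None,
    }
    report["theseus_ok"] = report["theseus-ai"] == expected_theseus
    try:
        import torch  # imported lazily so the check also runs without PyTorch
        report["cuda_available"] = torch.cuda.is_available()
    except ImportError:
        pass
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```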

The first step is to preprocess the downloaded dataset. This repository provides a preprocessing script for the Waymo Open Motion Dataset in the data/utils subfolder; it writes the processed data to a new folder. Preprocessing significantly reduces training time.

cd data/utils

python preprocess_waymo.py --data_path=PATH_TO_WAYMO_DATASET --save_path=PATH_TO_SAVE_PROCESSED_DATA --configs=PATH_TO_JSON/configs_eponet.json --n_parallel=NUMBER_OF_PARALLEL_PROCESSES

- Setting n_parallel to a high value reduces the runtime of the preprocessing.

- data_path is the path to the folder containing the tfrecord files (e.g., YOUR_PATH/waymo/v_1_2_0/scenario/training).

- Please run the script for the training subfolder and the validation_interactive subfolder.
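Since the script has to be run once per split, a small wrapper can generate both calls. This is a hypothetical helper, not part of the repository: the flag names come from the command above, the scenario folder layout follows the data_path example, and writing each split to its own subfolder of an output root is an assumption. Use absolute paths, because the commands are executed from data/utils.

```python
import os
import shlex
import subprocess
from pathlib import Path
from typing import List

SPLITS = ["training", "validation_interactive"]


def build_preprocess_cmd(data_path: Path, save_path: Path, config_path: Path,
                         n_parallel: int) -> List[str]:
    """Assemble one preprocess_waymo.py call with the documented flags."""
    return [
        "python", "preprocess_waymo.py",
        f"--data_path={data_path}",
        f"--save_path={save_path}",
        f"--configs={config_path}",
        f"--n_parallel={n_parallel}",
    ]


def preprocess_all(scenario_root: Path, output_root: Path, config_path: Path,
                   dry_run: bool = True) -> List[List[str]]:
    """Build (and optionally run) the preprocessing command for both splits."""
    n_parallel = os.cpu_count() or 1  # one worker process per CPU core
    cmds = []
    for split in SPLITS:
        cmd = build_preprocess_cmd(scenario_root / split, output_root / split,
                                   config_path, n_parallel)
        cmds.append(cmd)
        if dry_run:
            print(shlex.join(cmd))  # only show the command
        else:
            # Assumes the current working directory is the repository root.
            subprocess.run(cmd, check=True, cwd="data/utils")
    return cmds


if __name__ == "__main__":
    # Placeholder paths; replace them with your own absolute paths.
    preprocess_all(Path("/data/waymo/v_1_2_0/scenario"), Path("/data/waymo_processed"),
                   Path("/path/to/configs_eponet.json"))
```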

- Both models can be trained with the following command:

python train_scene_prediction.py --configs=configs_MODELNAME.json

- Please ensure that you specify a valid model path. The dataset_waymo folder specified in the JSON config must contain the following subfolders, where each data folder holds the preprocessed data:

dataset_waymo/

├── train/

│   └── data/

└── val/

    └── data/

- Please also set the storage_path to a folder where the checkpoints will be stored on your device.

- We use Weights & Biases for logging; you can register an account for free. The W&B entity can be set in configs_MODELNAME.json.
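Before launching training, the folder layout above can be checked with a short script. This is a hypothetical helper, not part of the repository: it takes the dataset root (the folder you set as dataset_waymo) and the checkpoint folder (storage_path) as command-line arguments instead of parsing the JSON config, whose exact structure is not described here.

```python
import sys
from pathlib import Path
from typing import List

# Subfolders that the training instructions expect under dataset_waymo.
REQUIRED_SUBDIRS = ["train/data", "val/data"]


def missing_subdirs(dataset_root: Path) -> List[str]:
    """Return the required subfolders that do not exist under dataset_root."""
    return [sub for sub in REQUIRED_SUBDIRS if not (dataset_root / sub).is_dir()]


def check_training_paths(dataset_root: Path, storage_path: Path) -> List[str]:
    """Collect human-readable problems with the dataset and checkpoint folders."""
    problems = [f"missing folder: {dataset_root / sub}" for sub in missing_subdirs(dataset_root)]
    for sub in REQUIRED_SUBDIRS:
        folder = dataset_root / sub
        if folder.is_dir() and not any(folder.iterdir()):
            problems.append(f"empty folder (preprocessing not run?): {folder}")
    if not storage_path.is_dir():
        problems.append(f"checkpoint folder not found: {storage_path}")
    return problems


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage (hypothetical script name): python check_paths.py DATASET_ROOT STORAGE_PATH
    issues = check_training_paths(Path(sys.argv[1]), Path(sys.argv[2]))
    print("\n".join(issues) if issues else "dataset layout looks fine")
```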

We also provide a script for evaluating trained models. Please set the model_path in the config accordingly. Trained checkpoints (eponet or scnet) can be evaluated with:

python evaluate_scene_prediction.py --configs=configs_INSERT_MODEL_NAME_HERE.json
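This README does not list which metrics evaluate_scene_prediction.py reports. To illustrate why joint prediction is evaluated at the scene level, here is a sketch of a scene-level (joint) minADE, where a single mode must fit all agents at once, next to a marginal variant that lets each agent pick its own best mode. Both are simplified assumptions (no validity masks, no time-step subsampling) and not the official Waymo metrics implementation.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Trajectory = Sequence[Point]  # one (x, y) position per future time step


def scene_ade(pred: Sequence[Trajectory], gt: Sequence[Trajectory]) -> float:
    """Average displacement over all agents and time steps of one joint mode."""
    errors = [math.hypot(px - gx, py - gy)
              for p_traj, g_traj in zip(pred, gt)
              for (px, py), (gx, gy) in zip(p_traj, g_traj)]
    return sum(errors) / len(errors)


def joint_min_ade(modes: Sequence[Sequence[Trajectory]],
                  gt: Sequence[Trajectory]) -> Tuple[float, List[float]]:
    """Scene-level minADE: the same mode index is used for every agent."""
    per_mode = [scene_ade(mode, gt) for mode in modes]
    return min(per_mode), per_mode


def marginal_min_ade(modes: Sequence[Sequence[Trajectory]],
                     gt: Sequence[Trajectory]) -> float:
    """Per-agent best mode, averaged over agents (ignores scene consistency)."""
    per_agent_best = [min(scene_ade([mode[i]], [gt[i]]) for mode in modes)
                      for i in range(len(gt))]
    return sum(per_agent_best) / len(per_agent_best)


gt = [[(0.0, 0.0), (1.0, 0.0)], [(0.0, 1.0), (1.0, 1.0)]]
mode_a = [[(0.0, 1.0), (1.0, 1.0)], [(0.0, 2.0), (1.0, 2.0)]]  # both agents off by 1 m
mode_b = [[(0.0, 0.0), (1.0, 0.0)], [(3.0, 5.0), (4.0, 5.0)]]  # agent 0 exact, agent 1 off by 5 m
joint, per_mode = joint_min_ade([mode_a, mode_b], gt)  # joint == 1.0, per_mode == [1.0, 2.5]
marginal = marginal_min_ade([mode_a, mode_b], gt)      # 0.5, mixes modes across agents
```

The marginal value is lower because it combines agent 0 from one mode with agent 1 from another, a scene that was never predicted as a whole.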

Due to the terms of use of the Waymo Open Motion Dataset, we are not able to provide pre-trained checkpoints.

Please refer to results.

Please feel free to open an issue or contact us (christopher.diehl@tu-dortmund.de) if you have any questions or suggestions.

If you find our repository useful, please consider citing our papers with the following BibTeX entries and giving us a star ⭐

@InProceedings{Diehl2023CoRL,

title={Energy-based Potential Games for Joint Motion Forecasting and Control},

author={Diehl, Christopher and Klosek, Tobias and Krueger, Martin and Murzyn, Nils and Osterburg, Timo and Bertram, Torsten},

booktitle={Conference on Robot Learning (CoRL)},

year={2023}

}

@InProceedings{Diehl2023ICMLW,

title={On a Connection between Differential Games, Optimal Control, and Energy-based Models for Multi-Agent Interactions},

author={Diehl, Christopher and Klosek, Tobias and Krueger, Martin and Murzyn, Nils and Bertram, Torsten},

booktitle={International Conference on Machine Learning, Frontiers4LCD Workshop},

year={2023}

}

This work was supported by the Federal Ministry for Economic Affairs and Climate Action on the basis of a decision by the German Bundestag and the European Union in the project KISSaF (AI-based Situation Interpretation for Automated Driving).