WORK IN PROGRESS! 🔺🔺🔺

This space is for the project for the SDSC Hackathon on Gen AI 2023. HyperLearn stands for Hyper-Personalized Learn Optimization Tool. It is a working title - any better name suggestions are very welcome 😉

You can contact me at the Gmail address: ruiz.crp

See the link https://sdsc-hackathons.ch/projectPage?projectRef=DHepetK0DLQ6cRMVcvPb|rt0UoB2tZng7d5TSUtti for the project proposal and a description of the idea.

There are a number of issues in this project, which will be elaborated here in preparation for the hackathon:

- How to tackle the Hyper-Personalization.

- Data: Open Educational Resources for fine-tuning?

- Quality of data and of answer: Knowledge-Graph solution?

- What would be the plan for the hackathon?


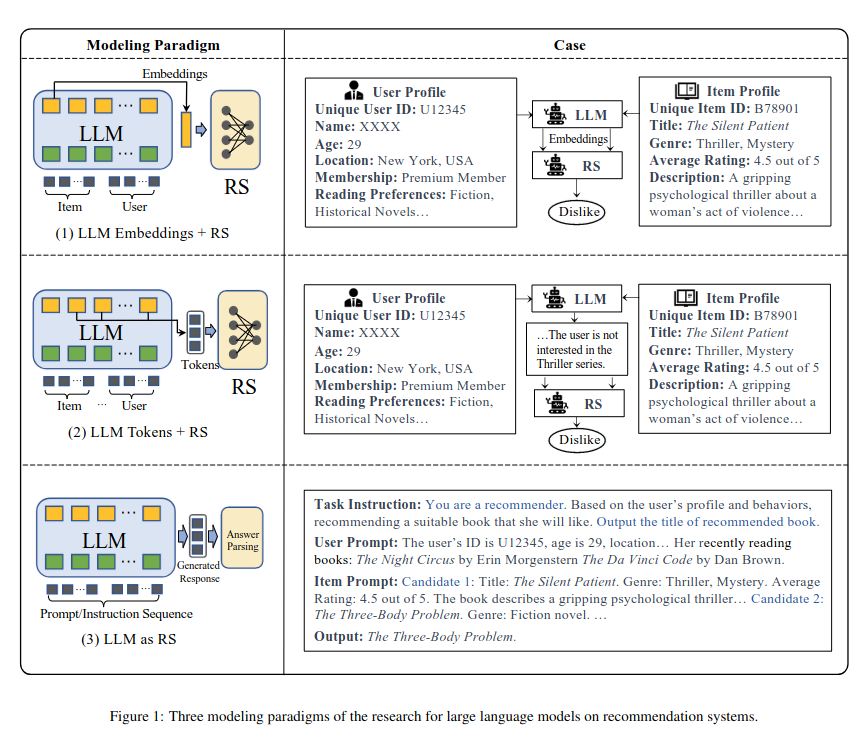

In the pre-LLM way, a classical recommender system would have been used (see also below for some links). A good starting point for an LLM-based solution might be the figure taken from Wu et al., published relatively recently in May 2023. It shows three modeling paradigms for combining LLMs with a recommender system. In the third option, the LLM is itself the recommender system. I think this third option is a good minimal solution for this hackathon: it relies heavily on putting some user profile information into the prompt in advance (e.g. the information that the user likes soccer and has issues with past continuous tenses). That makes a minimum quite feasible, but it is not very satisfying if we want to build something advanced or interesting. So if we can do more than that, this would be perfect. The options are discussed in more detail below.
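To make the third option more concrete, here is a minimal sketch of how profile information could be turned into a prompt. Everything in it is an assumption for illustration: the `LearnerProfile` class, its fields, the CEFR level label and the prompt wording are hypothetical, and the resulting string would still have to be sent to whichever chat model we end up using.

```python
from dataclasses import dataclass, field


@dataclass
class LearnerProfile:
    """Hypothetical container for what we know about a learner."""
    interests: list[str] = field(default_factory=list)
    weak_topics: list[str] = field(default_factory=list)
    level: str = "B1"  # assumed CEFR level label


def build_recommender_prompt(profile: LearnerProfile, n_exercises: int = 3) -> str:
    """Turn the profile into a plain-text prompt for a chat LLM."""
    interests = ", ".join(profile.interests) or "unknown"
    weak = ", ".join(profile.weak_topics) or "unknown"
    return (
        "You are a language teacher who recommends exercises.\n"
        f"Learner level: {profile.level}\n"
        f"Learner interests: {interests}\n"
        f"Learner struggles with: {weak}\n"
        f"Suggest {n_exercises} exercises that practise the weak topics "
        "using the learner's interests as context."
    )


profile = LearnerProfile(interests=["soccer"], weak_topics=["past continuous tense"])
prompt = build_recommender_prompt(profile)
print(prompt)
```

The point of keeping the profile in a small structure is that it can later be filled from exercise results instead of being typed in by hand.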

Besides Wu et al., the following literature might provide a good overview of the topic: this might be the right place to find a solution, and the Chen et al. July 2023 paper also seems like a crucial overview. This paper by Fao et al. July 2023 also seems to be a good start.

The following structure is currently used (maybe you have more ideas!):

- Minimal solution: LLM as Recommender System

- LLM Tokens plus Recommender System

- LLM Embeddings plus Recommender System

- Recommender Systems in a pre-LLM way

It seems that, for example, RecLLM by Google's team Friedman et al. May 2023 used such an approach in a more elaborate chat-like YouTube recommendation system (https://arxiv.org/pdf/2305.07961.pdf). It requires prompting elements into the memory, which is why one of the solutions is called MemPrompt by Madaan et al. February 2023. ChatREC by Gao et al. May 2023 is also a solution in that direction. See also this blog post, which uses T5 as a simple fine-tuned LLM recommender that takes a list of purchases as input and outputs a list of possible choices based on semantic context.

Weakness: Among other things, such a minimal solution would be bound by the token length: it can only keep a certain number of tokens as information. This would hamper scalability, particularly if you do many exercises.
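One simple way to live with the token limit is to keep only the most recent part of the exercise history that still fits. The sketch below illustrates this under loud assumptions: `approx_tokens` just counts whitespace-separated words and is only a stand-in for a real tokenizer, and the history strings and the budget are made up.

```python
def approx_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def fit_history_to_budget(history: list[str], budget: int) -> list[str]:
    """Keep the most recent entries whose combined size fits the budget."""
    kept: list[str] = []
    used = 0
    for entry in reversed(history):  # walk from newest to oldest
        cost = approx_tokens(entry)
        if used + cost > budget:
            break
        kept.append(entry)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "Exercise 1: its vs it's, 2 of 5 correct",
    "Exercise 2: past continuous, 1 of 5 correct",
    "Exercise 3: past continuous, 4 of 5 correct",
]
recent = fit_history_to_budget(history, budget=16)
print(recent)
```

This throws away older results completely, which is exactly the scalability problem described above; summarising old exercises instead of dropping them would be one possible refinement.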

This could be a cool solution: TALLRec by Bao et al. April 2023 that even comes with code! They call their approach Large Recommendation Language Model (LRLM), which fits, right? 😍 "Elaborately, TALLRec structures the recommendation data as instructions and tunes the LLM via an additional instruction tuning process. Moreover, given that LLM training necessitates a substantial amount of computing resources, TALLRec employs a lightweight tuning approach to efficiently adapt the LLMs to the recommendation task. Specifically, we apply the TALLRec framework on the LLaMA-7B model with a LoRA architecture, which ensures the framework can be deployed on an Nvidia RTX 3090 (24GB) GPU" (p.2). The text also mentions that they took a history of 10 items. This could be tried with, for example, a history of 10 language exercises.
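To get a feeling for what "structuring recommendation data as instructions" could mean for language exercises, here is a rough sketch. It is not taken from the TALLRec code: the instruction / input / output field names follow the layout commonly used for instruction tuning, and the function name, the wording and the exercise titles are all assumptions.

```python
import json

HISTORY_LENGTH = 10  # history size mentioned in the TALLRec paper


def make_instruction_sample(history, target, is_good_fit):
    """Build one training sample from (exercise_title, solved_correctly) pairs."""
    recent = history[-HISTORY_LENGTH:]  # keep only the last 10 exercises
    mastered = [title for title, correct in recent if correct]
    struggled = [title for title, correct in recent if not correct]
    return {
        "instruction": (
            "Given the exercises the learner mastered and struggled with, "
            "decide whether the target exercise is a useful next step. "
            'Answer "Yes." or "No.".'
        ),
        "input": (
            f"Mastered: {json.dumps(mastered)}\n"
            f"Struggled: {json.dumps(struggled)}\n"
            f"Target exercise: {json.dumps(target)}"
        ),
        "output": "Yes." if is_good_fit else "No.",
    }


# Made-up history of 12 exercises; every second one was solved correctly.
history = [(f"Past continuous drill {i}", i % 2 == 0) for i in range(12)]
sample = make_instruction_sample(history, "Past continuous gap-fill", True)
print(json.dumps(sample, indent=2))
```

A list of such samples, written out as JSON, would be the kind of file a LoRA fine-tuning script could consume; where the yes/no labels come from is an open question for us.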

A similar solution could be based on a sequence of items. This is the approach used by GenRec, Ji et al. July 2023. Another solution, based on ranking, is PALR by Yang et al. May 2023.

Definition by Chen et al. 2023: "These systems aim to predict and suggest items of interest to individual users, such as movies, products, or articles, based on their historical interactions and preferences".

See for example Facebook's team, Naumov et al. 2019. This is a solution (available in a GitHub repo for PyTorch) that was built to increase click rates through personalization. How to apply that to LLMs? An overview also seems to be available from Da'u and Salim 2020, although I cannot access the paper.

More sources:

- https://github.com/AiFangzhe/Exercise-Recommendation-System

- https://www.aimsciences.org/article/doi/10.3934/steme.2022011

- https://www.kaggle.com/datasets/junyiacademy/learning-activity-public-dataset-by-junyi-academy?select=Log_Problem.csv

On the one hand, there are structured datasets that could easily be applied, such as Grammatical Error Correction (GEC) corpora. I guess that this could be a good minimal solution for the hackathon. On the other hand, Open Educational Resources in the form of exercises are usually unstructured, and the quality varies widely. Note also that there might be licensing issues when using data - I'm trying to always state the license below. At least two large GEC corpora, in English and Russian, are available and license/permission-checked. I will try to structure the data sources here into several kinds:

- Grammatical Error Corrections

- Exercises: Text. Structured.

- Exercises: Text. Unstructured.

- Other probably useful sources

- Maybe unusable but interesting nevertheless

GEC corpora are structured datasets that are often created by universities. They contain sentences and some sort of error coding. Unfortunately, it seems that the annotation methods are not consistent among them.

- NUS Corpus of Learner English (NUCLE). I filled out the requested form and am waiting for the reply. Also a non-commercial license.

- CoNLL-2014 Shared task. Data can be downloaded here. There is also an interesting paper on that here.

- A corpus was made for the BEA 2019 shared task, see also W&I+LOCNESS, data to download.

- Cambridge Learner Corpus, see paper here and data here

- For those three academic links above, this github-repo might help to convert the data.

- The C4_200M Synthetic Dataset for GEC was published by Google. Github repo is here, and data is on kaggle (approx 10 GB). But Google's C4 dataset is also required to make the match with the hashes in it (806 GB).

- There is a nice list here with currently 14 GEC datasets, among them JFLEG.

- Unclear are datasets such as EFCAMDAT, described here and allegedly (didn't work for me) available here.

- Russian grammatical error correction RULEC-GEC. We have the data available: I filled out the requested form and the responsible person authorized us. CC BY-SA 4.0.

- For Russian there is also ReLco which is openly available under MIT license

There is also a tutorial here on how to fine-tune a transformer model on GEC.
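Several of the GEC corpora above (for example the CoNLL-2014 data) are distributed in the so-called M2 format, where an `S` line holds the tokenized sentence and each `A` line holds one annotated edit. As a sketch of what preparing such data could look like, the code below parses M2 text and applies one annotator's edits to get the corrected sentence. The two sample sentences are made up, and treating both an empty correction and `-NONE-` as a deletion is an assumption that should be checked against each corpus.

```python
SAMPLE_M2 = """\
S This are a sentence .
A 1 2|||SVA|||is|||REQUIRED|||-NONE-|||0

S She go to school yesterday .
A 1 2|||Vt|||went|||REQUIRED|||-NONE-|||0
"""


def parse_m2(text):
    """Yield (source_tokens, edits) for every sentence block."""
    for block in text.strip().split("\n\n"):
        lines = block.splitlines()
        tokens = lines[0][2:].split()  # drop the leading "S "
        edits = []
        for line in lines[1:]:
            if not line.startswith("A "):
                continue
            span, err_type, correction, *_rest, annotator = line[2:].split("|||")
            start, end = map(int, span.split())
            edits.append((start, end, err_type, correction, int(annotator)))
        yield tokens, edits


def apply_edits(tokens, edits, annotator=0):
    """Apply one annotator's edits and return the corrected sentence."""
    corrected = list(tokens)
    # Skip "noop" edits, which use -1 as their span.
    chosen = [e for e in edits if e[4] == annotator and e[0] >= 0]
    # Go from right to left so earlier token positions stay valid.
    for start, end, _type, correction, _ann in sorted(chosen, reverse=True):
        replacement = [] if correction in ("", "-NONE-") else correction.split()
        corrected[start:end] = replacement
    return " ".join(corrected)


pairs = [(" ".join(toks), apply_edits(toks, edits)) for toks, edits in parse_m2(SAMPLE_M2)]
for source, target in pairs:
    print(source, "->", target)
```

Pairs of source and corrected sentences like these are the usual input for fine-tuning a sequence-to-sequence model on GEC, and the error type on each `A` line could later feed the learner profile.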

Having, for example, news sources in several languages could help us develop some exercises ourselves (for example with Q/A generation based on texts, see below).

- I contacted Euronews to ask whether we could use their text material.

- Junyi Academy: Kaggle dataset for the optimization of math exercises. However, the actual math questions are not published (only the titles), so it does not seem useful if we really want to fine-tune: https://www.kaggle.com/datasets/junyiacademy/learning-activity-public-dataset-by-junyi-academy?select=Log_Problem.csv. CC BY-NC-SA 4.0.

An important issue is wrong answers. As mentioned in the proposal, I tried asking llama2 for simple Russian exercises. I have now also tried telling it that it is an English teacher and is here to teach me. The conversation was quite interesting. It did indeed start with some exercises, for example to spot the difference between "its" and "it's". But when I asked it to make more difficult exercises, it quickly started to hallucinate and created many wrong exercises and confusing answers.

The bottom line is: How to make sure that the questions and answers are correct?

Q/A generation could be used to create a learning setting where the person has to read a text (e.g. a newspaper article, as mentioned in the data sources), and comprehension questions are generated by the algorithm. I guess a source like this is already out of date, because llama2 is able to do that even without fine-tuning. Maybe we can pick a random article and then insert the text into the chat session?
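Inserting an article into the chat session could look roughly like the sketch below. The role/content message list is the layout many chat models accept, but the prompt wording and the function names are assumptions. The second function is a very simple guard against wrong answers: it only checks that a supporting quote returned by the model really appears in the article, so it can catch invented evidence but not a wrong answer that is attached to a real quote.

```python
def build_qa_messages(article: str, n_questions: int = 3) -> list[dict]:
    """Build chat messages that ask for questions grounded in the article."""
    system = (
        "You are a language teacher. Write comprehension questions about the "
        "article the user provides. For every question give the answer and an "
        "exact quote from the article that supports it."
    )
    user = f"Write {n_questions} questions about this article:\n\n{article}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


def normalize(text: str) -> str:
    """Lower-case the text and collapse all whitespace."""
    return " ".join(text.lower().split())


def is_grounded(quote: str, article: str) -> bool:
    """Return True if the non-empty quote occurs in the article."""
    cleaned = normalize(quote)
    return bool(cleaned) and cleaned in normalize(article)


article = "The match was postponed because it was raining heavily."
messages = build_qa_messages(article)
print(is_grounded("it was  raining Heavily", article))
print(is_grounded("it was snowing", article))
```

Questions whose quote fails the check could simply be discarded and regenerated.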

Please add here your thoughts. This is just a flexible proposal.

- Thinking and discussing together which solutions to use/try

- Download OER(?) data to S3 or another place

- Preparation of data to have it in a usable format

- Preparation of language model (llama2, mirage,...?)

- Fine-tuning of the model (recently saw this )

- Exploration of the solution

- Back to new exploration loops.. :-)

- Preparation of a demo-UI for the final presentation

There are many things still missing here.

Apparently Azure can be used to fine-tune e.g. llama2 directly, without needing to work with the transformer packages etc.? See here https://techcommunity.microsoft.com/t5/ai-machine-learning-blog/introducing-llama-2-on-azure/ba-p/3881233

We will need S3. If we get Google's C4 language corpus, we will need 1 TB for that alone.

Unclear how much GPU-power we will need for fine-tuning.

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.