This repository aims to be a well-documented implementation of lung nodule detection on the Luna16 dataset. The work is inspired by the ideas of "grt123", the first-place team at DSB2017.

Lung cancer is one of the most common cancers in the world. More people die as a result of lung cancer each year than from breast, colorectal, and prostate cancer combined. Lung nodule detection is an important step in detecting lung cancer. Much work has gone into building robust models, but the problem is not yet fully solved.

The Data Science Bowl (DSB for short) is the world's premier data science for social good competition, created in 2014 and presented by Booz Allen Hamilton and Kaggle. It brings together data scientists, technologists, and domain experts across industries to take on the world's challenges with data and technology. In DSB2017, the competition was held to find lung nodules. Team grt123 achieved the best results with a 3D U-Net-based, YOLO-style detector. In this repository, I approach the problem following their method and aim to improve on their results in some cases. I also aim to document it well, as a contribution to the literature.

My first goals are to make their implementation work on any GPU configuration (even with no GPU!) and to split the data pre-processing and augmentation, originally one large monolithic script, into two stages. I have mainly followed their paper. I hope this helps researchers. If you have any questions about the code, please send me an email.

The preparation code is implemented in the `prepare` package, which covers pre-processing and augmentation.

A Jupyter notebook tutorial covers the pre-processing steps. See also the `prepare._classes.CTScan.preprocess` method.

Another Jupyter notebook tutorial explains the augmentation techniques used in the method `prepare._classes.PatchMaker._get_augmented_patch`. Reviewing it is strongly recommended.
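As a rough illustration of the kind of steps those notebooks walk through, the sketch below clips a CT volume to a Hounsfield-unit window, rescales it to 8-bit, and then randomly flips a cubic patch while keeping the nodule center consistent. The window bounds (-1200 to 600 HU), the function names `window_hu` and `random_flip`, and the flip-only augmentation are assumptions made for illustration. They are not taken from `prepare._classes`, whose actual steps may differ.

```python
import numpy as np


def window_hu(volume, hu_min=-1200.0, hu_max=600.0):
    """Clip a CT volume to [hu_min, hu_max] and rescale it to uint8 in [0, 255].

    The default window is an assumption for this sketch.
    """
    clipped = np.clip(volume.astype(np.float32), hu_min, hu_max)
    scaled = (clipped - hu_min) / (hu_max - hu_min)
    return (scaled * 255.0).astype(np.uint8)


def random_flip(patch, center, rng):
    """Flip a 3D patch along randomly chosen axes and move the center to match."""
    center = np.asarray(center, dtype=np.float32).copy()
    for axis in range(3):
        if rng.random() < 0.5:
            patch = np.flip(patch, axis=axis)
            # After a flip, index i along this axis becomes (size - 1 - i).
            center[axis] = patch.shape[axis] - 1 - center[axis]
    return np.ascontiguousarray(patch), center


# Hypothetical toy volume standing in for a real scan.
rng = np.random.default_rng(0)
volume = rng.integers(-2000, 2000, size=(8, 8, 8)).astype(np.int16)
patch = window_hu(volume)
flipped, new_center = random_flip(patch, center=(1, 2, 3), rng=rng)
```

Updating the center together with the patch matters because the detector's target coordinates must still point at the nodule after augmentation.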

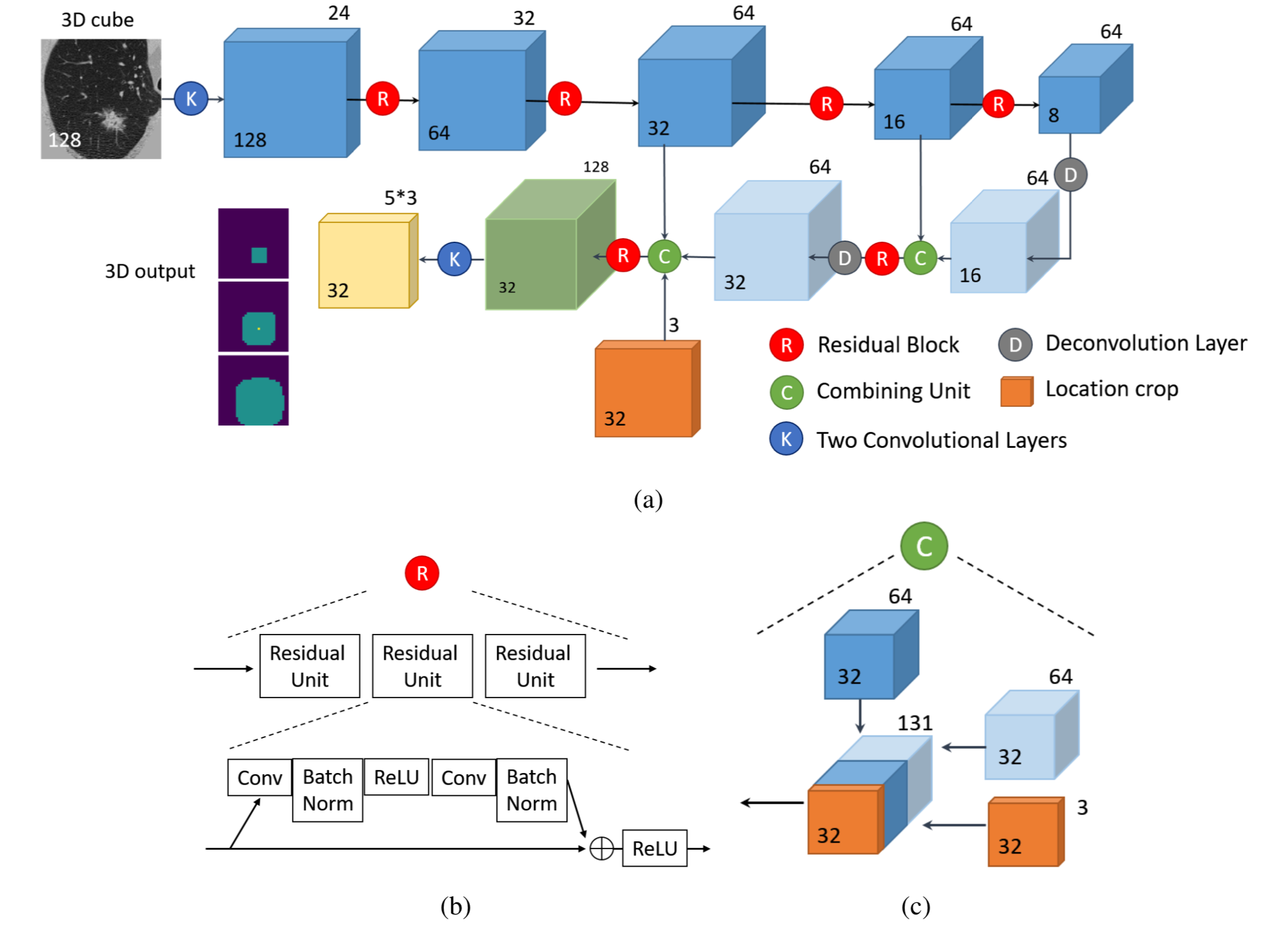

To get a clear picture of the "Nodule Net", you can study [the paper].

The below image shows the network structure.

Its code lives in the `model` package and is mostly the same as the original implementation.

The loss computation in `model/loss.py` uses an IoU-based approach; see their paper for details.
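For readers new to the term, IoU (intersection over union) measures how much a predicted box overlaps a reference box. The sketch below computes it for axis-aligned cubes described by a center and a side length. The `(z, y, x, diameter)` layout and the name `cube_iou` are assumptions for illustration; this is not the code in `model/loss.py`.

```python
def cube_iou(box_a, box_b):
    """IoU of two axis-aligned cubes, each given as (z, y, x, diameter)."""
    *center_a, d_a = box_a
    *center_b, d_b = box_b
    intersection = 1.0
    for c_a, c_b in zip(center_a, center_b):
        # Overlap of the two intervals along this axis.
        low = max(c_a - d_a / 2.0, c_b - d_b / 2.0)
        high = min(c_a + d_a / 2.0, c_b + d_b / 2.0)
        if high <= low:
            return 0.0  # No overlap along one axis means no overlap at all.
        intersection *= high - low
    union = d_a ** 3 + d_b ** 3 - intersection
    return intersection / union


identical = cube_iou((0, 0, 0, 2), (0, 0, 0, 2))
shifted = cube_iou((0, 0, 0, 2), (1, 0, 0, 2))
disjoint = cube_iou((0, 0, 0, 2), (5, 0, 0, 2))
```

Identical cubes give an IoU of 1, disjoint cubes give 0, and partial overlaps fall in between, which is what makes the measure useful for deciding whether a candidate matches a nodule.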

The `LunaDataSet` class in `main/dataset.py` loads the saved augmented data as a torch `Dataset`. It is then wrapped in a `DataLoader`, which feeds the model and the loss computation.
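To make the idea concrete without depending on this repository's files, here is a minimal sketch of a map-style dataset, following the same `__len__`/`__getitem__` protocol that PyTorch's map-style datasets use. Everything in it is hypothetical: the class name `NpyPatchDataset`, the `<name>_patch.npy` / `<name>_label.npy` file layout, and the `iterate_batches` helper, which stands in for a `DataLoader` so the example runs with NumPy alone. The real `LunaDataSet` may store and load data differently.

```python
import os
import tempfile

import numpy as np


class NpyPatchDataset:
    """Map-style dataset over '<name>_patch.npy' / '<name>_label.npy' pairs (hypothetical layout)."""

    def __init__(self, root):
        self.root = root
        suffix = "_patch.npy"
        self.names = sorted(f[: -len(suffix)] for f in os.listdir(root) if f.endswith(suffix))

    def __len__(self):
        return len(self.names)

    def __getitem__(self, index):
        name = self.names[index]
        patch = np.load(os.path.join(self.root, name + "_patch.npy"))
        label = np.load(os.path.join(self.root, name + "_label.npy"))
        # Add a channel axis and scale 8-bit intensities to [0, 1].
        return patch[np.newaxis].astype(np.float32) / 255.0, label.astype(np.float32)


def iterate_batches(dataset, batch_size):
    """Yield stacked (patches, labels) batches; a simple stand-in for a DataLoader."""
    for start in range(0, len(dataset), batch_size):
        stop = min(start + batch_size, len(dataset))
        patches, labels = zip(*(dataset[i] for i in range(start, stop)))
        yield np.stack(patches), np.stack(labels)


# Toy data written to a temporary directory so the sketch is self-contained.
with tempfile.TemporaryDirectory() as root:
    for i in range(3):
        np.save(os.path.join(root, f"scan{i}_patch.npy"), np.full((4, 4, 4), i, dtype=np.uint8))
        np.save(os.path.join(root, f"scan{i}_label.npy"), np.array([1, 2, 3, 5], dtype=np.float32))
    dataset = NpyPatchDataset(root)
    batches = list(iterate_batches(dataset, batch_size=2))
```

Keeping file loading inside `__getitem__` means only the samples in the current batch are read from disk, which helps when the augmented data does not fit in memory.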

- Download the Luna16 dataset from here. Because the original dataset is very large, a small version for testing is also available in my Google Drive here. For more information, the dataset description is available here.
- Change the first 2 variables in the `configs.py` file
- Run `prepare/run_preprocess.py`
- Run `prepare/run_augmentation.py`
- Run `main/train.py`
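If you prefer to run the three scripts in one go, the steps above can be sketched as a small driver. The script paths come from the list above. The helper names `pipeline_commands` and `run_pipeline` are hypothetical, and the sketch assumes the scripts are meant to be launched from the repository root after `configs.py` has been edited.

```python
import subprocess
import sys

# Script paths as listed in the steps above, in the order they should run.
PIPELINE = [
    "prepare/run_preprocess.py",
    "prepare/run_augmentation.py",
    "main/train.py",
]


def pipeline_commands(python=sys.executable):
    """Build one command per script, using the given Python interpreter."""
    return [[python, script] for script in PIPELINE]


def run_pipeline(repo_root="."):
    """Run each stage in order; check=True stops at the first failing stage."""
    for command in pipeline_commands():
        subprocess.run(command, cwd=repo_root, check=True)
```

Calling `run_pipeline()` from the repository root runs pre-processing, augmentation, and training in sequence, and raises `subprocess.CalledProcessError` if any stage exits with an error.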

The model has been trained for a few epochs on a small sample using Google Colab. You can copy the data to your own Google Drive account and run this notebook to learn how to train the model on Google Colab!