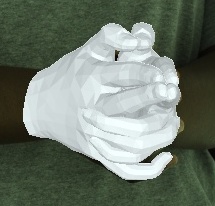

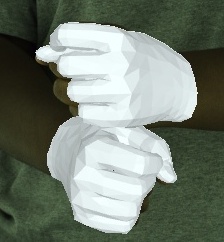

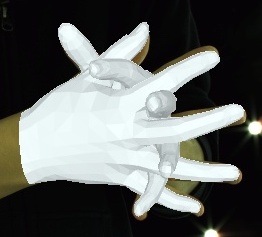

This is a modified version of InterHand2.6M for 3D hand detection and pose estimation on custom images, using a single RGB image as input.

- This repo is the official PyTorch implementation of InterHand2.6M: A Dataset and Baseline for 3D Interacting Hand Pose Estimation from a Single RGB Image (ECCV 2020).

- Our InterHand2.6M dataset is the first large-scale real-captured dataset with accurate GT 3D interacting hand poses.

The demo videos above have low-quality frames because they were compressed for the README upload.

- 2020.10.7. Fitted MANO parameters are available!

- For the InterHand2.6M dataset download and instructions, go to [HOMEPAGE].

- Below are instructions for our baseline model, InterNet, for 3D interacting hand pose estimation from a single RGB image.

- Install SMPLX

- `cd MANO_render`

- Set `smplx_path` and `root_path` in `render.py`

- Run `python render.py`

The `${ROOT}` directory is organized as below.

${ROOT}

|-- data

|-- common

|-- main

|-- output

- `data` contains data loading code and soft links to the image and annotation directories.

- `common` contains kernel code for 3D interacting hand pose estimation.

- `main` contains high-level code for training or testing the network.

- `output` contains logs, trained models, visualized outputs, and test results.

The `data` directory must follow the structure below.

${ROOT}

|-- data

| |-- STB

| | |-- data

| | |-- rootnet_output

| | | |-- rootnet_stb_output.json

| |-- RHD

| | |-- data

| | |-- rootnet_output

| | | |-- rootnet_rhd_output.json

| |-- InterHand2.6M

| | |-- annotations

| | | |-- all

| | | |-- human_annot

| | | |-- machine_annot

| | |-- images

| | | |-- train

| | | |-- val

| | | |-- test

| | |-- rootnet_output

| | | |-- rootnet_interhand2.6m_output_all_test.json

| | | |-- rootnet_interhand2.6m_output_machine_annot_val.json
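Before training or testing, it can help to confirm that the layout above is in place. The following is a minimal sketch, not part of the repository: the helper name `find_missing_paths` is hypothetical, and the path list is copied from the tree above.

```python
from pathlib import Path

# Relative paths copied from the directory tree above.
EXPECTED_DATA_PATHS = [
    "data/STB/data",
    "data/STB/rootnet_output/rootnet_stb_output.json",
    "data/RHD/data",
    "data/RHD/rootnet_output/rootnet_rhd_output.json",
    "data/InterHand2.6M/annotations/all",
    "data/InterHand2.6M/annotations/human_annot",
    "data/InterHand2.6M/annotations/machine_annot",
    "data/InterHand2.6M/images/train",
    "data/InterHand2.6M/images/val",
    "data/InterHand2.6M/images/test",
    "data/InterHand2.6M/rootnet_output/rootnet_interhand2.6m_output_all_test.json",
    "data/InterHand2.6M/rootnet_output/rootnet_interhand2.6m_output_machine_annot_val.json",
]


def find_missing_paths(root):
    """Return the expected relative paths that do not exist under root."""
    root = Path(root)
    return [rel for rel in EXPECTED_DATA_PATHS if not (root / rel).exists()]


if __name__ == "__main__":
    # "." assumes the script is run from ${ROOT}; adjust as needed.
    for rel in find_missing_paths("."):
        print("missing:", rel)
```

If you only use one of the datasets, the entries for the others can be removed from the list.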

- Download InterHand2.6M data [HOMEPAGE]

- Download STB parsed data [images] [annotations]

- Download RHD parsed data [images] [annotations]

- All annotation files follow MS COCO format.

- If you want to add your own dataset, you have to convert it to MS COCO format.
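For orientation, here is a minimal sketch of a COCO-style annotation structure and how entries link to images. Only the generic COCO keys (`images`, `annotations`, `image_id`, `bbox`) are shown; the task-specific fields the loaders in `data` expect (for example joint coordinates or camera parameters) are not listed in this README, so check the loading code before converting your own dataset. All values and the helper name `index_annotations` are illustrative.

```python
import json
from collections import defaultdict

# Minimal COCO-style skeleton with placeholder values.
# In COCO, bbox is [x, y, width, height] in pixels.
coco_style = {
    "images": [
        {"id": 0, "file_name": "example/0000.jpg", "width": 640, "height": 480},
    ],
    "annotations": [
        {"id": 0, "image_id": 0, "bbox": [100.0, 120.0, 200.0, 180.0]},
    ],
}


def index_annotations(dataset):
    """Group annotation entries by the image they refer to."""
    by_image = defaultdict(list)
    for ann in dataset["annotations"]:
        by_image[ann["image_id"]].append(ann)
    return dict(by_image)


# Round-trip through JSON to confirm the structure is serializable.
restored = json.loads(json.dumps(coco_style))
print(index_annotations(restored))
```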

The `output` folder must follow the directory structure below.

${ROOT}

|-- output

| |-- log

| |-- model_dump

| |-- result

| |-- vis

- `log` folder contains the training log file.

- `model_dump` folder contains saved checkpoints for each epoch.

- `result` folder contains final estimation files generated in the testing stage.

- `vis` folder contains visualized results.
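The four folders can be created by hand or with a few lines of Python. This is a minimal sketch under the assumption that nothing else has created them yet (the training code may already do so); `make_output_dirs` is a hypothetical helper, not a function from the repository.

```python
from pathlib import Path

# Subfolder names taken from the output directory tree.
OUTPUT_SUBDIRS = ("log", "model_dump", "result", "vis")


def make_output_dirs(root):
    """Create <root>/output and its four subfolders if they are missing."""
    output_dir = Path(root) / "output"
    created = []
    for name in OUTPUT_SUBDIRS:
        path = output_dir / name
        # exist_ok=True makes the call safe to repeat.
        path.mkdir(parents=True, exist_ok=True)
        created.append(path)
    return created
```

Calling `make_output_dirs(".")` from `${ROOT}` leaves existing folders and their contents untouched.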

- In `main/config.py`, you can change the model settings, including which dataset to use and which root joint translation vector to use (from ground truth or from RootNet).

In the `main` folder, run `python train.py --gpu 0-3 --annot_subset $SUBSET` to train the network on GPUs 0, 1, 2, and 3. `--gpu 0,1,2,3` can be used instead of `--gpu 0-3`. If you want to continue an experiment, add `--continue`.

$SUBSET is one of [all, human_annot, machine_annot].

- `all`: Combination of the human and machine annotation. `Train (H+M)` in the paper.

- `human_annot`: The human annotation. `Train (H)` in the paper.

- `machine_annot`: The machine annotation. `Train (M)` in the paper.
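The two accepted `--gpu` forms are equivalent. As an illustration only, a range such as `0-3` can be expanded into the comma-separated form like this; `expand_gpu_ids` is a hypothetical helper, and how `train.py` actually parses the argument is not shown in this README.

```python
def expand_gpu_ids(spec):
    """Turn a range like '0-3' into '0,1,2,3'; leave other forms unchanged."""
    if "-" in spec:
        start, end = (int(part) for part in spec.split("-"))
        # The range is inclusive on both ends.
        return ",".join(str(i) for i in range(start, end + 1))
    return spec


print(expand_gpu_ids("0-3"))      # 0,1,2,3
print(expand_gpu_ids("0,1,2,3"))  # 0,1,2,3
```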

Place the trained model in `output/model_dump/`.

In the `main` folder, run `python test.py --gpu 0-3 --test_epoch 20 --test_set $DB_SPLIT --annot_subset $SUBSET` to test the network on GPUs 0, 1, 2, and 3 with `snapshot_20.pth.tar`. `--gpu 0,1,2,3` can be used instead of `--gpu 0-3`.

$DB_SPLIT is one of [val,test].

- `val`: The validation set. `Val` in the paper.

- `test`: The test set. `Test` in the paper.

$SUBSET is one of [all, human_annot, machine_annot].

- `all`: Combination of the human and machine annotation. `(H+M)` in the paper.

- `human_annot`: The human annotation. `(H)` in the paper.

- `machine_annot`: The machine annotation. `(M)` in the paper.
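To check that the checkpoint for a given `--test_epoch` is in place before launching a test, a sketch like the following may help. The `snapshot_{epoch}.pth.tar` pattern is inferred from the single `snapshot_20.pth.tar` example in this README, and `snapshot_path` is a hypothetical helper.

```python
from pathlib import Path


def snapshot_path(root, test_epoch):
    """Build the checkpoint path implied by --test_epoch (assumed naming)."""
    return Path(root) / "output" / "model_dump" / f"snapshot_{test_epoch}.pth.tar"


path = snapshot_path(".", 20)
print(path.name)  # snapshot_20.pth.tar
if not path.exists():
    print("checkpoint not found:", path)
```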

Here I provide the performance and pre-trained snapshots of InterNet, as well as the output of RootNet.

- Pre-trained InterNet trained on [InterHand2.6M v0.0] [full InterHand2.6M] [STB] [RHD]

- RootNet output on [full InterHand2.6M] [STB] [RHD]

- InterNet evaluation results on [InterHand2.6M v0.0]

@InProceedings{Moon_2020_ECCV_InterHand2.6M,

author = {Moon, Gyeongsik and Yu, Shoou-I and Wen, He and Shiratori, Takaaki and Lee, Kyoung Mu},

title = {InterHand2.6M: A Dataset and Baseline for 3D Interacting Hand Pose Estimation from a Single RGB Image},

booktitle = {European Conference on Computer Vision (ECCV)},

year = {2020}

}

InterHand2.6M is CC-BY-NC 4.0 licensed, as found in the LICENSE file.