The official code for "A Knowledge-enhanced Pathology Vision-language Foundation Model for Cancer Diagnosis"

Preprint | Download Model | Webpage | Cite

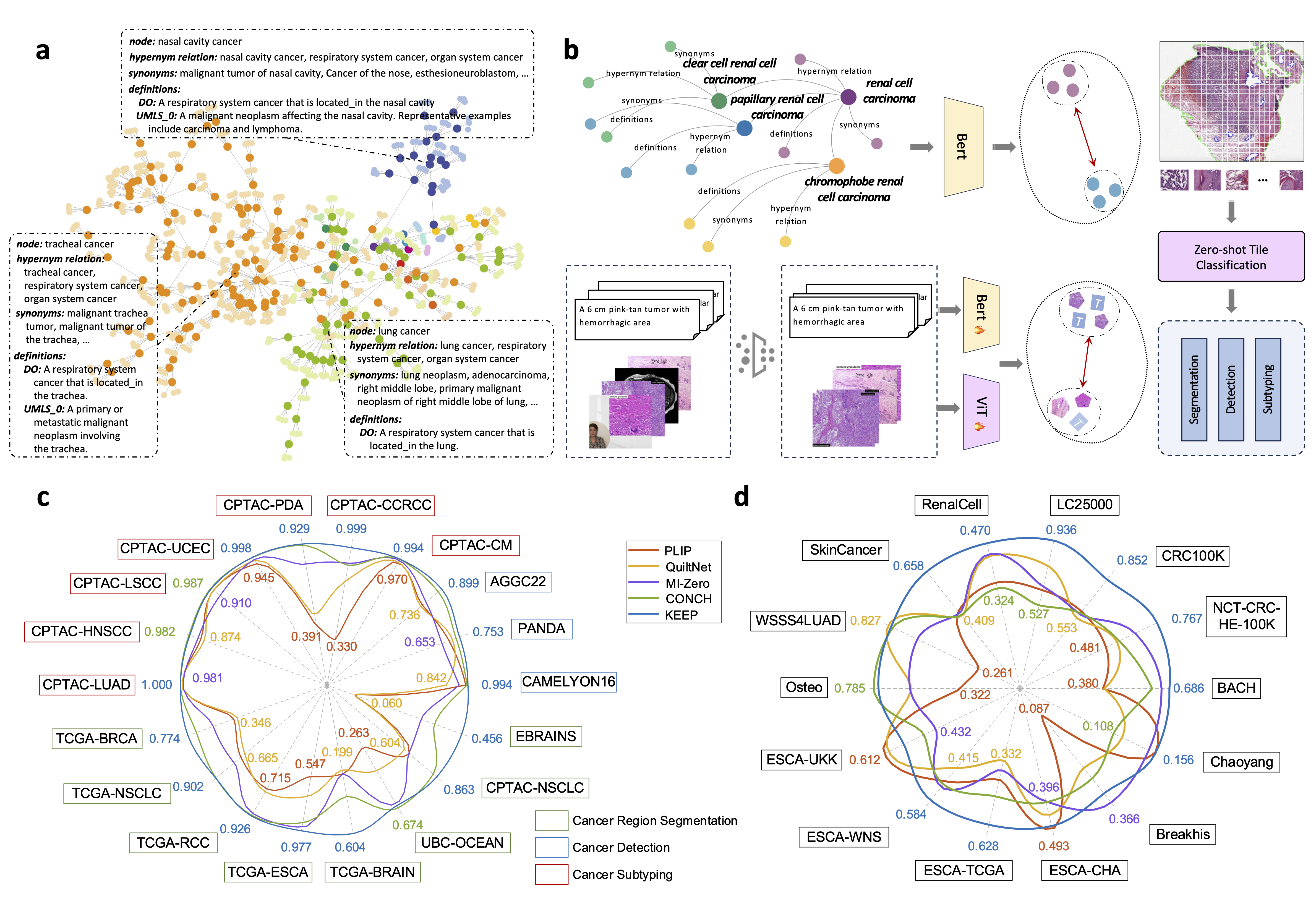

Abstract: Deep learning has enabled the development of highly robust foundation models for various pathological tasks across diverse diseases and patient cohorts. Among these models, vision-language pre-training leverages large-scale paired data to align pathology image and text embedding spaces and provides a novel zero-shot paradigm for downstream tasks. However, existing models have been primarily data-driven and lack the incorporation of domain-specific knowledge, which limits their performance in cancer diagnosis, especially for rare tumor subtypes. To address this limitation, we establish a KnowledgE-Enhanced Pathology (KEEP) foundation model that harnesses disease knowledge to facilitate vision-language pre-training. Specifically, we first construct a disease knowledge graph (KG) that covers 11,454 human diseases with 139,143 disease attributes, including synonyms, definitions, and hypernym relations. We then systematically reorganize the millions of publicly available noisy pathology image-text pairs into 143K well-structured semantic groups linked through the hierarchical relations of the disease KG. To derive more nuanced image and text representations, we propose a novel knowledge-enhanced vision-language pre-training approach that integrates disease knowledge into the alignment within hierarchical semantic groups instead of unstructured image-text pairs. Validated on 18 diverse benchmarks with more than 14,000 whole slide images (WSIs), KEEP achieves state-of-the-art performance in zero-shot cancer diagnostic tasks. Notably, for cancer detection, KEEP demonstrates an average sensitivity of 89.8% at a specificity of 95.0% across 7 cancer types, significantly outperforming vision-only foundation models and highlighting its promising potential for clinical application. For cancer subtyping, KEEP achieves a median balanced accuracy of 0.456 in subtyping 30 rare brain cancers, indicating strong generalizability for diagnosing rare tumors.
All code and models will be made available for reproducing our results.

[12/18/2024]: Model weights for easy inference are now available.

[12/18/2024]: The paper is available on arXiv (https://arxiv.org/abs/2412.13126).

KEEP (KnowledgE-Enhanced Pathology) is a foundation model designed for cancer diagnosis that integrates disease knowledge into vision-language pre-training. It utilizes a comprehensive disease knowledge graph (KG) containing 11,454 human diseases and 139,143 disease attributes, such as synonyms, definitions, and hierarchical relationships. KEEP reorganizes millions of publicly available noisy pathology image-text pairs into 143K well-structured semantic groups based on the hierarchical relations of the disease KG. By incorporating disease knowledge into the alignment process, KEEP achieves more nuanced image and text representations. The model is validated on 18 diverse benchmarks with over 14,000 whole-slide images (WSIs), demonstrating state-of-the-art performance in zero-shot cancer diagnosis, including an average sensitivity of 89.8% for cancer detection across 7 cancer types. KEEP also excels in subtyping rare cancers, achieving strong generalizability in diagnosing rare tumor subtypes.
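To make the zero-shot paradigm concrete, here is a minimal sketch of how aligned image and text embeddings can be turned into class predictions. It does not use the KEEP API: the embeddings are hand-written placeholders standing in for encoder outputs, and `zero_shot_predict`, the class prompts, and the temperature value are assumptions made for illustration only.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products equal cosine similarity."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def zero_shot_predict(image_emb: np.ndarray, class_text_emb: np.ndarray,
                      temperature: float = 0.07) -> np.ndarray:
    """Return class probabilities of shape (n_images, n_classes)."""
    sims = l2_normalize(image_emb) @ l2_normalize(class_text_emb).T
    logits = sims / temperature
    logits -= logits.max(axis=1, keepdims=True)  # subtract row max for stability
    probs = np.exp(logits)
    return probs / probs.sum(axis=1, keepdims=True)


# Placeholder embeddings (hypothetical values, not produced by KEEP).
class_names = ["tumor", "normal"]
class_text_emb = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
image_emb = np.array([[0.9, 0.1, 0.2], [0.1, 0.8, 0.3]])

probs = zero_shot_predict(image_emb, class_text_emb)
predictions = [class_names[i] for i in probs.argmax(axis=1)]
print(predictions)
```

In practice the text embeddings would come from encoding one or more prompts per class, and the image embeddings from encoding tiles or patches; no labeled training data for the downstream task is required.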

- Why do we need knowledge? Knowledge integration in computational pathology addresses the limitations of existing models, which often struggle with data scarcity, noisy annotations, and the complexity of cancer subtypes. Domain-specific knowledge, such as disease ontologies and medical terminologies, provides a structured framework that enhances the model's understanding of pathology images by incorporating clinically relevant context. This knowledge helps improve model accuracy and generalizability, particularly in data-scarce or rare disease settings. Furthermore, it aids in distinguishing subtle disease manifestations, ensuring more precise diagnosis and better performance on downstream tasks like cancer detection and subtyping.

You can directly download the model weights from Google Drive with the link: KEEP_release for easy inference.

    cd ./quick_start
    python keep_inference.py

We provide a Python script for fast evaluation on WSIs, as follows. You need to change the "data path" in the script to the corresponding path on your machine. This part requires only one RTX 4090 GPU.

    cd WSI_evaluation
    python zeroshot_segment_WSI.py

We manually annotated 1,000 noisy pathology images to fine-tune YOLOv8. You can download the fine-tuned YOLOv8 model directly from PathDetector.

    cd data

    # Detect pathology images in slides
    python detection.py --data_path /path/to/images/ --model_path /path/to/yolov8/

    # Textual refinement: extract entities and paraphrase them with templates
    python text_processing.py --data_path /path/to/texts/

    # Cluster the image-text data into semantic groups
    python data_cluster.py --image_path /path/to/images/ --text_path /path/to/texts/ --structured_data_path /path/to/save/

For knowledge graph construction, we download the knowledge structure from Disease Ontology (DO). We then search for synonyms in the Unified Medical Language System (UMLS) based on the UMLS_CUI of each entity and construct the final KG.
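The KG construction and grouping steps can be sketched as follows. This is an illustration under stated assumptions, not the repository's implementation: the `DiseaseNode` class, the function names, the IDs (`D1`...), and the CUIs (`C0000001`...) are all made up, and the substring matching in `group_captions` is a simple stand-in for whatever entity extraction and clustering the actual scripts perform.

```python
from dataclasses import dataclass, field


@dataclass
class DiseaseNode:
    name: str
    cui: str                                       # UMLS concept identifier
    parents: list = field(default_factory=list)    # hypernym ("is-a") links
    synonyms: set = field(default_factory=set)


def build_kg(ontology_rows, synonyms_by_cui):
    """Merge the ontology structure with synonyms looked up by CUI."""
    kg = {}
    for disease_id, name, cui, parents in ontology_rows:
        node = DiseaseNode(name=name, cui=cui, parents=list(parents))
        node.synonyms.update(synonyms_by_cui.get(cui, []))
        kg[disease_id] = node
    return kg


def ancestors(kg, disease_id):
    """Collect all hypernyms of a disease by walking its parent links."""
    seen, stack = set(), list(kg[disease_id].parents)
    while stack:
        parent = stack.pop()
        if parent not in seen and parent in kg:
            seen.add(parent)
            stack.extend(kg[parent].parents)
    return seen


def group_captions(kg, captions):
    """Assign each caption to every disease whose name or synonym it mentions."""
    groups = {}
    for caption in captions:
        text = caption.lower()
        for disease_id, node in kg.items():
            terms = {node.name, *node.synonyms}
            if any(term.lower() in text for term in terms):
                groups.setdefault(disease_id, []).append(caption)
    return groups


# Toy inputs: (id, name, CUI, parent ids). All identifiers are hypothetical.
ontology_rows = [
    ("D1", "neoplasm", "C0000001", []),
    ("D2", "lung cancer", "C0000002", ["D1"]),
    ("D3", "lung adenocarcinoma", "C0000003", ["D2"]),
]
synonyms_by_cui = {"C0000003": ["adenocarcinoma of lung"]}

kg = build_kg(ontology_rows, synonyms_by_cui)
captions = ["H&E section showing adenocarcinoma of lung", "Lung cancer biopsy"]
groups = group_captions(kg, captions)
print(sorted(ancestors(kg, "D3")))
print(groups)
```

Because each group is keyed by a KG node, the hypernym links returned by `ancestors` connect a group to the groups of its broader disease categories, which is the kind of hierarchical relation the semantic groups rely on.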

For disease knowledge encoding, we train the knowledge encoder in a way similar to our previous work KEP. You can find more detailed information in the repository. In this part, we use four A100 GPUs.

Start by cloning the repository and changing into its directory:

    git clone https://github.com/MAGIC-AI4Med/KEEP.git
    cd KEEP

Next, create a conda environment and install the dependencies:

    conda create -n keep python=3.8 -y
    conda activate keep
    pip install --upgrade pip
    pip install -r requirements.txt

If you need to retrain the model, you can refer to the following command and modify the relevant parameters. This part uses only one A100 GPU.

    cd training
    CUDA_VISIBLE_DEVICES=0 python main.py \
        --data-path /path/to/data/ \
        --save-path /path/to/save/ \
        --num-workers 8 \
        --batch-size 512 \
        --warmup 1000

We present benchmark results for a range of representative tasks. A complete set of benchmarks can be found in the paper. These results will be updated with each new iteration of KEEP.

| Models | PLIP [1] | QuiltNet [2] | MI-Zero (Pub) [3] | CONCH [4] | KEEP (Ours) |
|---|---|---|---|---|---|
| CAMELYON16 | 0.253 | 0.157 | 0.186 | 0.292 | 0.361 |
| PANDA | 0.295 | 0.309 | 0.276 | 0.315 | 0.334 |
| AGGC22 | 0.284 | 0.282 | 0.324 | 0.449 | 0.530 |

| Models | CHIEF [1] | PLIP [2] | QuiltNet [3] | MI-Zero (Pub) [4] | CONCH [5] | KEEP (Ours) |
|---|---|---|---|---|---|---|
| CPTAC-CM | 0.915 | 0.970 | 0.972 | 0.985 | 0.994 | 0.994 |
| CPTAC-CCRCC | 0.723 | 0.330 | 0.755 | 0.886 | 0.871 | 0.999 |
| CPTAC-PDA | 0.825 | 0.391 | 0.464 | 0.796 | 0.920 | 0.929 |
| CPTAC-UCEC | 0.955 | 0.945 | 0.973 | 0.979 | 0.996 | 0.998 |
| CPTAC-LSCC | 0.901 | 0.965 | 0.966 | 0.910 | 0.987 | 0.983 |
| CPTAC-HNSCC | 0.946 | 0.898 | 0.874 | 0.918 | 0.982 | 0.976 |
| CPTAC-LUAD | 0.891 | 0.988 | 0.991 | 0.981 | 0.999 | 1.000 |

| Models | PLIP [1] | QuiltNet [2] | MI-Zero (Pub) [3] | CONCH [4] | KEEP (Ours) |
|---|---|---|---|---|---|
| TCGA-BRCA | 0.519 | 0.500 | 0.633 | 0.727 | 0.774 |
| TCGA-NSCLC | 0.699 | 0.667 | 0.753 | 0.901 | 0.902 |
| TCGA-RCC | 0.735 | 0.755 | 0.908 | 0.921 | 0.926 |
| TCGA-ESCA | 0.614 | 0.746 | 0.954 | 0.923 | 0.977 |
| TCGA-BRAIN | 0.361 | 0.346 | 0.361 | 0.453 | 0.604 |
| UBC-OCEAN | 0.343 | 0.469 | 0.652 | 0.674 | 0.661 |
| CPTAC-NSCLC | 0.647 | 0.607 | 0.643 | 0.836 | 0.863 |
| EBRAINS | 0.096 | 0.093 | 0.325 | 0.371 | 0.456 |
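The abstract reports two metrics by name: balanced accuracy for subtyping (the EBRAINS value of 0.456 matches the last row above) and sensitivity at a fixed specificity for cancer detection. Which metric each table reports is not stated in this section, so the sketch below only shows how these two quantities are commonly computed. The function names and toy labels are illustrative assumptions, not the repository's evaluation code.

```python
import numpy as np


def balanced_accuracy(y_true, y_pred) -> float:
    """Mean of per-class recall, so every class counts equally."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    recalls = [np.mean(y_pred[y_true == c] == c) for c in np.unique(y_true)]
    return float(np.mean(recalls))


def sensitivity_at_specificity(y_true, scores, target_specificity=0.95) -> float:
    """Best sensitivity over thresholds whose specificity meets the target."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    best = 0.0
    # Predict "cancer" when score >= threshold; inf means "never predict cancer".
    for threshold in np.append(np.unique(scores), np.inf):
        specificity = np.mean(neg < threshold)
        if specificity >= target_specificity:
            best = max(best, float(np.mean(pos >= threshold)))
    return best


# Toy subtyping example with three classes (hypothetical labels).
bacc = balanced_accuracy([0, 0, 1, 1, 2, 2], [0, 0, 1, 0, 2, 2])

# Toy detection example: 4 normal slides (label 0) and 3 cancer slides (label 1).
labels = [0, 0, 0, 0, 1, 1, 1]
scores = [0.1, 0.2, 0.3, 0.4, 0.35, 0.8, 0.9]
sens = sensitivity_at_specificity(labels, scores, target_specificity=1.0)
print(round(bacc, 3), round(sens, 3))
```

Balanced accuracy is a natural choice when class sizes differ widely, as with rare tumor subtypes, because a classifier cannot score well by favoring the majority class.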

This project was built on top of excellent repositories such as MI-Zero, CLAM, and OpenCLIP. We thank the authors and developers for their contributions.

If you find our work useful in your research, please consider citing our paper:

    @article{zhou2024keep,
      title={A Knowledge-enhanced Pathology Vision-language Foundation Model for Cancer Diagnosis},
      author={Xiao Zhou and Luoyi Sun and Dexuan He and Wenbin Guan and Ruifen Wang and Lifeng Wang and Xin Sun and Kun Sun and Ya Zhang and Yanfeng Wang and Weidi Xie},
      journal={arXiv preprint arXiv:2412.13126},
      year={2024}
    }