Latent Manifold Walk

This utility is designed to improve the interpretability of the latent feature spaces learned by variational autoencoder models. Latent space walking is a popular method for interrogating individual learned features.

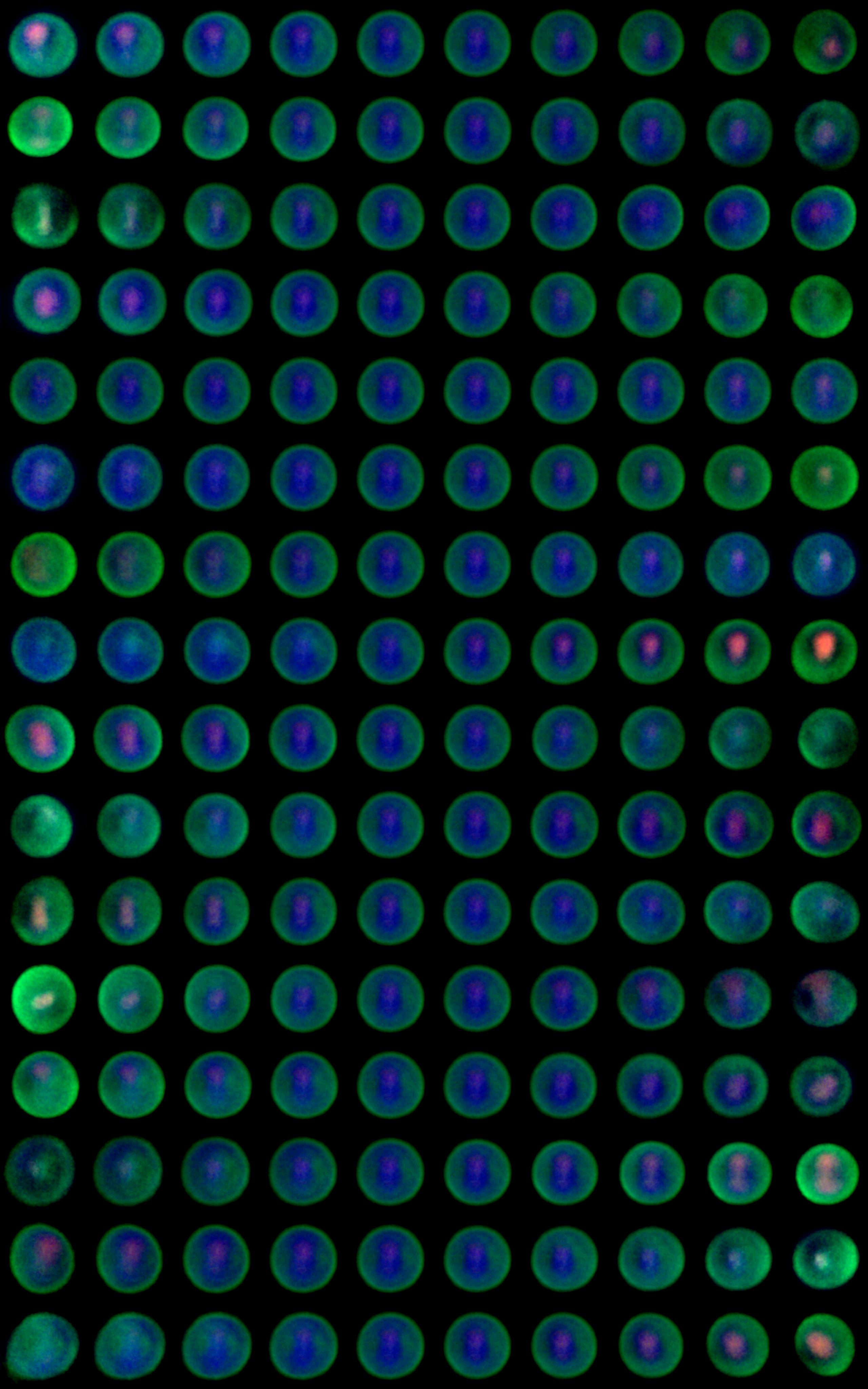

Because a variational autoencoder (VAE) model learns features that conform to an expected distribution across the dataset, each feature can be evaluated by generating synthetic output samples with the trained decoder network. In the example latent space walk figure, each row is a learned feature and each column is a different position along the percentile distribution curve of the prior, which in this case is a standard normal. For each latent feature under study, the remaining features are held at their expected value, which is zero under a standard normal prior.
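The sampling scheme described above can be sketched in a few lines. This is only an illustration under assumptions, not the code in `walk_manifold.py`: the function name `latent_walk` and the chosen percentiles are hypothetical, and the decoder call is left as a comment.

```python
from statistics import NormalDist

import numpy as np


def latent_walk(n_features, percentiles=(5, 25, 50, 75, 95)):
    """Build latent vectors for a walk; shape (n_features, n_steps, n_features).

    Hypothetical helper: row i varies feature i across the percentiles of a
    standard normal prior while every other feature stays at zero.
    """
    # Map each percentile to its position under the standard normal prior.
    steps = np.array([NormalDist().inv_cdf(p / 100) for p in percentiles])
    # Start with every feature at its expected value under the prior (zero).
    walk = np.zeros((n_features, len(steps), n_features))
    for i in range(n_features):
        walk[i, :, i] = steps
    return walk


walk = latent_walk(n_features=3)
# Flatten to (n_features * n_steps, n_features) before decoding, for example
# with decoder.predict(batch) on a trained Keras decoder (not run here).
batch = walk.reshape(-1, walk.shape[-1])
```

Each row of `batch` is one synthetic latent vector, so the decoded outputs can be arranged back into a grid with one row per feature and one column per percentile.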

This visualization illustrates the role of independent features, but does not model interactions between them. To better visualize the entire learned feature space, we introduce the Principal Feature Manifold.

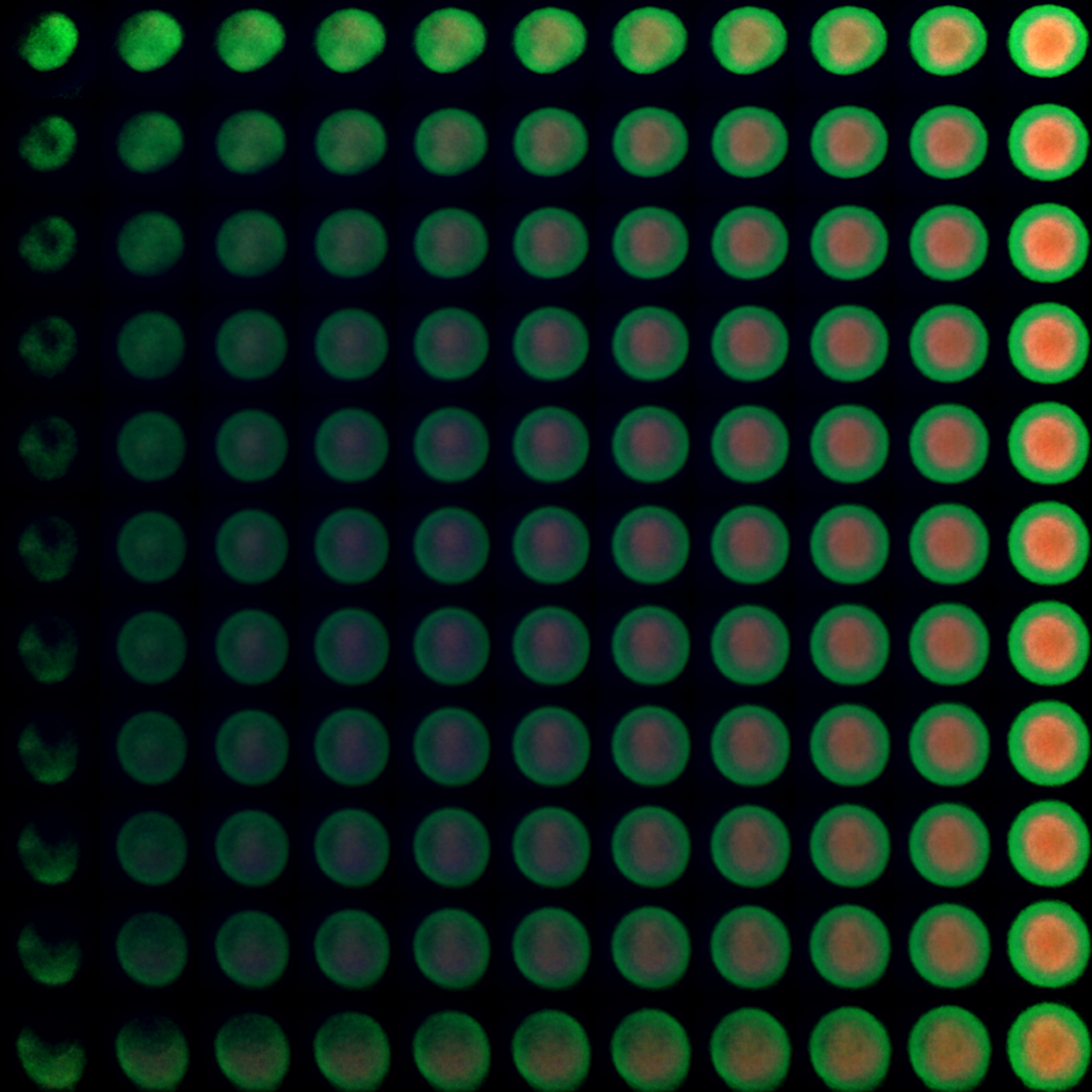

Principal Manifold Walk

Our motivation arises from the knowledge that learned VAE features are not strictly independent of one another and are commonly correlated across the encoded dataset. Although each individual feature is expected to conform to a prior distribution (enforced by a Kullback-Leibler divergence term in the model's cost function), no special constraint is placed on feature interactions.

To generate a principal feature manifold, we first sample the percentile distributions of the first two principal components of the encoding space. The sampled space is then rotated back into VAE feature space via the inverse of the principal component rotation matrix. The resulting synthetic samples are then visualized by passing them through the trained decoder network. In this example, the x and y axes account for 16% and 13% of variability in the learned feature space, respectively.
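A minimal NumPy sketch of these steps follows. It is not the script's implementation: `principal_manifold` is a hypothetical name, and the sketch assumes the percentiles are empirical ones taken from the principal component scores, the data is mean-centered before rotation, and the random encodings stand in for real encoder output.

```python
import numpy as np


def principal_manifold(encodings, percentiles=(5, 25, 50, 75, 95)):
    """Sample a 2-D grid over the first two principal components and rotate
    it back into latent feature space (hypothetical helper)."""
    mean = encodings.mean(axis=0)
    centered = encodings - mean
    # SVD of the centered data: the rows of vt are the principal directions.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    scores = centered @ vt.T
    # Empirical percentiles of the first two principal component scores.
    pc1 = np.percentile(scores[:, 0], percentiles)
    pc2 = np.percentile(scores[:, 1], percentiles)
    grid_x, grid_y = np.meshgrid(pc1, pc2)
    grid = np.column_stack([grid_x.ravel(), grid_y.ravel()])
    # The rotation is orthogonal, so its inverse is its transpose. With all
    # other components held at zero, rotating back only needs the first two
    # principal directions.
    samples = grid @ vt[:2] + mean
    explained = s**2 / np.sum(s**2)
    return samples, explained[:2]


# Stand-in encodings; real ones would come from the trained encoder.
rng = np.random.default_rng(0)
encodings = rng.normal(size=(200, 4))
samples, ratio = principal_manifold(encodings)
```

Here `samples` holds one latent vector per grid point, ready to be passed to the decoder, and `ratio` gives the fraction of variance carried by each of the two axes.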

Usage

This script has only been tested with Keras decoder models. Point the utility to the model architecture file (.json) and the weight file (.hdf5), as well as an encodings file. The encodings file should be generated by a fully trained encoder model and saved as a CSV file without row names or headers.

    python walk_manifold.py \
        --model_arch models/arch_decoder.json \
        --model_weights models/weights_decoder.hdf5 \
        --encoding_file models/encodings.csv \
        --save_dir output/
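For the encodings file, one way to produce a CSV without row names or headers is `numpy.savetxt`. The sketch below is an assumption about a workable format, not a documented requirement beyond what is stated above: the random array stands in for real encoder output, and the temporary path is only for illustration.

```python
import os
import tempfile

import numpy as np

# Stand-in for encoder output: one row per sample, one column per latent
# feature. Real encodings would come from the trained encoder model.
rng = np.random.default_rng(0)
encodings = rng.normal(size=(100, 8))

# Write plain comma-separated values with no header line and no row names.
path = os.path.join(tempfile.mkdtemp(), "encodings.csv")
np.savetxt(path, encodings, delimiter=",")

# Read the file back to confirm the layout survives a round trip.
loaded = np.loadtxt(path, delimiter=",")
```

If the encodings are held in a pandas DataFrame instead, `DataFrame.to_csv(path, header=False, index=False)` produces the same layout.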