Here are several stream connectors for the Centreon Broker.

The goal is to provide useful scripts to the community to extend the open source solution Centreon.

You can find Lua scripts written to export Centreon data to several outputs.

If one of these scripts suits your needs, copy it to the /usr/share/centreon-broker/lua directory on the Centreon central server. Create the directory if it does not exist. It must be readable by the centreon-broker user.

Once the script is copied, configure it through the Centreon web interface.
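A minimal sketch of the copy step, assuming you run it as root on the central server. `install_connector` is a hypothetical helper, not part of Centreon: it creates the directory when needed and makes the script world-readable so that the centreon-broker user can load it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a stream connector script into the broker Lua directory.
# install_connector <script.lua> [lua_dir]
install_connector() {
  local script="$1"
  local lua_dir="${2:-/usr/share/centreon-broker/lua}"

  # Create the directory if it does not exist yet.
  mkdir -p "$lua_dir" || return 1
  # rwxr-xr-x: other users, including centreon-broker, can enter and list it.
  chmod 755 "$lua_dir" || return 1

  cp "$script" "$lua_dir/" || return 1
  # rw-r--r--: readable by centreon-broker, writable by the owner only.
  chmod 644 "$lua_dir/$(basename "$script")"
}

# Usage (my-connector.lua is a placeholder name):
#   install_connector ./my-connector.lua
```

Passing the directory as an optional second argument lets you try the helper on a scratch directory first.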

Stream connector documentation is available here:

- https://documentation.centreon.com/docs/centreon/en/latest/developer/writestreamconnector.html

- https://documentation.centreon.com/docs/centreon-broker/en/latest/exploit/stream_connectors.html

Don't hesitate to propose improvements and/or contact the community through our Slack workspace.

Here is a list of the available scripts:

This stream connector works with metric events, so they must be enabled in the Centreon Broker configuration.

Parameters to specify in the stream connector configuration are:

- log-file as string: the full path of the log file for this script

- elastic-address as string: the IP address of the Elasticsearch server

- elastic-port as number: the port; defaults to 9200 if not provided

- max-row as number: the maximum number of events to buffer before sending them to the Elasticsearch server; defaults to 100

This stream connector is an alternative to the previous one, but it works with neb service_status events instead. Since these events are always available on a Centreon platform, this script should work in more setups.

To use this script, you need to install the lua-socket and lua-sec libraries.

Parameters to specify in the stream connector configuration are:

- http_server_address as string: the (ip) address of the Elasticsearch server

- http_server_port as number: the port of the Elasticsearch server, by default 9200

- http_server_protocol as string: the connection scheme, by default http

- http_timeout as number: the connection timeout, by default 5 seconds

- filter_type as string: filter events to compute, by default metric,status

- elastic_index_metric as string: the index name for metrics, by default centreon_metric

- elastic_index_status as string: the index name for status, by default centreon_status

- elastic_username as string: the API username if set

- elastic_password as password: the API password if set

- max_buffer_size as number: the number of events to buffer before the next flush, by default 5000

- max_buffer_age as number: the delay to wait before the next flush, by default 30 seconds

- skip_anon_events as number: skip events whose name is not in the broker cache, by default 1

- log_level as number: log level from 1 to 3, by default 3

- log_path as string: path to log file, by default /var/log/centreon-broker/stream-connector-elastic-neb.log

If one of max_buffer_size or max_buffer_age is reached, events are sent.
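The flush rule can be expressed as a small check. This is a sketch of the documented behavior, not the connector's actual Lua code: `should_flush` is a hypothetical helper, and the "age" is assumed to be the number of seconds elapsed since the last flush. Defaults mirror the documented ones (5000 events, 30 seconds).

```shell
#!/usr/bin/env bash
# Sketch of the documented rule: send the buffer as soon as EITHER limit is hit.
# should_flush <queued_events> <seconds_since_last_flush> [max_buffer_size] [max_buffer_age]
should_flush() {
  local queued="$1" elapsed="$2"
  local max_size="${3:-5000}" max_age="${4:-30}"

  # An empty buffer has nothing to send.
  [ "$queued" -gt 0 ] || return 1

  [ "$queued" -ge "$max_size" ] || [ "$elapsed" -ge "$max_age" ]
}

# Usage: a full buffer, an old buffer, and a buffer that keeps waiting.
while read -r queued elapsed; do
  if should_flush "$queued" "$elapsed"; then
    echo "queued=$queued elapsed=${elapsed}s -> flush"
  else
    echo "queued=$queued elapsed=${elapsed}s -> keep buffering"
  fi
done <<'EOF'
5000 1
12 30
12 5
EOF
```

A small max_buffer_age lowers the latency between an event and its indexing; a large max_buffer_size reduces the number of HTTP requests.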

Two indices need to be created on the Elasticsearch server:

curl -X PUT "http://elasticsearch:9200/centreon_metric" -H 'Content-Type: application/json' -d '
{"mappings":{"properties":{"host":{"type":"keyword"},"service":{"type":"keyword"},
"instance":{"type":"keyword"},"metric":{"type":"keyword"},"value":{"type":"double"},
"min":{"type":"double"},"max":{"type":"double"},"uom":{"type":"text"},
"type":{"type":"keyword"},"timestamp":{"type":"date","format":"epoch_second"}}}}'

curl -X PUT "http://elasticsearch:9200/centreon_status" -H 'Content-Type: application/json' -d '
{"mappings":{"properties":{"host":{"type":"keyword"},"service":{"type":"keyword"},
"output":{"type":"text"},"status":{"type":"keyword"},"state":{"type":"keyword"},
"type":{"type":"keyword"},"timestamp":{"type":"date","format":"epoch_second"}}}}'

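To check that the centreon_status index accepts documents before enabling the connector, you can index a test document by hand through the Elasticsearch _bulk API. The sketch below only builds the newline-delimited JSON body; `status_bulk_body` is a hypothetical helper, the field values are test data, JSON escaping is not handled, and `elasticsearch` is a placeholder host name.

```shell
#!/usr/bin/env bash
# Build a one-document _bulk body for the centreon_status index.
# Field names follow the index mapping; values are test data only.
# Output containing double quotes or backslashes is NOT escaped here.
# status_bulk_body <host> <service> <output> <state> <epoch_seconds>
status_bulk_body() {
  # Action line: the index the next line is written to.
  printf '{"index":{"_index":"centreon_status"}}\n'
  # Source line: the document itself. _bulk requires a final newline.
  printf '{"host":"%s","service":"%s","output":"%s","state":"%s","type":"service","timestamp":%s}\n' \
    "$1" "$2" "$3" "$4" "$5"
}

# Sending it (not run here):
#   status_bulk_body srv-web-01 HTTP "HTTP OK" OK "$(date +%s)" |
#     curl -s -X POST "http://elasticsearch:9200/_bulk" \
#          -H 'Content-Type: application/x-ndjson' --data-binary @-
```

`--data-binary @-` is used instead of `-d` because it keeps the newlines that _bulk relies on.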
This stream connector works with metric events, so they must be enabled in the Centreon Broker configuration.

To use this script, you need to install the lua-socket library.

Parameters to specify in the stream connector configuration are:

- http_server_address as string: the IP address of the InfluxDB server

- http_server_port as number: the port; defaults to 8086 if not provided

- http_server_protocol as string: the connection scheme, by default http

- influx_database as string: the database name, by default mydb

- max_buffer_size as number: the number of events to buffer before sending them to InfluxDB

- max_buffer_age as number: the delay in seconds to wait before the next flush

If one of max_buffer_size or max_buffer_age is reached, events are sent.
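InfluxDB 1.x receives points in line protocol: `measurement,tag=value field=value timestamp`. The sketch below formats one metric that way. The layout is an assumption (the script's real one may differ): the measurement is the service description, `host` is the only tag, and the field is named after the metric. `influx_line` and the sample values are hypothetical.

```shell
#!/usr/bin/env bash
# Escape commas, spaces and '=' in tag keys/values and field keys.
escape_key() { printf '%s' "$1" | sed -e 's/[ ,=]/\\&/g'; }
# Measurement names only need commas and spaces escaped.
escape_measurement() { printf '%s' "$1" | sed -e 's/[ ,]/\\&/g'; }

# influx_line <service> <host> <metric> <value> <epoch_seconds>
influx_line() {
  printf '%s,host=%s %s=%s %s\n' \
    "$(escape_measurement "$1")" "$(escape_key "$2")" \
    "$(escape_key "$3")" "$4" "$5"
}

influx_line "Memory Usage" srv-web-01 used 42.5 1700000000
# prints: Memory\ Usage,host=srv-web-01 used=42.5 1700000000

# Sending it (not run here); timestamps are in seconds, hence precision=s:
#   influx_line ... | curl -s -XPOST \
#     "http://influxdb:8086/write?db=mydb&precision=s" --data-binary @-
```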

This stream connector is an alternative to the previous one, but it works with neb service_status events instead. Since these events are always available on a Centreon platform, this script should work in more setups.

To use this script, you need to install the lua-socket and lua-sec libraries.

Parameters to specify in the stream connector configuration are:

- measurement as string: the InfluxDB measurement, overwrites the service description if set

- http_server_address as string: the (ip) address of the InfluxDB server

- http_server_port as number: the port of the InfluxDB server, by default 8086

- http_server_protocol as string: the connection scheme, by default https

- http_timeout as number: the connection timeout, by default 5 seconds

- influx_database as string: the database name, by default mydb

- influx_retention_policy as string: the database retention policy, by default the database's default policy

- influx_username as string: the database username, no authentication performed if not set

- influx_password as string: the database password, no authentication performed if not set

- max_buffer_size as number: the number of events to buffer before the next flush, by default 5000

- max_buffer_age as number: the delay to wait before the next flush, by default 30 seconds

- skip_anon_events as number: skip events whose name is not in the broker cache, by default 1

- log_level as number: log level from 1 to 3, by default 3

- log_path as string: path to log file, by default /var/log/centreon-broker/stream-connector-influxdb-neb.log

If one of max_buffer_size or max_buffer_age is reached, events are sent.
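The connection parameters combine into an InfluxDB 1.x /write URL, where `db`, `rp`, `u` and `p` are standard query parameters of that endpoint. The sketch below shows one way the defaults listed above fit together; `influx_write_url` is a hypothetical helper, and whether the script sends credentials as `u`/`p` or as an Authorization header is an assumption. Values are not URL-encoded.

```shell
#!/usr/bin/env bash
# influx_write_url <address> [protocol] [port] [database] [retention_policy] [username] [password]
# An empty argument means "use the documented default".
influx_write_url() {
  local addr="$1" proto="${2:-https}" port="${3:-8086}" db="${4:-mydb}"
  local rp="${5:-}" user="${6:-}" pass="${7:-}"

  if [ -z "$addr" ]; then
    echo "http_server_address is required" >&2
    return 1
  fi

  local url="${proto}://${addr}:${port}/write?db=${db}"
  # Only name a retention policy when one is set.
  if [ -n "$rp" ]; then url="${url}&rp=${rp}"; fi
  # No authentication unless both username and password are set.
  if [ -n "$user" ] && [ -n "$pass" ]; then url="${url}&u=${user}&p=${pass}"; fi
  printf '%s\n' "$url"
}

influx_write_url influxdb.example.com "" "" "" autogen
```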

This stream connector works with neb service_status events.

This stream connector requires at least centreon-broker 18.10.1.

To use this script, you need to install the lua-curl library.

Parameters to specify in the stream connector configuration are:

- ipaddr as string: the IP address of the Warp10 server

- logfile as string: the full path of the log file

- port as number: the Warp10 server port

- token as string: the Warp10 write token

- max_size as number: how many queries to store before sending them to the Warp10 server
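Warp 10 ingests data through its /api/v0/update endpoint, with the write token in the `X-Warp10-Token` header and one line per data point in the GTS input format (`TS/LAT:LON/ELEV class{labels} value`). The sketch below formats such a line; `warp10_line`, the class name `centreon.cpu` and the host/service labels are hypothetical, since the connector's own naming is not documented here.

```shell
#!/usr/bin/env bash
# Format one point as: TS/LAT:LON/ELEV class{labels} value
# Location and elevation are left empty, hence "TS//".
# Special characters in labels must be URL-encoded; this sketch does not do it.
# warp10_line <epoch_seconds> <class> <host> <service> <value>
warp10_line() {
  # Warp 10 platforms use microseconds as the default time unit.
  local ts_us=$(( $1 * 1000000 ))
  printf '%s// %s{host=%s,service=%s} %s\n' "$ts_us" "$2" "$3" "$4" "$5"
}

warp10_line 1700000000 centreon.cpu srv-web-01 Cpu 12.5
# prints: 1700000000000000// centreon.cpu{host=srv-web-01,service=Cpu} 12.5

# Sending it (not run here), using the ipaddr, port and token parameters:
#   warp10_line ... | curl -s -H "X-Warp10-Token: $token" \
#     --data-binary @- "http://$ipaddr:$port/api/v0/update"
```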

There are two ways to use our stream connector with Splunk. The first, and probably most common, uses the Splunk Universal Forwarder. The second uses Splunk HEC (HTTP Event Collector).

With the Universal Forwarder method, you use "Centreon4Splunk", which comes with:

Thanks to lkco!

With the HEC method, two Lua scripts are provided:

- splunk-events-luacurl.lua that sends states to Splunk.

- splunk-metrics-luacurl.lua that sends metrics to Splunk.

An HTTP Event Collector must be configured in the Splunk data inputs.
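Before configuring the connector, you can check the collector and its token with a hand-made event. HEC expects the token in an `Authorization: Splunk <token>` header. The sketch below builds a minimal payload; `hec_payload` is a hypothetical helper, `your.splunk.server` is a placeholder, the message is not JSON-escaped, and the index must be one the token is allowed to write to.

```shell
#!/usr/bin/env bash
# Build a minimal HEC event payload.
# The "host" field is what the optional splunk_host parameter presumably overrides.
# hec_payload <index> <host> <message> [epoch_seconds]
hec_payload() {
  local ts="${4:-$(date +%s)}"
  printf '{"time":%s,"host":"%s","index":"%s","event":{"message":"%s"}}\n' \
    "$ts" "$2" "$1" "$3"
}

hec_payload main Poller-ABC "HEC test from Centreon"

# Sending it (not run here):
#   hec_payload main Poller-ABC "HEC test" |
#     curl -s "http://your.splunk.server:8088/services/collector" \
#          -H "Authorization: Splunk <your hec token>" --data-binary @-
# Splunk answers {"text":"Success","code":0} when the event is accepted.
```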

Log in as root on the Centreon central server using your favorite SSH client.

If your Centreon central server must use a proxy server to reach the Internet, export the https_proxy environment variable and configure yum so that it can install everything:

export https_proxy=http://my.proxy.server:3128

echo "proxy=http://my.proxy.server:3128" >> /etc/yum.conf

Now that your Centreon central server is able to reach the Internet, you can run:

yum install -y lua-curl

This package is necessary for the script to run.

Then copy the splunk-events-luacurl.lua and splunk-metrics-luacurl.lua scripts to /usr/share/centreon-broker/lua.

Here are the steps to configure your stream connector for the Events:

- Add a new "Generic - Stream connector" output to the central broker in the "Configuration / Poller / Broker configuration" menu.

- Name it as you want and set the right path:

| Name | Splunk Events |
|---|---|
| Path | /usr/share/centreon-broker/lua/splunk-events-luacurl.lua |

- Add at least three string parameters containing your Splunk configuration:

| Type | String |
|---|---|
| http_server_url | http://x.x.x.:8088/services/collector |
| splunk_token | your hec token |
| splunk_index | your event index |

Here are the steps to configure your stream connector for the Metrics:

- Add a new "Generic - Stream connector" output to the central broker in the "Configuration / Poller / Broker configuration" menu.

- Name it as you want and set the right path:

| Name | Splunk Metrics |
|---|---|
| Path | /usr/share/centreon-broker/lua/splunk-metrics-luacurl.lua |

- Add at least three string parameters containing your Splunk configuration:

| Type | String |
|---|---|
| http_server_url | http://x.x.x.:8088/services/collector |
| splunk_token | your hec token |
| splunk_index | your metric index |

That's all for now!

Then save your configuration, export it and restart the broker daemon:

systemctl restart cbd

To change the host value in the HTTP POST data, for example to identify which Centreon platform the data comes from, add this parameter:

| Type | String |
|---|---|
| splunk_host | Poller-ABC |

If your Centreon central server has no direct access to Splunk but needs a proxy server, you will have to add a new string parameter:

| Type | String |
|---|---|
| http_proxy_string | http://your.proxy.server:3128 |

The default log_level value of 2 is fine for initial troubleshooting, but it generates a huge amount of logs if you have many hosts. To get fewer log messages, you should add this parameter:

| Type | Number |
|---|---|
| log_level | 1 |

The default log file is /var/log/centreon-broker/stream-connector-splunk-*.log. If it does not suit you, set it with the log_path parameter:

| Type | String |
|---|---|
| log_path | /var/log/centreon-broker/my-custom-logfile.log |

The stream connector sends the check results received from Centreon Engine to ServiceNow. Only the host and service check results are sent.

This stream connector is in BETA because it has not yet been used long enough in production environments.

This stream connector needs the lua-curl library, available for example through luarocks:

luarocks install lua-curl

In Configuration > Pollers > Broker configuration, you need to modify the Central Broker Master configuration.

Add an output whose type is Stream Connector. Choose a name for your configuration. Enter the path to the connector-servicenow.lua file.

Configure the Lua parameters with the following information:

| Name | Type | Description |
|---|---|---|
| client_id | String | The client id for OAuth authentication |
| client_secret | String | The client secret for OAuth authentication |
| username | String | Username for OAuth authentication |
| password | Password | Password for OAuth authentication |
| instance | String | The ServiceNow instance |
| logfile | String | The log file with its full path (optional) |

The following table describes the matching information between Centreon and the ServiceNow Event Manager.

Host event

| Centreon | ServiceNow Event Manager field | Description |
|---|---|---|
| hostname | node | The hostname |
| output | description | The Centreon Plugin output |
| last_check | time_of_event | The time of the event |
| hostname | resource | The hostname |
| status | severity | The level of severity depends on the host status |

Service event

| Centreon | ServiceNow Event Manager field | Description |
|---|---|---|
| hostname | node | The hostname |
| output | description | The Centreon Plugin output |
| last_check | time_of_event | The time of the event |
| service_description | resource | The service name |
| status | severity | The level of severity depends on the service status |
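The mapping tables can be read as one JSON record per event. The sketch below builds such a record from Centreon values; `sn_event` is a hypothetical helper, the severity is passed through unchanged because the status-to-severity mapping is not documented here, and the `yyyy-MM-dd HH:mm:ss` UTC format for time_of_event is an assumption. It relies on GNU `date` and does not JSON-escape the plugin output.

```shell
#!/usr/bin/env bash
# sn_event <hostname> <resource> <output> <last_check_epoch> <severity>
# resource is the hostname for a host event, the service description for a service event.
sn_event() {
  local when
  # Convert last_check (epoch seconds) to a UTC date/time string.
  when="$(date -u -d "@$4" '+%Y-%m-%d %H:%M:%S')"
  printf '{"node":"%s","resource":"%s","description":"%s","time_of_event":"%s","severity":"%s"}\n' \
    "$1" "$2" "$3" "$when" "$5"
}

sn_event srv-web-01 HTTP "HTTP OK - 200" 1700000000 5

# Getting an OAuth token (not run here) goes through the standard
# ServiceNow endpoint of your instance, with the parameters listed above:
#   curl -s "https://$instance.service-now.com/oauth_token.do" \
#     -d grant_type=password -d client_id="$client_id" \
#     -d client_secret="$client_secret" \
#     -d username="$username" -d password="$password"
```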

NDO protocol is no longer supported by Centreon Broker. It has been replaced by BBDO (lower network footprint, automatic compression and encryption). However, it is possible to emulate the historical NDO protocol output with this stream connector.

Parameters to specify in the broker output web UI are:

- ipaddr as string: the IP address of the listening server

- port as number: the listening server port

- max-row as number: the number of events to store before sending the data

By default, logs are written to /var/log/centreon-broker/ndo-output.log.

- lua version >= 5.1.4

- install the lua-socket library (http://w3.impa.br/~diego/software/luasocket/)

- if installing from sources, you also need the gcc and lua-devel packages

Create a broker output for HP OMI Connector

Parameters to specify in the broker output web ui are:

- ipaddr as string: the IP address of the listening server

- port as number: the listening server port

- logfile as string: where to send logs

- loglevel as number: the log level (0, 1, 2, 3), where 3 is the maximum level

- max_size as number: how many events to store before sending them to the server

- max_age as number: flush the events when the specified time (in seconds) is reached (even if max_size is not reached)

Log in as root on the Centreon central server using your favorite SSH client.

If your Centreon central server must use a proxy server to reach the Internet, export the https_proxy environment variable and configure yum so that it can install everything:

export https_proxy=http://my.proxy.server:3128

echo "proxy=http://my.proxy.server:3128" >> /etc/yum.conf

Now that your Centreon central server is able to reach the Internet, you can run:

yum install -y lua-curl epel-release

yum install -y luarocks

luarocks install luaxml

These packages are necessary for the script to run. Now download the script:

wget -O /usr/share/centreon-broker/lua/bsm_connector.lua https://raw.githubusercontent.com/centreon/centreon-stream-connector-scripts/master/bsm/bsm_connector.lua

chmod 644 /usr/share/centreon-broker/lua/bsm_connector.lua

The BSM stream connector is now installed on your Centreon central server!

Create a broker output for HP BSM Connector.

Parameters to specify in the broker output web UI are:

- source_ci (string): name of the transmitter, usually the Centreon server name

- http_server_url (string): the full HTTP URL. Default: https://my.bsm.server:30005/bsmc/rest/events/ws-centreon/

- http_proxy_string (string): the full proxy URL if needed to reach the BSM server. Default: empty.

- log_path (string): the log file to use

- log_level (number): the log level (0, 1, 2, 3), where 3 is the maximum level. 0 logs almost nothing. 1 logs only the beginning of the script and errors. 2 logs a reasonable amount of detail. 3 logs almost everything possible and should be used only for debugging. Recommended value in production: 1.

- max_buffer_size (number): how many events to store before sending them to the server.

- max_buffer_age (number): flush the events when the specified time (in seconds) is reached (even if max_buffer_size is not reached).

The lua-curl and luatz libraries are required by this script:

yum install -y lua-curl epel-release

yum install -y luarocks

luarocks install luatz

Then copy the pagerduty.lua script to /usr/share/centreon-broker/lua.

Here are the steps to configure your stream connector:

- Add a new "Generic - Stream connector" output to the central broker in the "Configuration / Poller / Broker configuration" menu.

- Name it as you want and set the right path:

| Name | pagerduty |
|---|---|
| Path | /usr/share/centreon-broker/lua/pagerduty.lua |

- Add at least one string parameter containing your PagerDuty routing key/token:

| Type | String |
|---|---|
| pdy_routing_key | <type your key here> |

That's all for now!

Then save your configuration, export it and restart the broker daemon:

systemctl restart cbd

If your Centreon central server has no direct access to PagerDuty but needs a proxy server, you will have to add a new string parameter:

| Type | String |
|---|---|
| http_proxy_string | http://your.proxy.server:3128 |

To get working links/URLs in your PagerDuty events, you are encouraged to add this parameter:

| Type | String |
|---|---|
| pdy_centreon_url | http://your.centreon.server |

The default log_level value of 2 is fine for initial troubleshooting, but it generates a huge amount of logs if you have many hosts. To get fewer log messages, you should add this parameter:

| Type | Number |
|---|---|
| log_level | 1 |

The default log file is /var/log/centreon-broker/stream-connector-pagerduty.log. If it does not suit you, set it with the log_path parameter:

| Type | String |
|---|---|
| log_path | /var/log/centreon-broker/my-custom-logfile.log |

To tune the maximum number of events sent in a row for optimization purposes, you may add this parameter:

| Type | Number |
|---|---|
| max_buffer_size | 10 (default value) |

To shorten the delay (in seconds) between the reception of an event and its transmission to PagerDuty, you can set this parameter:

| Type | Number |
|---|---|
| max_buffer_age | 30 (default value) |
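To check a routing key before wiring the connector, you can send a test event to the PagerDuty Events API v2, which takes a `routing_key`, an `event_action` and a `payload` with `summary`, `source` and `severity` (critical, error, warning or info). The sketch below builds a trigger event; `pd_event` is a hypothetical helper, the values are placeholders, and the text is not JSON-escaped.

```shell
#!/usr/bin/env bash
# pd_event <routing_key> <summary> <source> <severity>
pd_event() {
  # Reject severities the Events API v2 does not accept.
  case "$4" in
    critical|error|warning|info) ;;
    *) echo "invalid severity: $4" >&2; return 1 ;;
  esac
  printf '{"routing_key":"%s","event_action":"trigger","payload":{"summary":"%s","source":"%s","severity":"%s"}}\n' \
    "$1" "$2" "$3" "$4"
}

pd_event YOUR_ROUTING_KEY "Test event from Centreon" srv-web-01 info

# Sending it (not run here); add -x http://your.proxy.server:3128 if you need a proxy:
#   pd_event "$key" "Test event" srv-web-01 info |
#     curl -s -X POST https://events.pagerduty.com/v2/enqueue \
#          -H 'Content-Type: application/json' --data-binary @-
```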

Canopsis

This script uses the stream connector mechanism of Centreon to get events from the pollers. Each event is then translated into a Canopsis event and sent to the HTTP REST API.

This connector follows the best practices of the Centreon documentation (see the links listed in the first section).

The script is written in Lua, as imposed by the stream connector specification.

It gets all the events from Centreon and converts them to a Canopsis-compatible JSON format.

Filtered events are sent to the Canopsis HTTP API in chunks to reduce the number of connections.

The filtered events are:

- acknowledgment events (category 1, element 1)

- downtime events (category 1, element 5)

- host events (category 1, element 14)

- service events (category 1, element 24)

Extra information is added to hosts and services as below:

- action_url

- notes_url

- hostgroups

- servicegroups (for service events)

Two kinds of acks are sent to Canopsis:

- Ack creation

- Ack deletion

An ack is set on the resource/component reference.

Two kinds of downtimes are sent to Canopsis as "pbehavior":

- Downtime creation

- Downtime cancellation

A unique ID is generated from the downtime information provided by Centreon.

Note: recurrent downtimes are not implemented by the stream connector yet.

All HARD host events with a state change are sent to Canopsis.

Note the state mapping below:

| Centreon | Canopsis |
|---|---|
| UP (0) | INFO (0) |
| DOWN (1) | CRITICAL (3) |
| UNREACHABLE (2) | MAJOR (2) |

All HARD service events with a state change are sent to Canopsis.

Note the state mapping below:

| Centreon | Canopsis |
|---|---|
| OK (0) | INFO (0) |
| WARNING (1) | MINOR (1) |
| CRITICAL (2) | CRITICAL (3) |
| UNKNOWN (3) | MAJOR (2) |
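The two state mappings can be written as a single lookup, which is handy for checking what a given Centreon state becomes in the Canopsis alarm view. This is a sketch of the documented tables, not the connector's Lua code; `canopsis_state` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# canopsis_state <host|service> <centreon_state>
canopsis_state() {
  case "$1:$2" in
    host:0|service:0) echo 0 ;;  # UP / OK      -> INFO
    host:1)           echo 3 ;;  # DOWN         -> CRITICAL
    host:2)           echo 2 ;;  # UNREACHABLE  -> MAJOR
    service:1)        echo 1 ;;  # WARNING      -> MINOR
    service:2)        echo 3 ;;  # CRITICAL     -> CRITICAL
    service:3)        echo 2 ;;  # UNKNOWN      -> MAJOR
    *) echo "unknown state: $1 $2" >&2; return 1 ;;
  esac
}

canopsis_state service 1  # prints 1
```

Note that the mapping is not monotonic: UNKNOWN and UNREACHABLE land on MAJOR (2), below CRITICAL (3).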

- lua version >= 5.1.4

- install the lua-socket library (http://w3.impa.br/~diego/software/luasocket/), version >= 3.0rc1-2, available in the Canopsis repository (if installing from sources, you also need the gcc and lua-devel packages)

- centreon-broker version 19.10.5 or >= 20.04.2

Software deployment from sources (centreon-broker 19.10.5 or >= 20.04.2):

- Copy the Lua script bbdo2canopsis.lua from the canopsis directory to /usr/share/centreon-broker/lua/bbdo2canopsis.lua

- Change the ownership of this file:

chown centreon-engine:centreon-engine /usr/share/centreon-broker/lua/bbdo2canopsis.lua

Software deployment from packages (centreon-broker >= 20.04.2):

- Install the Canopsis repository first:

echo "[canopsis]
name = canopsis
baseurl=https://repositories.canopsis.net/pulp/repos/centos7-canopsis/
gpgcheck=0
enabled=1" > /etc/yum.repos.d/canopsis.repo

- Install the connector with yum:

yum install canopsis-connector-centreon-stream-connector

Enable the connector:

- add a new "Generic - Stream connector" output on the central-broker-master (see the official documentation)

- export the poller configuration (see the official documentation)

- restart the services: systemctl restart cbd centengine gorgoned

If you modify this script in development mode (directly on the Centreon host), you will need to restart the Centreon services (at least the centengine service).

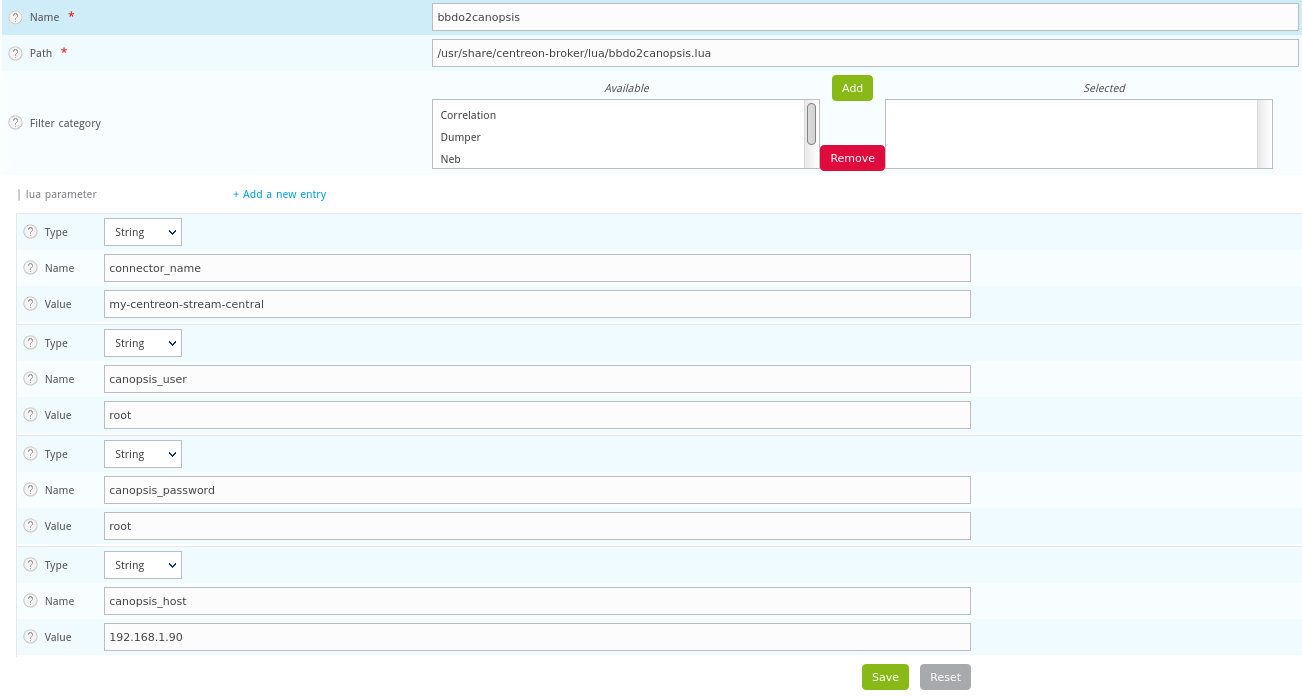

All the configuration can be done through the Centreon interface, as described in the official documentation.

The main parameters you have to set are:

connector_name = "your connector source name"

canopsis_user = "your Canopsis API user"

canopsis_password = "your Canopsis API password"

canopsis_host = "your Canopsis host"

If you want to customize your queue parameters (optional):

max_buffer_age = 60 -- retention queue time before sending data

max_buffer_size = 10 -- buffer size in number of events

The init spread timer (optional):

init_spread_timer = 360 -- time in seconds over which events are spread when the connector starts

This timer is used when the connector starts.

During this time, the connector sends all HARD state events (whether or not the state changed) to synchronize event information from Centreon to Canopsis. This way, the information in both tools converges.

This implies a burst of events and a higher load on the server during this time.

In the Centreon web UI, you can set these parameters as follows:

In Configuration > Pollers > Broker configuration > central-broker-master > Output > Select "Generic - Stream connector" > Add

By default, the connector uses the HTTP REST API of Canopsis to send events.

Check your alarm view to see the events from Centreon.

All logs are written to the default log file "/var/log/centreon-broker/debug.log".

You can also dump all Canopsis events to a raw log file and send them your own way (for example with Logstash): edit the "sending_method" variable and set it to "file".