The OpenVX framework provides a mechanism for third-party vendors to add new vision functions to OpenVX. This project contains the OpenVX modules and utilities listed below, which extend the amdovx-core project containing the AMD OpenVX Core Engine.

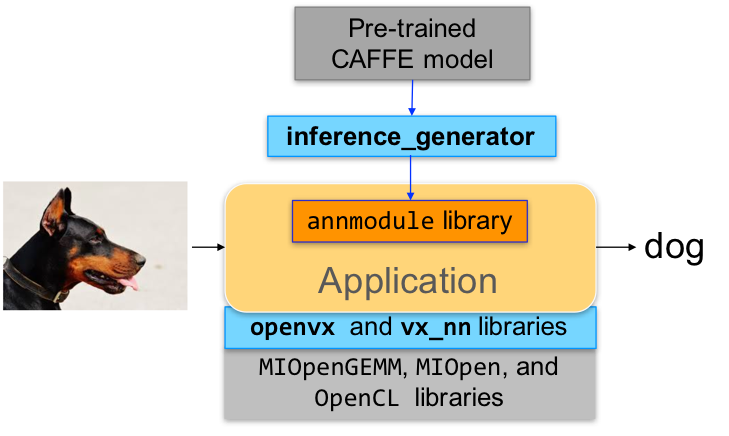

- vx_nn: OpenVX neural network module

- inference_generator: generates an inference library from pre-trained Caffe models

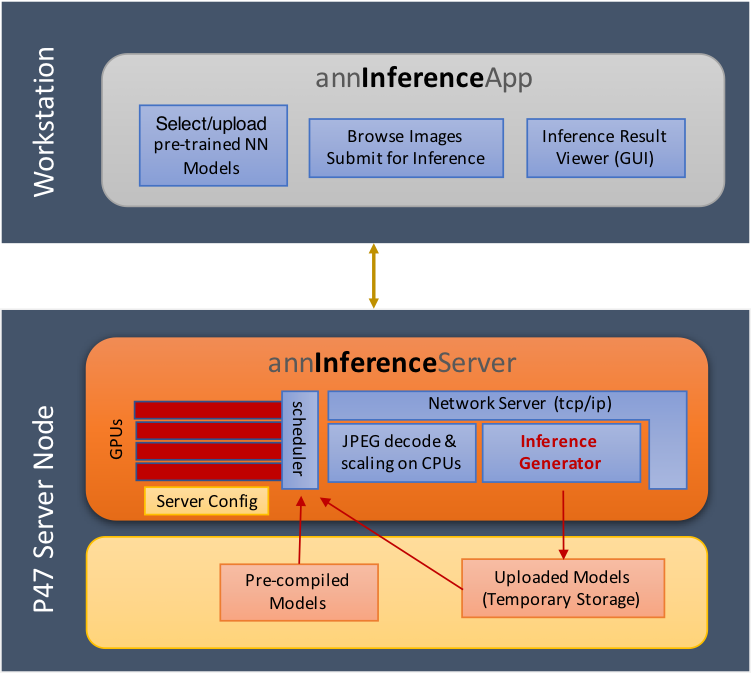

- annInferenceServer: sample Inference Server

- annInferenceApp: sample Inference Client Application

- vx_loomsl: Radeon LOOM stitching library for live 360-degree video applications

- loom_shell: an interpreter to prototype 360-degree video stitching applications using a script

- vx_opencv: OpenVX module that implements a mechanism to access OpenCV functionality as OpenVX kernels

If you're interested in Neural Network Inference, start with the sample inference application.

Figures: *Inference Application Development Workflow* and *Sample Inference Application*.

Refer to the Wiki page for further details.

Prerequisites:

- CPU: 64-bit CPU with SSE4.1 or later

- GPU: Radeon Instinct or Vega Family of Products (16GB recommended)

- CMake 2.8 or newer

- Qt Creator for annInferenceApp

- protobuf for inference_generator

  - install `libprotobuf-dev` and `protobuf-compiler` (needed for vx_nn)

- OpenCV 3 (optional) for vx_opencv

  - set the OpenCV_DIR environment variable to the OpenCV/build folder
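A quick way to see which of these prerequisites are already present is a small POSIX shell check like the one below. It is only a sketch: it reports and does not install anything, it assumes x86-64 Linux (where CPU feature flags appear in `/proc/cpuinfo`), and it looks for the `protoc` binary provided by `protobuf-compiler` rather than for the `libprotobuf-dev` headers. The helper name `check_cmd` is hypothetical.

```shell
#!/bin/sh
# Sketch: report (but do not install) some of the prerequisites listed above.
# Assumes x86-64 Linux, where CPU feature flags are listed in /proc/cpuinfo.

# Print "<name>: found" if the command is on PATH, else "<name>: missing"
check_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1: found"
  else
    echo "$1: missing"
  fi
}

check_cmd cmake
check_cmd protoc    # provided by the protobuf-compiler package

# SSE4.1 support shows up as the "sse4_1" flag
if grep -qw sse4_1 /proc/cpuinfo 2>/dev/null; then
  echo "sse4_1: found"
else
  echo "sse4_1: missing"
fi

# Only needed when building vx_opencv
if [ -n "${OpenCV_DIR:-}" ]; then
  echo "OpenCV_DIR: $OpenCV_DIR"
else
  echo "OpenCV_DIR: not set"
fi
```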

Refer to the Wiki page for developer instructions.

To build on Linux:

- git clone, build, and install the following ROCm projects (using `cmake` and `% make install`), in the order below, for vx_nn:

  - rocm-cmake

  - MIOpenGEMM

  - MIOpen: make sure to pass the `-DMIOPEN_BACKEND=OpenCL` option to cmake

- git clone this project using the `--recursive` option so that the correct branch of the amdovx-core project is cloned automatically into the deps folder.

- build and install (using `cmake` and `% make install`):

  - executables will be placed in the `bin` folder

  - libraries will be placed in the `lib` folder

  - the installer copies all executables into `/opt/rocm/bin` and libraries into `/opt/rocm/lib`

  - the installer also copies all the OpenVX and module header files into the `/opt/rocm/include` folder


- add the installed library path to the LD_LIBRARY_PATH environment variable (default `/opt/rocm/lib`)

- add the installed executable path to the PATH environment variable (default `/opt/rocm/bin`)

- build annInferenceApp.pro using Qt Creator, or use annInferenceApp.py for simple tests
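The Linux steps above can be collected into one POSIX shell script. This is a minimal sketch, not an official build script: the GitHub URLs are assumptions based on where these projects were historically hosted, `sudo` is assumed to be needed for `make install`, and the `DRY_RUN` and `ROCM_PREFIX` variables and the `run` and `cmake_install` helpers are hypothetical names introduced here. By default it only prints the commands it would run.

```shell
#!/bin/sh
# Sketch of the Linux build sequence: ROCm dependencies, this project, environment.
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 executes them.
# Repository URLs are assumptions (historical GitHub locations).
set -eu

DRY_RUN="${DRY_RUN:-1}"
ROCM_PREFIX="${ROCM_PREFIX:-/opt/rocm}"   # should match the install location

# Print the command in dry-run mode, otherwise execute it in the current shell
run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# cmake_install DIR [extra cmake options...]: configure, build and install DIR
cmake_install() {
  ci_dir="$1"
  shift
  run mkdir -p "$ci_dir/build"
  run cd "$ci_dir/build"
  run cmake .. "$@"
  run make
  run sudo make install   # root assumed to be needed for the install prefix
  run cd ../..
}

# 1. ROCm dependencies of vx_nn, in this order
run git clone https://github.com/RadeonOpenCompute/rocm-cmake.git
cmake_install rocm-cmake
run git clone https://github.com/ROCmSoftwarePlatform/MIOpenGEMM.git
cmake_install MIOpenGEMM
run git clone https://github.com/ROCmSoftwarePlatform/MIOpen.git
cmake_install MIOpen -DMIOPEN_BACKEND=OpenCL   # OpenCL backend is required

# 2. This project; --recursive also fetches amdovx-core into the deps folder
run git clone --recursive https://github.com/GPUOpen-ProfessionalCompute-Libraries/amdovx-modules.git
cmake_install amdovx-modules

# 3. Make installed libraries and executables visible (prepend, and avoid an
#    empty LD_LIBRARY_PATH entry when the variable was previously unset)
export LD_LIBRARY_PATH="$ROCM_PREFIX/lib${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"
export PATH="$ROCM_PREFIX/bin:$PATH"
```

Run it once as is (for example `sh build-all.sh`, a hypothetical file name) to review the command list, then with `DRY_RUN=0` to execute it. The `run` wrapper calls commands in the current shell rather than a subshell so that its `cd` steps take effect during a real build. The final `export` lines only affect the running script unless it is sourced; add equivalent lines to `~/.bashrc` to make them persistent.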

To build on Windows:

- use loom.sln in Visual Studio to build the x64 platform