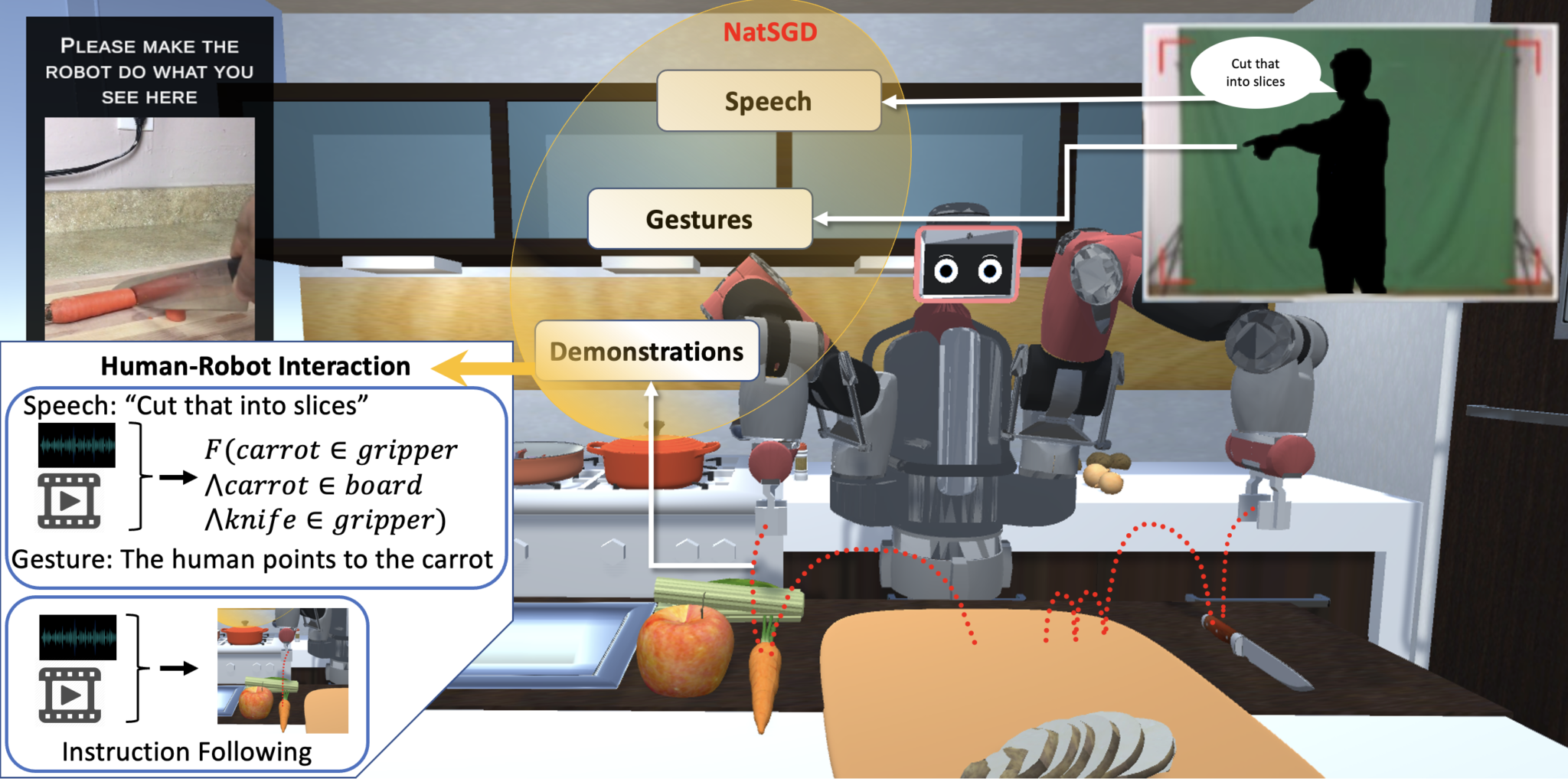

NatSGD Dataset: Human Communication to Linear Temporal Logic (Comm2LTL) Benchmarking. This repository contains the implementation and models for translating human communication (speech and gestures) into Linear Temporal Logic (LTL) formulas.

1. Prerequisites
2. Download Dataset Files
3. Pre-Training Gesture Encoder
4. Fine-Tuning Speech and Gesture Models
5. Spot Score Calculation
6. Results

We validated the code with Python 3.9 on Linux. Newer Python versions should also work, provided there is enough GPU memory to load the models. Install the prerequisites with:

```shell
python3 -m pip install -r requirements.txt
```

Download the dataset files from this Google Drive link into the `data` folder.

Before fine-tuning the models, we need to pre-train the gesture autoencoder. Below are the steps to prepare the data and pre-train the encoder.

To prepare the LSTM encoder model used for gesture preprocessing, take the following steps:

- Run the `lstmencoder.py` script.
- This generates a `.pth` file that is used later as the gesture motion encoder for the downstream tasks.
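LSTM encoders are usually trained on batches of equal-length sequences, while recorded gestures vary in length. The snippet below is a hedged illustration of one common preparation step, zero-padding each gesture sequence to the longest one in the batch and keeping the true lengths; it is not taken from `lstmencoder.py`, and the function name `pad_gesture_sequences` and the "list of per-frame feature vectors" layout are assumptions.

```python
from typing import List, Tuple

Frame = List[float]  # hypothetical layout: one frame of gesture features


def pad_gesture_sequences(
    sequences: List[List[Frame]], pad_value: float = 0.0
) -> Tuple[List[List[Frame]], List[int]]:
    """Pad variable-length gesture sequences to the length of the longest one.

    Returns the padded batch and the original length of each sequence, which an
    LSTM (e.g. via packed sequences) can use to ignore the padded frames.
    """
    if not sequences:
        return [], []
    lengths = [len(seq) for seq in sequences]
    max_len = max(lengths)
    # Infer the per-frame feature size from the first non-empty sequence.
    feat_dim = next((len(seq[0]) for seq in sequences if seq), 0)
    padded = []
    for seq in sequences:
        pad_frames = [[pad_value] * feat_dim for _ in range(max_len - len(seq))]
        padded.append([list(frame) for frame in seq] + pad_frames)
    return padded, lengths


# Usage: two gestures with 3 and 1 frames of 2-D features.
batch = [[[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]], [[1.0, 1.1]]]
padded, lengths = pad_gesture_sequences(batch)
```

Here `lengths` is `[3, 1]` and the second sequence becomes `[[1.0, 1.1], [0.0, 0.0], [0.0, 0.0]]`. Returning the lengths alongside the padded batch lets a training loop mask out padded frames rather than learning to reconstruct them.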

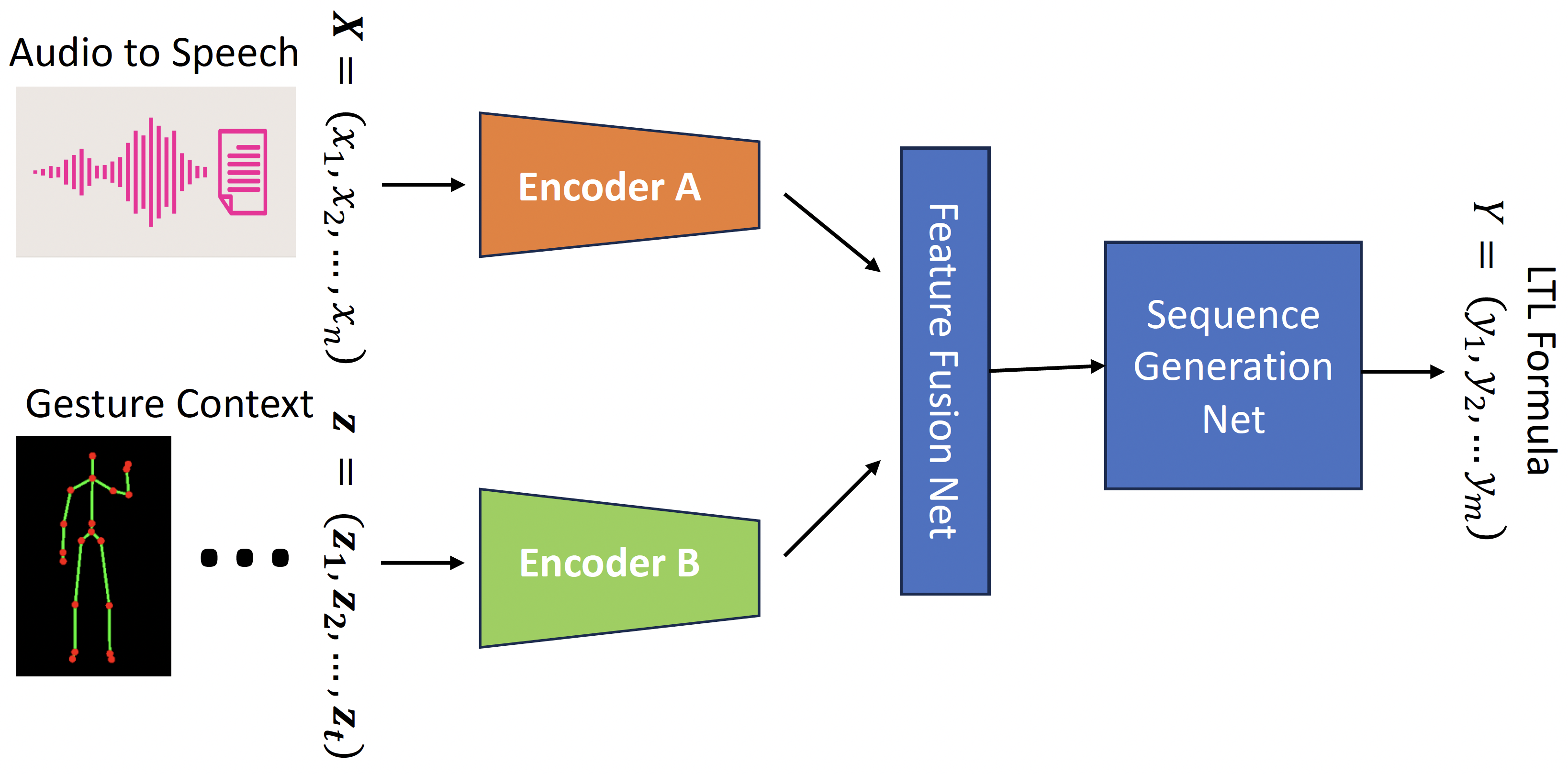

We fine-tune two models, `bart-base` and `t5-base`, on our dataset for each of three input modalities: speech only, gestures only, and speech combined with gestures.

The learning framework translates paired speech and gesture data into an LTL formula, addressing the multi-modal human task understanding problem.

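Fine-tuning two backbones on three modalities gives 2 × 3 = 6 separate runs. The sketch below simply enumerates that grid; the run-name scheme is a hypothetical extrapolation from the `speechGesturesT5` folder name used in the Spot Score step, and the `"BART"` tag in particular is an assumption, not a confirmed folder name.

```python
from itertools import product

# The two pretrained checkpoints named in this README, with a short tag each.
MODELS = {"bart-base": "BART", "t5-base": "T5"}

# The three input modalities; the camelCase prefixes are an assumption that
# extrapolates the "speechGesturesT5" predictions folder name.
MODALITIES = {
    "speech only": "speech",
    "gestures only": "gestures",
    "speech + gestures": "speechGestures",
}


def experiment_grid():
    """Yield (model, modality, hypothetical run name) for every fine-tuning run."""
    for (model, tag), (modality, prefix) in product(MODELS.items(), MODALITIES.items()):
        yield model, modality, f"{prefix}{tag}"


for model, modality, run_name in experiment_grid():
    print(f"{model:10s} {modality:18s} {run_name}")
```

Keeping the grid in one place makes it easy to check that every backbone and modality combination has been trained and evaluated.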

For the Speech-only model, please see:

For the Gesture-only model, please see:

To explore the Speech and Gesture combined model, please see:

To calculate the Spot Score, follow the steps below using the `calc_spot_score.py` script.

- Update the input folder and file name in the code to match your dataset and file structure. For example, the following settings point to the Speech + Gestures T5 test predictions from the epoch-100 model:

  ```python
  import os

  input_folder = os.getcwd() + '/results/predictions/speechGesturesT5/test'
  input_file = input_folder + '/test_data_epoch_100.txt'
  ```

- Run `calc_spot_score.py` to compute the total score for this prediction set.
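The results tables also report a Jaccard similarity score between predicted and reference formulas. The repository's exact tokenization and averaging are not shown here, so the following is only a sketch, assuming token-level Jaccard over whitespace- and parenthesis-separated LTL tokens; `tokenize_ltl` and `jaccard_similarity` are hypothetical names.

```python
def tokenize_ltl(formula: str) -> set:
    """Split an LTL formula into a set of tokens (hypothetical tokenization).

    Parentheses are separated from neighbouring symbols so that "F(a)" and
    "F ( a )" yield the same tokens.
    """
    for paren in "()":
        formula = formula.replace(paren, f" {paren} ")
    return set(formula.split())


def jaccard_similarity(pred: str, ref: str) -> float:
    """|A & B| / |A | B| over the token sets of two formulas (1.0 if both are empty)."""
    a, b = tokenize_ltl(pred), tokenize_ltl(ref)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


# One wrong atom out of seven distinct tokens: 5 shared / 7 total.
score = jaccard_similarity("F(a & F(c))", "F(a & F(b))")
```

Because this set-based version ignores order and repetition, `"F(b & F(a))"` and `"F(a & F(b))"` score a perfect 1.0 even though they are different formulas, which is one reason a high Jaccard similarity does not imply an exactly correct formula.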

| Model (using BART) | Jaccard Sim. ↑ | Spot Score ↑ |
|---|---|---|
| Speech Only | 0.934 | 0.434 |
| Gestures Only | 0.922 | 0.299 |
| Speech + Gestures | 0.944 | 0.588 |

| Model (using T5) | Jaccard Sim. ↑ | Spot Score ↑ |
|---|---|---|
| Speech Only | 0.917 | 0.299 |
| Gestures Only | 0.948 | 0.244 |
| Speech + Gestures | 0.961 | 0.507 |
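The snippet below copies the reported numbers from the two results tables (no new results) and checks, for each backbone, which modality scores highest and how much the combined input improves the Spot Score over the better single modality. The dictionary layout and helper names are illustrative only.

```python
# Scores copied from the results tables: (Jaccard similarity, Spot Score).
RESULTS = {
    "BART": {"Speech Only": (0.934, 0.434), "Gestures Only": (0.922, 0.299),
             "Speech + Gestures": (0.944, 0.588)},
    "T5": {"Speech Only": (0.917, 0.299), "Gestures Only": (0.948, 0.244),
           "Speech + Gestures": (0.961, 0.507)},
}


def best_modality(model: str, metric: int) -> str:
    """Return the modality with the highest score (metric 0 = Jaccard, 1 = Spot)."""
    scores = RESULTS[model]
    return max(scores, key=lambda modality: scores[modality][metric])


def spot_gain_over_best_single(model: str) -> float:
    """Spot Score gain of Speech + Gestures over the better single modality."""
    scores = RESULTS[model]
    best_single = max(scores["Speech Only"][1], scores["Gestures Only"][1])
    return round(scores["Speech + Gestures"][1] - best_single, 3)


for model in RESULTS:
    print(model, best_modality(model, 0), best_modality(model, 1),
          spot_gain_over_best_single(model))
```

With the reported values, Speech + Gestures is highest on both metrics for both backbones, with Spot Score gains of 0.154 (BART, over Speech Only) and 0.208 (T5, over Speech Only).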

For additional information or inquiries, please contact us. Thank you for using the NatSGD dataset and the Comm2LTL benchmark!