- Sovits 2.0 inference demo is available!

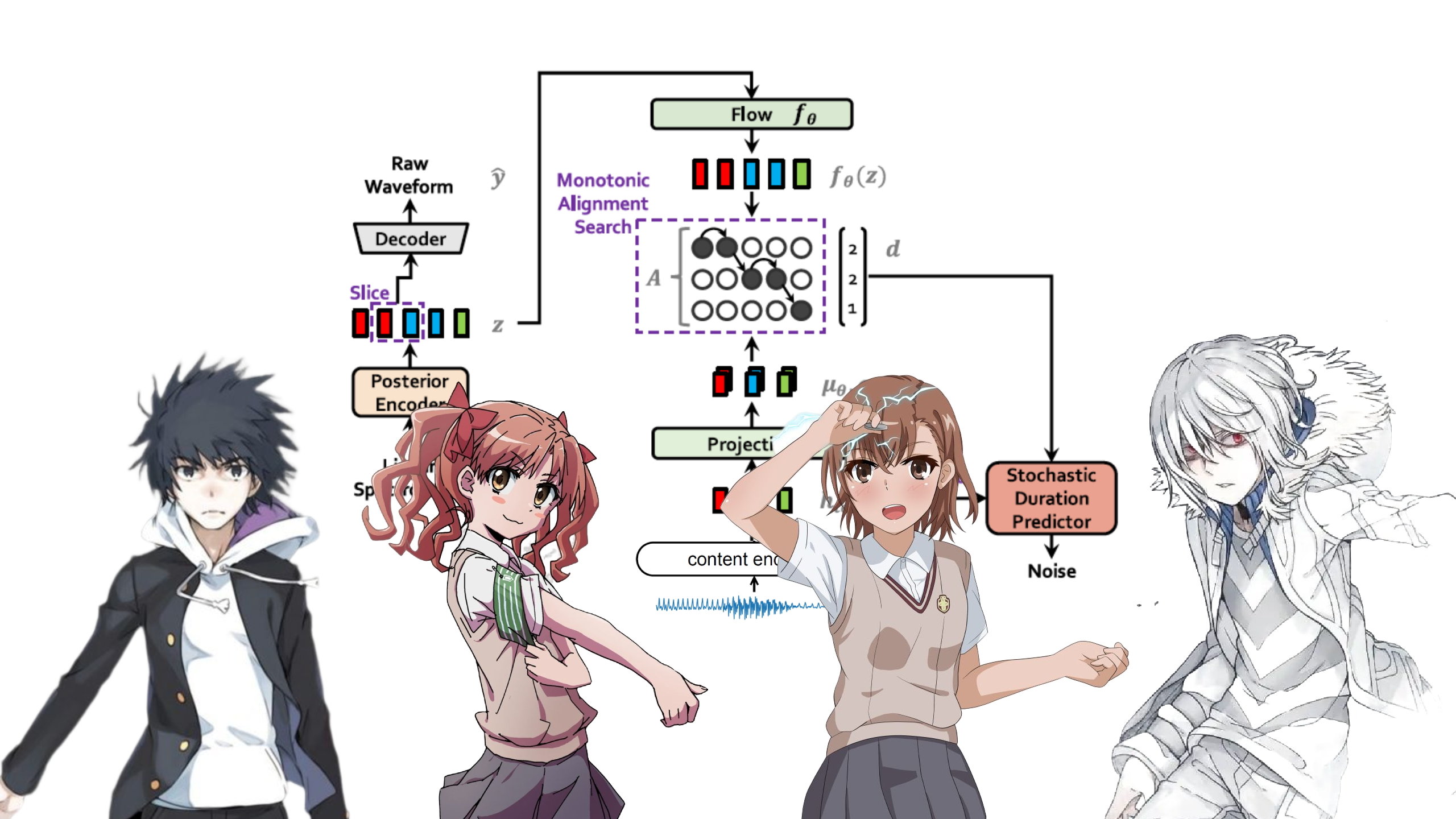

Inspired by Rcell, I replaced the word embedding of the TextEncoder in VITS with the output of the ContentEncoder used in Soft-VC to achieve any-to-one voice conversion with non-parallel data. Of course, any-to-many voice conversion is also doable!

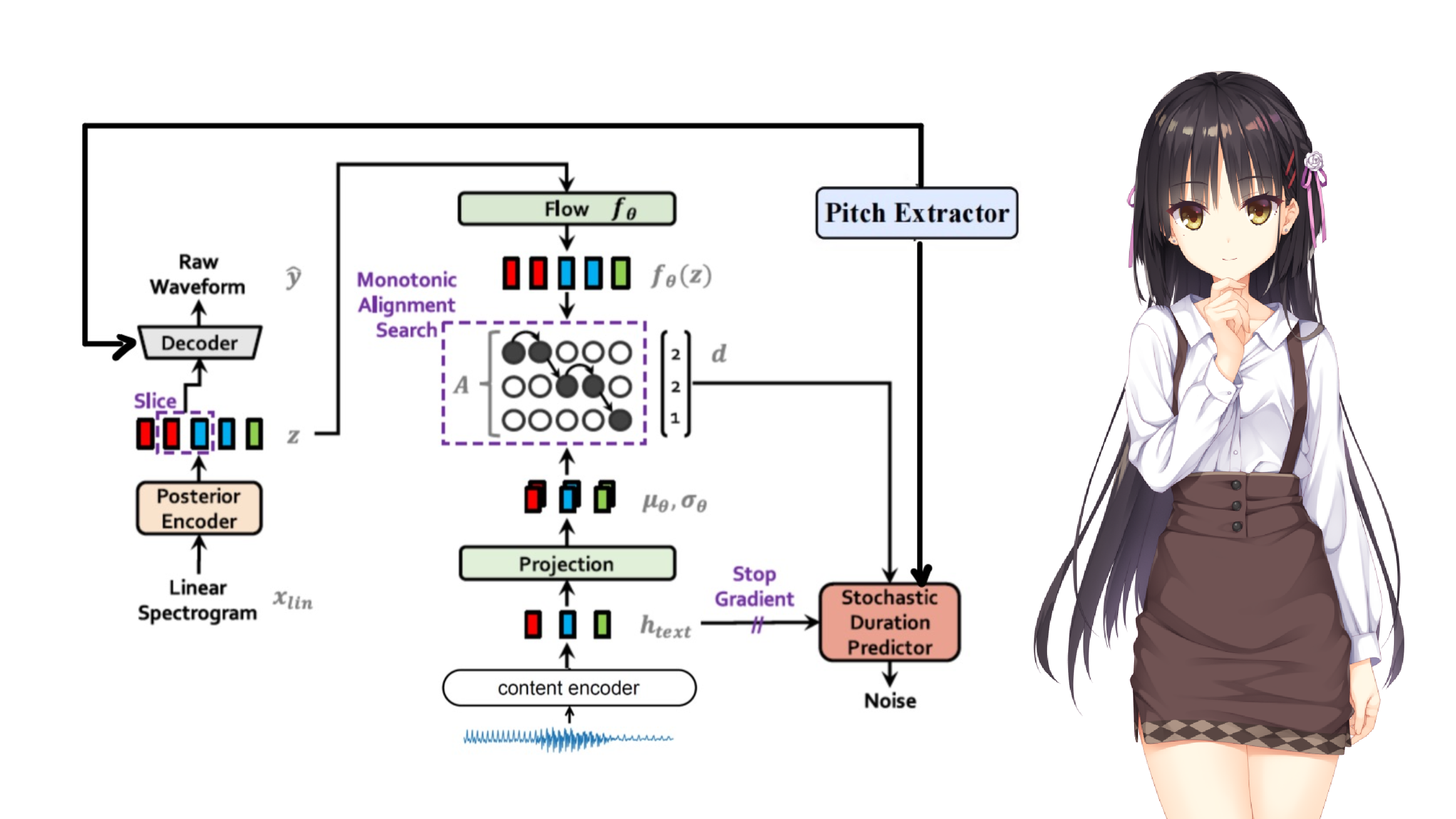

For better voice quality, in Sovits2 I use the F0 model from StarGANv2-VC to extract the fundamental frequency (F0) of the input audio and feed it to the vocoder of VITS.

- Description

| Speaker | ID |
|---|---|
| 一方通行 | 0 |
| 上条当麻 | 1 |
| 御坂美琴 | 2 |
| 白井黑子 | 3 |

- Model: Google drive
- Config: in this repository
- Demo
  - Colab: Sovits (魔法禁书目录)
  - BILIBILI: 基于Sovits的4人声音转换模型

- Description

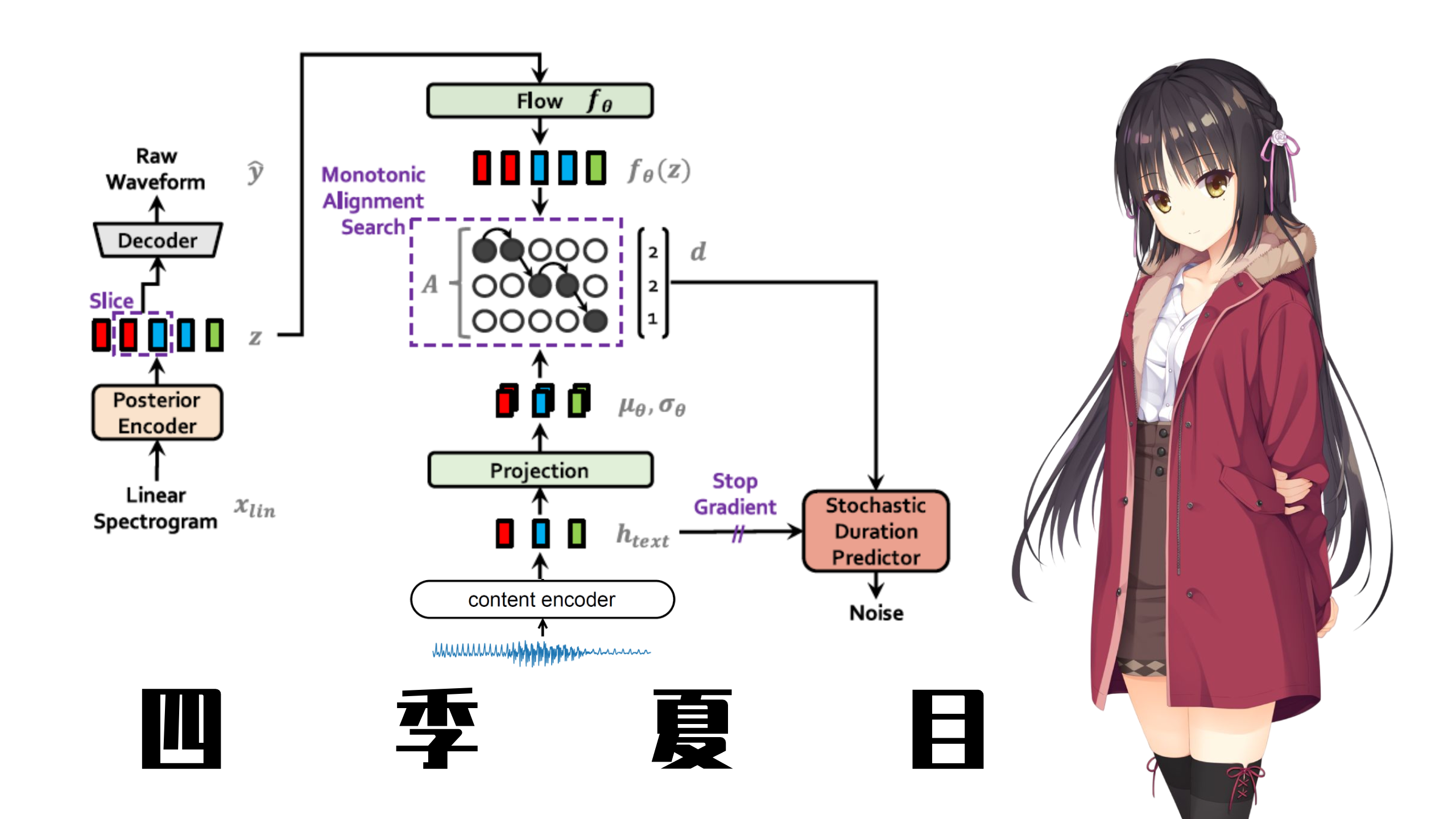

Single-speaker model of Shiki Natsume.

- Model: Google drive
- Config: in this repository
- Demo
  - Colab: Sovits (四季夏目)
  - BILIBILI: 枣子姐变声器

- Description

Single-speaker model of Shiki Natsume, trained with the F0 feature.

- Model: Google drive
- Config: in this repository
- Demo
  - Colab: Sovits2 (四季夏目)

Audio should be mono WAV files with a sampling rate of 22050 Hz.
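Before training, it can help to confirm that every file meets this requirement. The following is a minimal sketch using only Python's standard `wave` module; the helper names `describe_wav` and `is_training_ready` are hypothetical and not part of this repository.

```python
import wave

EXPECTED_CHANNELS = 1   # mono, as required above
EXPECTED_RATE = 22050   # sampling rate in Hz, as required above


def describe_wav(path):
    """Return (channels, sample_rate) read from the WAV header."""
    with wave.open(str(path), "rb") as wav:
        return wav.getnchannels(), wav.getframerate()


def is_training_ready(path):
    """Return True if the file is mono and sampled at 22050 Hz."""
    return describe_wav(path) == (EXPECTED_CHANNELS, EXPECTED_RATE)
```

Note that the `wave` module only reads uncompressed PCM files, so other WAV encodings raise `wave.Error` instead of returning a result. Files that fail the check need to be resampled or downmixed with an audio tool of your choice.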

Your dataset should be organized like this:

    └───wavs
        ├───dev
        │   ├───LJ001-0001.wav
        │   ├───...
        │   └───LJ050-0278.wav
        └───train
            ├───LJ002-0332.wav
            ├───...
            └───LJ047-0007.wav
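If your recordings start out in a single flat folder, one way to arrive at the `train`/`dev` layout above is a seeded random split. This is only a sketch: the function name `split_train_dev`, the `dev_ratio` default, and the seed are assumptions, and the repository does not prescribe how the split is made.

```python
import random


def split_train_dev(names, dev_ratio=0.1, seed=0):
    """Split file names into (train, dev) lists, reproducibly for a given seed."""
    shuffled = sorted(names)               # sort first so input order does not matter
    random.Random(seed).shuffle(shuffled)  # seeded shuffle for a repeatable split
    n_dev = max(1, round(len(shuffled) * dev_ratio))
    return shuffled[n_dev:], shuffled[:n_dev]
```

The function only decides which file goes where; moving the files into `wavs/train` and `wavs/dev` is left to you.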

Use the content encoder to extract speech units from the audio.

For more information, refer to this repo.

    cd hubert
    python3 encode.py soft path/to/wavs/directory path/to/soft/directory --extension .wav

Then you need to generate filelists for both your training and validation files. It's recommended that you prepare your filelists beforehand!

Your filelists should look like:

Single speaker:

    path/to/wav|path/to/unit
    ...

Multi-speaker:

    path/to/wav|id|path/to/unit
    ...
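The filelists can be written by hand, but a short script is less error-prone. The sketch below pairs each wav with its unit file and emits lines in the two formats shown above. It assumes the unit files share the wav file's stem and use a `.npy` extension; check the output of `encode.py` and adjust `unit_ext` if yours differ. The function name `build_filelist` is hypothetical.

```python
from pathlib import Path


def build_filelist(wav_dir, unit_dir, speaker_id=None, unit_ext=".npy"):
    """Return filelist lines pairing each wav with its extracted unit file.

    With speaker_id=None the single-speaker format is produced;
    otherwise the id is inserted as the middle field.
    """
    lines = []
    for wav in sorted(Path(wav_dir).glob("*.wav")):
        unit = Path(unit_dir) / (wav.stem + unit_ext)
        if not unit.exists():
            continue  # skip audio whose units have not been extracted
        fields = [wav.as_posix()]
        if speaker_id is not None:
            fields.append(str(speaker_id))
        fields.append(unit.as_posix())
        lines.append("|".join(fields))
    return lines
```

Calling it once for the training folder and once for the validation folder, then saving each result with `"\n".join(lines)`, gives the two filelists. For a multi-speaker dataset, call it once per speaker with that speaker's ID and concatenate the results.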

To train a single-speaker model:

    python train.py -c configs/config.json -m model_name

To train a multi-speaker model:

    python train_ms.py -c configs/config.json -m model_name

You may also refer to train.ipynb.

For inference, please refer to inference.ipynb.

- Add F0 model

- Add F0 loss

QQ: 2235306122

BILIBILI: Francis-Komizu

Special thanks to Rcell for giving me both inspiration and advice!