This repository provides an implementation of dynamical systems, function approximators, dynamical movement primitives, and black-box optimization with evolution strategies, in particular the optimization of the parameters of dynamical movement primitives.

Version 2 of dmpbbo was released in June 2022. If you still require the previous API, or the C++ implementations of training and optimization that used XML as the serialization format, please use v1.0.0: https://github.com/stulp/dmpbbo/tree/v1.0.0

This library may be useful for you if you

- are interested in the theory behind dynamical movement primitives and their optimization. Then the tutorials are the best place to start.

- already know about dynamical movement primitives and reinforcement learning, but would rather use existing code than brew your own. In this case, demos/ is a good starting point, as the demos provide examples of how to use the code.

- want to run the optimization of DMPs on a real robot. In this case, go right ahead to demos/robot/. Reading the tutorials and inspecting the demos beforehand will help you understand demos/robot/.

- want to develop and contribute. If you want to delve deeper into the functionality of the code, the doxygen documentation of the API is for you. See INSTALL.md on how to generate it.

Here is a quick look at the code functionality for training and real-time execution of dynamical movement primitives (see demo_dmp_training.py for the full source code):

```python
# Train a DMP with a trajectory
traj = Trajectory.loadtxt("trajectory.txt")
function_apps = [FunctionApproximatorRBFN(10, 0.7) for _ in range(traj.dim)]
dmp = Dmp.from_traj(traj, function_apps, dmp_type="KULVICIUS_2012_JOINING")

# Numerical integration
dt = 0.001
n_time_steps = int(1.3 * traj.duration / dt)
x, xd = dmp.integrate_start()
for tt in range(1, n_time_steps):
    x, xd = dmp.integrate_step(dt, x)
    # Convert complete DMP state to end-eff state
    y, yd, ydd = dmp.states_as_pos_vel_acc(x, xd)

# Save the DMP to a json file that can be read in C++
json_for_cpp.savejson_for_cpp("dmp_for_cpp.json", dmp)
```

The JSON file saved above can be read in C++ and integrated in real time as follows (see demoDmp.cpp for the full source code, including memory allocation):

```cpp
ifstream file("dmp_for_cpp.json");
Dmp* dmp = json::parse(file).get<Dmp*>();

// Allocate memory for x/xd/y/yd/ydd
dmp->integrateStart(x, xd);
double dt = 0.001;
for (double t = 0.0; t < 2.0; t+=dt) {
    dmp->integrateStep(dt, x, x, xd);
    // Convert complete DMP state to end-eff state
    dmp->stateAsPosVelAcc(x, xd, y, yd, ydd);
}
```

For our own use, the aims of coding this were the following:

- Allowing easy and modular exchange of different dynamical systems within dynamical movement primitives.

- Allowing easy and modular exchange of different function approximators within dynamical movement primitives.

- Being able to compare different exploration strategies (e.g. covariance matrix adaptation vs. exploration decay) when optimizing dynamical movement primitives.

- Enabling the optimization of different parameter subsets of function approximators.

- Running dynamical movement primitives in the control loop on real robots.

How to install the libraries, binaries, and documentation is described in INSTALL.md.

The core functionality is in the Python package dmpbbo/. It contains five subpackages:

- dmpbbo/functionapproximators: defines a generic interface for function approximators, as well as several specific implementations (weighted least-squares regression (WLS), radial basis function networks (RBFN), and locally-weighted regression (LWR)).

- dmpbbo/dynamicalsystems: defines a generic interface for dynamical systems, as well as several specific implementations (exponential system, sigmoid system, spring-damper system, etc.).

- dmpbbo/dmp: implementation of dynamical movement primitives, building on the functionapproximators and dynamicalsystems subpackages.

- dmpbbo/bbo: implementation of several evolutionary algorithms for the stochastic optimization of black-box cost functions.

- dmpbbo/bbo_of_dmps: examples and helper functions for applying black-box optimization to the optimization of DMP parameters.
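To make the role of the dynamical systems more concrete, here is a minimal NumPy sketch of two of the systems named above, integrated with Euler steps. It does not use the dmpbbo API: the function names `integrate_exponential` and `integrate_spring_damper` and all parameter values are assumptions chosen for illustration only.

```python
import numpy as np


def integrate_exponential(x0, x_goal, alpha, tau, dt, n_steps):
    """Euler integration of tau * xd = -alpha * (x - x_goal)."""
    xs = np.empty(n_steps)
    x = x0
    for i in range(n_steps):
        xs[i] = x
        xd = -alpha * (x - x_goal) / tau
        x = x + dt * xd
    return xs


def integrate_spring_damper(y0, y_goal, spring_k, damping_c, mass, tau, dt, n_steps):
    """Euler integration of the first-order form
    tau * yd = z,  mass * tau * zd = -spring_k * (y - y_goal) - damping_c * z.
    """
    ys = np.empty(n_steps)
    y, z = y0, 0.0  # start at rest
    for i in range(n_steps):
        ys[i] = y
        zd = (-spring_k * (y - y_goal) - damping_c * z) / (mass * tau)
        yd = z / tau
        y = y + dt * yd
        z = z + dt * zd
    return ys


# Hypothetical parameter values, for illustration only
dt, tau, n_steps = 0.001, 1.0, 3000
phase = integrate_exponential(1.0, 0.0, alpha=6.0, tau=tau, dt=dt, n_steps=n_steps)

# Critical damping: c = 2 * sqrt(m * k)
k, m = 100.0, 1.0
pos = integrate_spring_damper(0.0, 1.0, k, 2.0 * np.sqrt(m * k), m, tau, dt, n_steps)
```

In this sketch the exponential system decays from 1 towards 0, which is the typical shape of a phase variable, and the critically damped spring-damper system converges to its goal.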

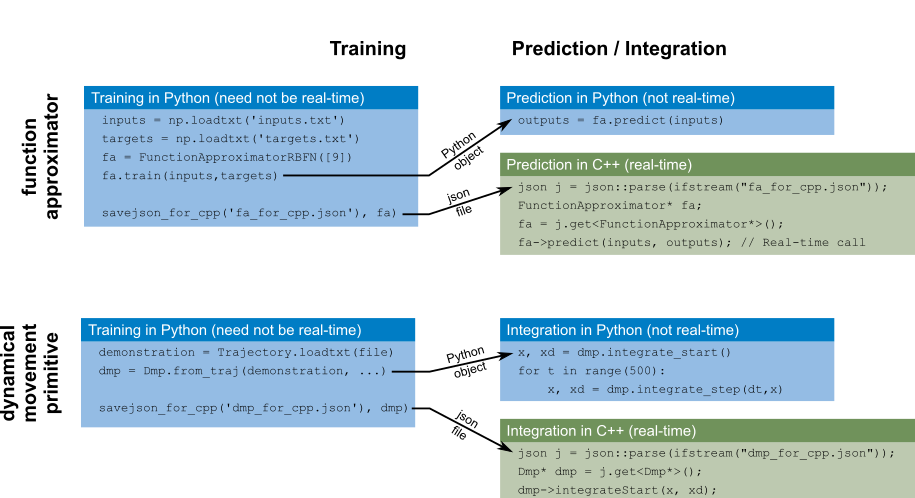

The function approximators are trained with input and target data, and a DMP is trained with a demonstrated trajectory. These trained models can be saved in the JSON format, and then be read by the C++ code in src/ (with nlohmann::json). The DMP integration functions that are called inside the control loop are all real-time, in the sense that they do not dynamically allocate memory and are not computationally intensive (mainly the multiplication of small matrices). The design pattern behind dmpbbo is thus "Train in Python. Execute in C++.", as illustrated in the image above.
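As an illustration of what training with input and target data means, the following NumPy sketch fits a radial basis function network to one-dimensional data with linear least squares. It is not the dmpbbo implementation: the helper names `rbf_activations`, `train_rbfn`, and `predict_rbfn`, the placement of the centers, and the choice of width are assumptions made for this example.

```python
import numpy as np


def rbf_activations(inputs, centers, width):
    """Gaussian basis function activations, shape (n_samples, n_basis)."""
    diff = inputs[:, np.newaxis] - centers[np.newaxis, :]
    return np.exp(-0.5 * (diff / width) ** 2)


def train_rbfn(inputs, targets, n_basis=10):
    """Fit the weights of an RBFN to (inputs, targets) by linear least squares."""
    # Assumption: centers equally spaced over the input range
    centers = np.linspace(inputs.min(), inputs.max(), n_basis)
    # Assumption: width equal to the spacing between neighbouring centers
    width = centers[1] - centers[0]
    activations = rbf_activations(inputs, centers, width)
    weights, *_ = np.linalg.lstsq(activations, targets, rcond=None)
    return centers, width, weights


def predict_rbfn(inputs, centers, width, weights):
    """Weighted sum of the basis function activations."""
    return rbf_activations(inputs, centers, width) @ weights


# Training data: one period of a sine wave
inputs = np.linspace(0.0, 1.0, 200)
targets = np.sin(2.0 * np.pi * inputs)

centers, width, weights = train_rbfn(inputs, targets)
predictions = predict_rbfn(inputs, centers, width, weights)
max_abs_error = np.max(np.abs(predictions - targets))
```

After training, the model consists only of `centers`, `width`, and `weights`, which is the kind of small parameter set that is straightforward to serialize and evaluate with a few matrix operations.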

As the optimization algorithm responsible for generating exploratory samples and updating the DMP parameters need not be real-time, requires intermediate visualization for monitoring purposes, and is more easily implemented in a script, the bbo and bbo_of_dmps subpackages have not been implemented in C++.
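The sample-and-update loop described above can be sketched in a few lines of NumPy. The example below is not the dmpbbo bbo API: it is a simple evolution strategy with reward-weighted averaging and exploration decay (no covariance matrix adaptation), and the function names `run_evolution_strategy` and `distance_to_target`, the cost function, and all hyperparameter values are assumptions for illustration.

```python
import numpy as np


def run_evolution_strategy(cost_function, mean_init, sigma_init=1.0, decay=0.9,
                           n_samples=20, n_updates=40, eliteness=10.0, seed=0):
    """Minimize cost_function with reward-weighted averaging and decaying exploration."""
    rng = np.random.default_rng(seed)
    mean = np.array(mean_init, dtype=float)
    sigma = sigma_init
    learning_curve = []
    for _ in range(n_updates):
        learning_curve.append(cost_function(mean))
        # Exploration: sample parameter vectors around the current mean
        samples = mean + sigma * rng.standard_normal((n_samples, mean.size))
        costs = np.array([cost_function(sample) for sample in samples])
        # Map costs to weights: the lower the cost, the higher the weight
        cost_range = costs.max() - costs.min()
        if cost_range < 1e-12:
            weights = np.ones(n_samples)
        else:
            weights = np.exp(-eliteness * (costs - costs.min()) / cost_range)
        weights /= weights.sum()
        # Update: the new mean is the weighted average of the samples
        mean = weights @ samples
        # Exploration decay: shrink the sampling magnitude after each update
        sigma *= decay
    learning_curve.append(cost_function(mean))
    return mean, learning_curve


def distance_to_target(params):
    """Hypothetical black-box cost: squared distance to a fixed target."""
    target = np.array([1.0, -2.0, 0.5])
    return float(np.sum((params - target) ** 2))


best, curve = run_evolution_strategy(distance_to_target, mean_init=np.zeros(3))
```

The optimizer only ever sees the scalar cost of each sample, which is what makes the cost function a black box. When optimizing a DMP, the parameter vector would hold the function approximator parameters and the cost would come from executing the resulting movement.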

To see a concrete example of how the Python and C++ implementations are intended to work together, please see demos/robot/. Here, the optimization is done in Python, but a simulated "robot" executes the DMPs in C++.

In 2014, I decided to write one library that integrates the different research threads on the acquisition and optimization of movement primitives that I had been pursuing since 2009. These threads are listed below. I also wanted to provide a tutorial on dynamical movement primitives for students, along with code to try DMPs out in practice.

- Representation and training of parameterized skills, i.e. motion primitives that adapt their trajectory to task parameters [matsubara11learning], [silva12learning], [stulp13learning].

- Representing and optimizing gain schedules and force profiles as part of a DMP [buchli11learning], [kalakrishnan11learning].

- Showing that evolution strategies outperform reinforcement learning algorithms when optimizing the parameters of a DMP [stulp13robot], [stulp12policy_hal].

- Demonstrating the advantages of using covariance matrix adaptation for policy improvement [stulp12path], [stulp12adaptive], [stulp14simultaneous].

- Using the same unified model for the model parameters of different function approximators [stulp15many]. In fact, coding this library led to this article, rather than vice versa.

If you use this library in the context of experiments for a scientific paper, we would appreciate it if you cited the library as follows:

```bibtex
@article{stulp2019dmpbbo,
  author  = {Freek Stulp and Gennaro Raiola},
  title   = {DmpBbo: A versatile Python/C++ library for Function Approximation, Dynamical Movement Primitives, and Black-Box Optimization},
  journal = {Journal of Open Source Software},
  year    = {2019},
  doi     = {10.21105/joss.01225},
  url     = {https://www.theoj.org/joss-papers/joss.01225/10.21105.joss.01225.pdf}
}
```

- [buchli11learning] Jonas Buchli, Freek Stulp, Evangelos Theodorou, and Stefan Schaal. Learning variable impedance control. International Journal of Robotics Research, 30(7):820-833, 2011.

- [ijspeert02movement] A. J. Ijspeert, J. Nakanishi, and S. Schaal. Movement imitation with nonlinear dynamical systems in humanoid robots. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), 2002.

- [ijspeert13dynamical] A. Ijspeert, J. Nakanishi, P. Pastor, H. Hoffmann, and S. Schaal. Dynamical Movement Primitives: Learning attractor models for motor behaviors. Neural Computation, 25(2):328-373, 2013.

- [kalakrishnan11learning] M. Kalakrishnan, L. Righetti, P. Pastor, and S. Schaal. Learning force control policies for compliant manipulation. In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2011), 2011.

- [kulvicius12joining] Tomas Kulvicius, KeJun Ning, Minija Tamosiunaite, and Florentin Wörgötter. Joining movement sequences: Modified dynamic movement primitives for robotics applications exemplified on handwriting. IEEE Transactions on Robotics, 28(1):145-157, 2012.

- [matsubara11learning] T. Matsubara, S. Hyon, and J. Morimoto. Learning parametric dynamic movement primitives from multiple demonstrations. Neural Networks, 24(5):493-500, 2011.

- [silva12learning] Bruno da Silva, George Konidaris, and Andrew G. Barto. Learning parameterized skills. In John Langford and Joelle Pineau, editors, Proceedings of the 29th International Conference on Machine Learning (ICML-12), ICML '12, pages 1679-1686, New York, NY, USA, July 2012. Omnipress.

- [stulp12adaptive] Freek Stulp. Adaptive exploration for continual reinforcement learning. In International Conference on Intelligent Robots and Systems (IROS), pages 1631-1636, 2012.

- [stulp12path] Freek Stulp and Olivier Sigaud. Path integral policy improvement with covariance matrix adaptation. In Proceedings of the 29th International Conference on Machine Learning (ICML), 2012.

- [stulp12policy_hal] Freek Stulp and Olivier Sigaud. Policy improvement methods: Between black-box optimization and episodic reinforcement learning. hal-00738463, 2012.

- [stulp13learning] Freek Stulp, Gennaro Raiola, Antoine Hoarau, Serena Ivaldi, and Olivier Sigaud. Learning compact parameterized skills with a single regression. In IEEE-RAS International Conference on Humanoid Robots, 2013.

- [stulp13robot] Freek Stulp and Olivier Sigaud. Robot skill learning: From reinforcement learning to evolution strategies. Paladyn, Journal of Behavioral Robotics, 4(1):49-61, September 2013.

- [stulp14simultaneous] Freek Stulp, Laura Herlant, Antoine Hoarau, and Gennaro Raiola. Simultaneous on-line discovery and improvement of robotic skill options. In International Conference on Intelligent Robots and Systems (IROS), 2014.

- [stulp15many] Freek Stulp and Olivier Sigaud. Many regression algorithms, one unified model - a review. Neural Networks, 2015.

Contributions in the form of feedback, code, and bug reports are very welcome:

- If you have found an issue or a bug or have a question about the code, please open a GitHub issue.

- If you want to implement a new feature, please fork the source code, modify, and issue a pull request through the project GitHub page.