This is the source code for our paper MOS: Towards Scaling Out-of-distribution Detection for Large Semantic Space by Rui Huang and Sharon Li. The code is adapted from Google BiT, ODIN, Outlier Exposure, deep Mahalanobis detector, and Robust OOD Detection.

MOS is a group-based OOD detection framework that is effective for large-scale image classification. Our key idea is to decompose the large semantic space into smaller groups with similar concepts, which simplifies the decision boundary and reduces the uncertainty space between in- and out-of-distribution data.
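To make the grouping idea concrete, here is a minimal, framework-free sketch of a group-based OOD score. It assumes each group's classifier has one extra "others" category at index 0 and scores an input by the negated minimum "others" probability across groups, so an input that some group confidently recognizes gets a high (in-distribution) score. The function names `softmax` and `mos_score` and the `others_index` parameter are illustrative only and are not taken from this codebase.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one group's logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mos_score(group_logits: Sequence[Sequence[float]], others_index: int = 0) -> float:
    """Negated minimum 'others' probability over all groups (illustrative sketch).

    Assumption: every group's logit vector contains one 'others' entry at
    `others_index`. Higher scores indicate in-distribution inputs.
    """
    min_others = min(softmax(g)[others_index] for g in group_logits)
    return -min_others


# Group 0 confidently predicts a real class, group 1 says "others".
id_like = [[-2.0, 3.0, 0.5], [4.0, -1.0]]
# Every group puts most of its mass on "others".
ood_like = [[3.0, 0.0, 0.0], [4.0, -1.0]]
print(mos_score(id_like) > mos_score(ood_like))
```

An input would then be flagged as out-of-distribution when its score falls below a threshold chosen on held-out in-distribution data.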

Please download ImageNet-1k and place the training data and validation data in

./dataset/id_data/ILSVRC-2012/train and ./dataset/id_data/ILSVRC-2012/val, respectively.

We have curated 4 OOD datasets from iNaturalist, SUN, Places, and Textures, and removed concepts that overlap with ImageNet-1k.

For iNaturalist, SUN, and Places, we have sampled 10,000 images from the selected concepts for each dataset, which can be downloaded via the following links:

wget http://pages.cs.wisc.edu/~huangrui/imagenet_ood_dataset/iNaturalist.tar.gz

wget http://pages.cs.wisc.edu/~huangrui/imagenet_ood_dataset/SUN.tar.gz

wget http://pages.cs.wisc.edu/~huangrui/imagenet_ood_dataset/Places.tar.gz

For Textures, we use the entire dataset, which can be downloaded from its original website.

Please put all downloaded OOD datasets into ./dataset/ood_data/.
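Before training or testing, it can help to confirm that the data landed where the scripts expect it. The sketch below checks the ImageNet-1k paths given above plus one folder per OOD dataset under ./dataset/ood_data/. The OOD sub-folder names (iNaturalist, SUN, Places, Textures) are assumptions based on the archive and dataset names; adjust them to whatever the extracted archives actually contain. `missing_dirs` is a hypothetical helper, not part of this repository.

```python
from pathlib import Path
from typing import List

# In-distribution paths, taken from this README.
ID_DIRS = [
    "dataset/id_data/ILSVRC-2012/train",
    "dataset/id_data/ILSVRC-2012/val",
]
# Assumed OOD sub-folder names; the real extracted names may differ.
OOD_DIRS = [f"dataset/ood_data/{name}" for name in ("iNaturalist", "SUN", "Places", "Textures")]


def missing_dirs(root: str = ".") -> List[str]:
    """Return every expected dataset directory that does not exist under `root`."""
    base = Path(root)
    return [d for d in ID_DIRS + OOD_DIRS if not (base / d).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs()
    if missing:
        print("Missing dataset directories:")
        for d in missing:
            print("  " + d)
    else:
        print("Dataset layout looks complete.")
```

Run it from the repository root so the relative paths resolve the same way they do for the provided scripts.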

For more details about these OOD datasets, please check out our paper.

Please download the BiT-S pre-trained model families and put them into the folder ./bit_pretrained_models.

The backbone used in our paper for main results is BiT-S-R101x1.

For group-softmax finetuning (MOS), please run:

bash ./scripts/finetune_group_softmax.sh
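As a rough illustration of what group-softmax finetuning optimizes, the sketch below computes a per-sample loss under these assumptions: each group's logits reserve index 0 for an "others" category, the true group's target is the class index shifted by one, every other group's target is "others", and the per-group cross-entropies are summed. The names `log_softmax` and `group_softmax_loss` and the `(group_id, class_id)` label format are hypothetical; the actual training code and group definitions live in the scripts above.

```python
import math
from typing import List, Sequence, Tuple


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one group's logits."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - lse for x in logits]


def group_softmax_loss(group_logits: Sequence[Sequence[float]], label: Tuple[int, int]) -> float:
    """Summed cross-entropy over groups for one sample (illustrative sketch).

    Assumptions: index 0 of every group is 'others'; `label` is
    (group_id, class_id) with class_id counted within that group.
    """
    group_id, class_id = label
    loss = 0.0
    for k, logits in enumerate(group_logits):
        # The true group predicts the real class; all other groups predict 'others'.
        target = class_id + 1 if k == group_id else 0
        loss -= log_softmax(logits)[target]
    return loss


# Uniform logits: loss is log(3) + log(2) for a 3-way group and a 2-way group.
print(group_softmax_loss([[0.0, 0.0, 0.0], [0.0, 0.0]], (0, 1)))
```

Because every group is trained to say "others" for classes it does not own, each group learns an explicit reject option, which is what a group-based OOD score can then exploit at test time.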

For flat-softmax finetuning (baselines), please run:

bash ./scripts/finetune_flat_softmax.sh

To reproduce our MOS results, please run:

bash ./scripts/test_mos.sh iNaturalist(/SUN/Places/Textures)

To reproduce baseline approaches, please run:

bash ./scripts/test_baselines.sh MSP(/ODIN/Energy/Mahalanobis/KL_Div) iNaturalist(/SUN/Places/Textures)
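For reference, two of these baselines reduce to simple functions of a flat classifier's logits: MSP uses the maximum softmax probability, and the energy-based score uses the temperature-scaled log-sum-exp of the logits. The sketch below shows both in plain Python, with higher values meaning more in-distribution; the function names are illustrative, not this repository's API. ODIN, Mahalanobis, and KL_Div need additional inputs (input perturbation, intermediate features, or class-conditional statistics) and are not covered here.

```python
import math
from typing import Sequence


def msp_score(logits: Sequence[float]) -> float:
    """Maximum softmax probability of a flat classifier."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    return max(exps) / sum(exps)


def energy_score(logits: Sequence[float], temperature: float = 1.0) -> float:
    """Negative free energy: T * logsumexp(logits / T), computed stably."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    return temperature * (m + math.log(sum(math.exp(s - m) for s in scaled)))


print(msp_score([2.0, 0.0, 0.0]), energy_score([2.0, 0.0, 0.0]))
```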

Note: before testing Mahalanobis, make sure you have tuned and saved its hyperparameters by running:

bash ./scripts/tune_mahalanobis.sh
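To sweep every method and OOD dataset in one go, a small driver can enumerate the commands shown above, running the Mahalanobis tuning step first. This is a hypothetical convenience wrapper, not part of the repository; it only calls the three scripts with the argument order given in this README and defaults to a dry run that just prints the commands.

```python
import subprocess
from typing import List

DATASETS = ["iNaturalist", "SUN", "Places", "Textures"]
BASELINES = ["MSP", "ODIN", "Energy", "Mahalanobis", "KL_Div"]


def build_commands() -> List[List[str]]:
    """Tune Mahalanobis first, then test MOS and every baseline on every dataset."""
    cmds = [["bash", "./scripts/tune_mahalanobis.sh"]]
    for ds in DATASETS:
        cmds.append(["bash", "./scripts/test_mos.sh", ds])
    for method in BASELINES:
        for ds in DATASETS:
            cmds.append(["bash", "./scripts/test_baselines.sh", method, ds])
    return cmds


def run_all(dry_run: bool = True) -> None:
    """Print each command; execute it too (stopping on failure) unless dry_run."""
    for cmd in build_commands():
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_all(dry_run=True)
```

Set `dry_run=False` from the repository root once the datasets and models are in place.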

To make it easier to reproduce the results reported in our paper, we also provide our group-softmax and flat-softmax finetuned models, which can be downloaded via the following links:

wget http://pages.cs.wisc.edu/~huangrui/finetuned_model/BiT-S-R101x1-group-finetune.pth.tar

wget http://pages.cs.wisc.edu/~huangrui/finetuned_model/BiT-S-R101x1-flat-finetune.pth.tar

After downloading the provided models, you can skip Step 3 and set --model_path in the Step 4 scripts accordingly.

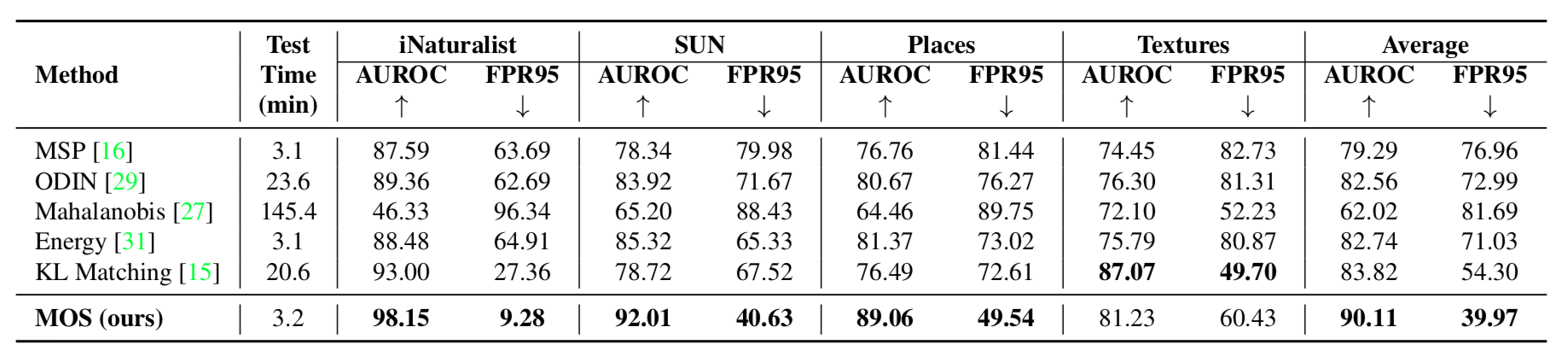

MOS achieves state-of-the-art performance averaged over the 4 OOD datasets.

If you use our codebase or OOD datasets, please cite our work:

@inproceedings{huang2021mos,
  title={MOS: Towards Scaling Out-of-distribution Detection for Large Semantic Space},
  author={Huang, Rui and Li, Yixuan},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2021}
}