- Implement Curious Replay (https://arxiv.org/abs/2306.15934)

- Layer normalization: We use Flax's default epsilon (`flax.linen.LayerNorm`, `epsilon=1e-6`).

- GRU: We use Flax's default GRU cell (`flax.linen.GRUCell`).

- Adam: We use Optax's default epsilon for Adam (`optax.adam`, `eps=1e-8`).

- Policy optimizer: We employ a single optimizer for the policy.

- DynamicScale: We use Flax's default `DynamicScale` (`flax.training.dynamic_scale`) for FP16 training.
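The replay-prioritization item above (arXiv 2306.15934) can be sketched as follows. This is a minimal sketch under stated assumptions, not code from this repository: it assumes the priority combines a count term that decays each time an item is replayed with a term that grows with the world-model loss last recorded for that item, p_i = c * beta^(v_i) + (|L_i| + eps)^alpha. The class name `CuriousReplayPriorities` is hypothetical, and the constants `c`, `beta`, `alpha`, `eps` are placeholders, not values confirmed from the paper or this repo.

```python
import numpy as np


class CuriousReplayPriorities:
    """Hypothetical helper: sampling priorities for a fixed-capacity replay buffer.

    Assumed priority (placeholder constants, not confirmed values):
        p_i = c * beta**v_i + (|L_i| + eps)**alpha
    v_i: how often item i has been replayed; L_i: its latest world-model loss.
    """

    def __init__(self, capacity, c=1e4, beta=0.7, alpha=0.7, eps=0.01, seed=0):
        self.counts = np.zeros(capacity, dtype=np.int64)    # v_i
        self.losses = np.zeros(capacity, dtype=np.float64)  # L_i
        self.size = 0
        self.c, self.beta, self.alpha, self.eps = c, beta, alpha, eps
        self.rng = np.random.default_rng(seed)

    def add(self, index):
        # A new (or overwritten) item starts unvisited with zero recorded loss,
        # so the count term gives it the largest priority.
        self.counts[index] = 0
        self.losses[index] = 0.0
        self.size = max(self.size, index + 1)

    def priorities(self):
        v = self.counts[: self.size]
        loss = self.losses[: self.size]
        return self.c * self.beta ** v + (np.abs(loss) + self.eps) ** self.alpha

    def sample(self, batch_size):
        # Sample indices proportionally to their priorities (with replacement).
        p = self.priorities()
        return self.rng.choice(self.size, size=batch_size, p=p / p.sum())

    def update(self, idx, losses):
        # After a world-model step: bump visit counts (duplicates counted correctly)
        # and store the new per-item losses.
        np.add.at(self.counts, idx, 1)
        self.losses[idx] = losses
```

The count term favors data that has not been replayed yet, while the loss term keeps revisiting transitions the world model still predicts poorly.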

```bash
pip install --upgrade setuptools==65.5.0 wheel==0.38.4
pip install --upgrade "jax[cuda12_pip]" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html
pip install -r requirements.txt
pip install -e .
```
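After installation, it can help to confirm that the CUDA-enabled `jax` wheel is actually in use before launching training. A small check, relying only on the public `jax.default_backend()` and `jax.devices()` calls; the helper name `describe_devices` is hypothetical:

```python
import jax


def describe_devices():
    """Return (default backend, device platforms) as seen by JAX."""
    return jax.default_backend(), [d.platform for d in jax.devices()]


if __name__ == "__main__":
    backend, platforms = describe_devices()
    print(backend, platforms)
    if backend != "gpu":
        print("Warning: JAX is not using a GPU; check the CUDA-enabled jax install.")
```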

```bash
python train.py --exp_name [exp_name] --seed [seed]
```
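The score below is aggregated over 10 seeds. One way to launch those runs sequentially is sketched here; it uses only the `--exp_name` and `--seed` flags shown above. The helper names and the `_seed{n}` suffix on the experiment name are hypothetical choices to keep runs apart, not conventions of this repository.

```python
import subprocess
import sys


def seed_commands(exp_name, seeds):
    """Build one train.py invocation per seed (hypothetical naming scheme)."""
    return [
        [sys.executable, "train.py", "--exp_name", f"{exp_name}_seed{seed}", "--seed", str(seed)]
        for seed in seeds
    ]


def run_all(exp_name, seeds=range(10)):
    # Runs sequentially and stops at the first failing run (check=True).
    for cmd in seed_commands(exp_name, seeds):
        subprocess.run(cmd, check=True)
```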

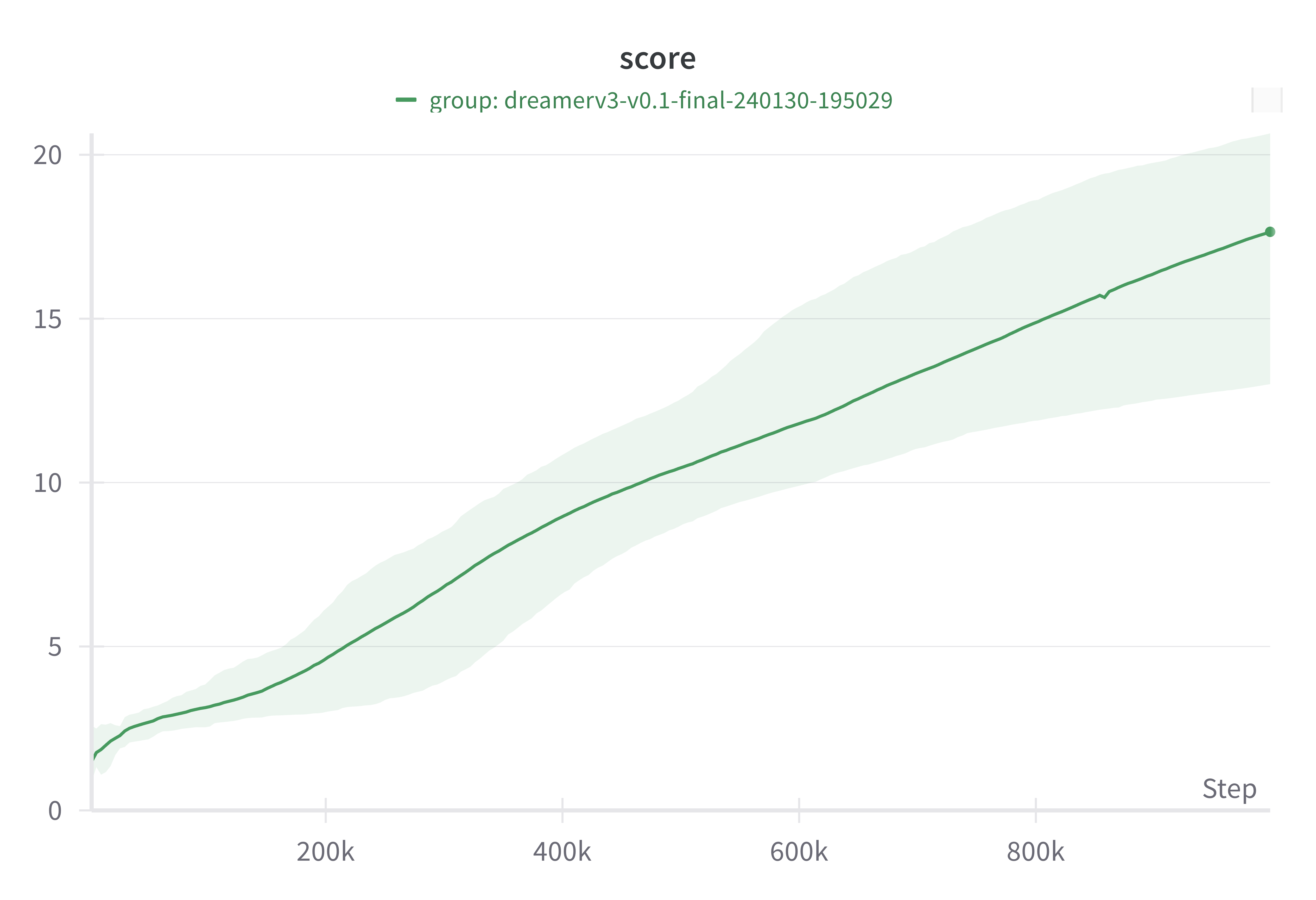

score (10 seeds): 17.65 ± 2.29
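The README does not say whether ± denotes the standard deviation or the standard error across seeds. The sketch below computes both from a list of per-seed scores, assuming the sample standard deviation (n − 1 denominator); `summarize` is a hypothetical helper, and no per-seed scores from this repository are reproduced.

```python
import math
import statistics


def summarize(scores):
    """Return (mean, sample std, standard error of the mean) over seeds."""
    mean = statistics.fmean(scores)
    std = statistics.stdev(scores)  # sample standard deviation (n - 1)
    sem = std / math.sqrt(len(scores))
    return mean, std, sem
```

Usage: `mean, std, sem = summarize(per_seed_scores)`, then format with `f"{mean:.2f} ± {std:.2f}"` (or `sem`, depending on which spread is intended).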