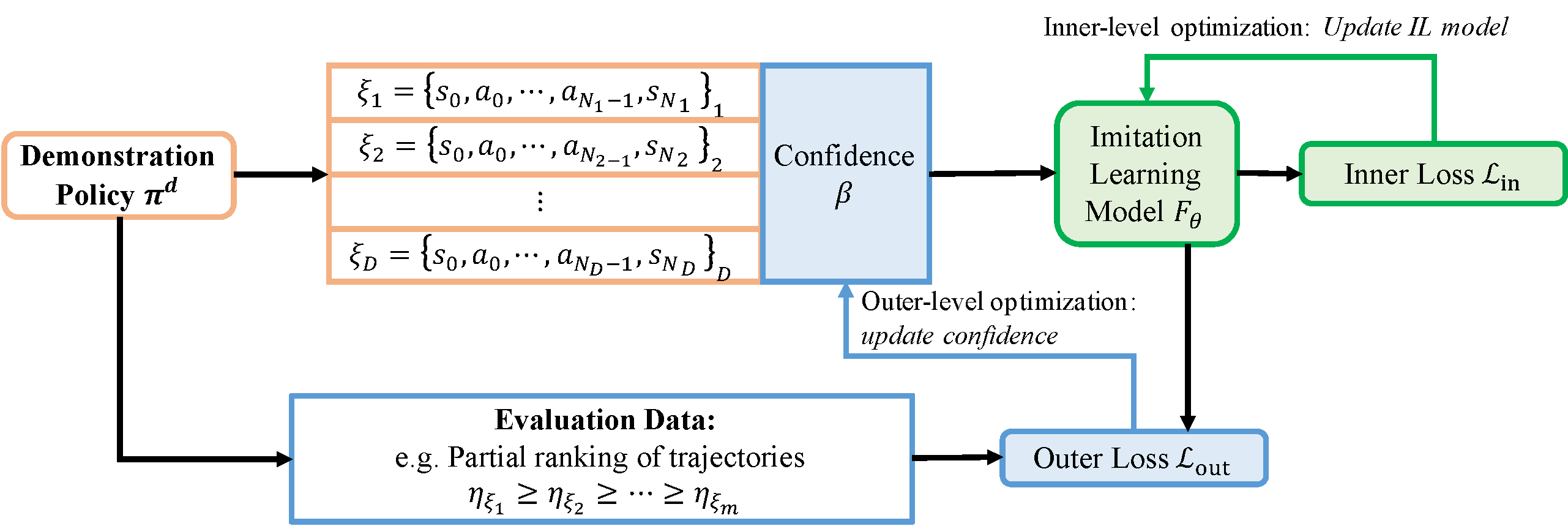

Official implementation of the NeurIPS 2021 paper: S Zhang, Z Cao, D Sadigh, Y Sui: "Confidence-Aware Imitation Learning from Demonstrations with Varying Optimality".

You need to have the following libraries with Python3:

- MuJoCo (This should be installed first)

- Matplotlib

- NumPy

- Gym

- tqdm

- PyTorch

- TensorBoard

- mujoco_py

- SciPy

- pandas

To install requirements:

conda create -n cail python=3.6

conda activate cail

pip install -r requirements.txt

Note that you need to install MuJoCo on your device first. Please follow the instructions in mujoco-py for help.
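As a quick sanity check after installation, the sketch below reports which of the listed libraries cannot be found in the active environment. The import names are the usual ones for these packages (for example `torch` for PyTorch); the helper `find_missing` is hypothetical and not part of this repo.

```python
import importlib.util

# Usual import names for the libraries listed above. MuJoCo itself is a native
# library, so it is only checked indirectly through the mujoco_py binding.
REQUIRED_MODULES = [
    "matplotlib", "numpy", "gym", "tqdm", "torch",
    "tensorboard", "mujoco_py", "scipy", "pandas",
]


def find_missing(modules):
    """Return the module names that cannot be located in this environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    print("missing:", ", ".join(missing) if missing else "none")
```

This only checks that each package can be located; it does not verify that MuJoCo is licensed or that `mujoco_py` compiles correctly.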

Install CAIL: First clone this repo, then run

pip install -e .

To train an expert or an imitation learning algorithm that reproduces the results, you can use the hyper-parameters we provide in ./hyperparam.yaml.

To train experts, we provide algorithms including Proximal Policy Optimization (PPO) [5] and Soft Actor-Critic (SAC) [6]. We also provide some well-trained experts' weights in the ./weights folder. Each file name is the number of steps the expert was trained for.

For example, you can use the following line to train an expert:

python train_expert.py --env-id Ant-v2 --algo ppo --num-steps 10000000 --eval-interval 100000 --rollout 10000 --seed 0

After training, the logs will be saved in the folder ./logs/<env_id>/<algo>/seed<seed>-<training time>/. The folder contains summary, which tracks the training process, and model, which stores the saved models.
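To locate the most recent run for a given seed, something like the following sketch could be used. It relies only on the folder layout described above; the format of `<training time>` is not assumed, so runs are ordered by modification time. The helper `latest_run_dir` is hypothetical and not part of this repo.

```python
from pathlib import Path


def latest_run_dir(log_root, env_id, algo, seed):
    """Return the most recently modified run folder for one seed, or None."""
    base = Path(log_root) / env_id / algo
    if not base.is_dir():
        return None
    # Run folders are named seed<seed>-<training time>; the hyphen keeps
    # seed 1 from matching seed 10.
    runs = [p for p in base.glob(f"seed{seed}-*") if p.is_dir()]
    return max(runs, key=lambda p: p.stat().st_mtime) if runs else None


if __name__ == "__main__":
    run = latest_run_dir("./logs", "Ant-v2", "ppo", 0)
    if run is None:
        print("no run found")
    else:
        print("summary:", run / "summary")
        print("model:", run / "model")
```

The printed `summary` path is the one to pass to TensorBoard.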

First, create a folder for demonstrations:

mkdir buffers

You need to collect demonstrations using the trained experts' weights. --algo specifies the expert's algorithm. For example, if you trained an expert with PPO, you need to use --algo ppo while collecting demonstrations. The experts' weights we provide are trained by PPO for Ant-v2, and SAC for Reacher-v2. --std specifies the standard deviation of the Gaussian noise added to the action, and --p-rand specifies the probability that the expert acts randomly. We set std to
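To make the roles of --std and --p-rand concrete, here is a minimal sketch of one way such a perturbation could work. It is an illustration under stated assumptions, not the code in collect_demo.py: the function name `perturb_action`, the uniform random action, and the action bounds of [-1, 1] are all assumptions.

```python
import random


def perturb_action(action, std, p_rand, low=-1.0, high=1.0, rng=random):
    """Return a perturbed copy of an expert action (illustrative only)."""
    if rng.random() < p_rand:
        # With probability p_rand, ignore the expert and act uniformly at random.
        return [rng.uniform(low, high) for _ in action]
    # Otherwise add zero-mean Gaussian noise and clip to the assumed bounds.
    return [min(high, max(low, a + rng.gauss(0.0, std))) for a in action]


if __name__ == "__main__":
    rng = random.Random(0)
    expert_action = [0.5, -0.2, 0.1]
    print(perturb_action(expert_action, std=0.01, p_rand=0.0, rng=rng))
```

Larger values of either parameter produce less optimal demonstrations, which is how a set of policies with varying optimality can be derived from a single expert.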

In our experiments, the demonstrations contain 200 trajectories in total, generated by 5 different policies. So the buffer size per policy is 40000 for Ant-v2 and 2000 for Reacher-v2. Use the following line to collect demonstrations:

python collect_demo.py --weight "./weights/Ant-v2/10000000.pkl" --env-id Ant-v2 --buffer-size 40000 --algo ppo --std 0.01 --p-rand 0.0 --seed 0

After collecting, the demonstrations will be saved in the ./buffers/Raw/<env_id> folder. The file names of the demonstrations indicate the sizes and the mean rewards.
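The per-policy buffer sizes follow from simple arithmetic, sketched below. The episode lengths (1000 steps for Ant-v2, 50 for Reacher-v2) are assumed from the default Gym time limits and are not stated in this README; the calculation also assumes every trajectory runs for the full episode.

```python
TOTAL_TRAJECTORIES = 200
NUM_POLICIES = 5

# Assumption: default Gym time limits, with no early termination.
EPISODE_STEPS = {"Ant-v2": 1000, "Reacher-v2": 50}


def buffer_size_per_policy(env_id):
    """Number of transitions to collect from each policy."""
    trajectories_per_policy = TOTAL_TRAJECTORIES // NUM_POLICIES
    return trajectories_per_policy * EPISODE_STEPS[env_id]


if __name__ == "__main__":
    for env_id in EPISODE_STEPS:
        print(env_id, buffer_size_per_policy(env_id))
```

Under these assumptions, each policy contributes 40 trajectories, which gives the 40000 and 2000 values passed to --buffer-size.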

You can create a mixture of demonstrations using the demonstrations collected in the previous step. Use the following command to mix the demonstrations.

python mix_demo.py --env-id Ant-v2 --folder "./buffers/Raw/Ant-v2"

After mixing, the mixed demonstrations will be saved in the ./buffers/<env_id> folder. The file names of the demonstrations indicate the sizes and the mean rewards of their mixed parts.
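Since the file names encode the buffer size and the mean rewards, they can be read back programmatically. The sketch below assumes the pattern `size<N>_reward_<r1>_<r2>_....pth`, inferred from the Ant-v2 buffer name used in the training commands in this README; other files may follow a different pattern. The helper `parse_buffer_name` is hypothetical.

```python
import re
from pathlib import Path


def parse_buffer_name(path):
    """Split a mixed-buffer file name into (size, list of mean rewards)."""
    stem = Path(path).stem  # drops the trailing ".pth"
    match = re.fullmatch(r"size(\d+)_reward_(.+)", stem)
    if match is None:
        raise ValueError(f"unexpected buffer file name: {path}")
    size = int(match.group(1))
    rewards = [float(r) for r in match.group(2).split("_")]
    return size, rewards


if __name__ == "__main__":
    name = "./buffers/Ant-v2/size200000_reward_4787.23_3739.91_2947.49_2115.17_789.13.pth"
    size, rewards = parse_buffer_name(name)
    print(size, rewards)
```

For that file, the five reward values correspond to the five policies whose demonstrations were mixed.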

The mixed buffers we used in our experiments can be downloaded here. For Linux users, we recommend using the following commands:

pip install gdown

cd buffers

gdown https://drive.google.com/uc?id=1oohGvjlEqhwZwof5vHnwr_mx2D0AjlzU

tar -zxvf buffers.tar.gz

rm buffers.tar.gz

cd ..

You can train IL using the following line:

python train_imitation.py --algo cail --env-id Ant-v2 --buffer "./buffers/Ant-v2/size200000_reward_4787.23_3739.91_2947.49_2115.17_789.13.pth" --rollout-length 10000 --num-steps 20000000 --eval-interval 40000 --label 0.05 --lr-conf 0.1 --pre-train 5000000 --seed 0

Note that --label is the ratio of trajectories labeled with trajectory rewards. In our paper, 5% of the trajectories are labeled.

Here we also provide the implementation of the baselines: Generative Adversarial Imitation Learning (GAIL) [1], Adversarial Inverse Reinforcement Learning (AIRL) [2], Two-step Importance Weighting Imitation Learning (2IWIL) [3], Generative Adversarial Imitation Learning with Imperfect demonstration and Confidence (IC-GAIL) [3], Trajectory-ranked Reward Extrapolation (T-REX) [4], Disturbance-based Reward Extrapolation (D-REX) [7], and Self-Supervised Reward Regression (SSRR) [8]. In our paper, we train each algorithm 10 times using random seeds 0 to 9.

For all the baselines except SSRR, the training command is similar to the command above. SSRR needs a trained AIRL model first, so we provide some pre-trained AIRL models in ./weights/SSRR_base. The AIRL models are trained with the same command as above, using different random seeds. Use the following line to train SSRR:

python train_imitation.py --env-id Ant-v2 --buffer "./buffers/Ant-v2/size200000_reward_4787.23_3739.91_2947.49_2115.17_789.13.pth" --rollout-length 10000 --num-steps 20000000 --eval-interval 40000 --label 0.05 --algo ssrr --airl-actor "./weights/SSRR_base/Ant-v2/seed0/actor.pkl" --airl-disc "./weights/SSRR_base/Ant-v2/seed0/disc.pkl" --seed 0

If you want to evaluate an agent, use the following line:

python eval_policy.py --weight "./weights/Ant-v2/10000000.pkl" --env-id Ant-v2 --algo ppo --episodes 5 --render --seed 0 --delay 0.02

We use TensorBoard to track the training process. Use the following line to monitor training:

tensorboard --logdir='<path-to-summary>'

We provide the pre-trained models in the folder ./weights/Pretrained. We train each algorithm in each environment 10 times in our paper, and we provide all the converged models.

We provide the following command to make gifs of trained agents:

python make_gif.py --env-id Reacher-v2 --weight "./weights/Pretrained/Reacher-v2/cail/actor_0.pkl" --algo cail --episodes 5

This will generate ./figs/<env_id>/actor_0.gif, which shows the performance of the agent.

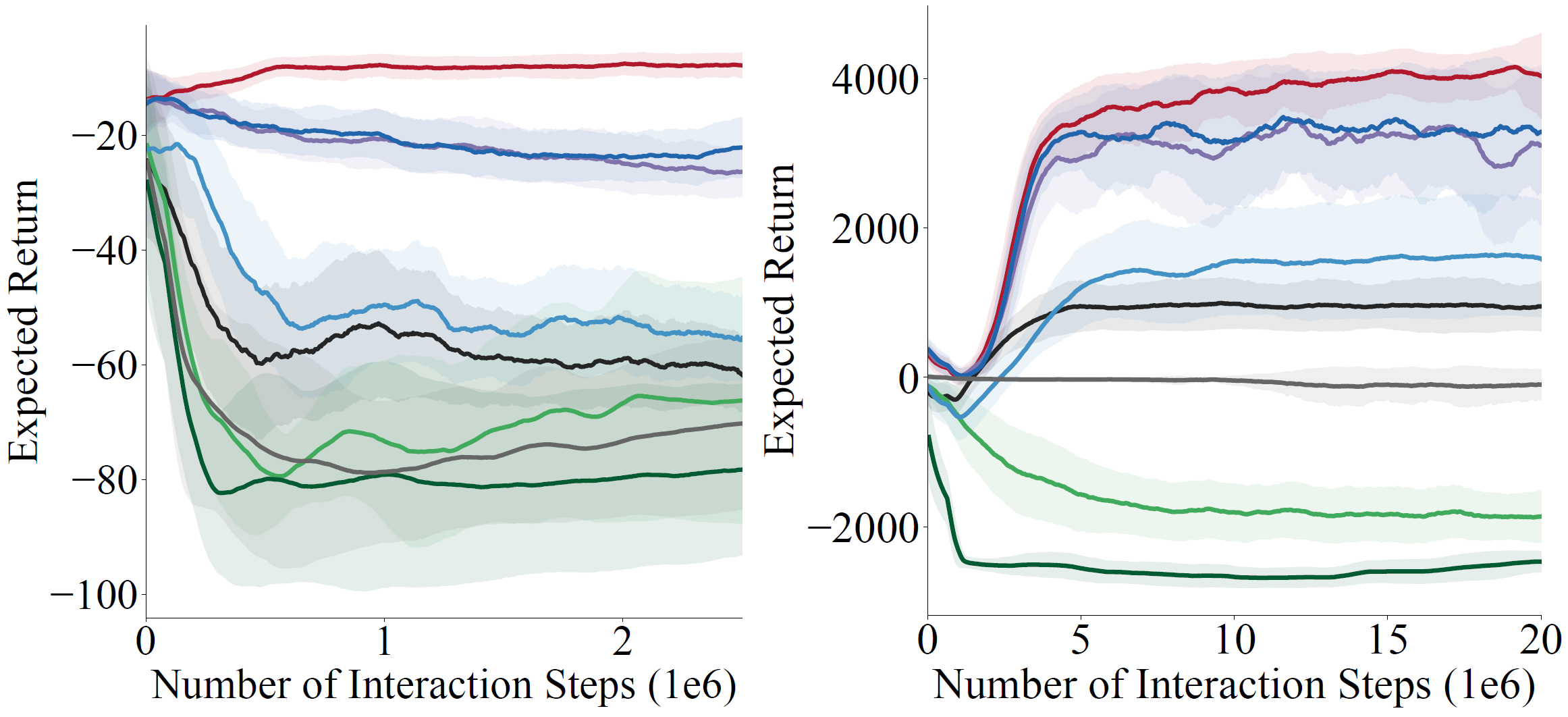

To reproduce the results shown in our paper, you can use our pre-collected demonstrations and train all the algorithms using the aforementioned commands with the provided hyper-parameters. Note that we use random seeds 0 to 9 to train each algorithm 10 times. The results should be similar to Figure 2.
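One way to run the 10 seeds is to generate the training command once per seed. The sketch below builds the CAIL command for Ant-v2 from the flags shown earlier in this README and only prints the commands; the helper `commands_for_seeds` is hypothetical and not part of this repo.

```python
import shlex

# Flags copied from the CAIL training command in this README.
BASE_ARGS = [
    "python", "train_imitation.py",
    "--algo", "cail",
    "--env-id", "Ant-v2",
    "--buffer",
    "./buffers/Ant-v2/size200000_reward_4787.23_3739.91_2947.49_2115.17_789.13.pth",
    "--rollout-length", "10000",
    "--num-steps", "20000000",
    "--eval-interval", "40000",
    "--label", "0.05",
    "--lr-conf", "0.1",
    "--pre-train", "5000000",
]


def commands_for_seeds(seeds):
    """Return one argument list per seed, each ending with --seed <seed>."""
    return [BASE_ARGS + ["--seed", str(seed)] for seed in seeds]


if __name__ == "__main__":
    for cmd in commands_for_seeds(range(10)):
        # Print a shell-safe command line; pass cmd to subprocess.run to execute.
        print(" ".join(shlex.quote(arg) for arg in cmd))
```

Printing rather than executing keeps the sketch safe to run anywhere; each printed line can be pasted into a shell or submitted to a job scheduler.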

The numerical results of the converged policies are listed in the table below:

| Method | Reacher-v2 | Ant-v2 |
|---|---|---|
| CAIL | | |
| 2IWIL | | |
| IC-GAIL | | |
| AIRL | | |
| GAIL | | |
| T-REX | | |
| D-REX | | |
| SSRR | | |

We also show the gifs of the demonstrations and trained agents:

- Reacher-v2

- Ant-v2

@inproceedings{zhang2021cail,
  title={Confidence-Aware Imitation Learning from Demonstrations with Varying Optimality},
  author={Zhang, Songyuan and Cao, Zhangjie and Sadigh, Dorsa and Sui, Yanan},
  booktitle={Conference on Neural Information Processing Systems (NeurIPS)},
  year={2021}
}

The author would like to thank Tong Xiao for the inspiring discussions and her help in implementing the code and the experiments.

The code structure is based on the repo gail-airl-ppo.pytorch.

[1] Ho, J. and Ermon, S. Generative adversarial imitation learning. In Advances in neural information processing systems, pp. 4565–4573, 2016.

[2] Fu, J., Luo, K., and Levine, S. Learning robust rewards with adversarial inverse reinforcement learning. In International Conference on Learning Representations, 2018.

[3] Wu, Y.-H., Charoenphakdee, N., Bao, H., Tangkaratt, V., and Sugiyama, M. Imitation learning from imperfect demonstration. In International Conference on Machine Learning, pp. 6818–6827, 2019.

[4] Brown, D., Goo, W., Nagarajan, P., and Niekum, S. Extrapolating beyond suboptimal demonstrations via inverse reinforcement learning from observations. In International Conference on Machine Learning, pp. 783–792. PMLR, 2019.

[5] Schulman, J., Wolski, F., Dhariwal, P., Radford, A., and Klimov, O. Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347, 2017.

[6] Haarnoja, T., Zhou, A., Abbeel, P., and Levine, S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In International Conference on Machine Learning, pp. 1861–1870. PMLR, 2018.

[7] Brown, D. S., Goo, W., and Niekum, S. Better-than-demonstrator imitation learning via automatically-ranked demonstrations. In Conference on Robot Learning, pp. 330–359. PMLR, 2020.

[8] Chen, L., Paleja, R., and Gombolay, M. Learning from suboptimal demonstration via self-supervised reward regression. In Conference on Robot Learning. PMLR, 2020.