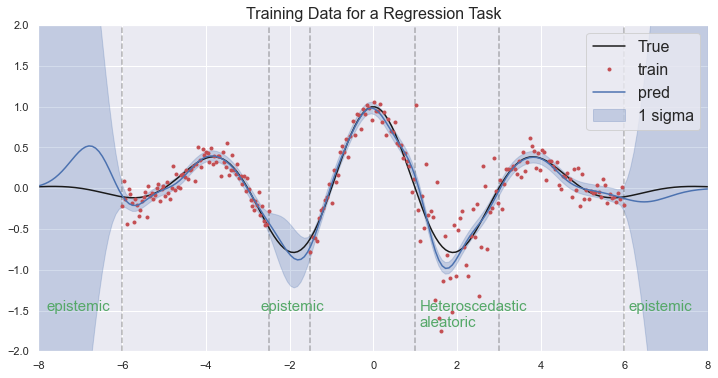

This repository compares the uncertainty estimates of Gaussian process regression with those of methods developed for deep learning, such as ensembles and the dropout-based Bayesian approach. It runs 1D regression simulations in which the training data carries both epistemic and aleatoric uncertainty. You can start with `Comparison.ipynb` in the `notebooks` folder.
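As a rough illustration of what such a 1D training set can look like, here is a minimal NumPy sketch. It is not the data generator used in the notebooks: the function name `make_toy_data`, the sine target, the gap around zero, and the noise schedule are all assumptions chosen for illustration. The gap in the inputs produces epistemic uncertainty (no data to learn from), while the input-dependent noise produces aleatoric uncertainty.

```python
import numpy as np

def make_toy_data(n=200, seed=0):
    """Hypothetical 1D data set: a sine curve with input-dependent noise and a gap."""
    rng = np.random.default_rng(seed)
    # Sample inputs on [-3, 3], then drop the interval [-0.5, 0.5].
    # A model sees no data there, so its epistemic uncertainty should be high.
    x = rng.uniform(-3.0, 3.0, size=n)
    x = x[np.abs(x) > 0.5]
    # Noise standard deviation grows with |x| (heteroscedastic, aleatoric).
    noise_std = 0.05 + 0.15 * np.abs(x)
    y = np.sin(2.0 * x) + rng.normal(0.0, noise_std)
    return x, y, noise_std

x_train, y_train, noise_std = make_toy_data()
```

A well-calibrated model should report wide predictive intervals inside the gap and intervals that widen with `|x|` where data is present.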

- Training data

- GP regression

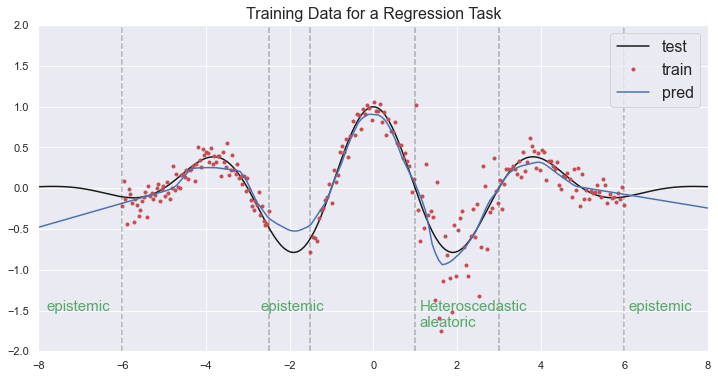

- Neural net

- Ensemble

- Bayesian neural net with dropout

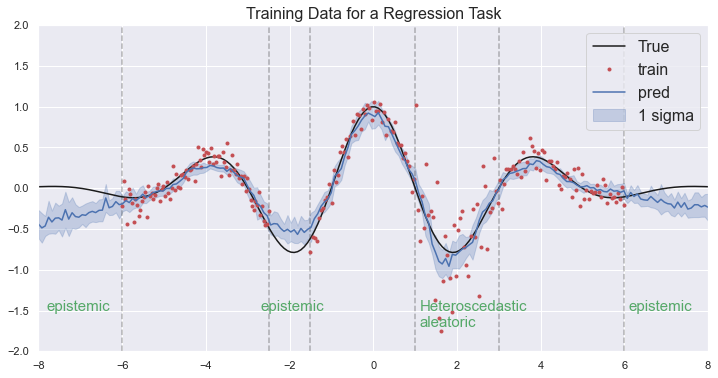

- Single density network

- Density + dropout

- Density + ensemble

- Mixture density network

- Bayesian NN based on dropout: https://arxiv.org/pdf/1703.04977.pdf

- Deep ensemble density network: https://papers.nips.cc/paper/2017/file/9ef2ed4b7fd2c810847ffa5fa85bce38-Paper.pdf

- Mixture density network: https://arxiv.org/pdf/1709.02249.pdf

- Gaussian process regression: code from Sungjoon Choi (https://github.com/sjchoi86)

- Gaussian process for heteroscedastic data: https://cs.stanford.edu/~quocle/LeSmoCan05.pdf
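For the density-based variants listed above (density + dropout, density + ensemble), each ensemble member or stochastic forward pass predicts a mean and a variance per input. A common way to split the resulting predictive variance, following the dropout and deep-ensemble papers cited above, is to take the average predicted variance as the aleatoric part and the variance of the predicted means as the epistemic part. The sketch below assumes the predictions are already collected in arrays of shape `(n_members, n_points)`; the helper name `decompose_uncertainty` is hypothetical and is not taken from this repository.

```python
import numpy as np

def decompose_uncertainty(means, variances):
    """Split predictive variance into aleatoric and epistemic parts.

    means, variances: arrays of shape (n_members, n_points), one row per
    ensemble member or per stochastic (dropout) forward pass.
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    pred_mean = means.mean(axis=0)       # averaged prediction
    aleatoric = variances.mean(axis=0)   # average predicted noise variance
    epistemic = means.var(axis=0)        # disagreement between members
    return pred_mean, aleatoric, epistemic

# Two members, two test points; the members disagree only at the first point.
mean, alea, epis = decompose_uncertainty(
    means=[[0.0, 1.0], [2.0, 1.0]],
    variances=[[1.0, 1.0], [3.0, 1.0]],
)
```

The total predictive variance under this decomposition is `alea + epis`.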