Tingting Zheng, Kui Jiang, Hongxun Yao

CVPR 2024

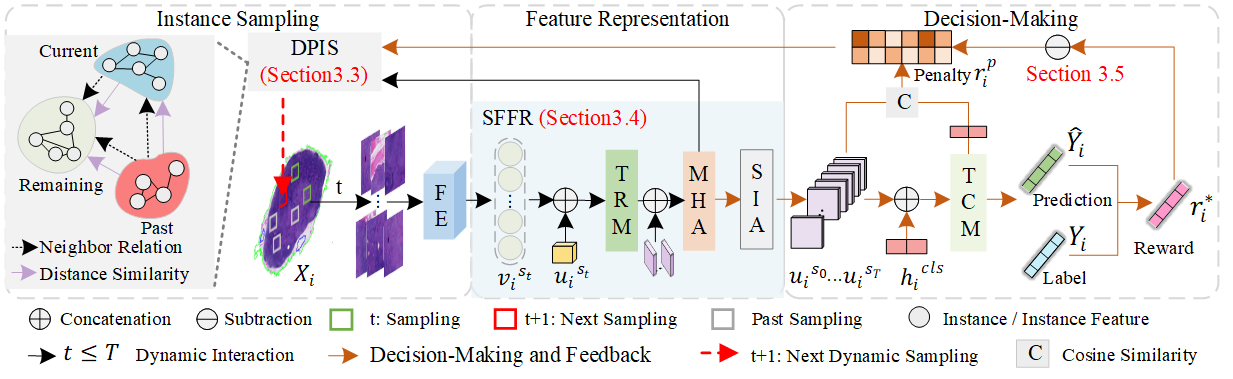

Abstract: Multi-Instance Learning (MIL) has shown impressive performance for histopathology whole slide image (WSI) analysis using bags or pseudo-bags. It involves instance sampling, feature representation, and decision-making. However, existing MIL-based technologies suffer from one or more of the following problems: 1) high storage requirements and intensive pre-processing for numerous instances (sampling); 2) potential over-fitting when bag labels are predicted from limited knowledge (feature representation); 3) sensitivity of model robustness and generalizability to pseudo-bag counts and prior biases (decision-making). In clinical diagnostics, previously sampled instances can facilitate the final WSI analysis, but this idea is barely explored in prior technologies. To break free of these limitations, we integrate dynamic instance sampling and reinforcement learning into a unified framework to improve instance selection and feature aggregation, forming a novel Dynamic Policy Instance Selection (DPIS) scheme for better and more credible decision-making. Specifically, a feature distance measurement and a reward function are employed to boost continuous instance sampling. To alleviate over-fitting, we explore the latent global relations among instances for more robust and discriminative feature representation, while establishing reward and punishment mechanisms to correct biases in pseudo-bags using contrastive learning. These strategies form the final Dynamic Policy-Driven Adaptive Multi-Instance Learning (PAMIL) method for WSI tasks. Extensive experiments reveal that our PAMIL method outperforms the state-of-the-art by 3.8% on CAMELYON16 and 4.4% on TCGA lung cancer datasets.

- [2024/02/29] The repo is created

- [2024/07/27] Update preprocessing features

- Linux (Tested on Ubuntu 18.04)

- NVIDIA GPU (Tested on 3090)

torch

torchvision

numpy

h5py

scipy

scikit-learn

pandas

nystrom_attention

admin_torch

We use the same data preprocessing configuration as DSMIL.
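To confirm that the packages listed above are available before training, a small check like the following may help. It is only a sketch: the import names in `REQUIRED_MODULES` are assumptions derived from the package list (for example, scikit-learn is imported as `sklearn`), and `find_missing` is a hypothetical helper, not part of this repo.

```python
from importlib.util import find_spec

# Import names assumed for the packages listed above; scikit-learn is
# imported as `sklearn`. Adjust the list if your environment differs.
REQUIRED_MODULES = [
    "torch", "torchvision", "numpy", "h5py", "scipy",
    "sklearn", "pandas", "nystrom_attention", "admin_torch",
]


def find_missing(modules):
    """Return the module names that cannot be located in this environment."""
    missing = []
    for name in modules:
        try:
            if find_spec(name) is None:
                missing.append(name)
        except (ImportError, ValueError):
            # find_spec can raise for broken or partially initialised modules.
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    print("Missing packages:", ", ".join(missing) if missing else "none")
```

The check only locates the modules; it does not import them, so it says nothing about CUDA availability or version compatibility.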

We use CLAM to preprocess CAMELYON16 at 20x. For your own dataset, you can modify and run create_patches_fp_Lung.py and extract_features_fp_LungRes18Imag.py.

The data used for training, validation and testing are expected to be organized as follows:

    DATA_ROOT_DIR/
    ├── DATASET_1_DATA_DIR/
    │   ├── pt_files
    │   │   ├── slide_1.pt
    │   │   ├── slide_2.pt
    │   │   └── ...
    │   └── h5_files
    │       ├── slide_1.h5
    │       ├── slide_2.h5
    │       └── ...
    ├── DATASET_2_DATA_DIR/
    │   ├── pt_files
    │   │   ├── slide_a.pt
    │   │   ├── slide_b.pt
    │   │   └── ...
    │   └── h5_files
    │       ├── slide_i.h5
    │       ├── slide_ii.h5
    │       └── ...
    └── ...

We use the preprocessed features from MMIL. For more details, refer to DSMIL and CLAM. Thanks to their wonderful work!
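As a quick sanity check of the directory layout described above, the sketch below walks `DATA_ROOT_DIR` and compares the slide IDs found under `pt_files` and `h5_files` for each dataset. It assumes that every slide is expected to have both a `.pt` and a `.h5` file with the same base name, which the layout suggests but does not state. `check_dataset_dir` and `check_data_root` are hypothetical helpers, not part of this repo.

```python
from pathlib import Path


def _slide_ids(folder, suffix):
    """Collect file stems with the given suffix; empty set if folder is absent."""
    folder = Path(folder)
    if not folder.is_dir():
        return set()
    return {p.stem for p in folder.iterdir() if p.suffix == suffix}


def check_dataset_dir(dataset_dir):
    """Compare slide IDs in pt_files/ and h5_files/ of one dataset directory.

    Returns (matched, only_pt, only_h5) as sorted lists of slide IDs.
    """
    dataset_dir = Path(dataset_dir)
    pt_ids = _slide_ids(dataset_dir / "pt_files", ".pt")
    h5_ids = _slide_ids(dataset_dir / "h5_files", ".h5")
    return sorted(pt_ids & h5_ids), sorted(pt_ids - h5_ids), sorted(h5_ids - pt_ids)


def check_data_root(data_root):
    """Run check_dataset_dir on every sub-directory of the data root."""
    return {
        d.name: check_dataset_dir(d)
        for d in sorted(Path(data_root).iterdir())
        if d.is_dir()
    }


if __name__ == "__main__":
    # "DATA_ROOT_DIR" is the placeholder name from the layout above.
    root = Path("DATA_ROOT_DIR")
    if root.is_dir():
        for name, (matched, only_pt, only_h5) in check_data_root(root).items():
            print(f"{name}: {len(matched)} matched, "
                  f"{len(only_pt)} only in pt_files, {len(only_h5)} only in h5_files")
```

The script only inspects file names; it does not open the feature files, so it runs without torch or h5py installed.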

    @inproceedings{zheng2024dynamic,
      title={Dynamic Policy-Driven Adaptive Multi-Instance Learning for Whole Slide Image Classification},
      author={Zheng, Tingting and Jiang, Kui and Yao, Hongxun},
      booktitle={CVPR},
      year={2024}
    }