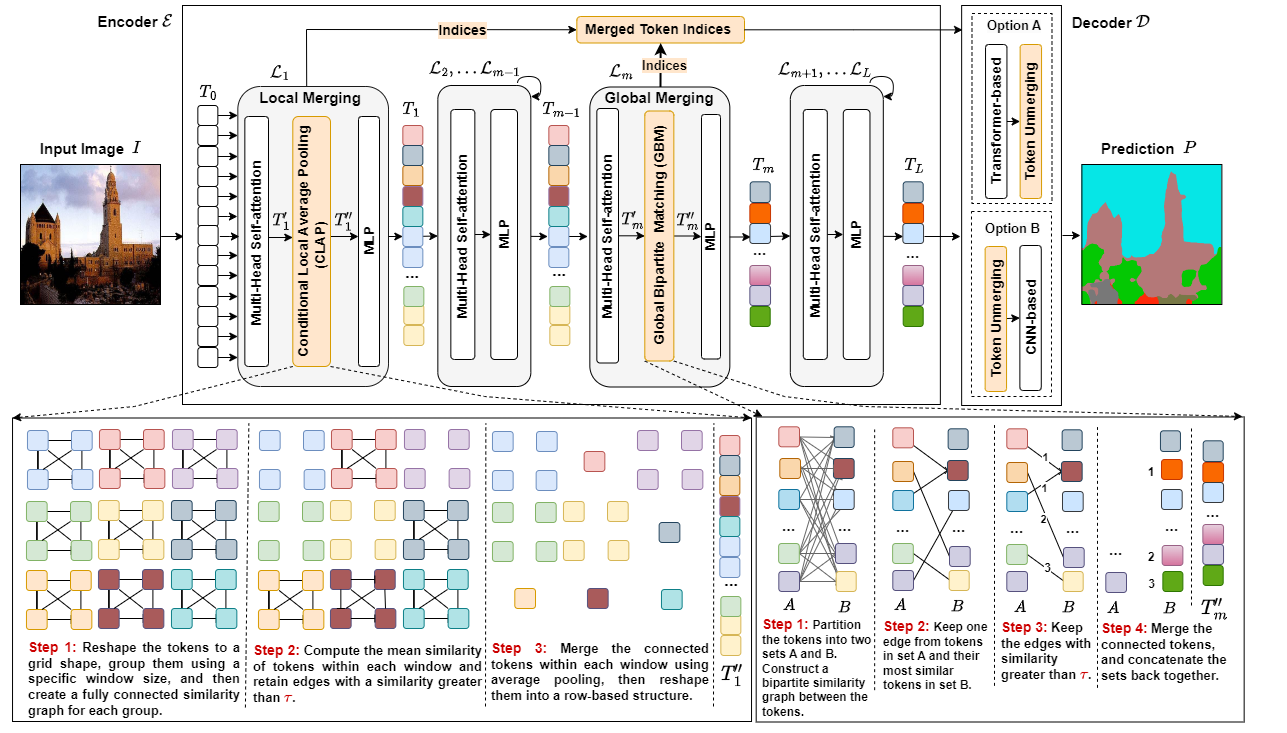

ALGM: Adaptive Local-then-Global Token Merging for Efficient Semantic Segmentation with Plain Vision Transformers (CVPR 2024)

[Project page] [Paper]

"ALGM: Adaptive Local-then-Global Token Merging for Efficient Semantic Segmentation with Plain Vision Transformers", by Narges Norouzi, Svetlana Orlova, Daan de Geus, and Gijs Dubbelman, CVPR 2024.

In this repository, Adaptive Local-then-Global Token Merging (ALGM) is applied to Segmenter: Transformer for Semantic Segmentation by Robin Strudel*, Ricardo Garcia*, Ivan Laptev and Cordelia Schmid, ICCV 2021.

The provided code extends the original code for Segmenter.

Installation follows that of the original Segmenter code. First, define OS environment variables pointing to your checkpoint and dataset directories by adding the following to your .bashrc:

export DATASET=/path/to/dataset/dir

1. Clone the repo

git clone https://github.com/tue-mps/algm-segmenter.git

cd algm-segmenter

2. Setting up the virtualenv

Install PyTorch (>= 1.13.1, required for scatter_reduce).

# create environment

conda create -n algm python==3.10

conda activate algm

# install pytorch with cuda

conda install pytorch==1.13.1 torchvision==0.14.1 torchaudio==0.13.1 pytorch-cuda=11.7 -c pytorch -c nvidia

# install required packages

pip install -r requirements.txt

3. Setting up the ALGM package

cd algm

# set up the ALGM package

python setup.py build develop

4. Prepare the datasets

To download ADE20K, use the following command:

python -m segm.scripts.prepare_ade20k $DATASET

Similar preparation scripts also exist for Cityscapes and Pascal-Context.

To train Segmenter + ALGM using ViT-S/16 with specific configurations on the ADE20K dataset, use the command provided below. The model is configured to apply ALGM at layers 1 and 5, with a merging window size of 2x2 and a threshold of 0.88.

python -m segm.train --log-dir runs/vit_small_layers_1_5_T_0.88/ \

--dataset ade20k \

--backbone vit_small_patch16_384 \

--decoder mask_transformer \

--patch-type algm \

--selected-layers 1 5 \

--merging-window-size 2 2 \

--threshold 0.88

For more examples of training commands, see TRAINING.
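
For intuition about what `--merging-window-size 2 2` and `--threshold 0.88` control, here is a minimal pure-Python sketch of window-based token merging. It assumes that the tokens of a window are averaged into a single token when their mean pairwise cosine similarity reaches the threshold. That criterion is an illustrative assumption, not the repository's implementation, and the names `cosine` and `merge_windows` are hypothetical.

```python
import math
from itertools import combinations


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def merge_windows(grid, window=(2, 2), threshold=0.88):
    """Merge each window whose mean pairwise similarity is >= threshold.

    grid: H x W nested list of token vectors (H and W divisible by the window).
    Returns a flat list of tokens: one averaged token per merged window and
    the original tokens for windows that were left unmerged.
    (Assumed merge rule, for illustration only.)
    """
    wh, ww = window
    out = []
    for r in range(0, len(grid), wh):
        for c in range(0, len(grid[0]), ww):
            tokens = [grid[r + i][c + j] for i in range(wh) for j in range(ww)]
            sims = [cosine(a, b) for a, b in combinations(tokens, 2)]
            if sims and sum(sims) / len(sims) >= threshold:
                dim = len(tokens[0])
                out.append([sum(t[d] for t in tokens) / len(tokens) for d in range(dim)])
            else:
                out.extend(tokens)
    return out


# Toy 2x4 grid: the left 2x2 window is uniform, the right one is mixed.
grid = [
    [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0], [0.0, 1.0]],
    [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 0.0]],
]
merged = merge_windows(grid)
print(len(merged))  # 5: one merged token plus four unmerged tokens
```

In this toy case, 8 tokens are reduced to 5. A higher threshold makes merging more conservative, and a lower one merges more windows.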

To perform an evaluation using Segmenter + ALGM on ADE20K, execute the following command. Ensure you replace path_to_checkpoint.pth with the actual path to your checkpoint file. Additionally, make sure the variant.yaml file is located in the same directory as your checkpoint file. For additional examples covering all available backbones and datasets, refer to the jobs directory.

Note: Please use the specific values for the selected-layers and threshold options for each backbone. You can find these values in the variant.yaml file.

# single-scale baseline evaluation:

python -m segm.eval.miou path_to_checkpoint.pth \

ade20k \

--singlescale \

--patch-type pure

# Explanation:

# --singlescale: Evaluates the model using a single scale of input images.

# --patch-type pure: Uses the standard patch processing without any modifications.

# single-scale baseline + ALGM evaluation:

python -m segm.eval.miou path_to_checkpoint.pth \

ade20k \

--singlescale \

--patch-type algm \

--selected-layers 1 5 \

--merging-window-size 2 2 \

--threshold 0.88

# Explanation:

# --patch-type algm: Applies the ALGM patch type.

# --selected-layers 1 5: Specifies the layers of the network at which ALGM is applied, in this case layers 1 and 5.

# --merging-window-size 2 2: Sets the size of the merging window for the ALGM algorithm, here 2x2.

# --threshold 0.88: Sets the confidence threshold for merging patches in ALGM, where 0.88 stands for 88% confidence.
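
Because the flag values differ per backbone, it can be convenient to assemble the evaluation command programmatically. The sketch below builds the argument list for the ALGM evaluation using only the flags that appear in this README. The helper name `build_eval_command` is hypothetical and not part of the repository.

```python
import shlex


def build_eval_command(checkpoint, dataset, layers, window, threshold):
    """Return the argv list for a single-scale Segmenter + ALGM evaluation."""
    return [
        "python", "-m", "segm.eval.miou", checkpoint, dataset,
        "--singlescale",
        "--patch-type", "algm",
        "--selected-layers", *map(str, layers),
        "--merging-window-size", *map(str, window),
        "--threshold", str(threshold),
    ]


cmd = build_eval_command("path_to_checkpoint.pth", "ade20k", [1, 5], (2, 2), 0.88)
print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)`, with the layer and threshold values taken from the variant.yaml of the checkpoint being evaluated.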

To calculate the Im/sec and GFLOPs, execute the following commands. Replace path_to_checkpoint_directory with the actual path to the directory containing your checkpoint file, and make sure the variant.yaml file is located in that same directory.

Note: Please use the specific values for the selected-layers and threshold options for each backbone. You can find these values in the variant.yaml file.

# Im/sec

python -m segm.speedtest --model-dir path_to_checkpoint_directory \

--dataset ade20k \

--batch-size 1 \

--patch-type algm \

--selected-layers 1 5 \

--merging-window-size 2 2 \

--threshold 0.88

# GFLOPs

python -m segm.flops --model-dir path_to_checkpoint_directory \

--dataset ade20k \

--batch-size 1 \

--patch-type algm \

--selected-layers 1 5 \

--merging-window-size 2 2 \

--threshold 0.88
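
For intuition about what an Im/sec figure represents, the following is a minimal, framework-agnostic timing sketch: run a few untimed warm-up iterations, time a fixed number of forward calls, and divide the number of processed images by the elapsed time. This is an assumption about the general measurement procedure, not the code in `segm.speedtest`. The names `measure_throughput` and `fake_model` are hypothetical, and a real GPU benchmark would additionally need device synchronization before reading the clock.

```python
import time


def measure_throughput(forward, batch_size, n_warmup=5, n_iters=20):
    """Return images per second for a callable that processes one batch."""
    for _ in range(n_warmup):  # warm-up iterations are not timed
        forward()
    start = time.perf_counter()
    for _ in range(n_iters):
        forward()
    elapsed = time.perf_counter() - start
    return batch_size * n_iters / elapsed


def fake_model():
    """Stand-in for a model forward pass (hypothetical workload)."""
    return sum(i * i for i in range(10_000))


ims_per_sec = measure_throughput(fake_model, batch_size=1)
print(f"{ims_per_sec:.1f} im/sec")
```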

Below, we provide the results for different network settings and datasets.

Segmenter models with ViT backbone:

ADE20K:

| Backbone | Crop size | mIoU | Im/sec (BS=32) | GFLOPs | Download | |

|---|---|---|---|---|---|---|

| ViT-Ti/16 | 512x512 | 38.1 | 287 | 12.8 | model | config |

| ViT-Ti/16 + ALGM | 512x512 | 38.9 | 388 | 8.4 | model | config |

| ViT-S/16 | 512x512 | 45.3 | 134 | 38.6 | model | config |

| ViT-S/16 + ALGM | 512x512 | 46.4 | 192 | 26.3 | model | config |

| ViT-B/16 | 512x512 | 48.5 | 51 | 130 | model | config |

| ViT-B/16 + ALGM | 512x512 | 49.4 | 73 | 91 | model | config |

| ViT-L/16 | 640x640 | 51.8 | 10 | 672 | model | config |

| ViT-L/16 + ALGM | 640x640 | 52.7 | 16 | 438 | model | config |

Pascal-Context:

| Backbone | Crop size | mIoU | Im/sec (BS=32) | GFLOPs | Download | |

|---|---|---|---|---|---|---|

| ViT-S/16 | 480x480 | 53.0 | 172 | 32.1 | model | config |

| ViT-S/16 + ALGM | 480x480 | 53.2 | 217 | 24.6 | model | config |

Cityscapes:

| Backbone | Crop size | mIoU | Im/sec (BS=32) | GFLOPs | Download | |

|---|---|---|---|---|---|---|

| ViT-S/16 | 768x768 | 76.5 | 41 | 116 | model | config |

| ViT-S/16 + ALGM | 768x768 | 76.9 | 65 | 76 | model | config |
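
The relative gains implied by a pair of rows can be computed directly. As a worked example, the snippet below takes the ViT-S/16 rows with the 512x512 crop size (values copied from the first table) and reports the throughput increase, the GFLOPs reduction, and the mIoU difference. `relative_change` is a hypothetical helper.

```python
def relative_change(baseline, new):
    """Signed relative change of `new` with respect to `baseline`, in percent."""
    return 100.0 * (new - baseline) / baseline


# ViT-S/16 vs. ViT-S/16 + ALGM, 512x512 crop (values from the first table).
speedup = relative_change(134, 192)   # Im/sec
flops = relative_change(38.6, 26.3)   # GFLOPs
miou_gain = 46.4 - 45.3               # absolute mIoU difference

print(f"throughput: {speedup:+.1f}%")  # throughput: +43.3%
print(f"GFLOPs: {flops:+.1f}%")        # GFLOPs: -31.9%
print(f"mIoU: {miou_gain:+.1f}")       # mIoU: +1.1
```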

- [x] Training and Inference code

- [x] Flops and Speedtest code

- [ ] ViT-Large checkpoints for Cityscapes and Pascal-Context datasets

- [ ] COCO-Stuff dataset support

- [ ] Code for merging visualization

@inproceedings{norouzi2024algm,

title={{ALGM: Adaptive Local-then-Global Token Merging for Efficient Semantic Segmentation with Plain Vision Transformers}},

author={Norouzi, Narges and Orlova, Svetlana and {de Geus}, Daan and Dubbelman, Gijs},

booktitle={IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2024}

}

This code uses the ToMe repository for implementing the global merging module and extends the official Segmenter code. The Vision Transformer code in the original repository is based on the timm library, and the semantic segmentation training and evaluation pipelines are based on mmsegmentation.