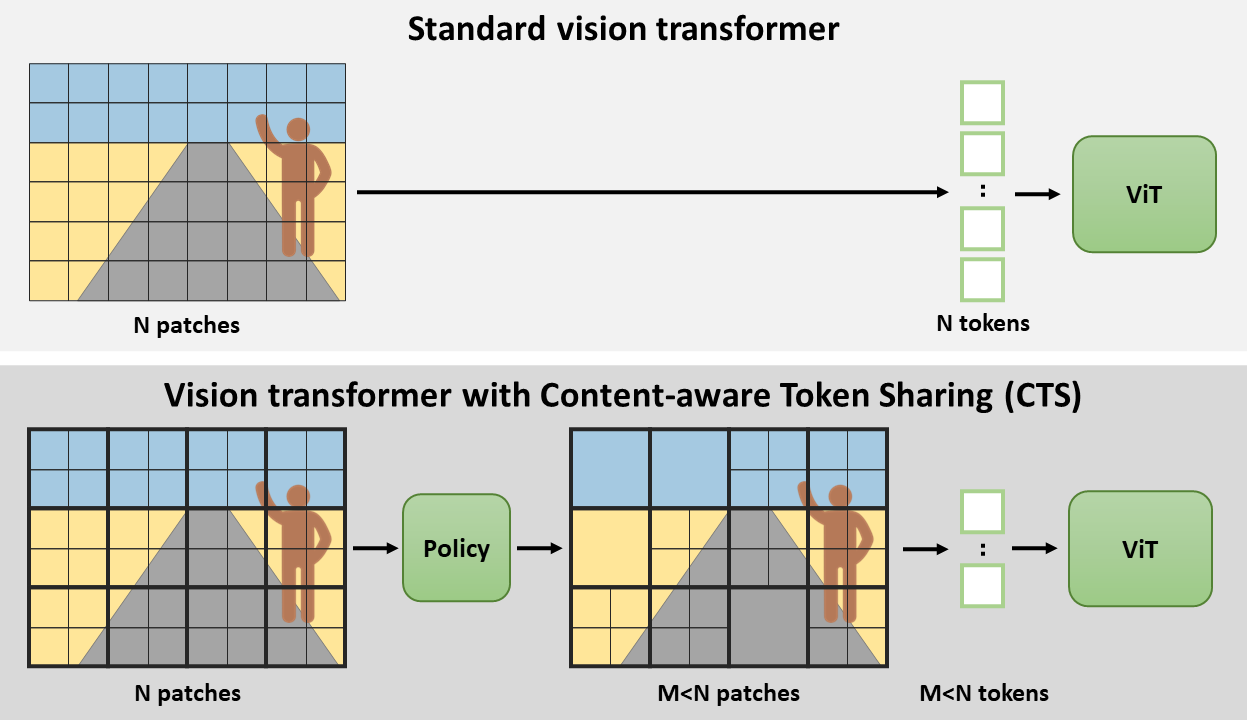

Content-aware Token Sharing for Efficient Semantic Segmentation with Vision Transformers (CVPR 2023)


"Content-aware Token Sharing for Efficient Semantic Segmentation with Vision Transformers", by Chenyang Lu*, Daan de Geus*, and Gijs Dubbelman, CVPR 2023.

In this repository, Content-aware Token Sharing (CTS) is applied to Segmenter, introduced in "Segmenter: Transformer for Semantic Segmentation" by Robin Strudel*, Ricardo Garcia*, Ivan Laptev and Cordelia Schmid, ICCV 2021.

The provided code extends the original code for Segmenter.

Installation follows that of the original Segmenter code. Specifically, define an environment variable pointing to your dataset directory by adding the following line to your `.bashrc`:

```bash
export DATASET=/path/to/dataset/dir
```

Install PyTorch (versions 1.9 to 1.13 should be compatible), then run `pip install .` at the root of this repository.
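A quick sanity check of the environment can catch setup problems before a long training run. The sketch below assumes only what this README states: the `DATASET` variable must point to an existing directory, and PyTorch 1.9 to 1.13 should be compatible. Both helper names are hypothetical and not part of this repository.

```python
import os
import re


def torch_version_supported(version: str) -> bool:
    """True if a version string such as torch.__version__ is within 1.9-1.13."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        return False
    major, minor = int(match.group(1)), int(match.group(2))
    # Compare (major, minor) tuples so that e.g. 1.13 > 1.9 numerically.
    return (1, 9) <= (major, minor) <= (1, 13)


def dataset_dir_from_env() -> str:
    """Return $DATASET, raising if it is unset or not an existing directory."""
    path = os.environ.get("DATASET")
    if not path or not os.path.isdir(path):
        raise RuntimeError("Set DATASET to an existing directory, e.g. in ~/.bashrc")
    return path
```

In practice, you would call `torch_version_supported(torch.__version__)` after `import torch`; local builds carry suffixes such as `+cu117`, which the regex ignores.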

To download ADE20K, use the following command:

```bash
python -m segm.scripts.prepare_ade20k $DATASET
```

Similar preparation scripts also exist for Cityscapes and Pascal-Context.

Before a model with CTS can be trained, the policy network must first be trained on the same segmentation dataset. This repository provides the code and instructions for training the policy network (see the `policynet` directory). The resulting checkpoint is passed to the CTS training command below via `--policynet-ckpt`.
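As we read the paper, the policy network scores each 2x2 group of patches (a superpatch) by how likely it is to contain a single semantic class, and a fixed number of the highest-scoring superpatches then share one token each. The following is a minimal, hypothetical sketch of that grouping step in plain Python; the function names are ours, it assumes a square patch grid with an even side length, and it is not the implementation used in this repository.

```python
from typing import List, Sequence


def superpatch_patch_indices(sp: int, grid_w: int) -> List[int]:
    """Row-major indices of the four patches in 2x2 superpatch `sp`.

    Assumes a square grid that is `grid_w` patches wide, with `grid_w` even.
    """
    sp_per_row = grid_w // 2
    r, c = divmod(sp, sp_per_row)
    top_left = 2 * r * grid_w + 2 * c
    return [top_left, top_left + 1, top_left + grid_w, top_left + grid_w + 1]


def group_tokens(scores: Sequence[float], num_shared: int, grid_w: int) -> List[List[int]]:
    """Hypothetical grouping step: the `num_shared` superpatches with the highest
    policy scores each become one shared token; every other patch keeps its own token."""
    if len(scores) != (grid_w // 2) ** 2:
        raise ValueError("expected one score per 2x2 superpatch")
    if not 0 <= num_shared <= len(scores):
        raise ValueError("num_shared must be between 0 and the number of superpatches")
    # Rank superpatches by score (highest first) and keep the top `num_shared`.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    shared = sorted(ranked[:num_shared])
    groups = [superpatch_patch_indices(sp, grid_w) for sp in shared]
    # Patches outside the selected superpatches remain individual tokens.
    covered = {p for group in groups for p in group}
    groups += [[p] for p in range(grid_w * grid_w) if p not in covered]
    return groups
```

For a 512x512 crop with 16x16 patches (`grid_w=32`, so 256 superpatches) and `num_shared=103`, `group_tokens` returns 715 groups: 103 shared tokens plus 612 single-patch tokens. Fixing the number of shared tokens keeps the sequence length constant across images, which simplifies batching.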

To train Segmenter + CTS with ViT-S/16 and 30% token reduction (612 tokens not shared, 103 tokens shared) on ADE20K, run the command below.

```bash
python -m segm.train --log-dir runs/ade20k_segmenter_small_patch16_cts_612_103 \
  --dataset ade20k \
  --backbone vit_small_patch16_384 \
  --decoder mask_transformer \
  --policy-method policy_net \
  --num-tokens-notshared 612 \
  --num-tokens-shared 103 \
  --policynet-ckpt 'policynet/logdir/policynet_efficientnet_ade20k/model.pth'
```

For more examples of training commands, see TRAINING.
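The values 612 and 103 follow from simple token bookkeeping. The sketch below assumes a square 512x512 training crop (as in the ADE20K results), 16x16 patches, and, per the paper, that each shared token replaces one 2x2 superpatch; `cts_token_counts` is a hypothetical helper, not a function from this repository.

```python
from typing import Tuple


def cts_token_counts(crop_size: int, patch_size: int, num_shared: int) -> Tuple[int, int, float]:
    """Return (tokens not shared, total tokens, token reduction) for CTS.

    Assumes a square crop and that each shared token replaces one 2x2 superpatch.
    """
    grid = crop_size // patch_size          # patches per side, e.g. 512 // 16 = 32
    total_patches = grid * grid             # tokens without CTS, e.g. 1024
    max_shared = (grid // 2) ** 2           # number of 2x2 superpatches, e.g. 256
    if not 0 <= num_shared <= max_shared:
        raise ValueError(f"num_shared must be in [0, {max_shared}]")
    not_shared = total_patches - 4 * num_shared   # patches outside shared superpatches
    total_tokens = not_shared + num_shared        # one token per shared superpatch
    reduction = 1 - total_tokens / total_patches
    return not_shared, total_tokens, reduction


not_shared, total, reduction = cts_token_counts(512, 16, 103)
print(not_shared, total, f"{reduction:.1%}")  # 612 715 30.2%
```

The first returned value corresponds to `--num-tokens-notshared` and `num_shared` to `--num-tokens-shared`: 715 tokens instead of 1024 is the roughly 30% reduction stated above.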

To evaluate Segmenter + CTS on ADE20K, run one of the commands below after replacing `path_to_checkpoint.pth` with the path to your checkpoint. Note: the `config.yaml` file must also be present in the folder that contains `path_to_checkpoint.pth`.
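Since evaluation needs `config.yaml` next to the checkpoint, a small pre-flight check can avoid a failed launch. This is a minimal sketch; `missing_eval_files` is a hypothetical helper, and it only checks for the two files this README mentions.

```python
from pathlib import Path
from typing import List


def missing_eval_files(checkpoint: str) -> List[str]:
    """Return the required evaluation files that do not exist.

    Per this README, config.yaml must sit in the same folder as the checkpoint.
    """
    ckpt = Path(checkpoint)
    config = ckpt.parent / "config.yaml"
    return [str(p) for p in (ckpt, config) if not p.is_file()]
```

For example, `missing_eval_files("path_to_checkpoint.pth")` returns an empty list when both files are present, and otherwise lists the missing paths.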

```bash
# single-scale evaluation:
python -m segm.eval.miou path_to_checkpoint.pth ade20k --singlescale
# multi-scale evaluation:
python -m segm.eval.miou path_to_checkpoint.pth ade20k --multiscale
```

Below, we provide the results for different network settings and datasets.

In the near future, we plan to release the model weights and configuration files for the trained models.

NOTE: We observe mIoU variations of up to ±0.5 points between training runs. In the paper and in the tables below, we report the median over 5 runs, so it may take multiple training runs to reproduce the reported results.
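To compare your own results with the tables in the same way, you can take the median over repeated runs. The sketch below is a hypothetical helper using Python's standard `statistics.median`; it also reports the largest deviation from the median, which can be compared with the ±0.5-point variation mentioned in the note.

```python
from statistics import median
from typing import Sequence, Tuple


def summarize_runs(mious: Sequence[float]) -> Tuple[float, float]:
    """Return (median mIoU, largest absolute deviation from the median)."""
    if not mious:
        raise ValueError("need at least one run")
    med = median(mious)
    spread = max(abs(m - med) for m in mious)
    return med, spread
```

Pass the final mIoU of each of your runs; with five runs, the median is simply the third-highest value.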

Segmenter models with ViT backbone on ADE20K:

| Backbone | CTS token reduction | mIoU (%) | Crop size | Im/sec (BS=32) | Model | Config |
|---|---|---|---|---|---|---|
| ViT-Ti/16 | 0% | 38.1 | 512x512 | 262 | (soon) | (soon) |
| ViT-Ti/16 | 30% | 38.2 | 512x512 | 284 | (soon) | (soon) |
| ViT-S/16 | 0% | 45.0 | 512x512 | 122 | (soon) | (soon) |
| ViT-S/16 | 30% | 45.1 | 512x512 | 162 | (soon) | (soon) |
| ViT-B/16 | 0% | 48.5 | 512x512 | 47 | (soon) | (soon) |
| ViT-B/16 | 30% | 48.7 | 512x512 | 69 | (soon) | (soon) |
| ViT-L/16 | 0% | 51.8 | 640x640 | 9.7 | (soon) | (soon) |
| ViT-L/16 | 30% | 51.6 | 640x640 | 15 | (soon) | (soon) |

Segmenter models with ViT backbone on Pascal-Context:

| Backbone | CTS token reduction | mIoU (%) | Crop size | Im/sec (BS=32) | Model | Config |
|---|---|---|---|---|---|---|
| ViT-S/16 | 0% | 53.0 | 480x480 | 157 | (soon) | (soon) |
| ViT-S/16 | 30% | 52.9 | 480x480 | 203 | (soon) | (soon) |

Segmenter models with ViT backbone on Cityscapes:

| Backbone | CTS token reduction | mIoU (%) | Crop size | Im/sec (BS=32) | Model | Config |
|---|---|---|---|---|---|---|
| ViT-S/16 | 0% | 76.5 | 768x768 | 38 | (soon) | (soon) |
| ViT-S/16 | 44% | 76.5 | 768x768 | 78 | (soon) | (soon) |
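The relative throughput gain of each CTS model over its 0% baseline can be read off the tables as a simple ratio. The sketch below copies the Im/sec values from the tables above; `RESULTS` and `speedup` are illustrative names, and the ratio is only as comparable as the original measurement setup (batch size 32, same hardware).

```python
# Throughput in images/s (batch size 32), copied from the results tables above.
# Each entry: (backbone, crop size, CTS token reduction, without CTS, with CTS)
RESULTS = [
    ("ViT-Ti/16", "512x512", "30%", 262, 284),
    ("ViT-S/16", "512x512", "30%", 122, 162),
    ("ViT-B/16", "512x512", "30%", 47, 69),
    ("ViT-L/16", "640x640", "30%", 9.7, 15),
    ("ViT-S/16", "480x480", "30%", 157, 203),
    ("ViT-S/16", "768x768", "44%", 38, 78),
]


def speedup(baseline: float, with_cts: float) -> float:
    """Relative throughput of the CTS model compared to its 0% baseline."""
    return with_cts / baseline


for backbone, crop, reduction, base, cts in RESULTS:
    print(f"{backbone:<10} {crop:<8} {reduction:>4} reduction: {speedup(base, cts):.2f}x")
```

For example, ViT-S/16 with 512x512 crops runs about 1.33x faster with 30% token reduction, and about 2.05x faster with 768x768 crops and 44% reduction.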

```bibtex
@inproceedings{lu2023cts,
  title={{Content-aware Token Sharing for Efficient Semantic Segmentation with Vision Transformers}},
  author={Lu, Chenyang and {de Geus}, Daan and Dubbelman, Gijs},
  booktitle={IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2023}
}
```

This code extends the official Segmenter code. The Vision Transformer code in the original repository is based on the timm library, and the semantic segmentation training and evaluation pipelines are based on mmsegmentation.