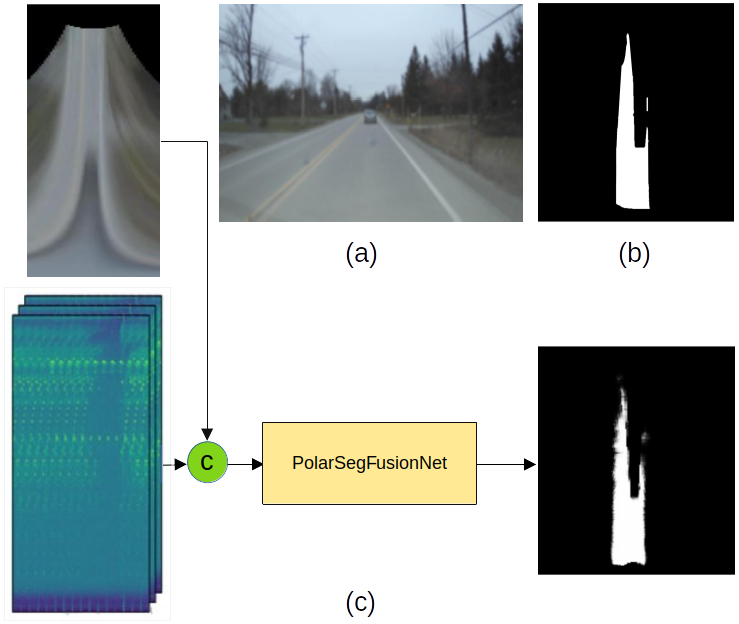

The proposed method, PolarSegFusionNet, is trained using image labels in the bird's-eye-view (BEV) polar domain. This repository implements several deep-learning sensor fusion architectures for 4D radar and camera data. Fusion is performed at different network depths, and an optimal architecture is derived from comparing them. The figure above shows early fusion with PolarSegFusionNet: (a) camera image, (b) ground truth in BEV, (c) 4D radar-camera early fusion with the final prediction. The models are trained and tested on the RADIal dataset. The dataset can be downloaded here.

The following video gives a brief overview of the architectures and their results:

video_summary.mp4

- Clone the repo and set up the conda environment:

$ git clone "this repo"

$ conda create --prefix "your_path" python=3.9 -y

$ conda update -n base -c defaults conda

$ source activate "your_path"

- The following are the packages used:

$ conda install pytorch==1.12.1 torchvision==0.13.1 torchaudio==0.12.1 cudatoolkit=11.3 -c pytorch

$ pip install -U pip

$ pip3 install pkbar

$ pip3 install tensorboard

$ pip3 install pandas

$ pip3 install shapely

To train a model, a JSON configuration file should be set.

The configuration file is provided here: config/config_PolarSegFusionNet.json

There are eight architectures: only_camera, early_fusion, x0_fusion, x1_fusion, x2_fusion, x3_fusion, x4_fusion and after_decoder_fusion. Each one can be trained as a separate model.
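Because exactly one of these eight names is enabled at a time, editing the flags by hand is easy to get wrong. The following is a minimal sketch of a helper that fills in the flags described in this section for a chosen architecture. The function name `select_architecture` is hypothetical and not part of this repository, and the sketch assumes that `SegmentationHead`, `radar_input`, `camera_input`, `fusion` and `architecture` all live in the same JSON object; check config/config_PolarSegFusionNet.json for the actual nesting before using it.

```python
import json

# The eight architecture names listed in this README.
ARCHITECTURES = (
    "only_camera", "early_fusion", "x0_fusion", "x1_fusion",
    "x2_fusion", "x3_fusion", "x4_fusion", "after_decoder_fusion",
)


def select_architecture(cfg: dict, name: str) -> dict:
    """Return a copy of cfg with exactly one architecture flag set to "True".

    Hypothetical helper: assumes all flags sit in the same dict and that
    booleans are stored as the strings "True"/"False", as in this README.
    """
    if name not in ARCHITECTURES:
        raise ValueError(f"unknown architecture {name!r}")

    camera_only = name == "only_camera"
    updated = dict(cfg)  # shallow copy; the input dict is left untouched
    updated["SegmentationHead"] = "True"
    updated["camera_input"] = "True"
    # Camera-only training switches off both the radar input and fusion.
    updated["radar_input"] = "False" if camera_only else "True"
    updated["fusion"] = "False" if camera_only else "True"
    # str(True) == "True", so this yields the string flags used in the config.
    updated["architecture"] = {a: str(a == name) for a in ARCHITECTURES}
    return updated


if __name__ == "__main__":
    cfg = select_architecture({}, "x2_fusion")
    print(json.dumps(cfg, indent=2))
```

The printed object can be compared against the config file before training, which guards against accidentally leaving two architecture flags enabled.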

For example, to train the camera-only architecture, set the following config parameters:

"SegmentationHead": "True",

"radar_input": "False",

"camera_input": "True",

"fusion": "False"

To train any fusion architecture, set all of the following to "True":

"SegmentationHead": "True",

"radar_input": "True",

"camera_input": "True",

"fusion": "True"

It is also necessary to select the architecture to train. With the following setting, the "x2_fusion" architecture is chosen. To choose the "early_fusion" architecture instead, simply set "early_fusion": "True" and all the others to "False", and so on.

"architecture": {

"only_camera": "False",

"early_fusion": "False",

"x0_fusion": "False",

"x1_fusion": "False",

"x2_fusion": "True",

"x3_fusion": "False",

"x4_fusion": "False",

"after_decoder_fusion": "False"}

Now, to train the chosen architecture, please run:

$ python 1-Train.py

To evaluate the model performance, please run:

$ python 2-Evaluation.py

To obtain qualitative results, please run:

$ python 3-Test.py

| Model | mIoU | Model params (in millions) | Weights |
|---|---|---|---|
| Camera Only | 81.61 | 3.28 | Download |
| Early Raw fusion | 86.54 | 3.18 | Download |
| Pre-encoder (x0) block fusion | 87.19 | 3.19 | Download |
| x1 feature block fusion | 87.44 | 3.21 | Download |
| x2 feature block fusion | 87.50 | 3.37 | Download |
| x3 feature block fusion | 87.74 | 3.93 | Download |
| x4 feature block fusion | 87.75 | 4.96 | Download |
| After Decoder fusion | 87.81 | 6.52 | Download |

Table Notes

- Each model was trained ten times, with a different random seed each time, and the mean of the results is reported.

- All checkpoints were trained for 100 epochs.

- Thanks to Eindhoven University of Technology, Elektrobit Automotive GmbH and Transilvania University of Brașov for assistance in successfully achieving this.

- The code base is influenced by Julien Rebut's RADIal paper. Code Paper
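The mIoU values in the results table are means over ten seeded runs. As an illustration only, the sketch below shows one common way to compute a per-sample IoU for binary masks, average it over a set of samples, and then average the resulting scores over seeds. The function names are hypothetical, the per-sample averaging is an assumption, and 2-Evaluation.py may define the metric differently.

```python
from statistics import mean


def binary_iou(pred, target):
    """IoU between two equally shaped 2-D binary masks (nested lists of 0/1)."""
    inter = union = 0
    for p_row, t_row in zip(pred, target):
        for p, t in zip(p_row, t_row):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Two empty masks agree perfectly; treating that case as IoU = 1 is an assumption.
    return inter / union if union else 1.0


def mean_iou(preds, targets):
    """Average the per-sample IoU over a collection of mask pairs."""
    return mean(binary_iou(p, t) for p, t in zip(preds, targets))


def mean_over_seeds(per_seed_scores):
    """Average one score per training run, e.g. ten runs with different seeds."""
    return mean(per_seed_scores)


if __name__ == "__main__":
    # Toy masks, not taken from the RADIal dataset.
    preds = [[[1, 1], [0, 0]], [[1]]]
    targets = [[[1, 0], [0, 0]], [[1]]]
    print(mean_iou(preds, targets))
```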

The PolarSegFusionNet repo is released under the Clear BSD license. Patent status: "patent applied for".