This is a collection of research papers on Embodied Multimodal Large Language Models, also known as Vision-Language-Action (VLA) models.

If you would like to add your paper or update any details (e.g., code URLs, conference information), please submit a pull request or email me. Any other advice is also welcome.

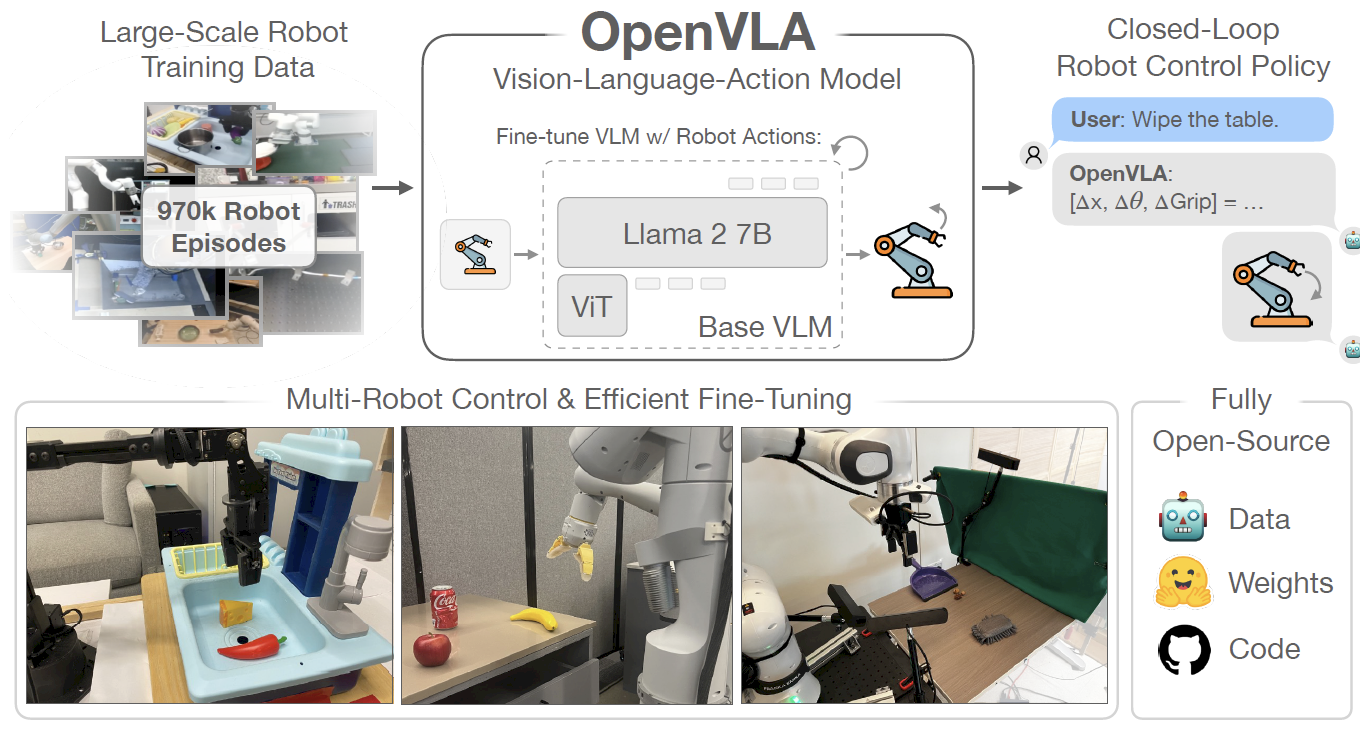

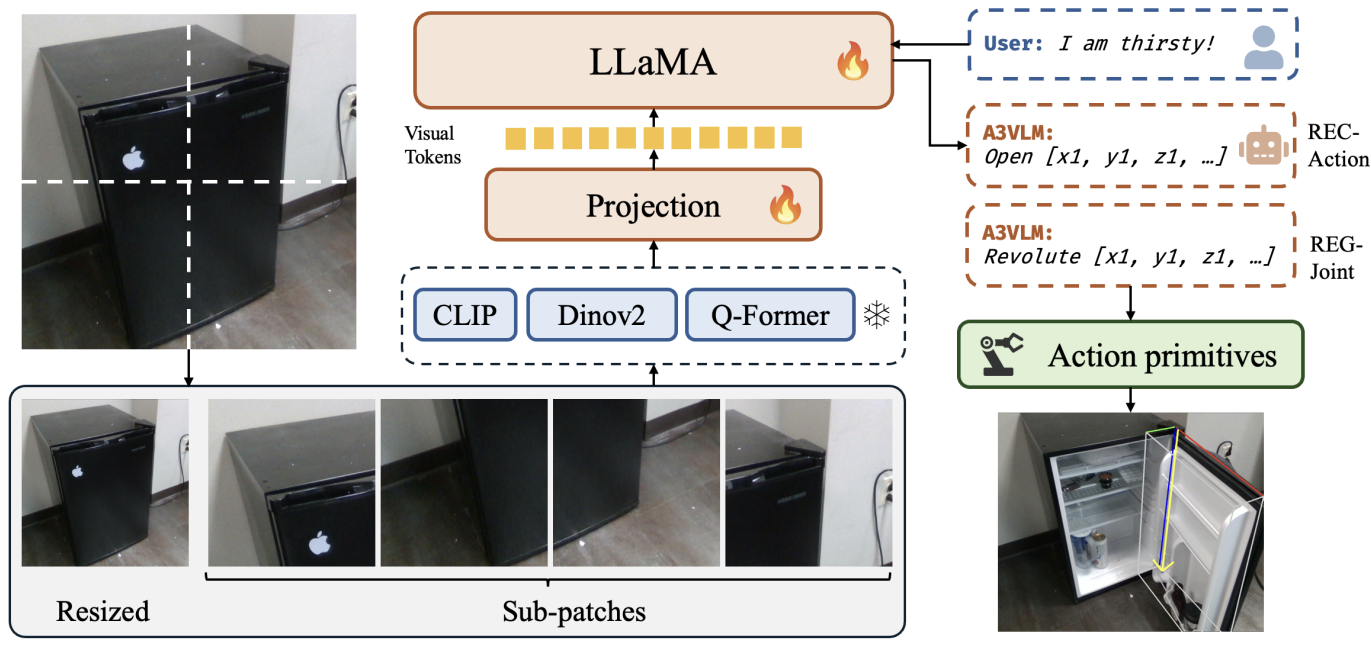

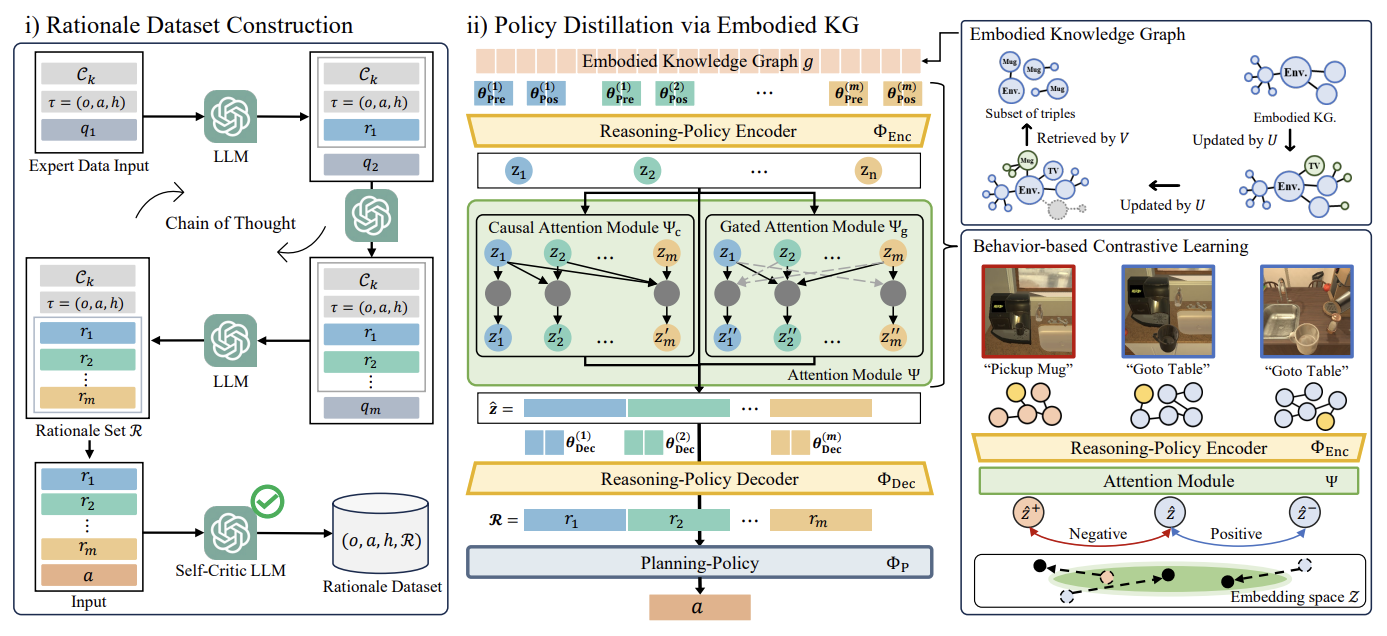

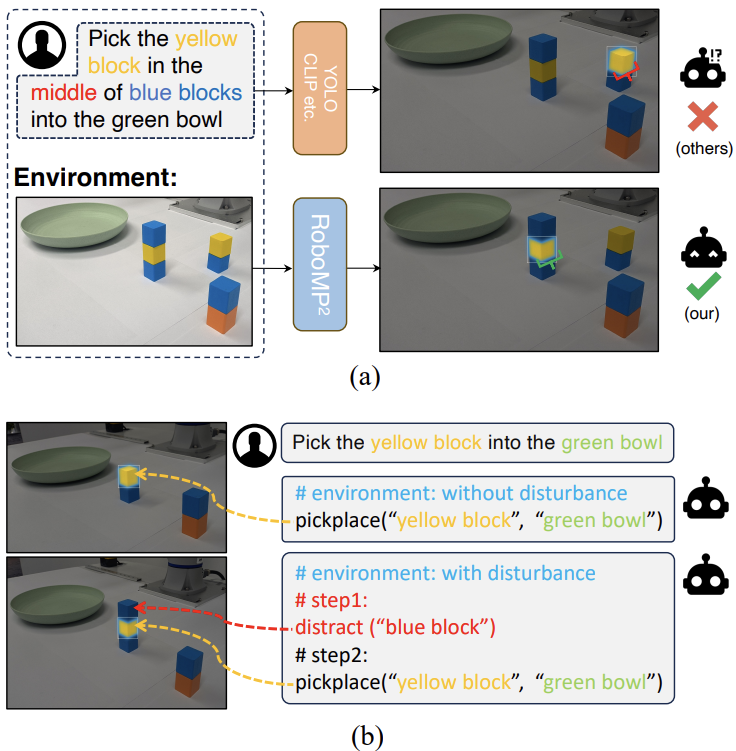

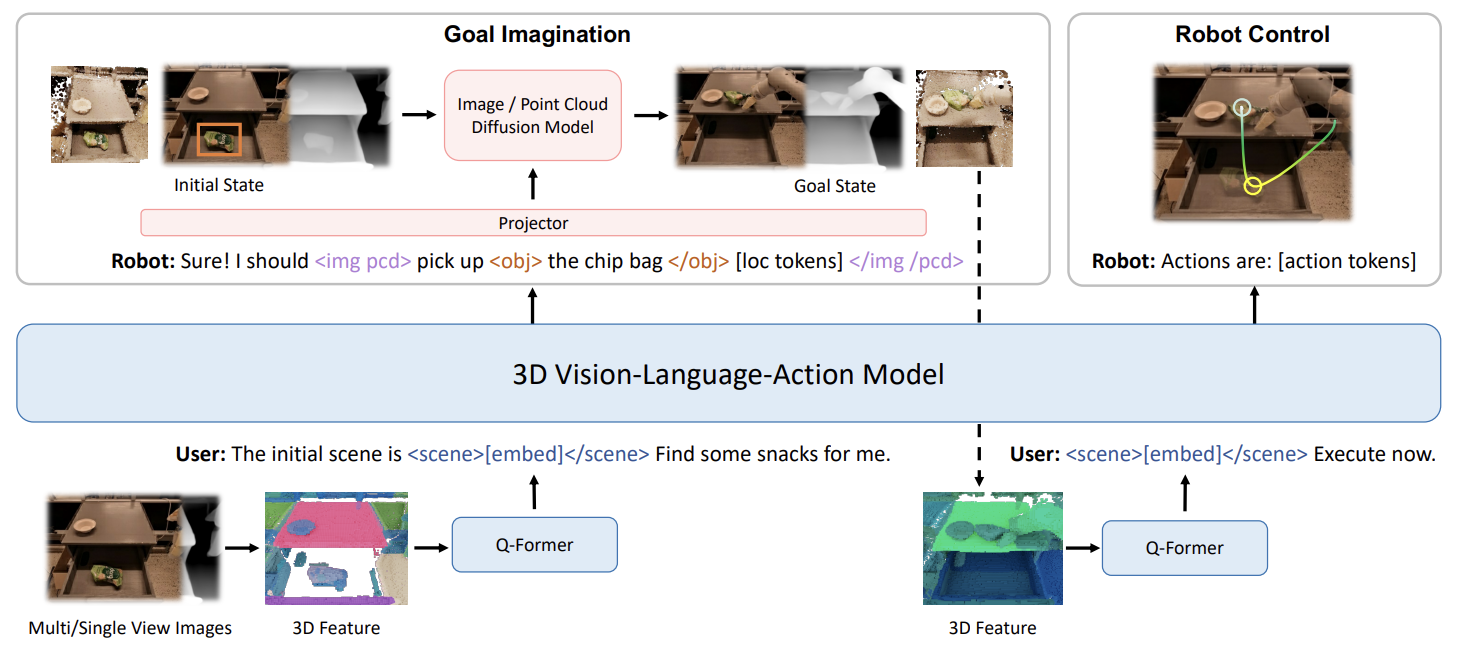

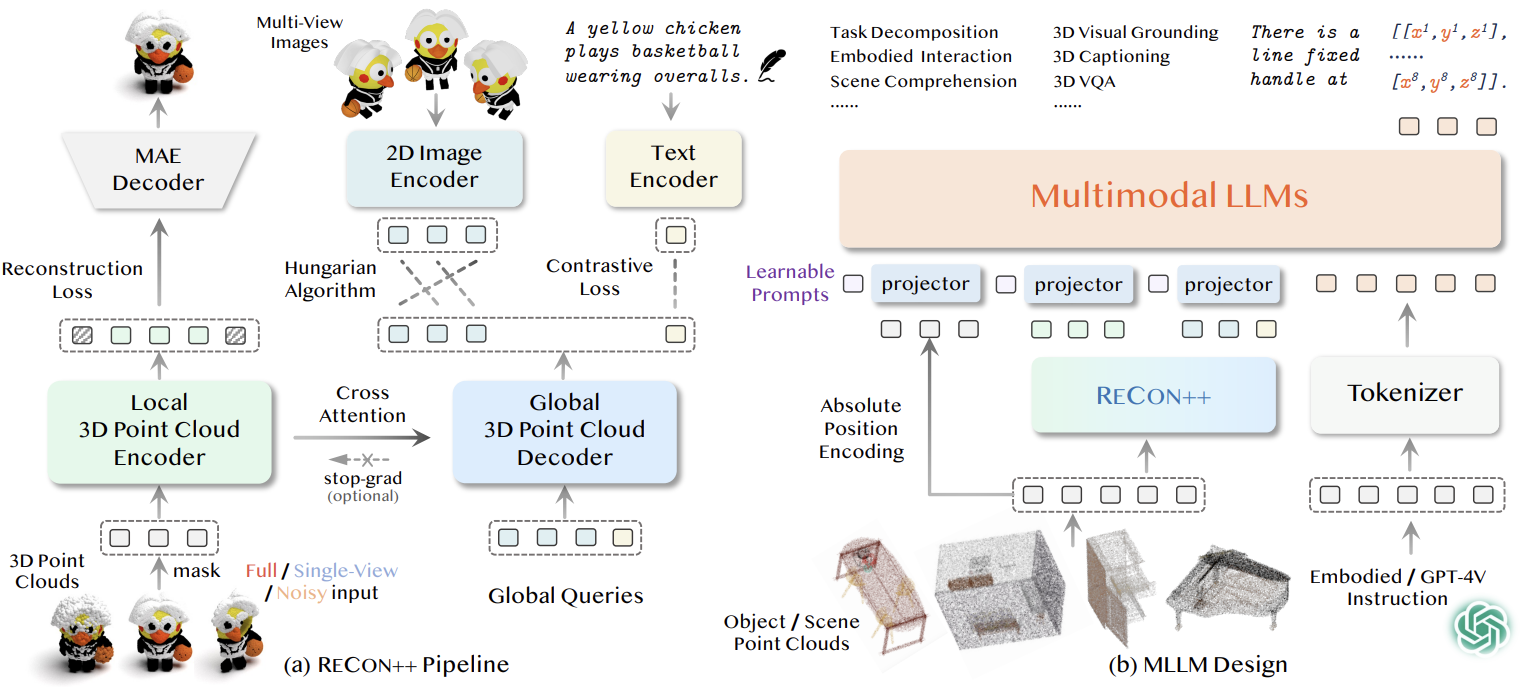

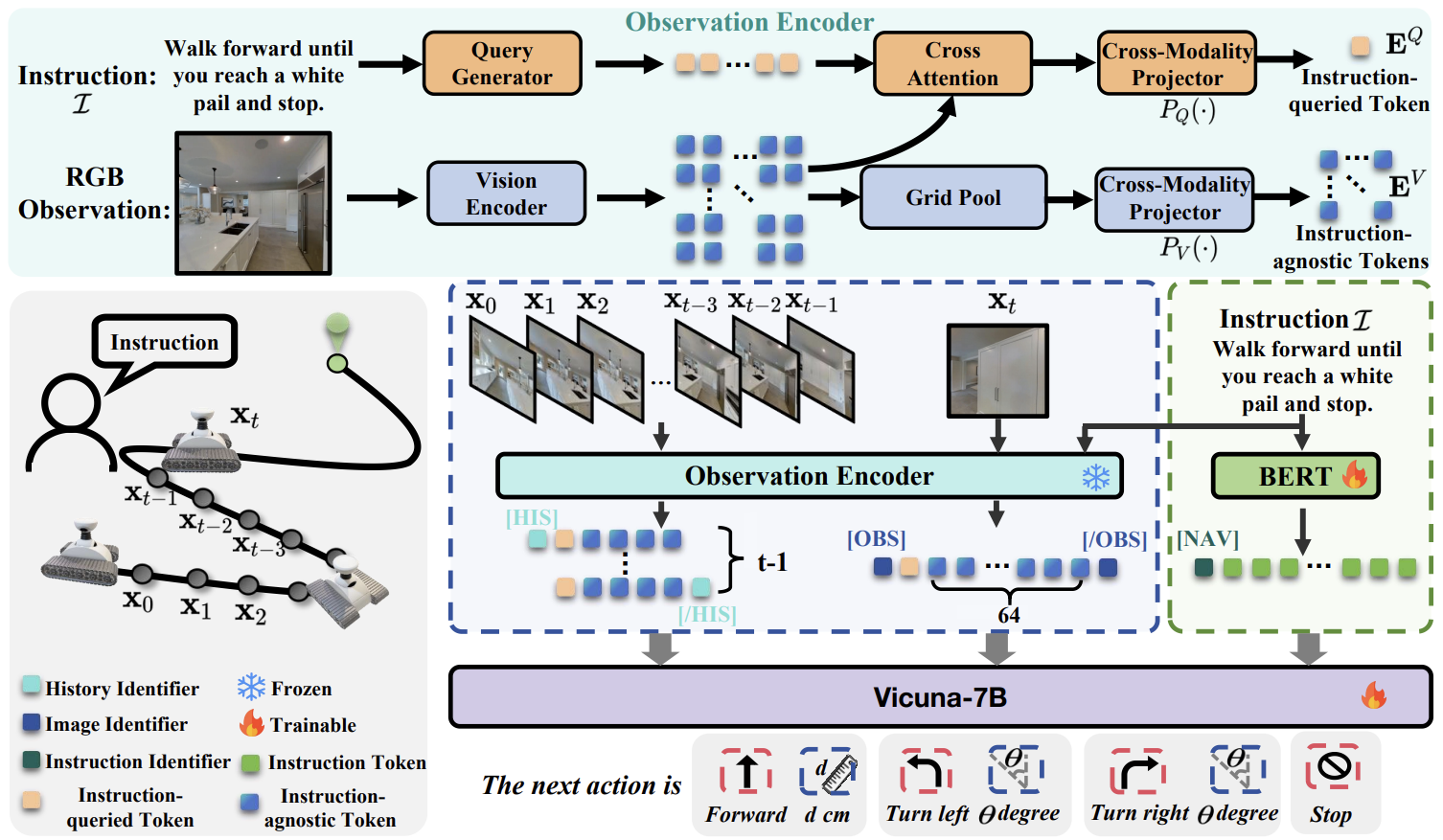

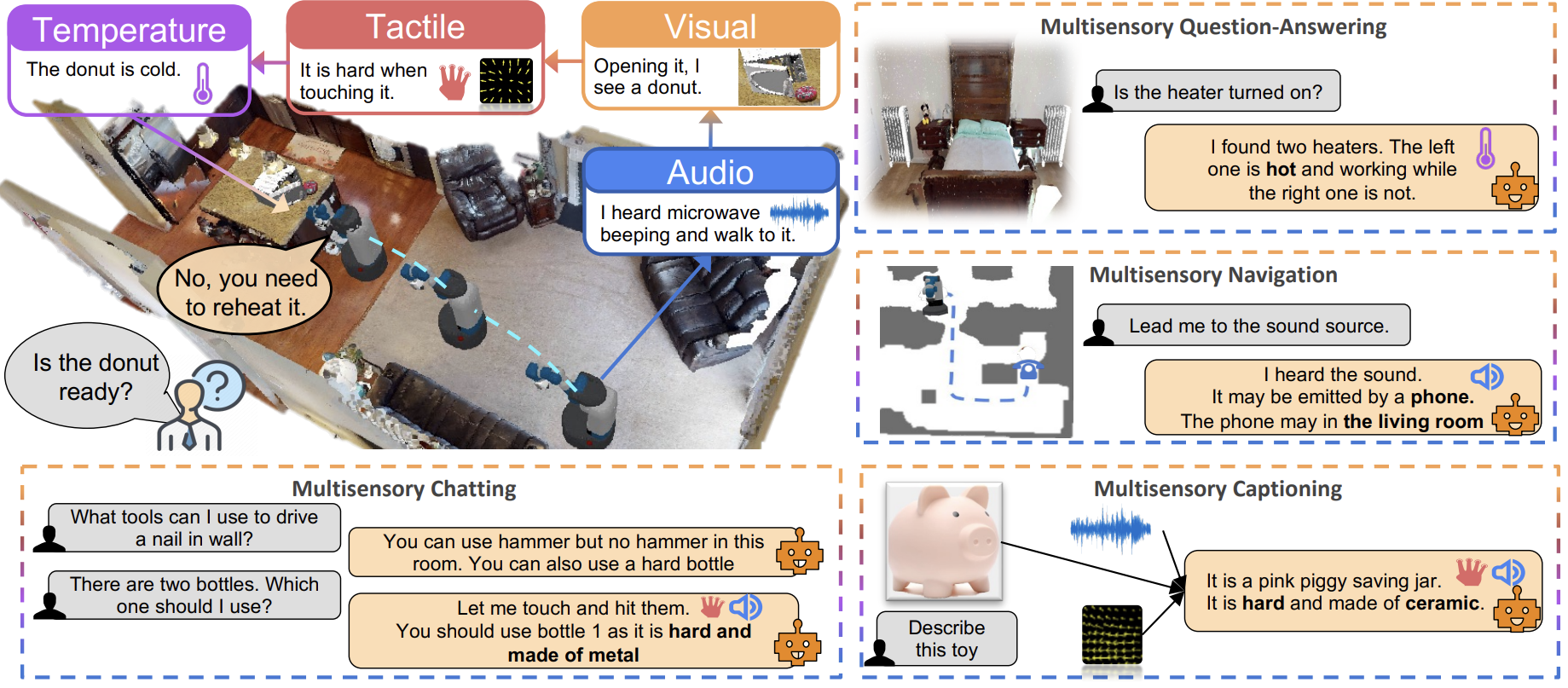

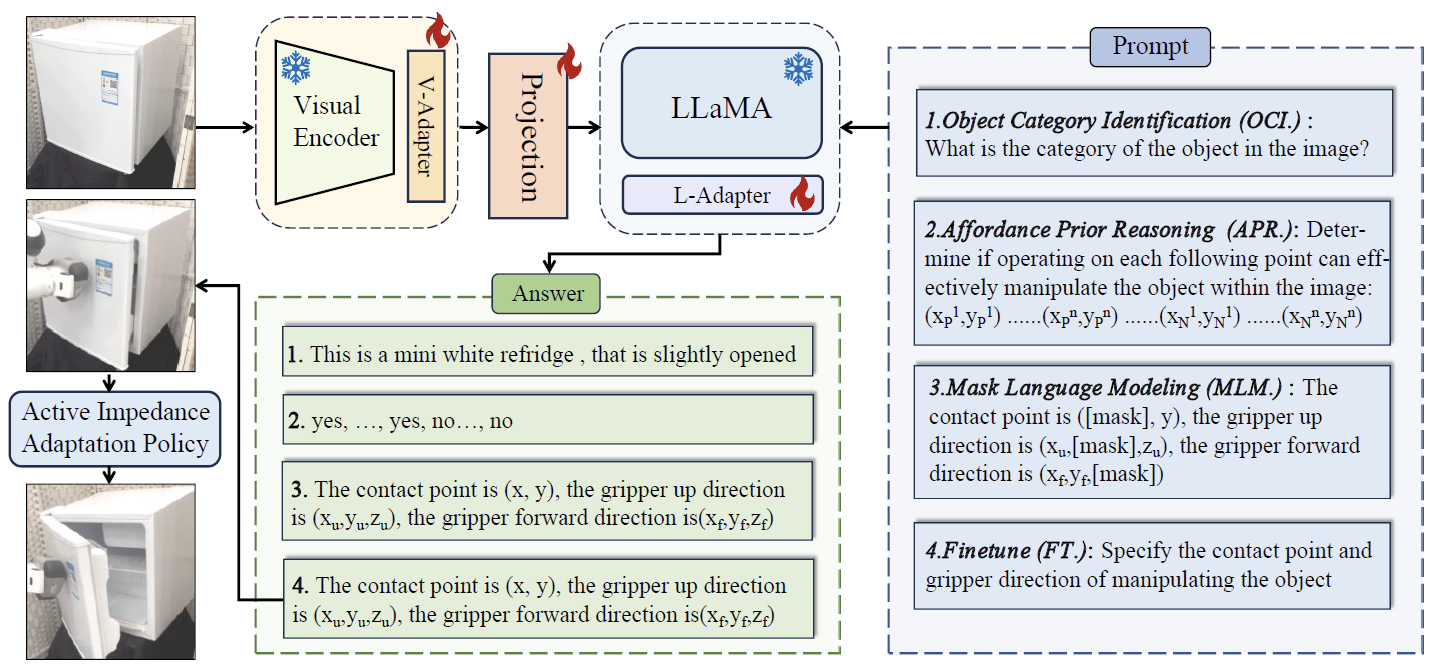

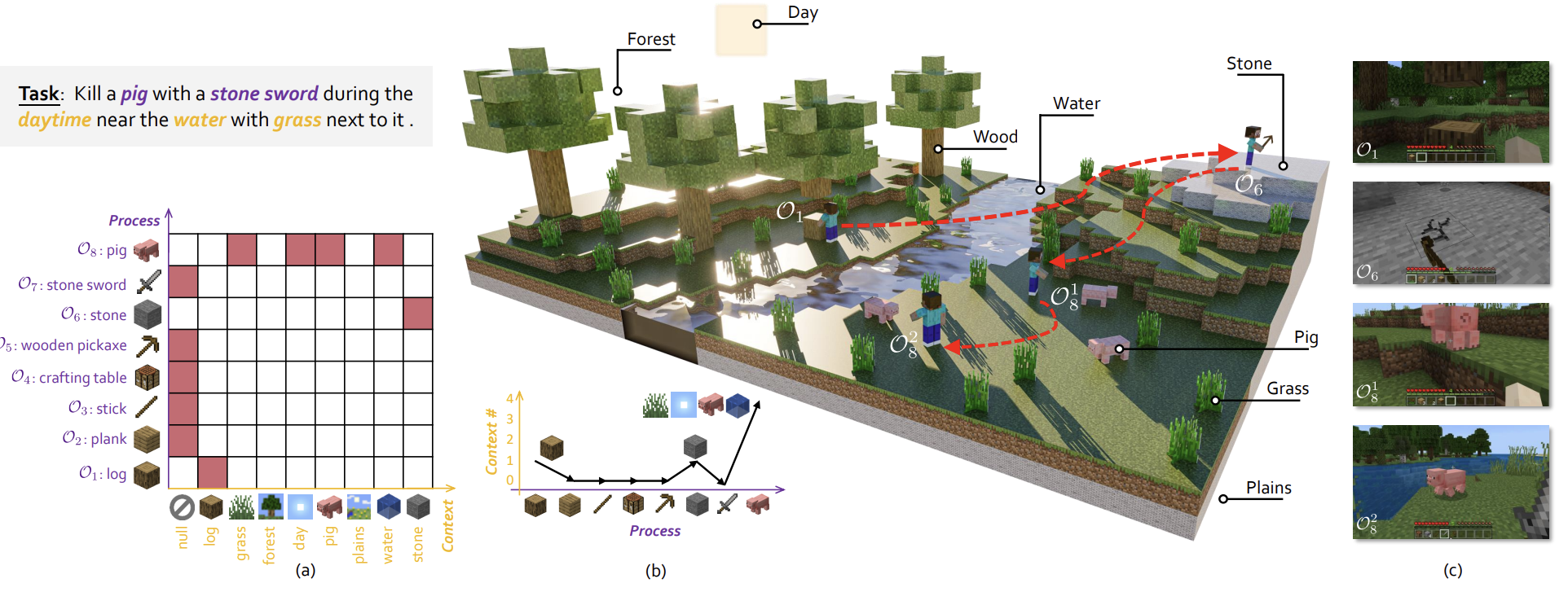

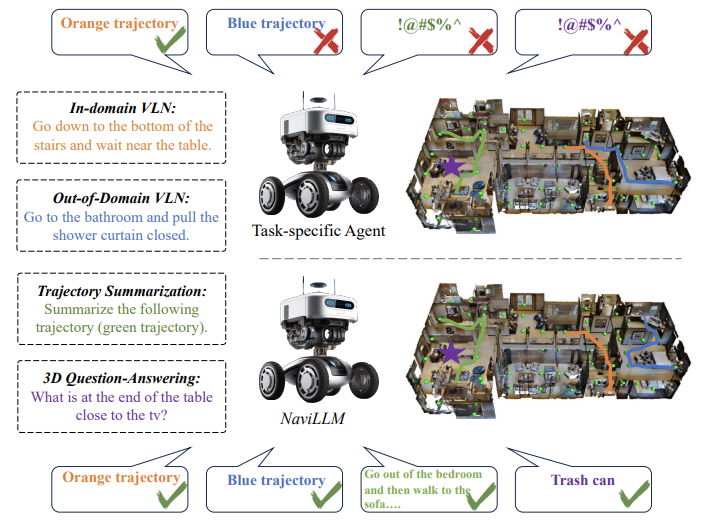

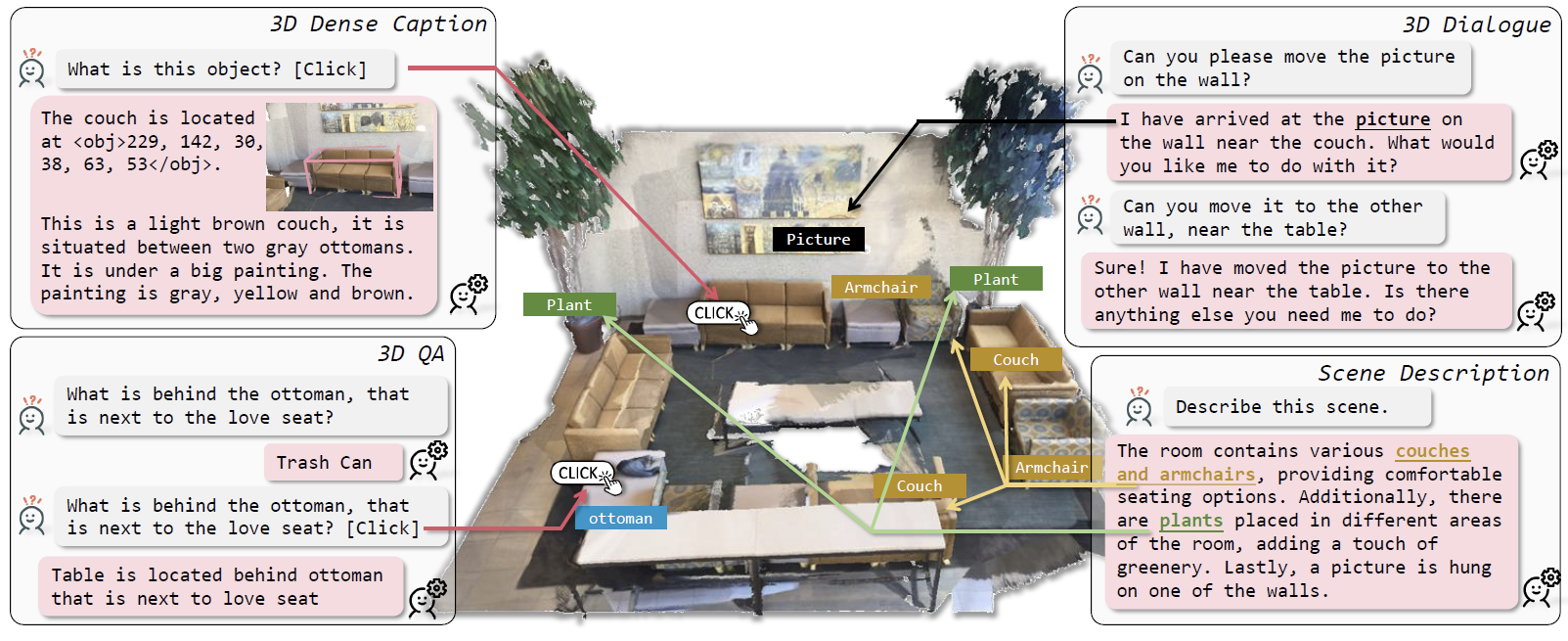

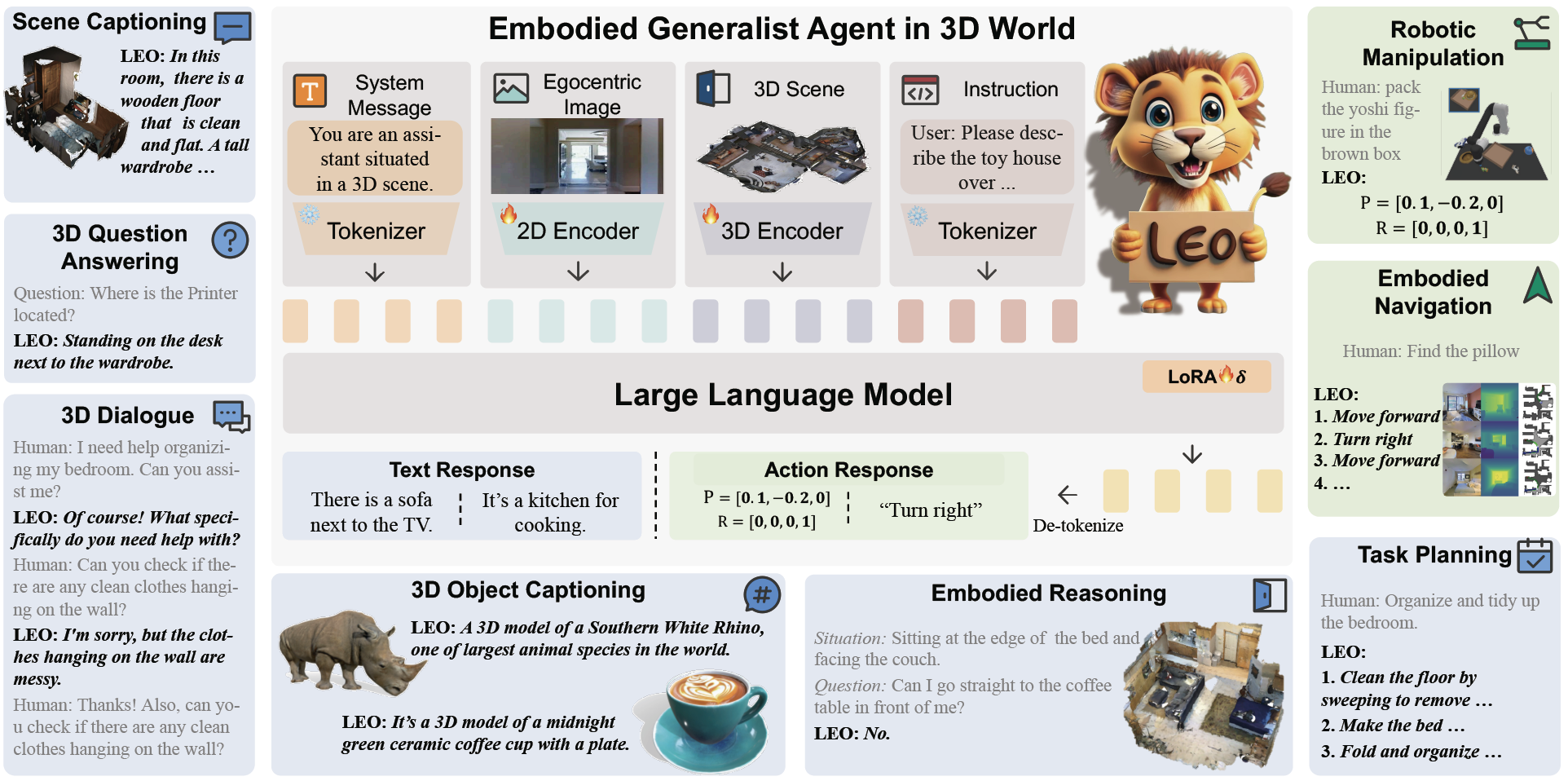

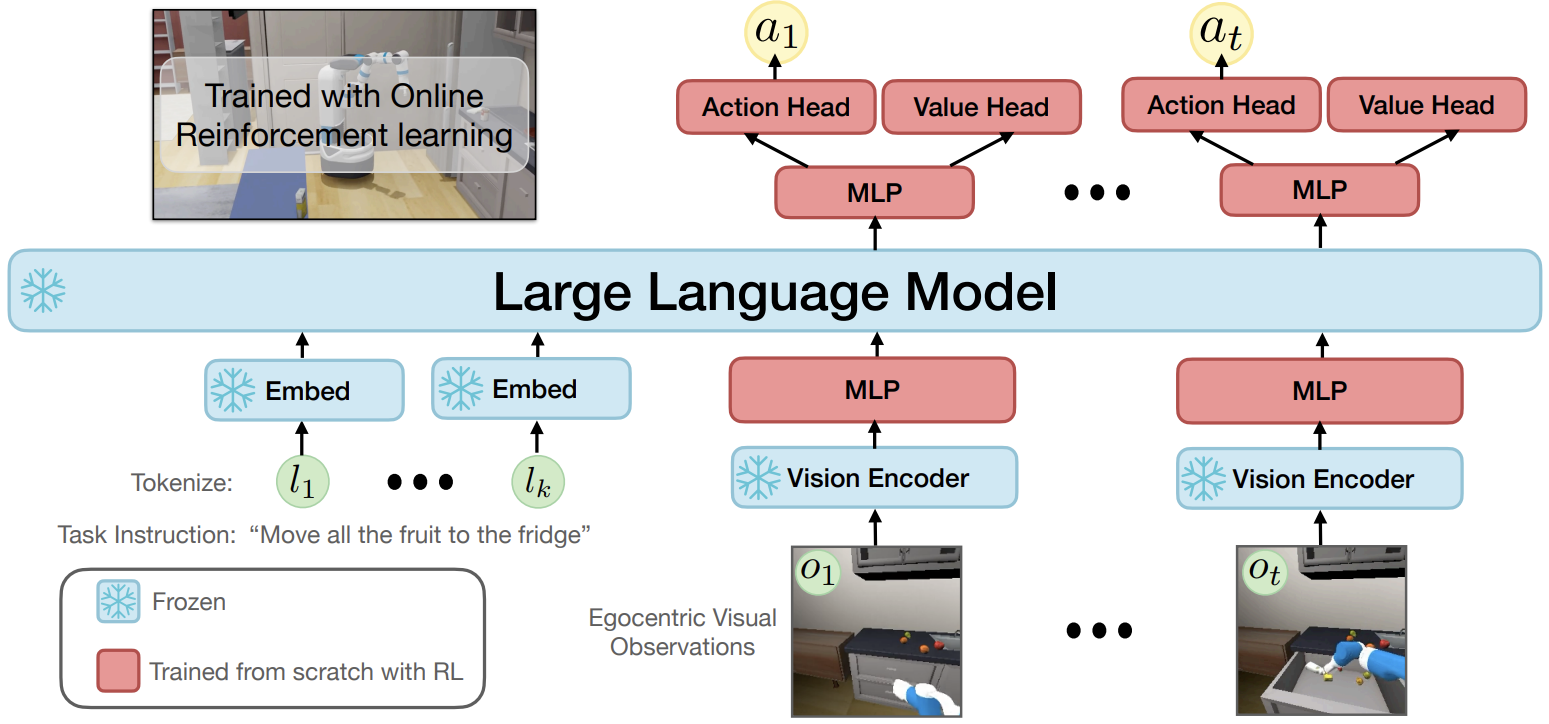

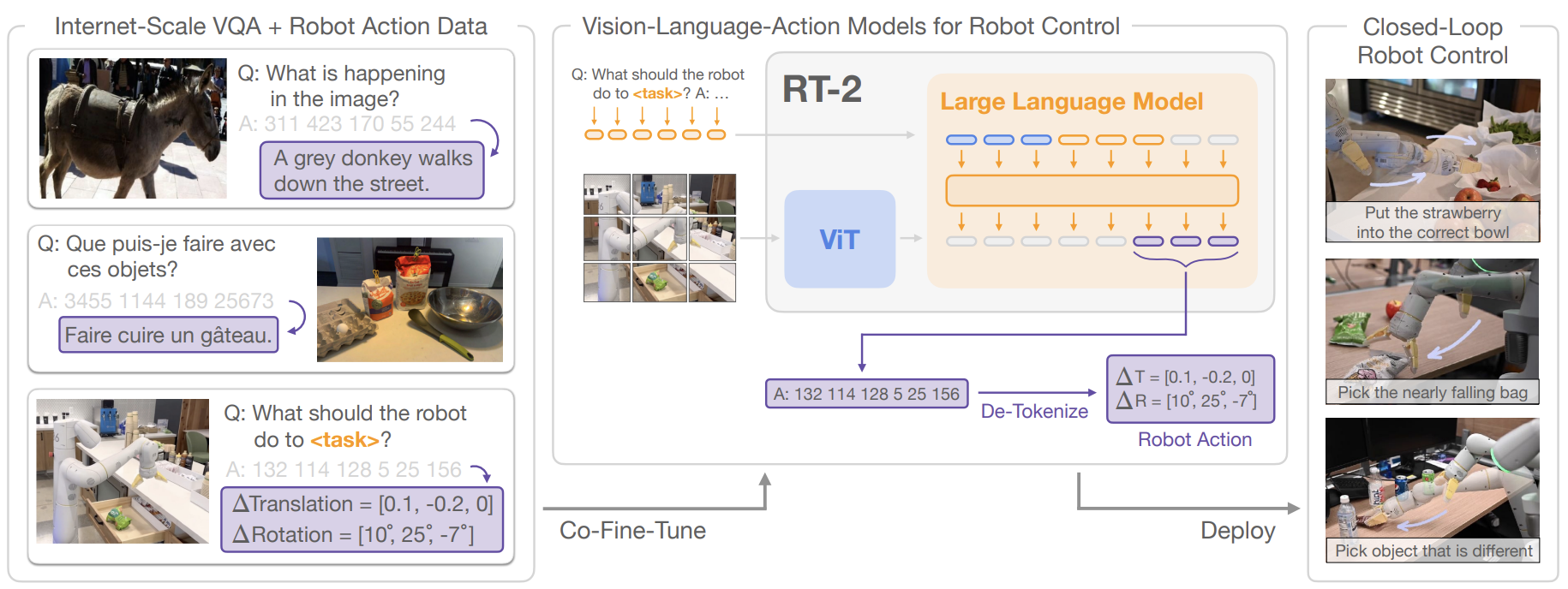

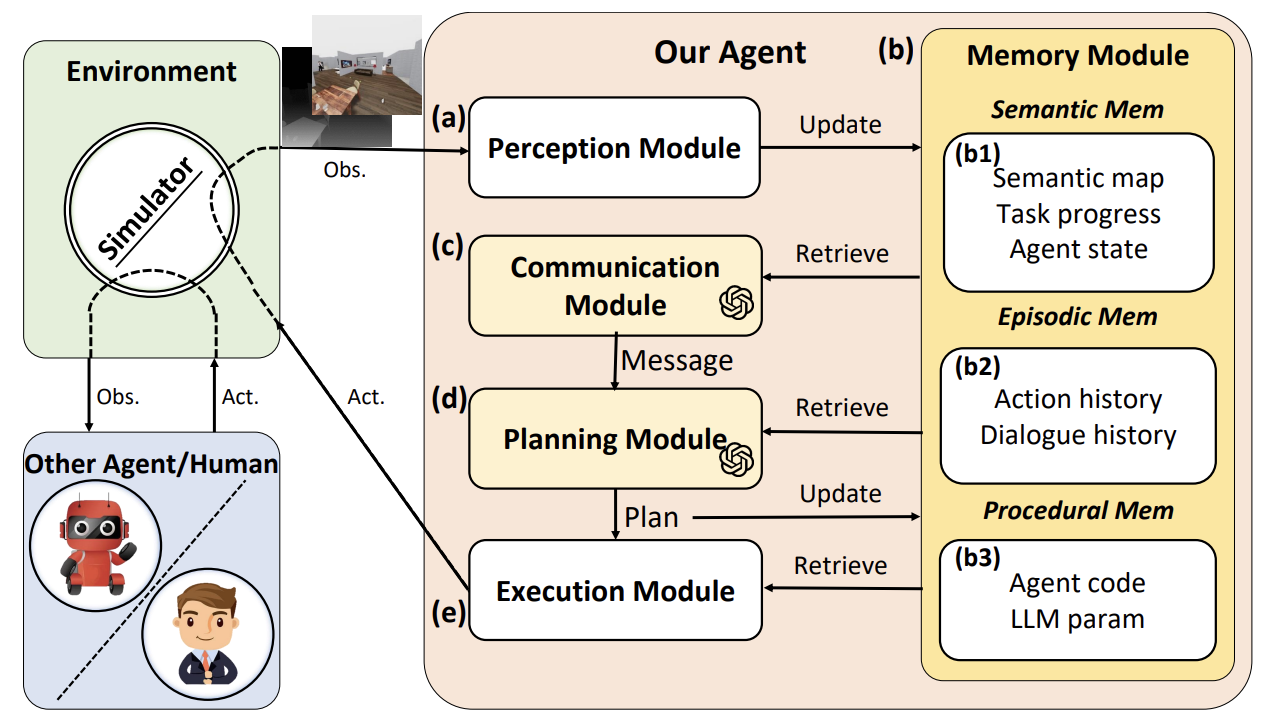

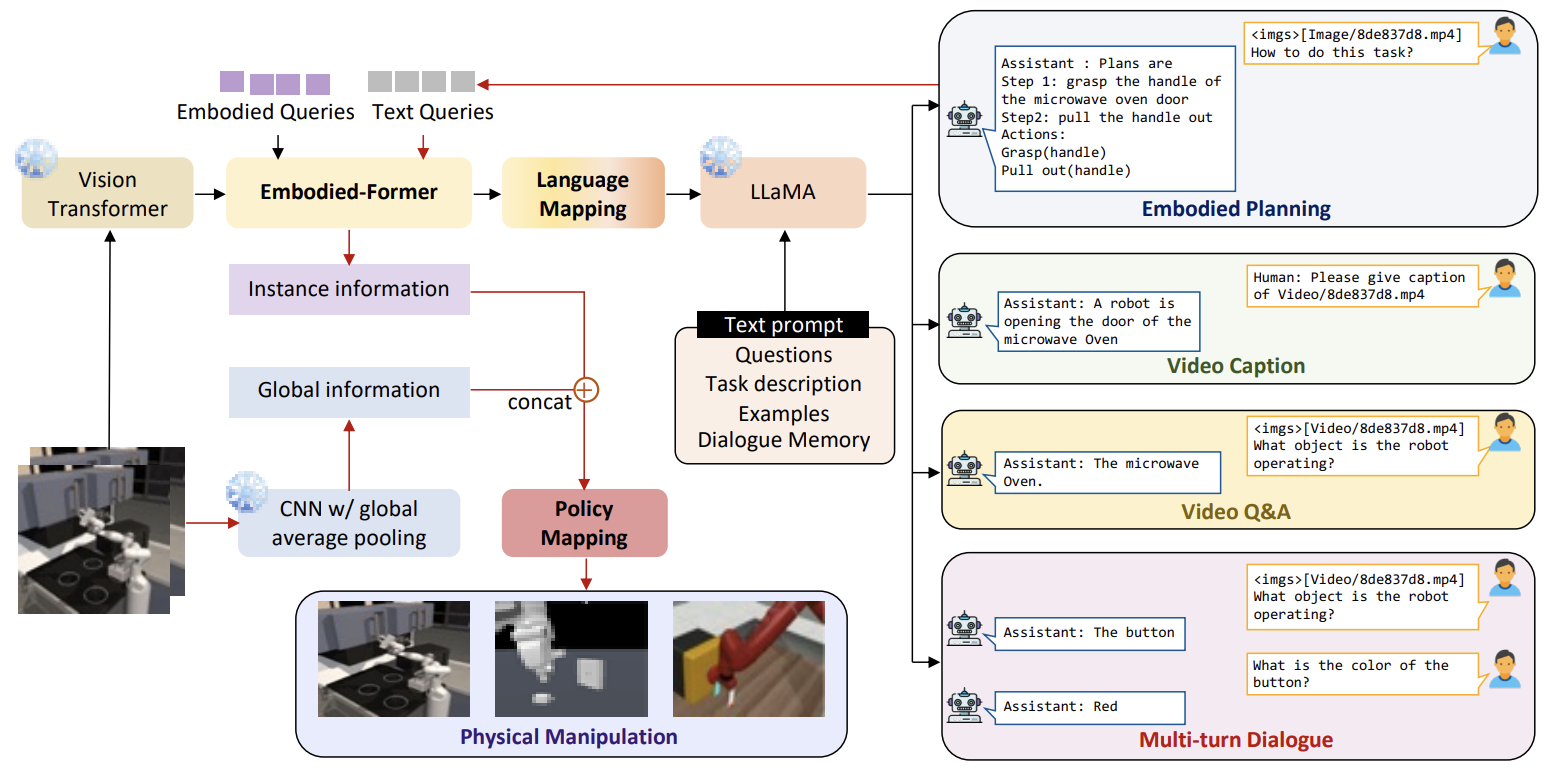

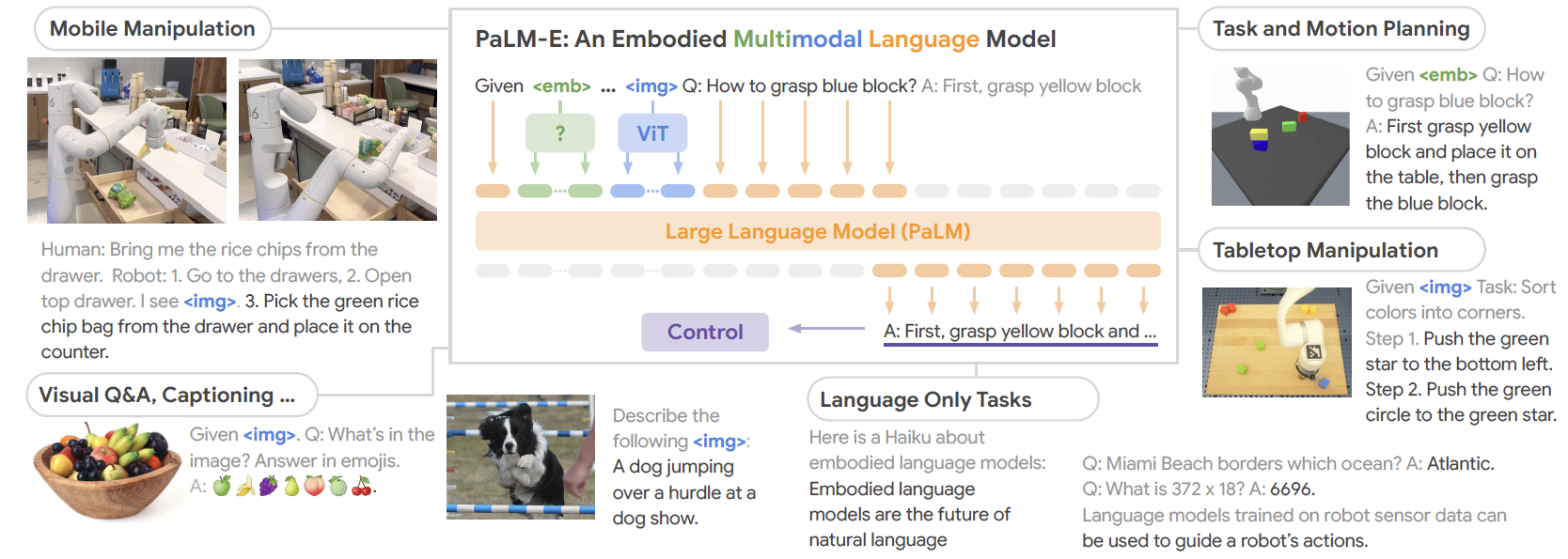

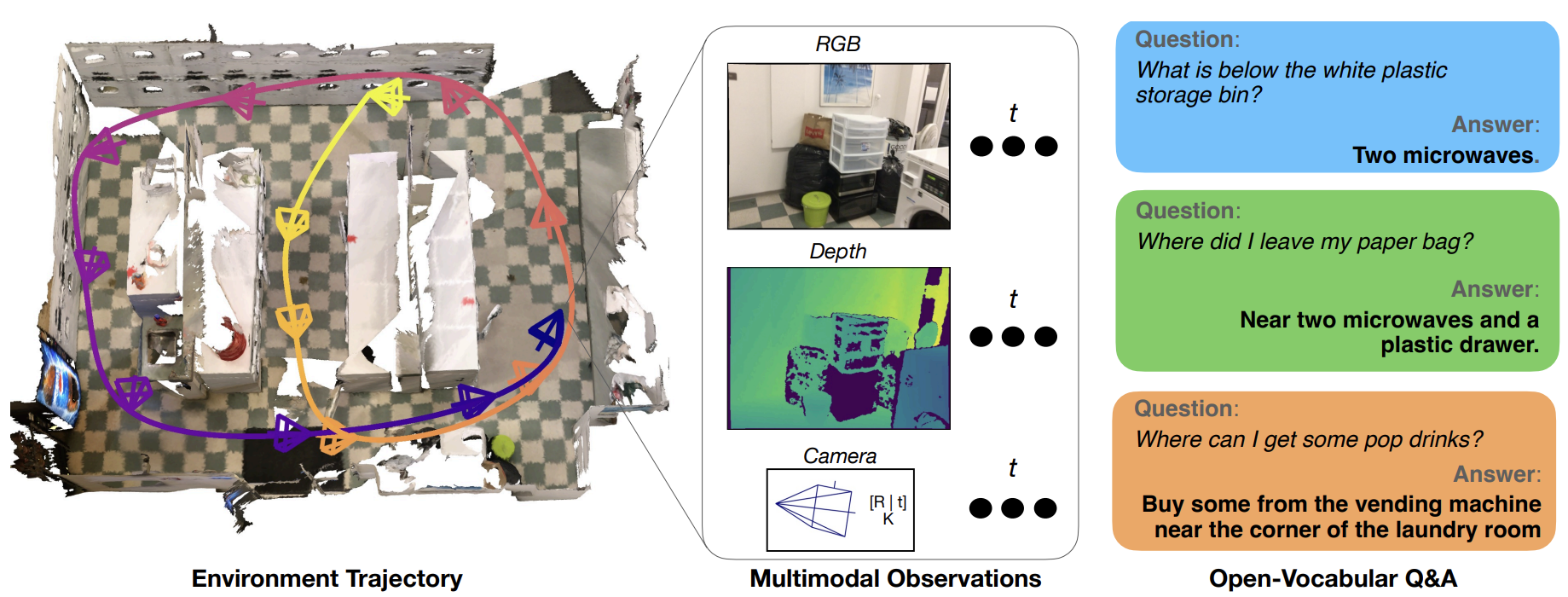

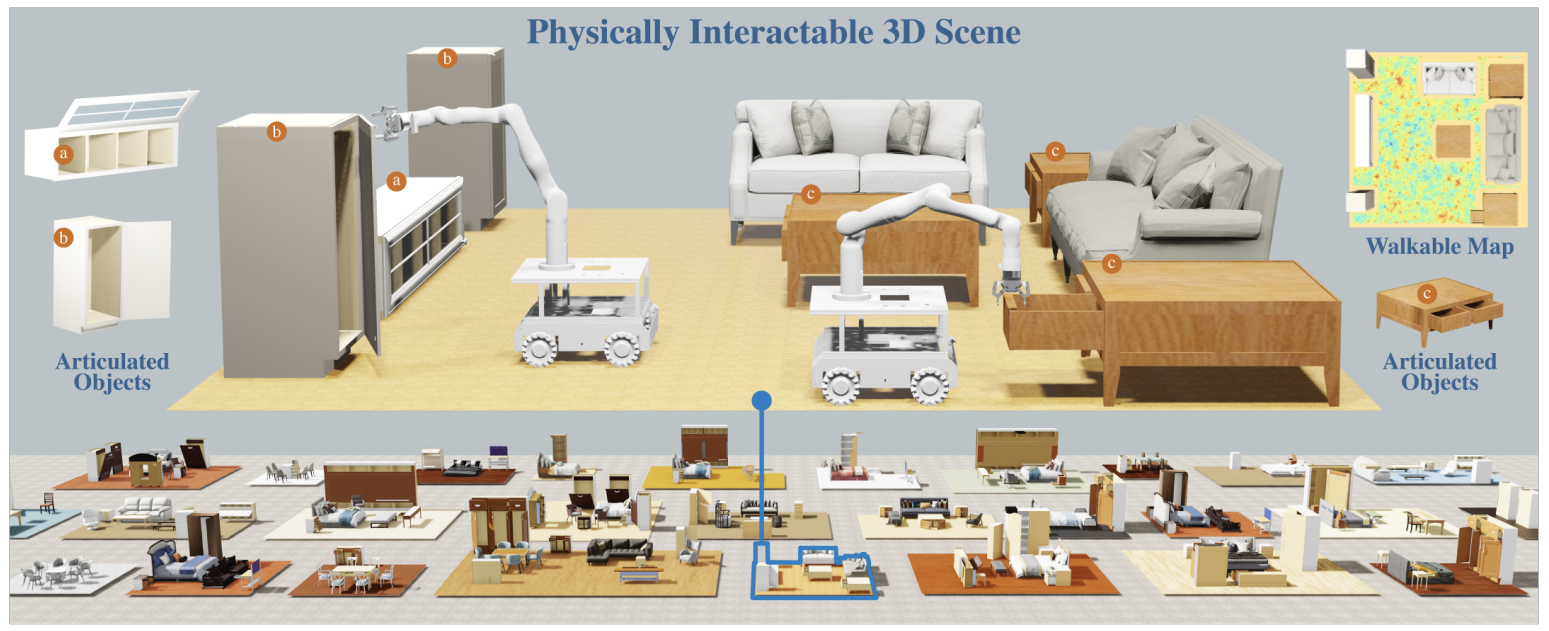

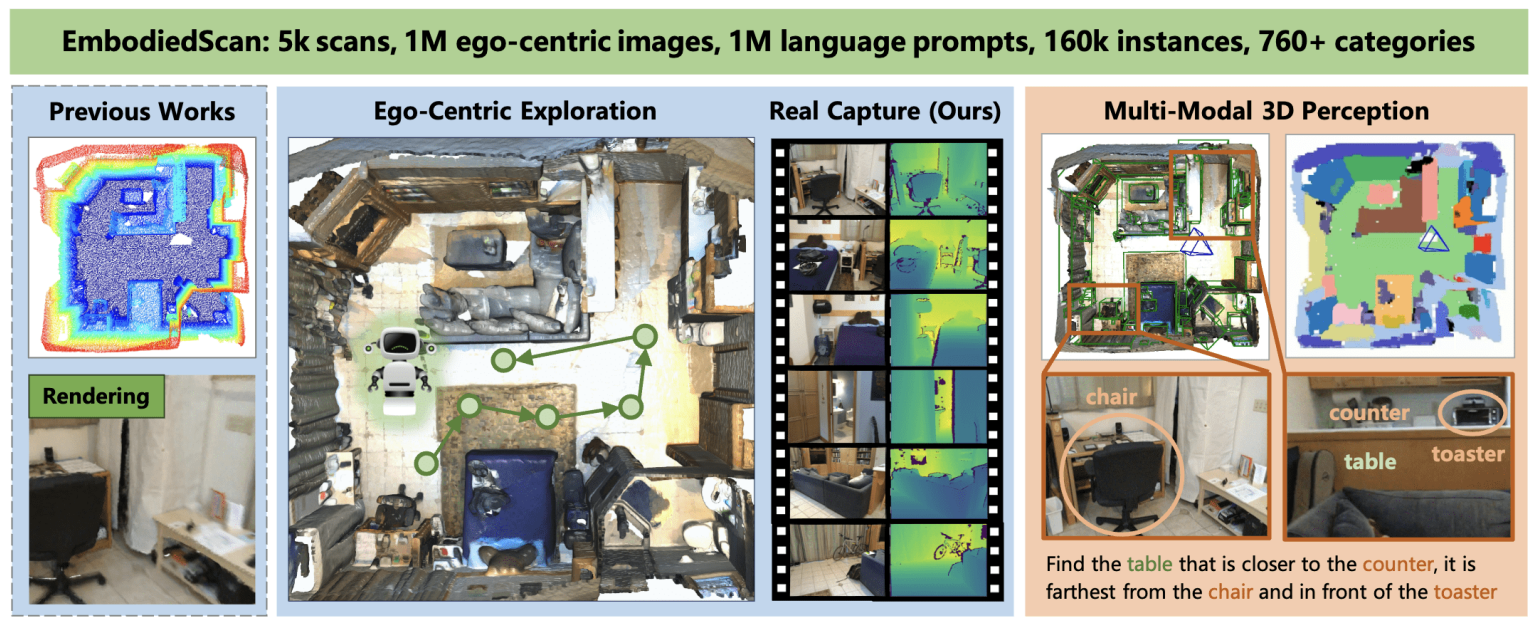

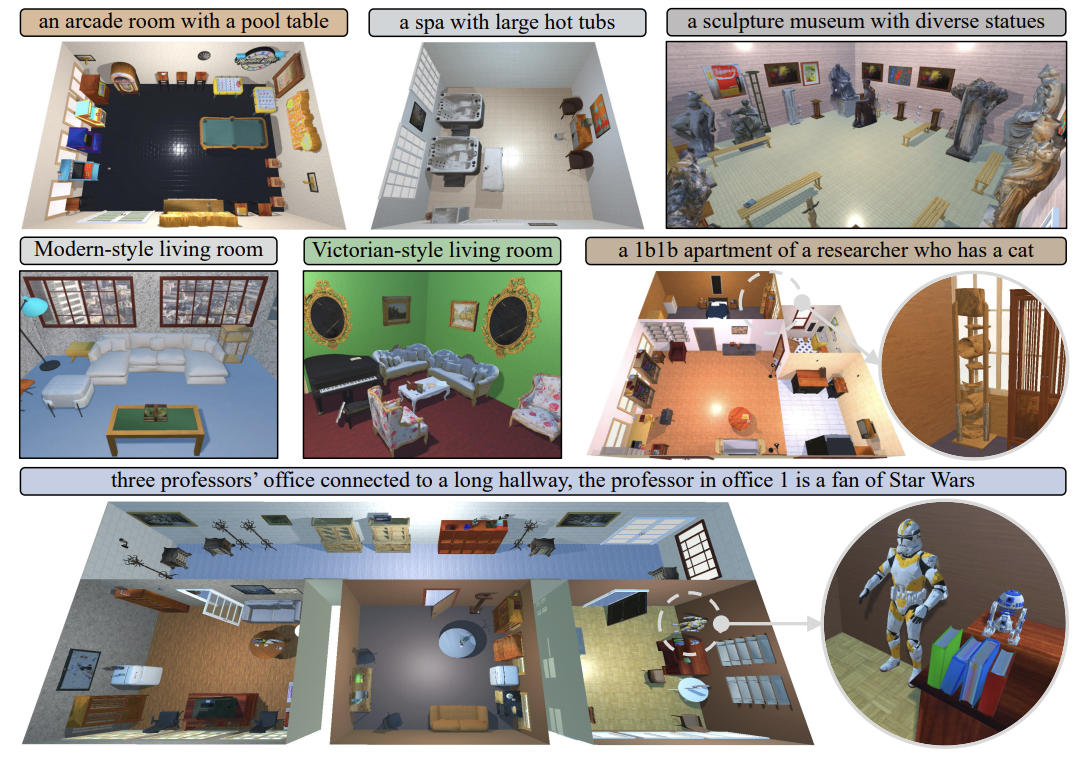

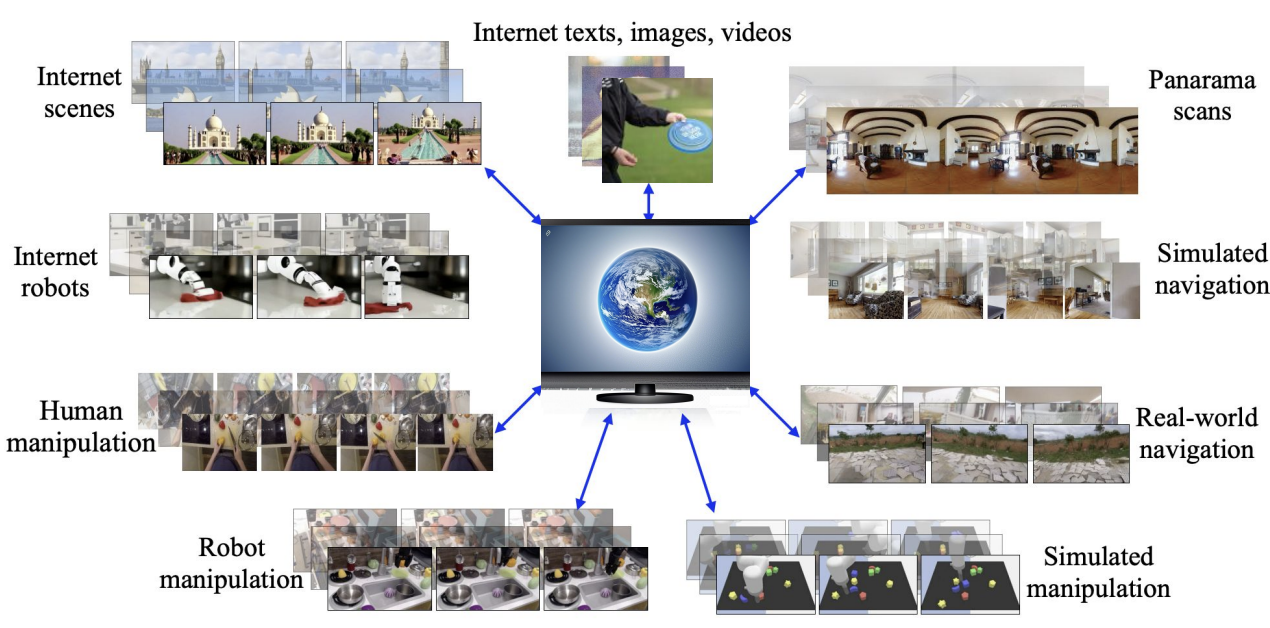

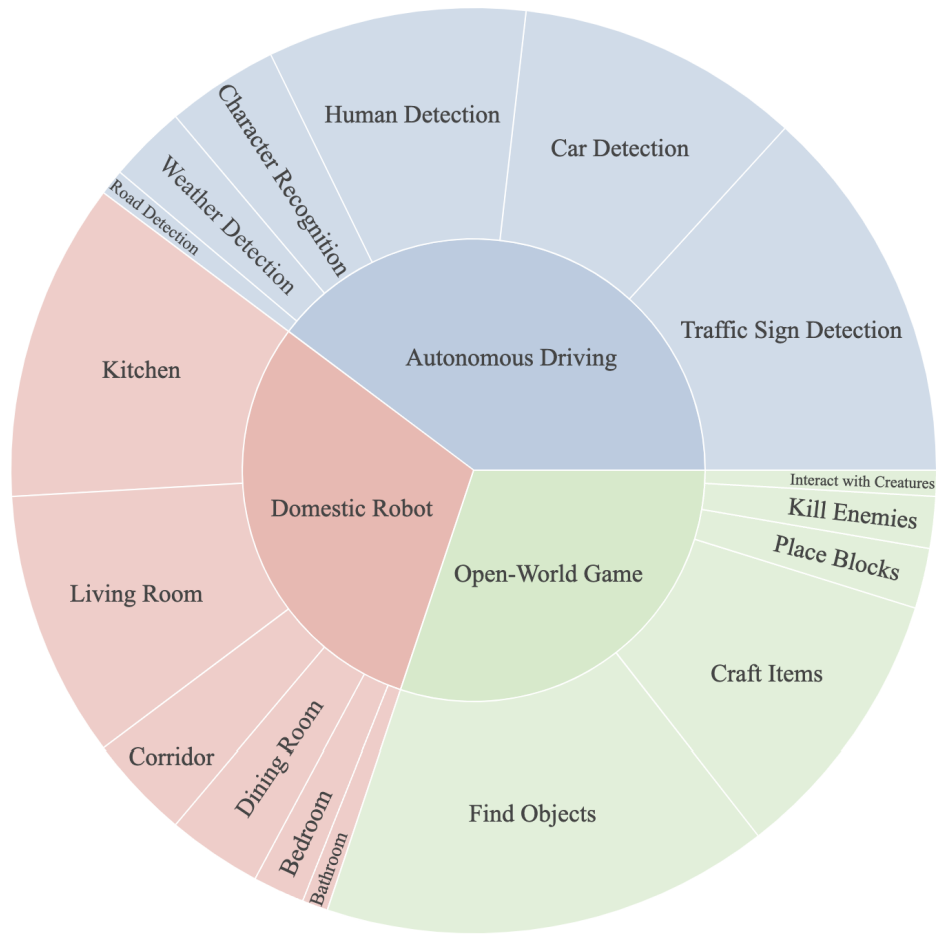

Embodied Multimodal LLMs integrate visual information and action outputs into large language models (LLMs). Leveraging the rich knowledge and strong reasoning capabilities of LLMs, these models excel at following human instructions interactively, understanding the real world, and carrying out a wide range of embodied tasks. They hold great potential as a step toward Artificial General Intelligence (AGI).