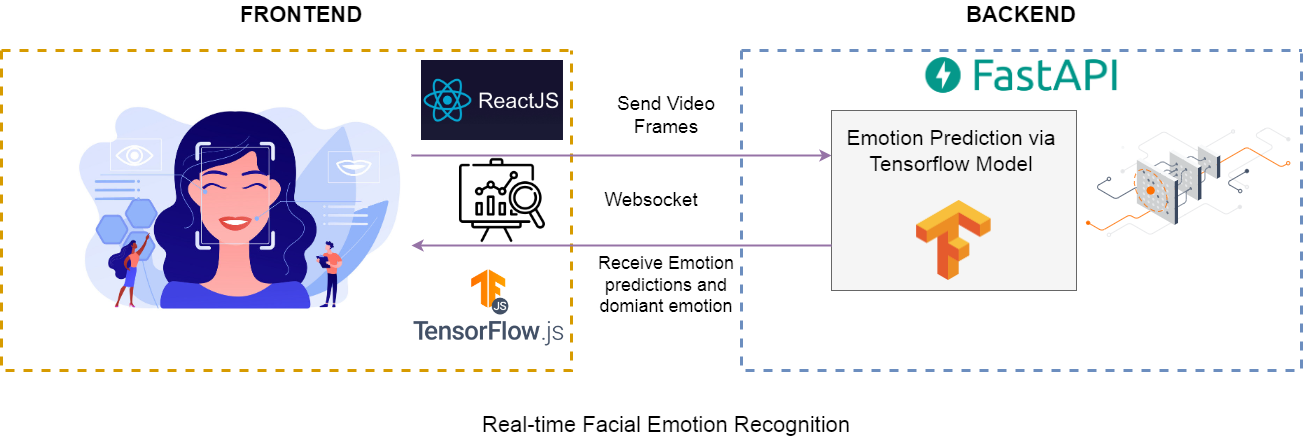

This repository demonstrates an end-to-end pipeline for a real-time facial emotion recognition application, built as a full-stack project. The frontend is developed in React.js and the backend in FastAPI. The emotion prediction model is built with TensorFlow Keras, and TensorFlow.js is used for real-time face detection with animation on the frontend.

Below is a demo video of the application that I have built.

A high-quality YouTube video is available at https://youtu.be/aTe05n6T5Vo

- ReactJS.

- Building the front-end UI.

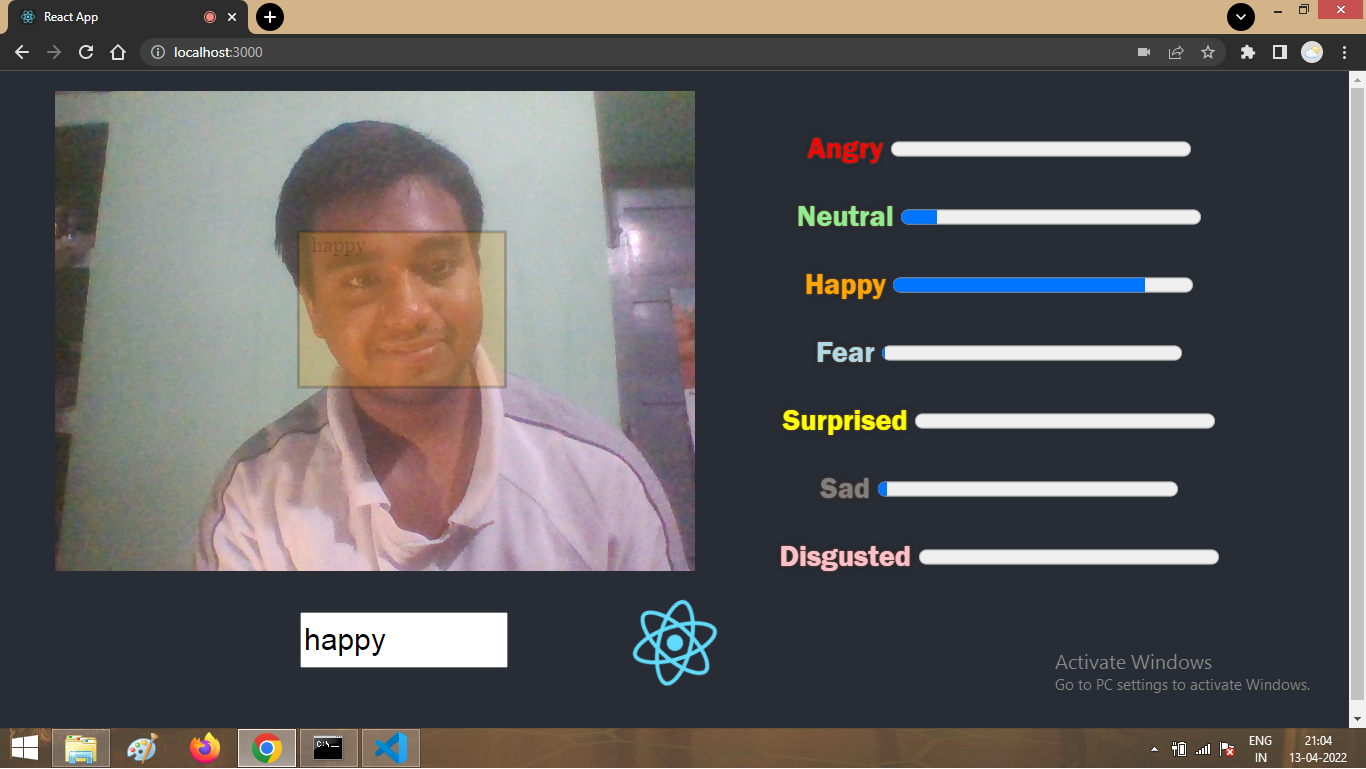

- Displaying Facial emotion in real-time.

- establish and connect to WebSocket to send video frames and receive Emotion prediction probabilities with dominant emotion.

- FastAPI.

- Connect to webSocket, receive video frame, send model predictions to front-end server.

- Build API.

- Tensorflow Keras.

- A pre-built emotion prediction Keras model is deployed.

- The model classifies 7 different emotions emotion: happy, sad, neutral, disgusted, surprised, fear, and angry.

- Model returns the prediction probability of each emotion.

- Tensorflow JS.

- Face Detection model

- Detect faces in an image using a Single Shot Detector architecture with a custom encoder(Blazeface).

Implementation of the full-stack end-to-end face emotion recognition pipeline.

$ cd server

$ pip install -r requirements.txt

$ cd server

$ uvicorn main:app --reload

$ cd emotion-recognition

$ npm start

Real-time video streaming is made possible by WebSocket. In the pipeline, we send video frames from the React frontend to the API server and receive the model's emotion prediction back from the API server. The Keras model predicts the probability of each emotion for each frame.
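The server-side half of this exchange can be sketched as follows. This is only a minimal sketch under assumptions that the text above does not confirm: it assumes the frontend sends each frame as a base64-encoded JPEG data URL in a text message, and the helper name `decode_frame` is hypothetical. In a FastAPI WebSocket handler, the message would typically come from `await websocket.receive_text()`.

```python
import base64
import binascii


def decode_frame(message: str) -> bytes:
    """Decode one WebSocket text message into raw image bytes.

    Assumption: the message is either a data URL such as
    "data:image/jpeg;base64,<payload>" or a bare base64 string.
    """
    # Drop the "data:image/...;base64," header if one is present.
    _, sep, payload = message.partition(",")
    encoded = payload if sep else message
    try:
        return base64.b64decode(encoded, validate=True)
    except binascii.Error as exc:
        raise ValueError("frame is not valid base64") from exc


if __name__ == "__main__":
    # Round-trip with stand-in bytes instead of a real JPEG frame.
    fake_jpeg = b"\xff\xd8\xff\xe0 fake frame"
    message = "data:image/jpeg;base64," + base64.b64encode(fake_jpeg).decode("ascii")
    assert decode_frame(message) == fake_jpeg
```

The decoded bytes would then be turned into an image array, cropped or resized as the model expects, and passed to the Keras model; those steps depend on the model's input shape and are left out here.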

The response payload sent by the API server consists of two keys, "predictions" and "emotion". The "predictions" key holds the probability of each emotion predicted by the model, which drives the per-frame update of the emotion bars. The "emotion" key holds the dominant emotion, which sets the bounding box colour and is displayed inside the bounding box for each frame. The bounding box around the face is detected using a TensorFlow.js model.
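A response of this shape could be assembled roughly as shown below. This is a sketch, not the repository's code: the function name `build_response` and the label order in `EMOTIONS` are assumptions, and the order must match whatever order the Keras model was trained with. Representing "predictions" as a label-to-probability mapping is also an assumption.

```python
import json

# Assumed label order; the real order depends on how the model was trained.
EMOTIONS = ["angry", "disgusted", "fear", "happy", "neutral", "sad", "surprised"]


def build_response(probabilities):
    """Map one row of model probabilities to the two-key payload."""
    if len(probabilities) != len(EMOTIONS):
        raise ValueError("expected one probability per emotion")
    # Cast to plain floats so the payload is JSON-serialisable
    # even if the inputs are NumPy scalars.
    predictions = {label: float(p) for label, p in zip(EMOTIONS, probabilities)}
    # The dominant emotion is the label with the highest probability.
    dominant = max(predictions, key=predictions.get)
    return {"predictions": predictions, "emotion": dominant}


if __name__ == "__main__":
    # Made-up probabilities standing in for one model prediction.
    example = [0.05, 0.01, 0.04, 0.70, 0.10, 0.06, 0.04]
    print(json.dumps(build_response(example)))
```

On the frontend, the "emotion" value can then be looked up in a colour table to style the bounding box, while the "predictions" mapping feeds the per-emotion bars.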

- The pipeline successfully streams video in real-time and updates the emotion recognition dashboard from the user's face for each frame.

- A pre-built ML model predicts the emotion for each frame. A lighter, more efficient custom model with higher accuracy could be built and loaded to improve the performance of the ML pipeline.

- The frontend and backend are connected via WebSocket, which enables real-time video streaming.

- TensorFlow.js, used for face detection on the frontend, leaves room for a more interactive interface.

- A custom, lightweight, and efficient ML model with higher accuracy could give better results.

- There is a lag between the current frame and the ML model's prediction. This could be reduced by running the application on a system with more computing power; my project ran on a system with 4 GB of RAM.

- Making a disgusted face in the demo video was difficult for me, so the "disgusted" emotion bar barely spiked at any point. Another possible reason is that the model was trained with fewer examples from the "disgust" class than from the other classes.