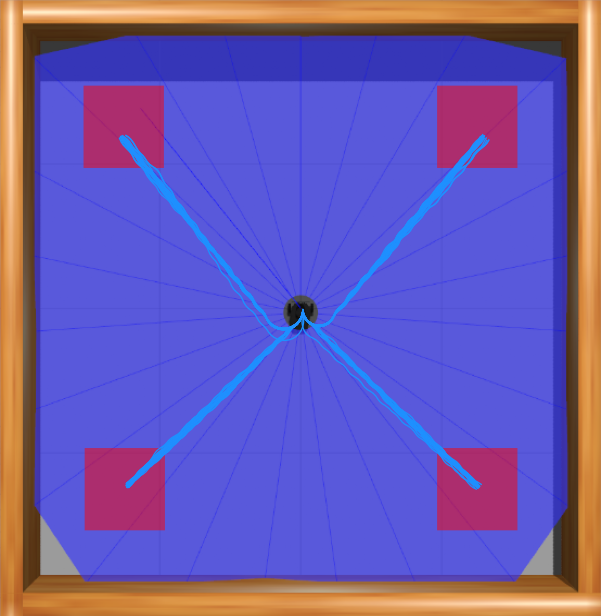

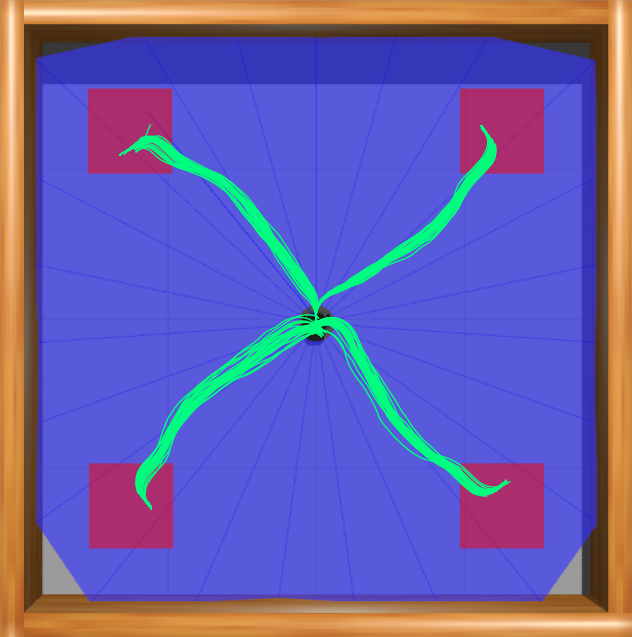

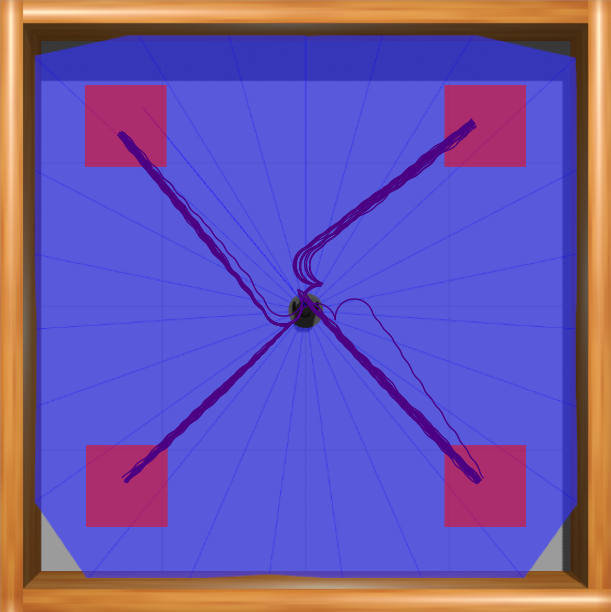

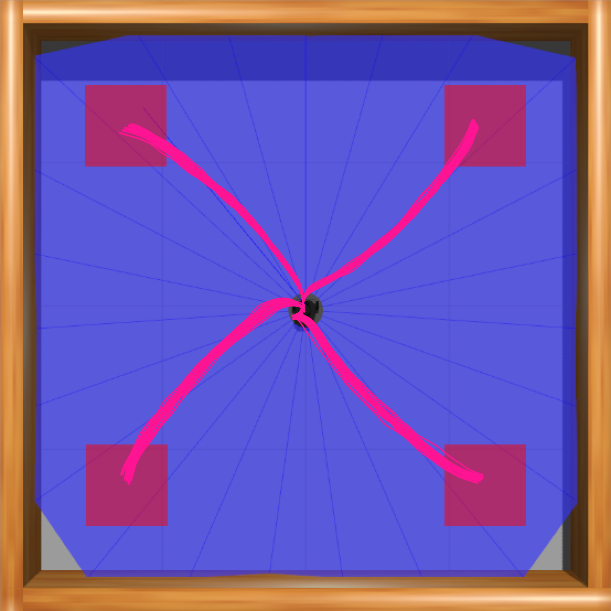

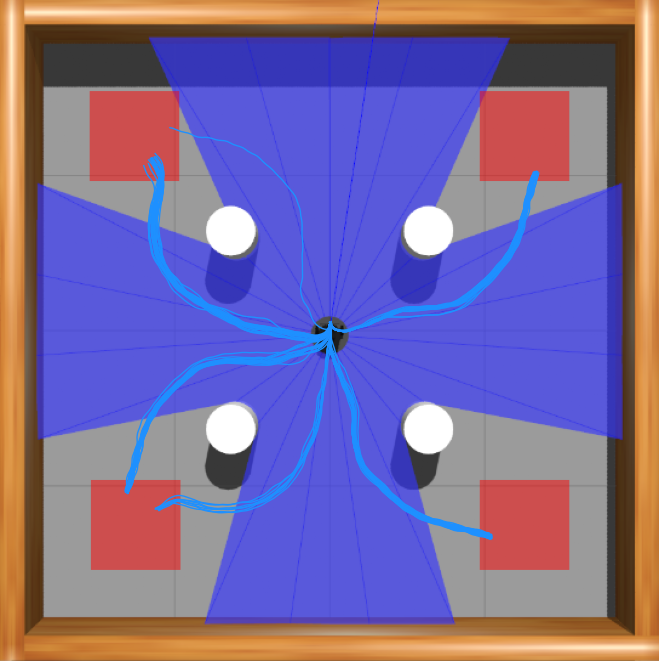

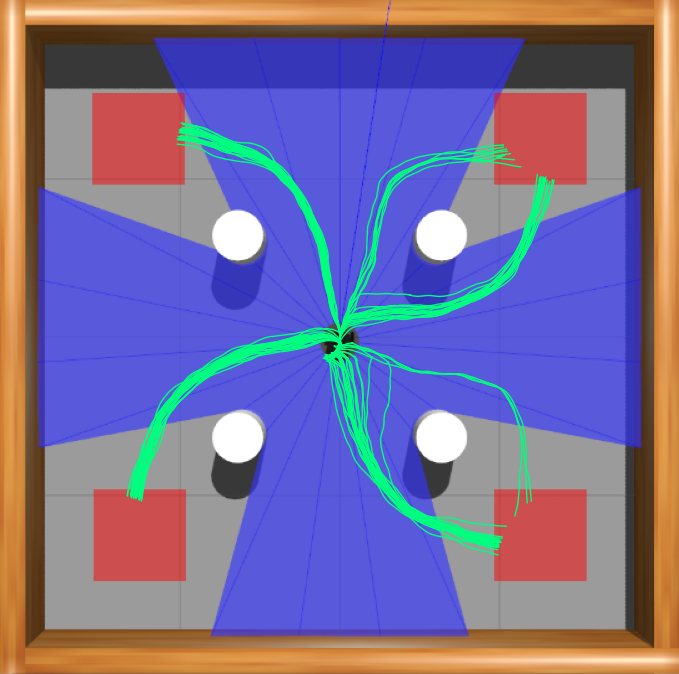

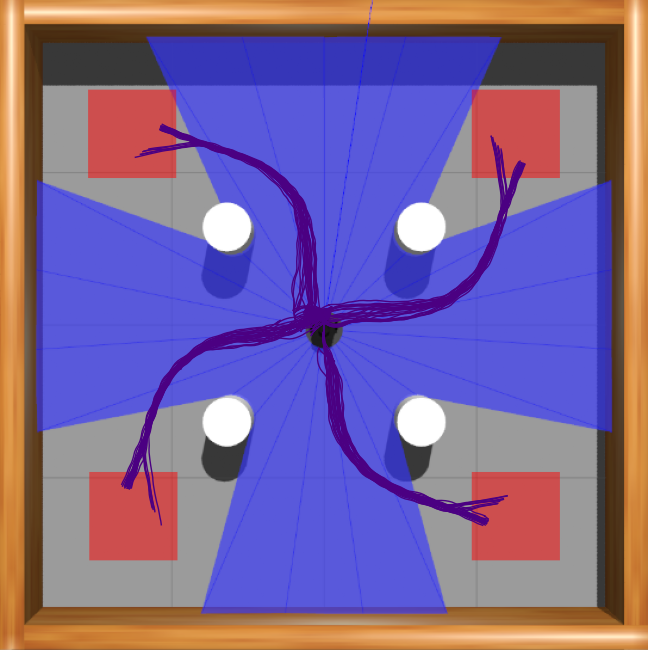

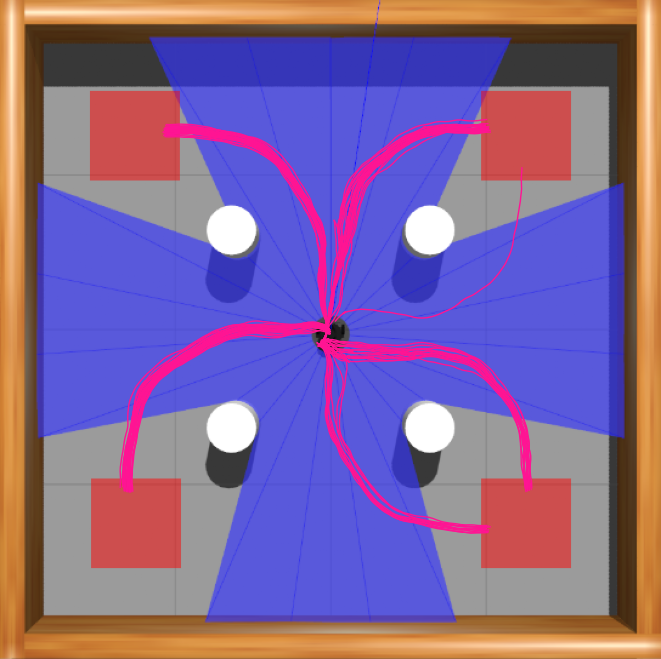

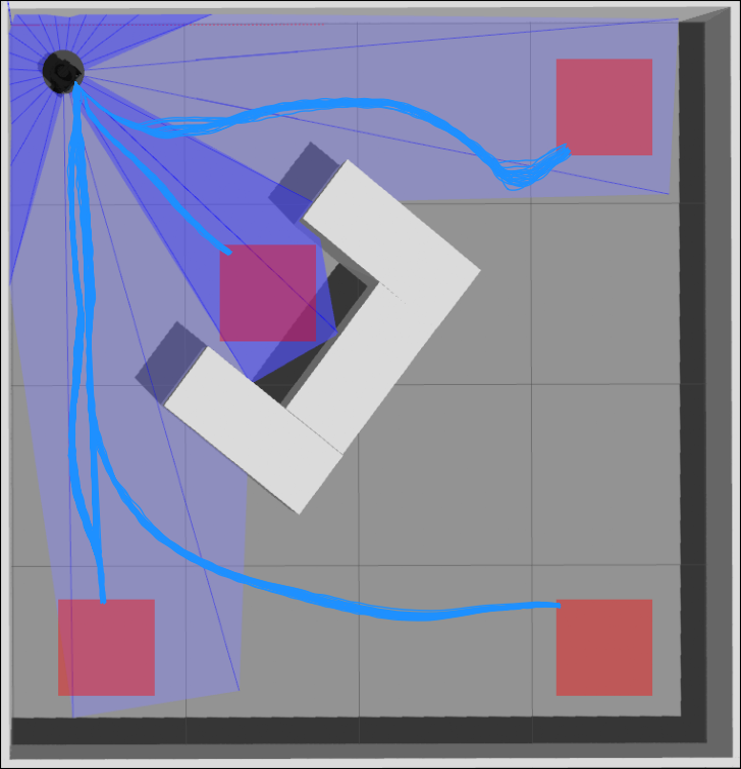

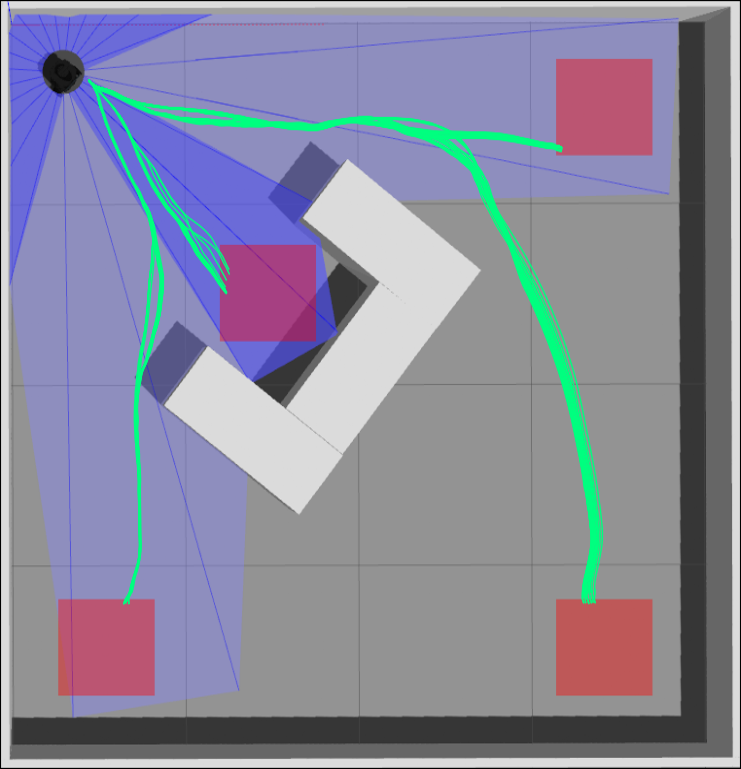

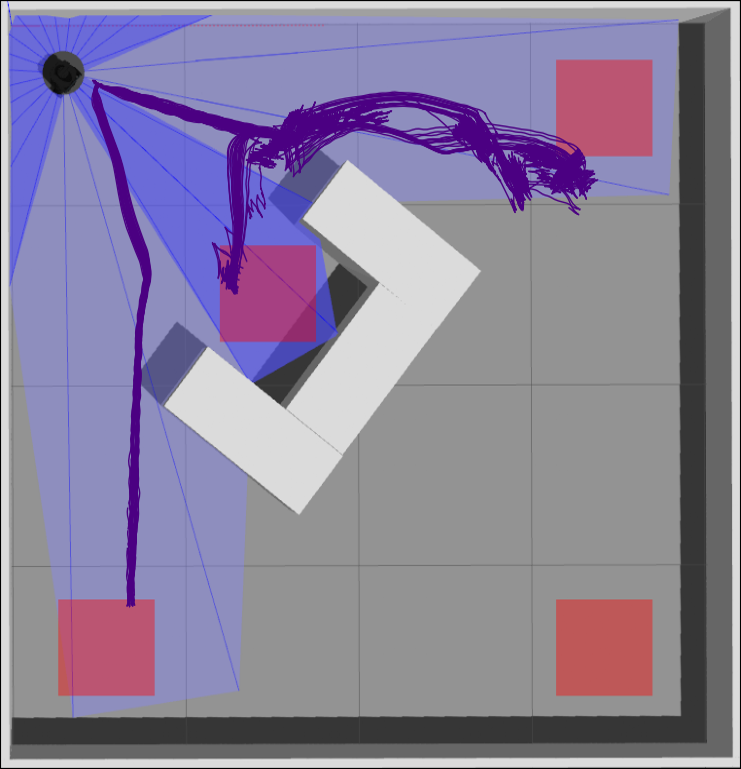

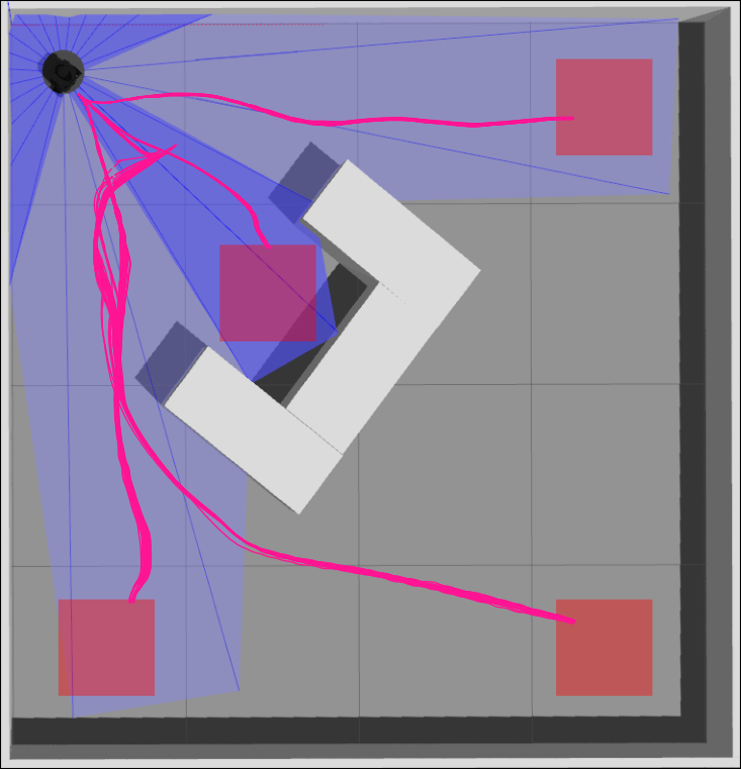

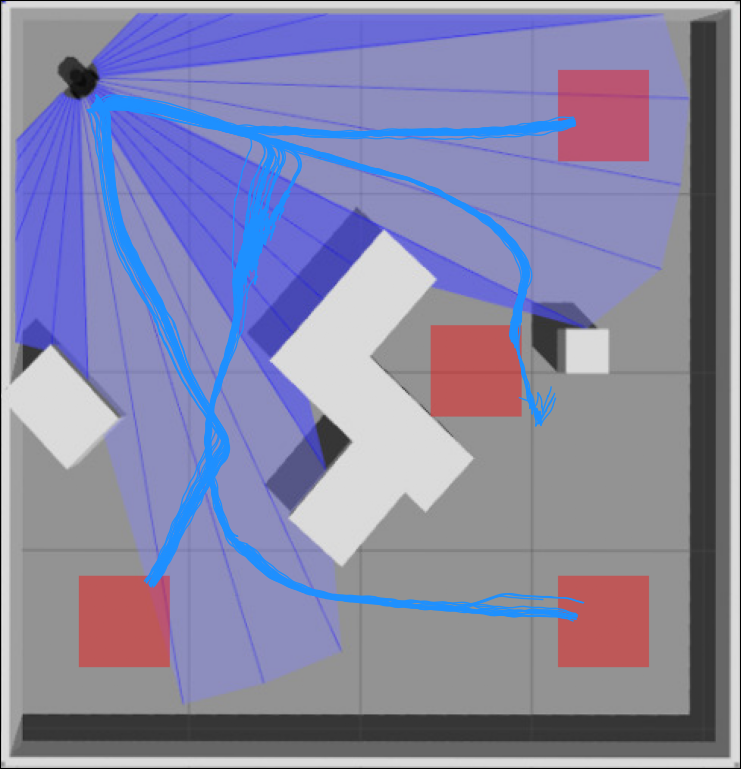

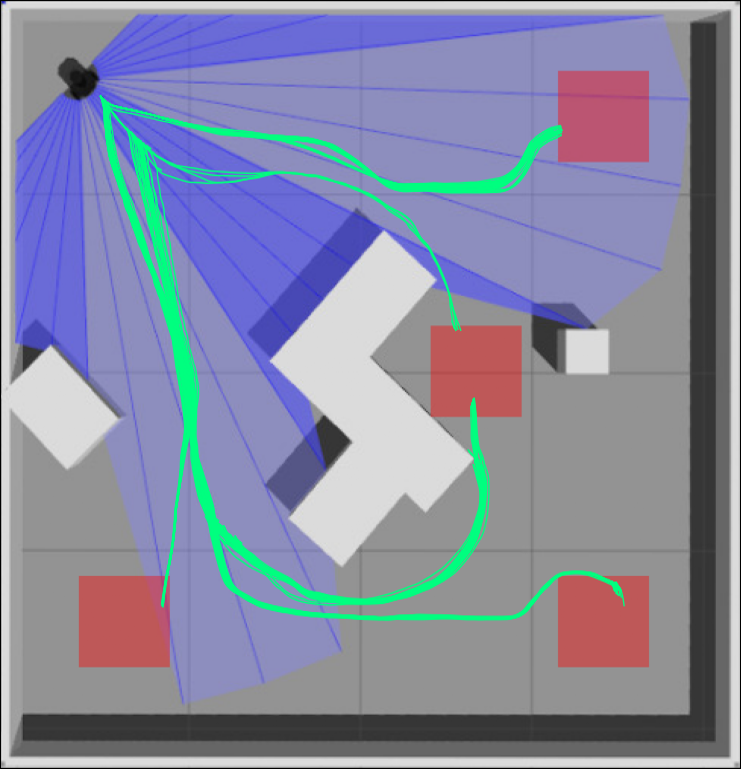

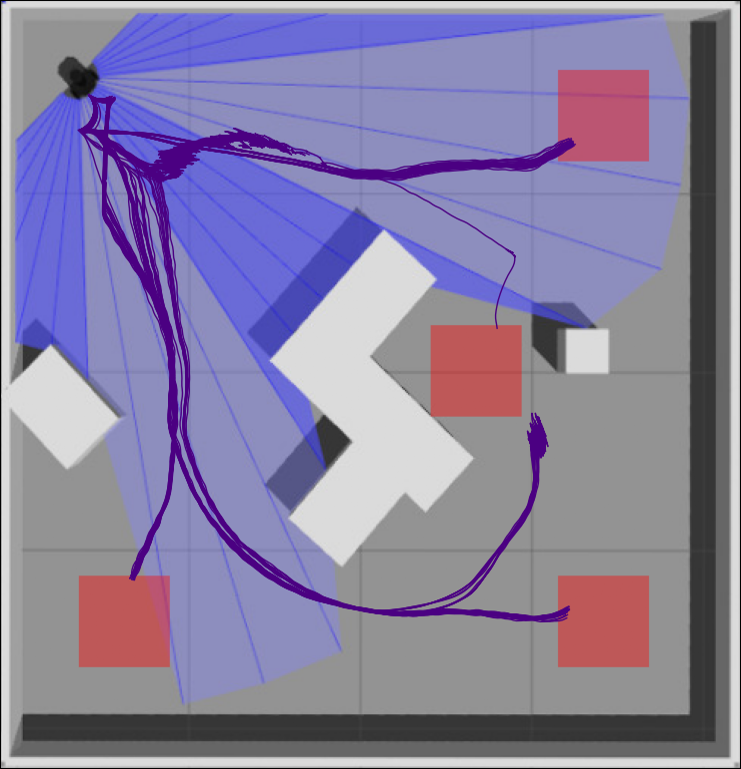

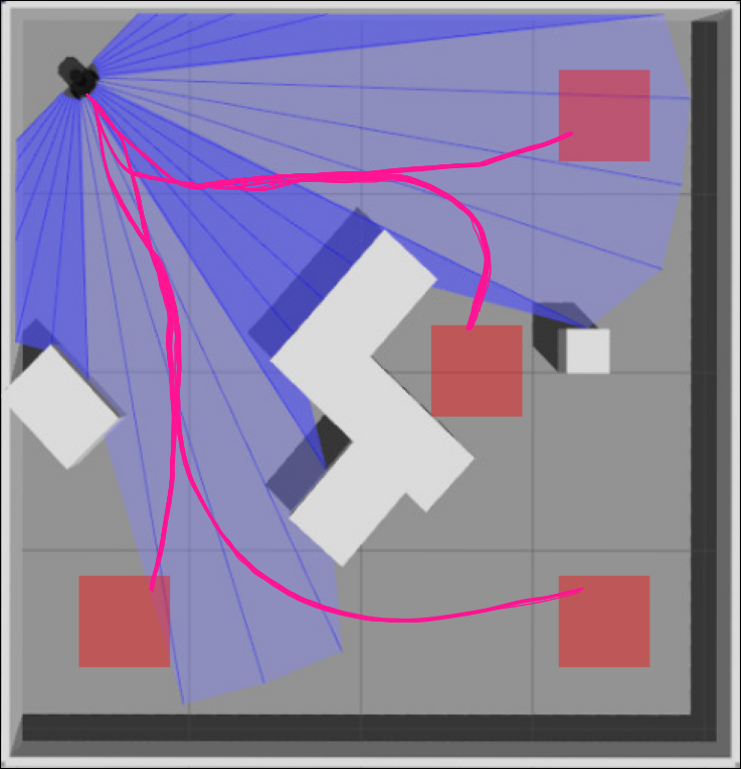

In this repository, we present a study of deep reinforcement learning techniques that use parallel distributional actor-critic networks to navigate terrestrial mobile robots. Our approaches take as inputs only a few laser range readings and the relative position and angle of the mobile robot to the target, and use them to make the robot reach the desired goal in an environment. We used a sim-to-real development structure, in which agents trained in the Gazebo simulator were deployed in real scenarios to further strengthen the evaluation. Based on the results gathered, we conclude that parallel distributional deep reinforcement learning algorithms with continuous actions are effective for the decision-making of a terrestrial robotic vehicle and outperform non-parallel-distributional approaches in training time and navigation capability.

All requirements are shown in the badges above. To install all of them, enter the repository and run the following command:

    pip3 install -r requirements.txt

Before we can train our agent, we need to configure the config.yaml file. Some parameters may seem a bit ambiguous, so make sure the correct Comet token key is set, or disable Comet instead. You also need to choose an algorithm for training: either PDDRL or PDSRL.
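As a rough illustration of the two checks described above, the sketch below validates a configuration dictionary before training starts. The key names `algorithm`, `use_comet`, and `comet_token` are hypothetical and chosen only for this example; the actual config.yaml in the repository may use different names and structure.

```python
# Hypothetical configuration keys; the real config.yaml may name them differently.
VALID_ALGORITHMS = {"PDDRL", "PDSRL"}


def check_config(config):
    """Return a list of human-readable problems found in a config dict."""
    problems = []
    algorithm = config.get("algorithm")
    if algorithm not in VALID_ALGORITHMS:
        problems.append(
            f"algorithm must be one of {sorted(VALID_ALGORITHMS)}, got {algorithm!r}"
        )
    # Comet logging needs a token unless it is switched off.
    if config.get("use_comet", True) and not config.get("comet_token"):
        problems.append("comet_token is empty; set it or disable Comet logging")
    return problems


# Example: Comet disabled, so an empty token is acceptable.
example = {"algorithm": "PDDRL", "use_comet": False, "comet_token": ""}
print(check_config(example))  # prints []
```

A check like this fails early with a readable message instead of partway through a long training run.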

Once config.yaml is configured, we can train our agent. To do this, run the following command:

    python3 train.py

We strongly recommend using the package with the pre-configured Docker image. Don't forget to use the NVIDIA Container Toolkit to enable GPU usage within Docker. Inside the Docker image, use the following command to download and install the data from the training and evaluation repositories:

    zsh setup.sh

Note: depending on the speed of your internet connection, this can take a long time.

The actor networks of our proposed PDDRL and PDSRL approaches have three fully-connected hidden layers with 256 neurons each, connected through ReLU activations. The actions range between -1 and 1, so the hyperbolic tangent function (Tanh) is used as the output activation function. The outputs are then scaled to between -0.12 and 0.15 m/s for the linear velocity and between -0.1 and 0.1 rad/s for the angular velocity. The PDDRL and PDSRL network structure can be seen in the figure below. For both approaches, the critic network predicts the Q-value of the current state, while the actor network predicts the action for the current state.
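The actor structure described above can be sketched in plain Python as follows. This is a minimal illustration, not the repository's implementation: the state dimension of 12, the weight initialisation, and the linear mapping from [-1, 1] onto each velocity range are all assumptions made for the example.

```python
import math
import random

# Velocity limits quoted in the text above.
LINEAR_RANGE = (-0.12, 0.15)
ANGULAR_RANGE = (-0.1, 0.1)
HIDDEN_SIZE = 256  # neurons per hidden layer, from the text
NUM_HIDDEN = 3     # number of hidden layers, from the text


def scale_action(a, low, high):
    """Map a Tanh output a in [-1, 1] linearly onto [low, high] (assumed mapping)."""
    return low + (a + 1.0) * 0.5 * (high - low)


def init_layer(n_in, n_out, rng):
    """Random weights and zero biases; the initialisation scheme is an assumption."""
    bound = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, layer):
    """Apply one fully-connected layer: W x + b."""
    weights, biases = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]


def build_actor(state_dim, rng):
    """Three hidden layers of 256 units followed by a 2-unit output layer."""
    sizes = [state_dim] + [HIDDEN_SIZE] * NUM_HIDDEN + [2]
    return [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]


def actor_forward(actor, state):
    """Return (linear, angular) velocity commands for one state vector."""
    x = list(state)
    for layer in actor[:-1]:
        x = [max(0.0, v) for v in dense(x, layer)]      # ReLU on hidden layers
    raw = [math.tanh(v) for v in dense(x, actor[-1])]   # Tanh output in [-1, 1]
    linear = scale_action(raw[0], *LINEAR_RANGE)
    angular = scale_action(raw[1], *ANGULAR_RANGE)
    return linear, angular


rng = random.Random(0)
actor = build_actor(state_dim=12, rng=rng)  # 12 is a placeholder state size
state = [rng.uniform(0.0, 1.0) for _ in range(12)]
linear, angular = actor_forward(actor, state)
print(linear, angular)
```

Because the linear-velocity range is asymmetric, a raw output of 0 maps to 0.015 rather than to a standstill under this linear mapping; a real implementation may handle the scaling differently.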

See more about the results in the YouTube video (will be available soon).

If you liked this repository, please don't forget to star it!