Liangliang Yao, Changhong Fu*, Haobo Zuo, Yiheng Wang, Geng Lu

\* Corresponding author.

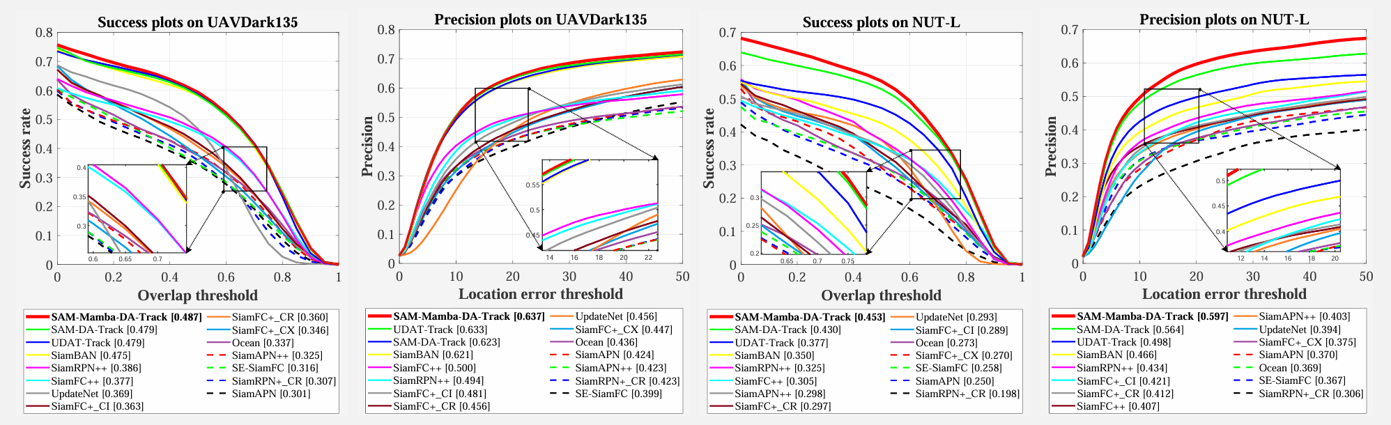

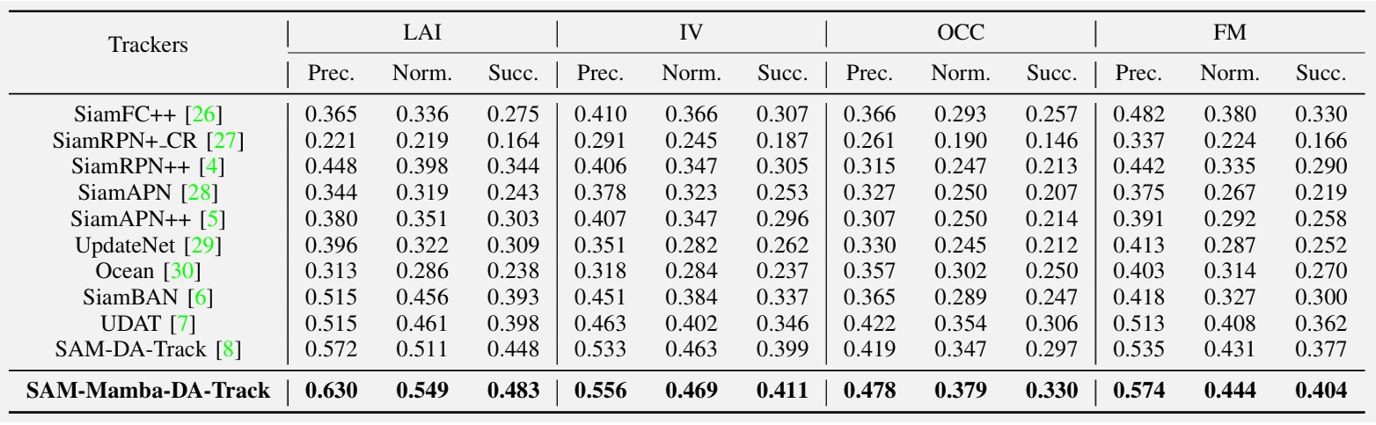

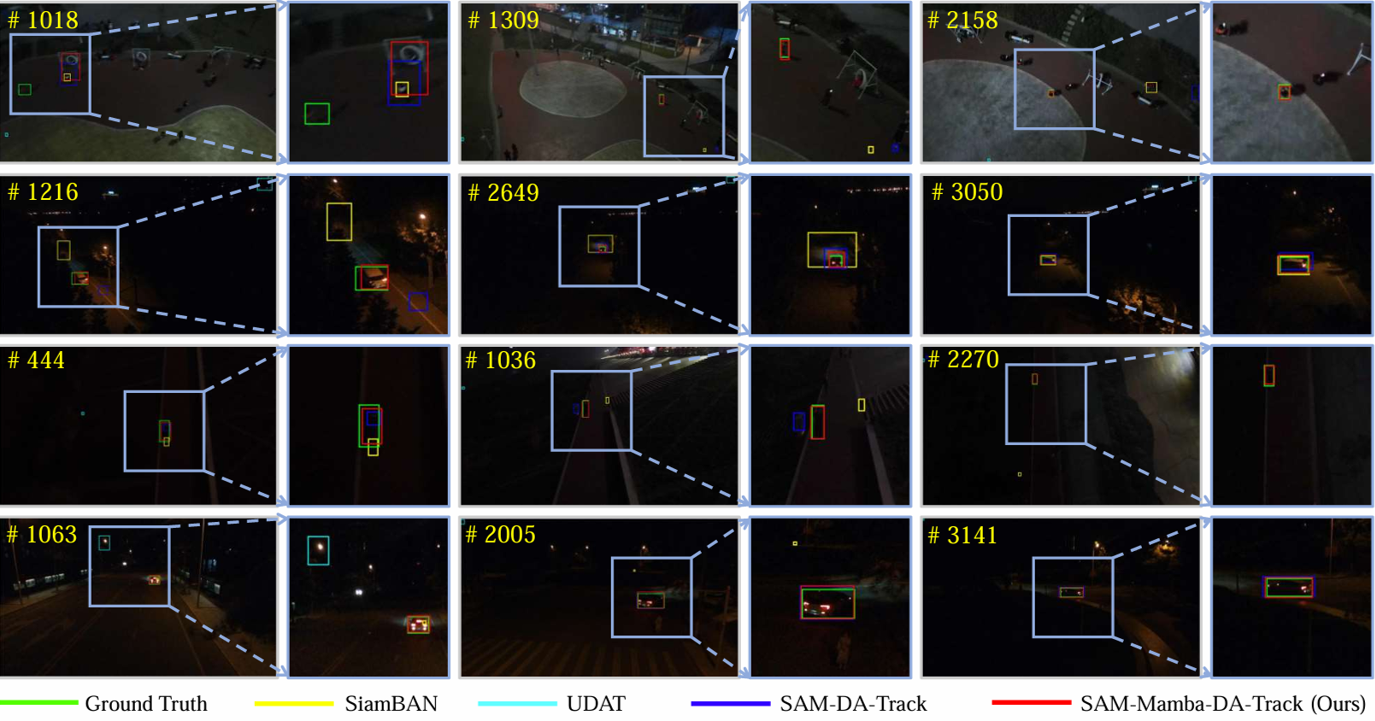

Comparison of tracking results


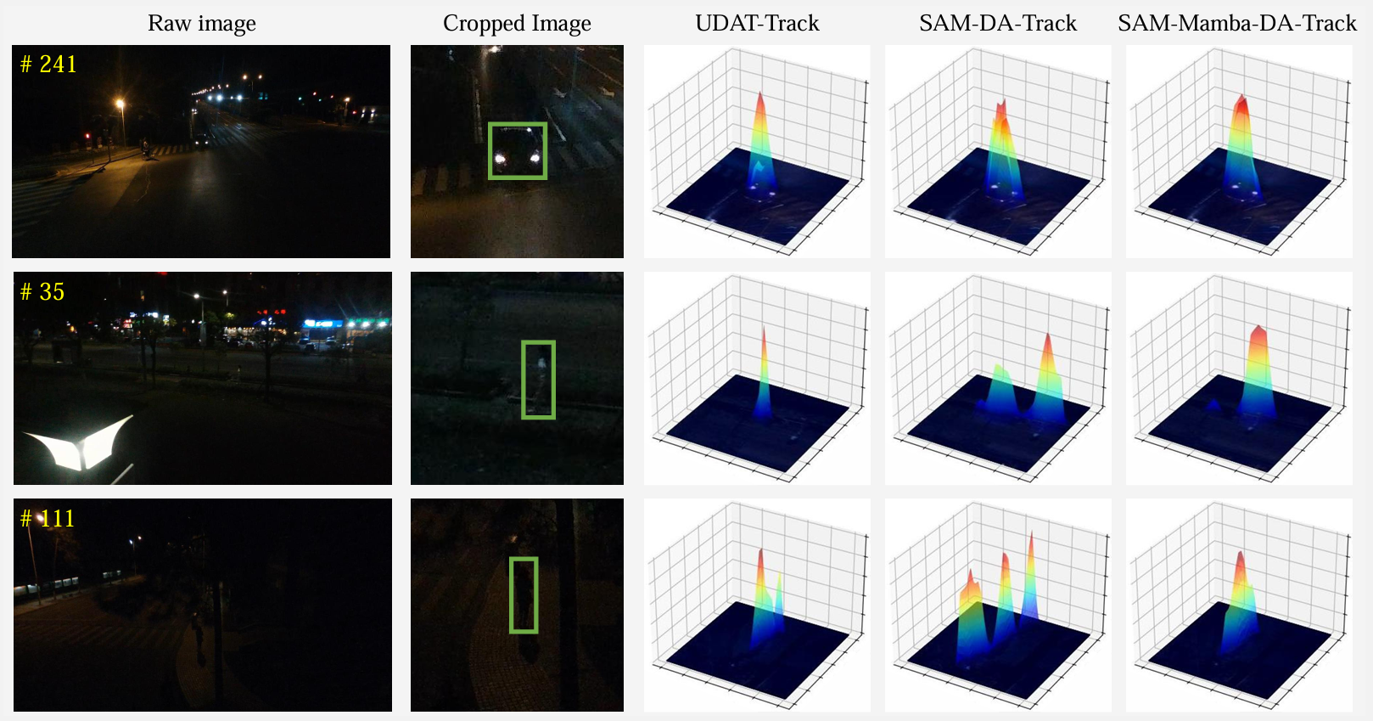

Comparison of heatmap


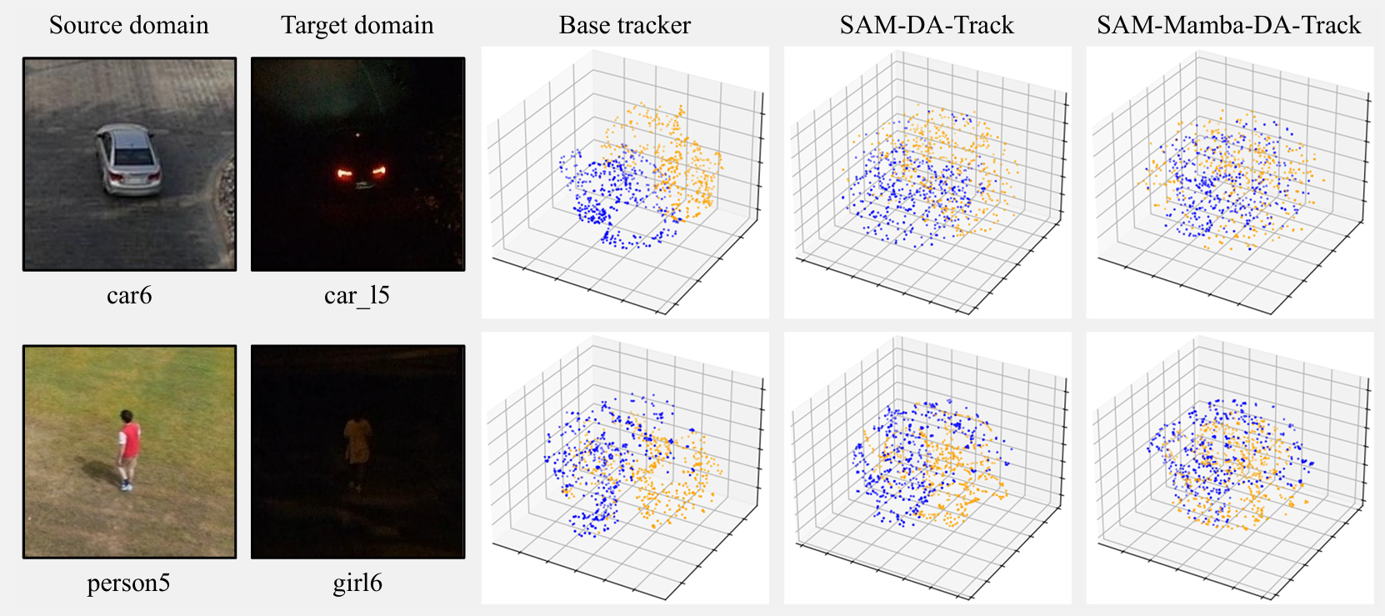

Feature visualization by t-SNE

This code has been tested on Ubuntu 18.04.6 LTS, Python 3.9.21, PyTorch 2.5.1, and CUDA 12.0. Please install the related libraries before running this code:

Install SAM-DA-Track:

    pip install -r requirements.txt
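After installation, a quick check along the following lines may help confirm that the interpreter and PyTorch build roughly match the tested configuration listed above. This is only a sketch: the helper names `parse_major_minor` and `report_environment` are made up for illustration and are not part of this repository.

```python
import sys

# Versions reported as tested in this README (major, minor only).
TESTED_PYTHON = (3, 9)
TESTED_TORCH = (2, 5)


def parse_major_minor(version):
    """Return (major, minor) from a version string such as '2.5.1+cu121'."""
    parts = version.split("+")[0].split(".")
    return int(parts[0]), int(parts[1])


def report_environment():
    """Collect interpreter and, if installed, PyTorch/CUDA information."""
    info = {
        "python": "{}.{}".format(*sys.version_info[:2]),
        "torch": None,
        "cuda": None,
        "cuda_available": False,
    }
    try:
        import torch
    except ImportError:
        # PyTorch is not installed; report the interpreter only.
        return info
    info["torch"] = torch.__version__
    info["cuda"] = torch.version.cuda  # None for CPU-only builds
    info["cuda_available"] = torch.cuda.is_available()
    return info


if __name__ == "__main__":
    info = report_environment()
    print(info)
    if sys.version_info[:2] != TESTED_PYTHON:
        print("Note: this README reports testing with Python 3.9.")
    if info["torch"] and parse_major_minor(info["torch"]) != TESTED_TORCH:
        print("Note: this README reports testing with PyTorch 2.5.")
```

A mismatch does not necessarily mean the code will fail; it only flags a difference from the tested setup.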

Download a model checkpoint below and put it in `./snapshot`.

| Model | Source 1 | Source 2 |
| --- | --- | --- |
| SAM-Mamba-DA | Baidu | Dropbox (coming soon) |

Download the NUT-L and UAVDark135 datasets.

| Dataset | Source 1 | Source 2 |
| --- | --- | --- |
| UAVDark135 | Baidu | Dropbox (coming soon) |
| NUT-L | Baidu | Dropbox (coming soon) |

Put these datasets in `./test_dataset`.
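Before running anything, it can be useful to confirm that the checkpoint and dataset folders are where the scripts are likely to look for them. The sketch below checks only for directories. The sub-folder names `NUT-L` and `UAVDark135` under `test_dataset` are an assumption for illustration and may differ from the layout of the downloaded archives; `missing_paths` is a hypothetical helper, not part of this repository.

```python
from pathlib import Path

# Directory names taken from this README.
SNAPSHOT_DIR = "snapshot"
DATASET_DIR = "test_dataset"
# Assumed sub-folder names; adjust to match the extracted archives.
ASSUMED_DATASETS = ("NUT-L", "UAVDark135")


def missing_paths(root="."):
    """Return the expected directories that do not exist under root."""
    root = Path(root)
    expected = [root / SNAPSHOT_DIR]
    expected += [root / DATASET_DIR / name for name in ASSUMED_DATASETS]
    return [str(path) for path in expected if not path.is_dir()]


if __name__ == "__main__":
    missing = missing_paths(".")
    if missing:
        print("Missing directories:")
        for path in missing:
            print("  " + path)
    else:
        print("Expected directories found.")
```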

Test and evaluate on NUT-L with default settings.

    conda activate <your env>
    export PYTHONPATH=$(pwd)
    python tools/test.py
    python tools/eval.py
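The test and evaluation commands can also be chained from Python, which stops the sequence if the first step fails. The following is a minimal sketch under stated assumptions: `build_env` and `run_scripts` are hypothetical helpers, not part of this repository, and the sketch prepends the repository root to any existing `PYTHONPATH` rather than overwriting it as the `export` line does.

```python
import os
import subprocess
import sys
from pathlib import Path


def build_env(repo_root):
    """Copy the current environment and put repo_root first on PYTHONPATH."""
    env = os.environ.copy()
    existing = env.get("PYTHONPATH")
    root = str(repo_root)
    env["PYTHONPATH"] = root if not existing else root + os.pathsep + existing
    return env


def run_scripts(repo_root, scripts=("tools/test.py", "tools/eval.py")):
    """Run each script in order from repo_root using the current interpreter."""
    repo_root = Path(repo_root).resolve()
    env = build_env(repo_root)
    for script in scripts:
        # check=True raises CalledProcessError, so later steps are skipped
        # when an earlier one exits with a non-zero status.
        subprocess.run([sys.executable, script], cwd=repo_root, env=env, check=True)
```

Calling `run_scripts(".")` from the repository root, inside the activated conda environment, runs the test script followed by the evaluation script with their default settings.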

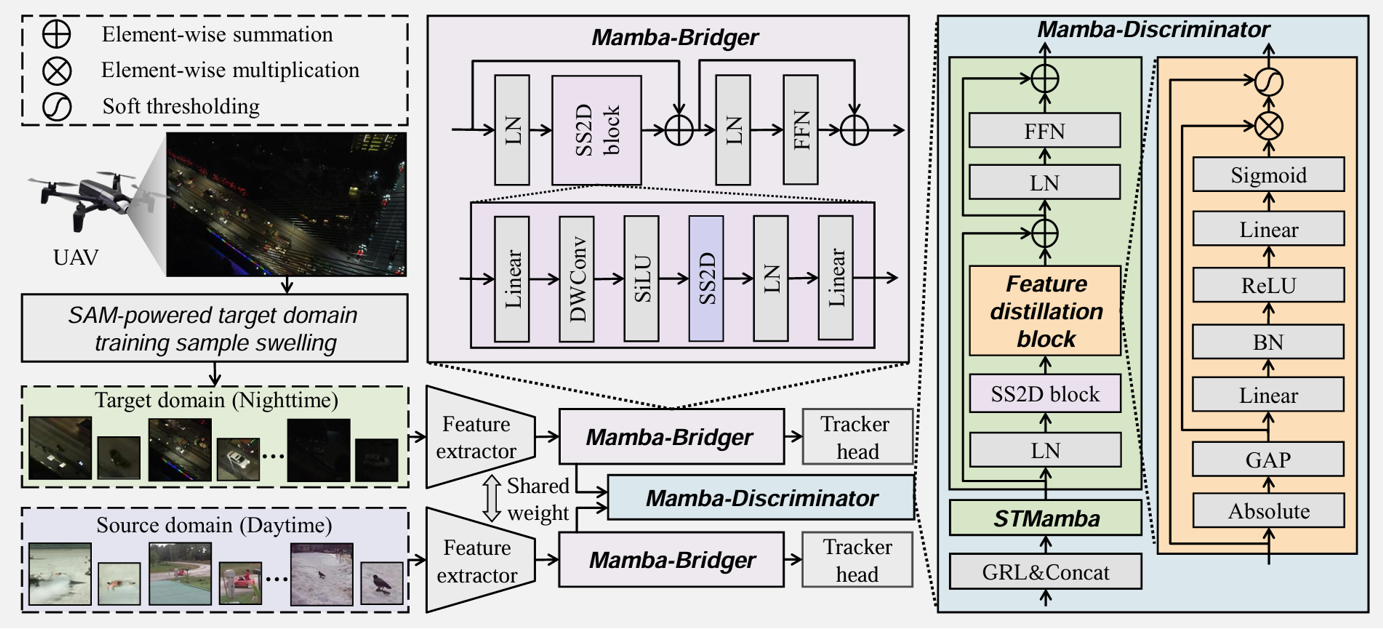

SAM-powered target domain training sample swelling on NAT2021-train.

Please refer to SAM-DA for preparing the nighttime training dataset.


Prepare the daytime datasets [VID] and [GOT-10K].

Train `sam-da-track-b` (default) and other models.

    conda activate <your env>
    export PYTHONPATH=$(pwd)
    python tools/train.py

The model is licensed under the Apache License 2.0.

Please consider citing the related paper(s) in your publications if it helps your research.

@Inproceedings{Yao2023SAMDA,

title={{SAM-DA: UAV Tracks Anything at Night with SAM-Powered Domain Adaptation}},

author={Fu, Changhong and Yao, Liangliang and Zuo, Haobo and Zheng, Guangze and Pan, Jia},

booktitle={Proceedings of the IEEE International Conference on Advanced Robotics and Mechatronics (ICARM)},

year={2024},

pages={1-8}

}

@article{kirillov2023segment,

title={{Segment Anything}},

author={Kirillov, Alexander and Mintun, Eric and Ravi, Nikhila and Mao, Hanzi and Rolland, Chloe and Gustafson, Laura and Xiao, Tete and Whitehead, Spencer and Berg, Alexander C and Lo, Wan-Yen and others},

journal={arXiv preprint arXiv:2304.02643},

year={2023},

pages={1-30}

}

@Inproceedings{Ye2022CVPR,

title={{Unsupervised Domain Adaptation for Nighttime Aerial Tracking}},

author={Ye, Junjie and Fu, Changhong and Zheng, Guangze and Paudel, Danda Pani and Chen, Guang},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2022},

pages={1-10}

}

We sincerely appreciate the contributions of the following repos: SAM, SiamBAN, UDAT, and SAM-DA.

If you have any questions, please contact Liangliang Yao at 1951018@tongji.edu.cn or Changhong Fu at changhongfu@tongji.edu.cn.