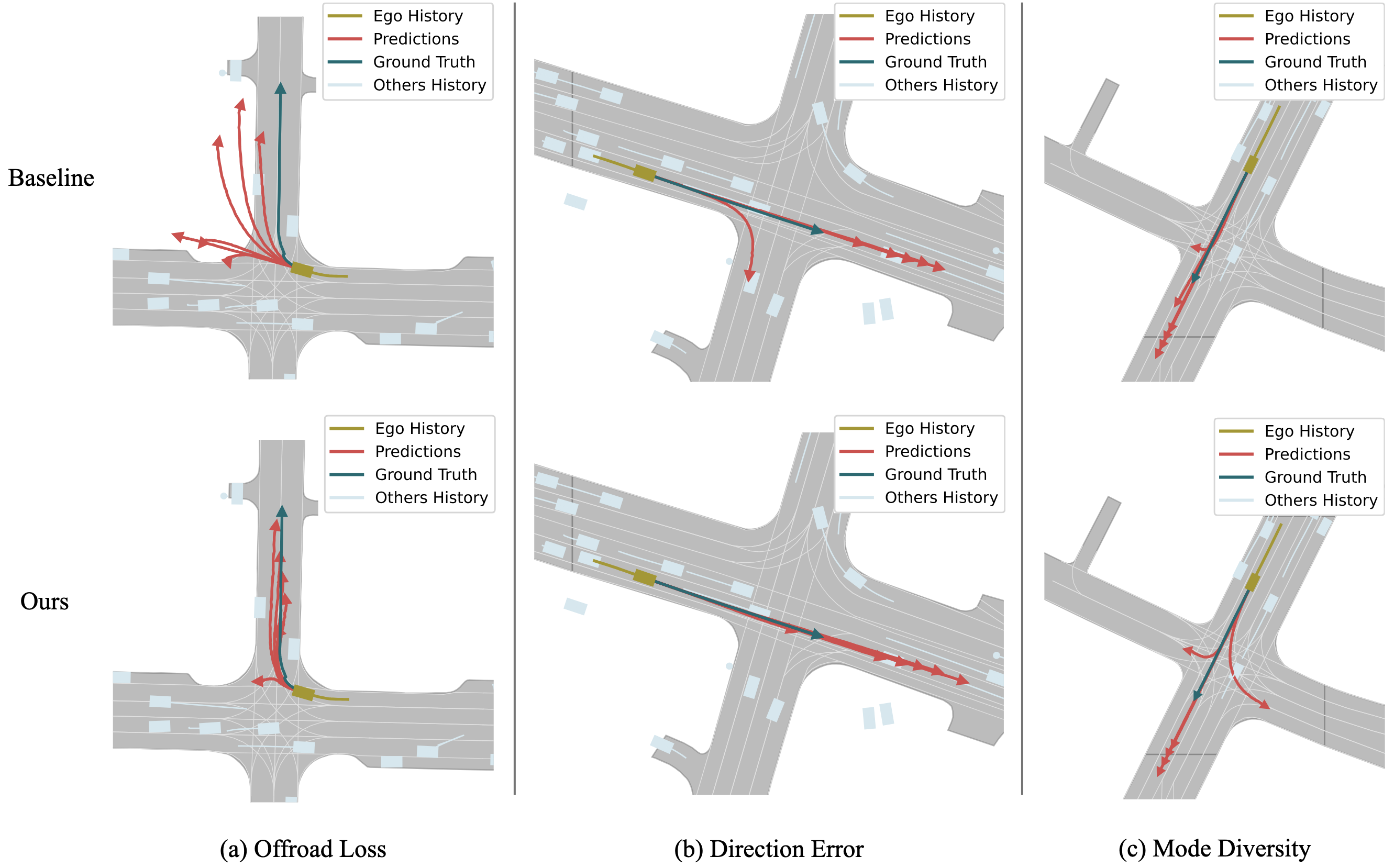

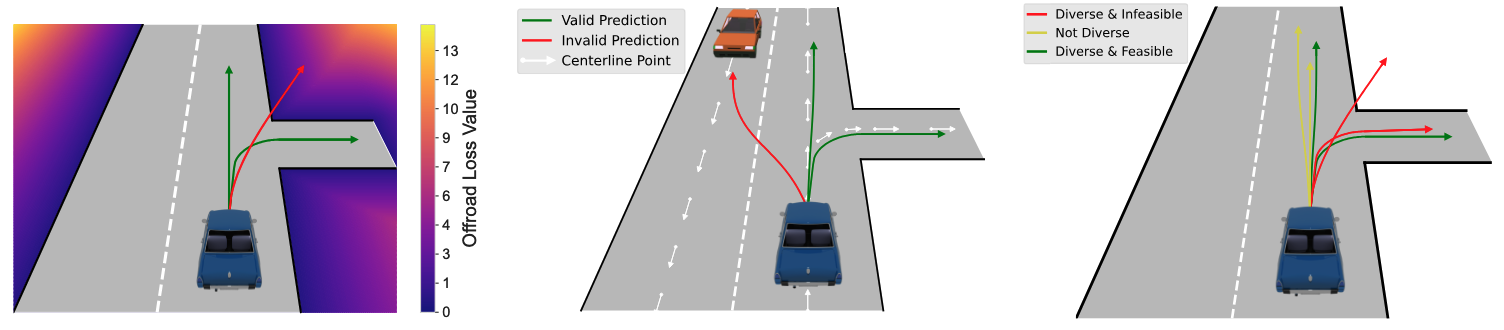

Highlights: predictions inside drivable area, following traffic flow, and more diverse.

An overview of the introduced loss functions.


[2024-10-1]: Stay on Track's code is now available! 🚀

Our code is based on UniTraj, a unified framework for scalable vehicle trajectory prediction. Please refer to the UniTraj repository for installation instructions.

In addition to the UniTraj dependencies, you need to install the Argoverse 2 API and the nuScenes devkit to run the code. Some of our loss functions rely on data that these packages provide. In the future, we will integrate the required data into the UniTraj repository.

To train a model with our loss functions, run the `train.py` script.

You can specify which auxiliary loss function to use and assign weights to the original and auxiliary losses.

For example, to train a model with the Offroad loss at an auxiliary weight of 0.01, run the following command:

```bash
python3 train.py exp_name=autobot-offroad-ow1-aw0.3 \
    method=autobot \
    aux_loss_type=offroad \
    original_loss_weight=1 \
    aux_loss_weight=0.01
```

By changing `method` and `aux_loss_type`, you can reproduce our results with different settings. The table below shows the weights we used for training models in our experiments, where the first and second numbers are the original and auxiliary loss weights, respectively.

| Dataset | Method | Offroad | Direction Consistency | Diversity |
|---|---|---|---|---|
| nuScenes | AutoBot | (0.01, 1) | (0.01, 1) | (1, 10) |
| Argoverse 2 | AutoBot | (0.003, 1) | (0.003, 1) | (1, 3) |
| nuScenes | Wayformer | (0.03, 1) | (0.3, 1) | (1, 0.5) |
| Argoverse 2 | Wayformer | (1, 1) | (1, 1) | (1, 1) |
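As a rough illustration of what the two weights control, here is a minimal sketch that assumes the original and auxiliary losses are combined as a plain weighted sum. The README does not spell out the exact combination rule, so the function `total_loss` and the loss values below are hypothetical and not taken from the repository.

```python
def total_loss(original_loss, aux_loss,
               original_loss_weight=1.0, aux_loss_weight=0.01):
    """Assumed combination rule: a weighted sum of the two loss terms."""
    return original_loss_weight * original_loss + aux_loss_weight * aux_loss


# Made-up loss values for illustration only (not results from the paper).
combined = total_loss(original_loss=2.0, aux_loss=50.0)
print(f"combined loss: {combined:.3f}")
```

Under this assumption, only the ratio between the two weights changes the direction of the gradient, while their overall scale acts like a multiplier on the learning rate.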

To train a model with multiple auxiliary losses, set `aux_loss_type` to `combination` and use `offroad_loss_weight`, `consistency_loss_weight`, and `diversity_loss_weight` to assign a weight to each loss function.

For example, to train a model with all three auxiliary losses, run the following command:

```bash
python3 train.py exp_name=autobot-nuscenes-combination-ow1.0-or333-cn100-dv6 \
    method=autobot \
    aux_loss_type=combination \
    original_loss_weight=1 \
    aux_loss_weight=1 \
    offroad_loss_weight=333 \
    consistency_loss_weight=100 \
    diversity_loss_weight=6
```

The table below shows the weights we used for training combination models in our experiments.

| Dataset | Method | Offroad | Direction Consistency | Diversity |
|---|---|---|---|---|
| nuScenes | AutoBot | 333 | 100 | 6 |
| Argoverse 2 | AutoBot | 3333 | 333 | 3 |
| nuScenes | Wayformer | 33 | 33 | 1.5 |
| Argoverse 2 | Wayformer | 333 | 33 | 1 |
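When launching several runs with different weights, it can help to assemble the `train.py` overrides programmatically. The sketch below only builds and prints the command; the helper `build_train_command` is hypothetical and not part of the repository, and it uses only the override names that appear in this README.

```python
import shlex


def build_train_command(exp_name, method, aux_loss_type, **overrides):
    """Return the train.py invocation as an argument list (nothing is executed)."""
    args = [
        "python3", "train.py",
        f"exp_name={exp_name}",
        f"method={method}",
        f"aux_loss_type={aux_loss_type}",
    ]
    # Each keyword argument becomes one key=value override, in the order given.
    args += [f"{key}={value}" for key, value in overrides.items()]
    return args


# Weights from the nuScenes / AutoBot row of the combination table.
cmd = build_train_command(
    "autobot-nuscenes-combination-ow1.0-or333-cn100-dv6",
    "autobot",
    "combination",
    original_loss_weight=1,
    aux_loss_weight=1,
    offroad_loss_weight=333,
    consistency_loss_weight=100,
    diversity_loss_weight=6,
)
print(shlex.join(cmd))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the directory that contains `train.py`.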

Finally, note that we do not train models with the diversity and combination losses from scratch. Instead, we fine-tune them from baseline models trained with the original loss for 20 epochs.

Use the `finetune` flag to fine-tune a model with the specified loss functions, as shown below:

```bash
python3 train.py exp_name=autobot-nuscenes-combination-ow1.0-or333-cn100-dv6 \
    method=autobot \
    aux_loss_type=diversity \
    original_loss_weight=1 \
    aux_loss_weight=10 \
    finetune=True \
    ckpt_path=autobot-nuscenes-baseline.ckpt
```

All our loss functions are evaluated in the validation steps during training and logged in WandB.

However, if you need scene-level evaluation, you can use the `evaluation.py` script. When the `save_predictions` flag is set to True, the code saves the scene names, the models' predictions, and the per-scene metrics in a pickle file in the `preds_and_losses` directory.
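The saved files can be inspected with Python's standard `pickle` module. The sketch below is an assumption-laden helper, not part of the repository: the `*.pkl` file pattern and the function names are hypothetical, and because the internal layout of the saved objects is not documented here, it only reports the top-level type of each file.

```python
import pickle
from pathlib import Path


def load_saved_results(directory="preds_and_losses", pattern="*.pkl"):
    """Load every pickle file matching `pattern` (assumed extension) in `directory`."""
    results = {}
    for path in sorted(Path(directory).glob(pattern)):
        with path.open("rb") as handle:
            results[path.name] = pickle.load(handle)
    return results


def describe(obj):
    """Summarize the top-level structure of a loaded object."""
    if isinstance(obj, dict):
        return f"dict with keys {sorted(map(str, obj))}"
    if isinstance(obj, (list, tuple)):
        return f"{type(obj).__name__} of length {len(obj)}"
    return type(obj).__name__


if __name__ == "__main__":
    for name, obj in load_saved_results().items():
        print(name, "->", describe(obj))
```

Unpickling executes code embedded in the file, so only load pickle files that you produced yourself or otherwise trust.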

We have also implemented the scene attack benchmark in UniTraj to evaluate the robustness of models trained with our loss functions.

You can use the `evaluate_scene_attack.py` script for each of the 12 models in Table 2 of our paper.

Each model is evaluated on three different attacks with different attack powers, and the offroad metrics are saved in pickle files.

After all 12 models are evaluated, you can use the `create_table2` function in the `evaluate_scene_attack.py` script to create Table 2 of our paper.

If you find our work useful in your research, please consider citing our paper:

Coming soon!