This is an official implementation for the paper

TTT++: When Does Self-supervised Test-time Training Fail or Thrive? @ NeurIPS 2021

Yuejiang Liu, Parth Kothari, Bastien van Delft, Baptiste Bellot-Gurlet, Taylor Mordan, Alexandre Alahi

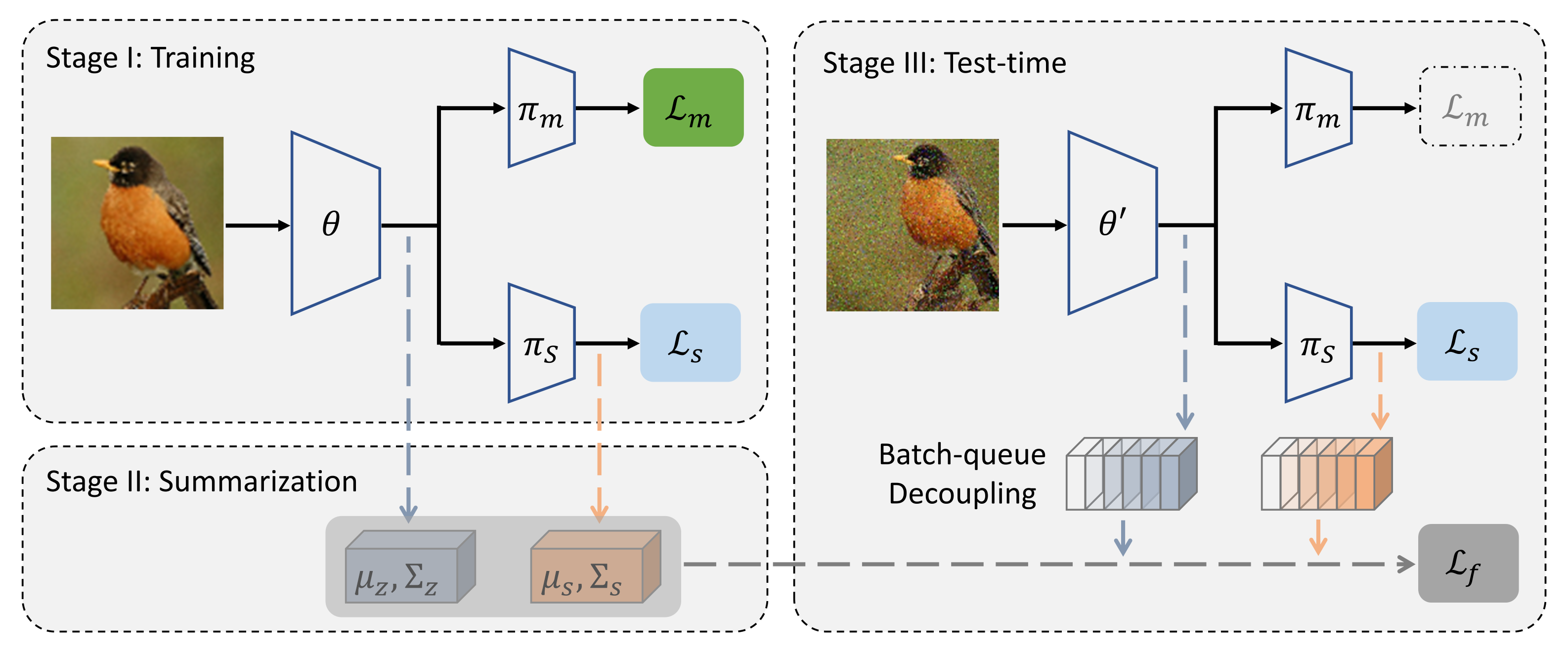

TL;DR: Online Feature Alignment + Strong Self-supervised Learner 🡲 Robust Test-time Adaptation

- Results
  - reveal limitations and promise of TTT, with evidence through synthetic simulations
  - our proposed TTT++ yields state-of-the-art results on visual robustness benchmarks
- Takeaways
  - both task-specific (e.g. related SSL) and model-specific (e.g. feature moments) info are crucial
  - need to rethink what (and how) to store, in addition to model parameters, for robust deployment
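To make the "feature moments" takeaway concrete, here is a minimal sketch of a moment-alignment loss. It is not the code from this repository. The function names `feature_moments` and `alignment_loss` are hypothetical, and the loss form (squared distance between means plus squared Frobenius distance between covariances) is an assumption made for illustration. The online estimation of test-time statistics mentioned in the TL;DR is left out for brevity.

```python
# Illustrative sketch only: names and loss form are assumptions, not the repository's API.
from typing import List, Tuple

Matrix = List[List[float]]


def feature_moments(features: Matrix) -> Tuple[List[float], Matrix]:
    """Return the mean vector and (biased) covariance matrix of a feature batch."""
    n = len(features)
    d = len(features[0])
    mean = [sum(row[j] for row in features) / n for j in range(d)]
    cov = [
        [
            sum((row[i] - mean[i]) * (row[j] - mean[j]) for row in features) / n
            for j in range(d)
        ]
        for i in range(d)
    ]
    return mean, cov


def alignment_loss(source_mean: List[float], source_cov: Matrix,
                   target_features: Matrix) -> float:
    """Squared distance between stored source moments and test-batch moments."""
    t_mean, t_cov = feature_moments(target_features)
    mean_term = sum((a - b) ** 2 for a, b in zip(source_mean, t_mean))
    cov_term = sum(
        (a - b) ** 2
        for row_s, row_t in zip(source_cov, t_cov)
        for a, b in zip(row_s, row_t)
    )
    return mean_term + cov_term


# Moments are computed once on source features and stored alongside the model.
source_features = [[0.0, 0.0], [2.0, 2.0]]
mu, sigma = feature_moments(source_features)

# A test batch shifted by +1 along the first feature dimension.
shifted_features = [[1.0, 0.0], [3.0, 2.0]]
loss_same = alignment_loss(mu, sigma, source_features)
loss_shifted = alignment_loss(mu, sigma, shifted_features)
```

In this toy case the loss is zero when the test batch matches the source features and grows with the shift in the mean. Only `mu` and `sigma` need to be kept from training, which is one reading of "what to store, in addition to model parameters".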

Code for the synthetic simulations is in the synthetic folder, and code for the CIFAR experiments is in the cifar folder.

If you find this code useful for your research, please cite our paper:

@inproceedings{liu2021ttt++,

title={TTT++: When Does Self-Supervised Test-Time Training Fail or Thrive?},

author={Liu, Yuejiang and Kothari, Parth and van Delft, Bastien Germain and Bellot-Gurlet, Baptiste and Mordan, Taylor and Alahi, Alexandre},

booktitle={Thirty-Fifth Conference on Neural Information Processing Systems},

year={2021}

}

Contact: yuejiang [dot] liu [at] epfl [dot] ch