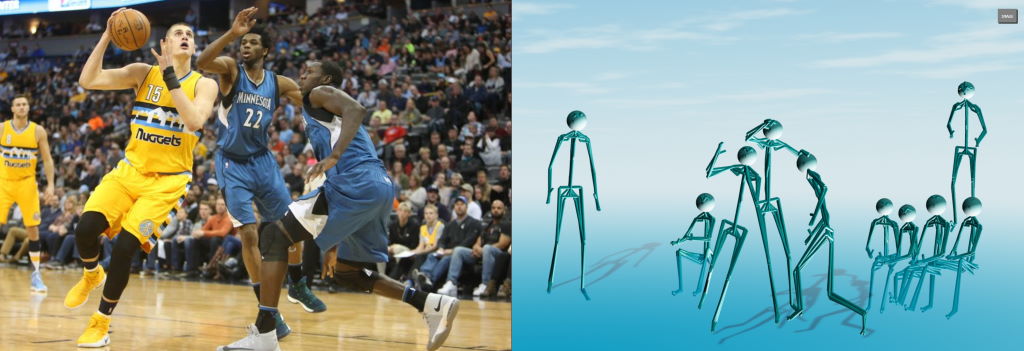

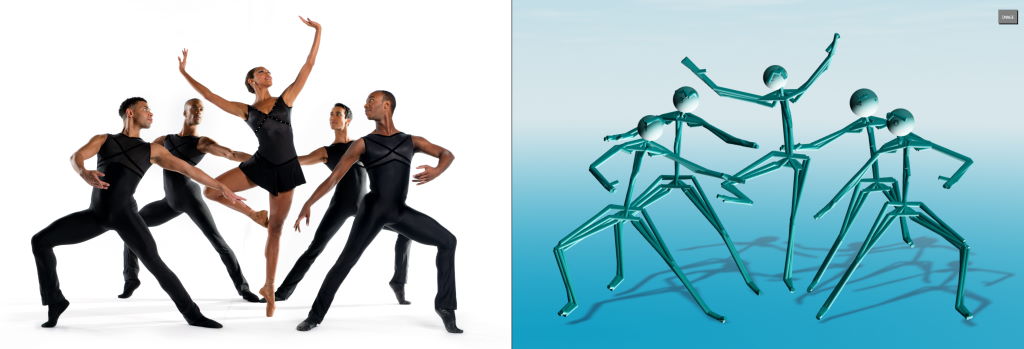

Live Demo

Note: The live demo uses pre-rendered data from sample images and videos.

This solution has two parts:

- Image or video processing using Python and the TensorFlow framework

- 3D visualization using JavaScript and BabylonJS

TensorFlow with CUDA for GPU acceleration

Note that the models used here are state-of-the-art and computationally intensive, so they require a GPU with sufficient memory:

- Tiny (using MobileNetV3 backbone with YOLOv4-Tiny detector) => 2GB

- Small (using EfficientNetV2 backbone with YOLOv4 detector) => 4GB (6GB recommended)

- Large (using EfficientNetV2 backbone with YOLOv4 detector) => 8GB (10GB recommended)

- Large 360 (same as large but tuned for occluded body parts) => 8GB (12GB recommended)

- Output Specs (e.g., JSON format used)

- Constants (e.g., skeleton definitions with joints and connected edges)
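The GPU memory figures listed for the model variants above can be turned into a small lookup for choosing a variant. This is a minimal sketch and not part of the project: the `MODELS` table and the `pick_model` helper are hypothetical names, and the numbers are copied from the list of variants. No recommended figure is given for Tiny, so the sketch reuses its minimum.

```python
from typing import Optional

# (name, minimum GPU memory in GB, recommended GPU memory in GB),
# ordered from most to least demanding. Values come from the model list;
# Tiny has no recommended figure, so its minimum is reused (assumption).
MODELS = [
    ("large 360", 8, 12),
    ("large", 8, 10),
    ("small", 4, 6),
    ("tiny", 2, 2),
]


def pick_model(gpu_memory_gb: float, use_recommended: bool = True) -> Optional[str]:
    """Return the most demanding variant that fits, or None if none fits."""
    for name, minimum, recommended in MODELS:
        required = recommended if use_recommended else minimum
        if gpu_memory_gb >= required:
            return name
    return None


print(pick_model(8))                          # small
print(pick_model(8, use_recommended=False))   # large 360
```

"Most demanding" is not the same as "best": Large 360 is tuned for occluded body parts, so the right choice also depends on the footage.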

process.py

arguments:

--help show this help message

--image image file

--video video file

--json write results to json file

--round round coordinates in json output

--minify minify json output

--verbose verbose logging

--model model used for predictions

--skipms skip time between frames in milliseconds

--plot plot output when processing image

--fov field-of-view in degrees

--batch process n detected people in parallel

--maxpeople limit processing to n people in the scene

--skeleton use specific skeleton definition standard

--augmentations how many variations of detection to run

--average average the results of augmentation variations

--suppress suppress implausible poses

--minconfidence minimum detection confidence

--iou iou threshold for overlaps
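For readers who want to script around these options, the flag list can be mirrored with `argparse`. This is a sketch under assumptions, not the actual `process.py` source: flag names come from the list above, default values are the ones printed in the sample `options:` log line, and the argument types (including modelling on/off flags as `0`/`1` integers) are guesses. The default for `--model` is not stated anywhere, so it is left as `None`.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # argparse adds --help automatically
    p = argparse.ArgumentParser(prog="process.py")
    p.add_argument("--image", type=str, default=None, help="image file")
    p.add_argument("--video", type=str, default=None, help="video file")
    p.add_argument("--json", type=str, default=None, help="write results to json file")
    p.add_argument("--round", type=int, default=1, help="round coordinates in json output")
    p.add_argument("--minify", type=int, default=1, help="minify json output")
    p.add_argument("--verbose", type=int, default=1, help="verbose logging")
    # Default model is unknown; None is a placeholder (assumption)
    p.add_argument("--model", type=str, default=None, help="model used for predictions")
    p.add_argument("--skipms", type=int, default=0, help="skip time between frames in milliseconds")
    p.add_argument("--plot", type=int, default=0, help="plot output when processing image")
    p.add_argument("--fov", type=float, default=55, help="field-of-view in degrees")
    p.add_argument("--batch", type=int, default=64, help="process n detected people in parallel")
    p.add_argument("--maxpeople", type=int, default=1, help="limit processing to n people in the scene")
    p.add_argument("--skeleton", type=str, default="", help="use specific skeleton definition standard")
    p.add_argument("--augmentations", type=int, default=1, help="how many variations of detection to run")
    p.add_argument("--average", type=int, default=1, help="average the augmentation variations")
    p.add_argument("--suppress", type=int, default=1, help="suppress implausible poses")
    p.add_argument("--minconfidence", type=float, default=0.1, help="minimum detection confidence")
    p.add_argument("--iou", type=float, default=0.7, help="iou threshold for overlaps")
    return p


# Parse the same arguments as the documented example invocation
args = build_parser().parse_args(
    ["--model", "models/tiny", "--video", "media/BaseballPitchSlowMo.webm", "--json", "output.json"]
)
print(args.model, args.fov, args.batch, args.minconfidence, args.iou)
```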

Using default model and processing parameters

./process.py --model models/tiny --video media/BaseballPitchSlowMo.webm --json output.json

options: image:null video:media/BaseballPitchSlowMo.webm json:output.json verbose:1 model:models/tiny skipms:0 plot:0 fov:55 batch:64 maxpeople:1 skeleton: augmentations:1 average:1 suppress:1 round:1 minify:1 minconfidence:0.1 iou:0.7

loaded tensorflow 2.7.0

loaded cuda 11.2

loaded model: models/tiny in 27.8sec

loaded video: media/BaseballPitchSlowMo.webm frames: 720 resolution: 1080 x 1080

processed video: 720 frames in 67.8sec

results written to: output.json
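The timing lines in this log give a rough throughput figure for the Tiny model on this clip. A minimal sketch of the arithmetic follows; the `throughput` helper is a hypothetical name, and the numbers are taken from the `processed video` line, so the 27.8 sec model load time is not included.

```python
from typing import Tuple


def throughput(frames: int, seconds: float) -> Tuple[float, float]:
    """Return (frames per second, milliseconds per frame)."""
    if frames <= 0 or seconds <= 0:
        raise ValueError("frames and seconds must be positive")
    return frames / seconds, 1000.0 * seconds / frames


# 720 frames processed in 67.8 sec, as reported in the log
fps, ms_per_frame = throughput(720, 67.8)
print(f"{fps:.1f} frames/sec, {ms_per_frame:.1f} ms/frame")  # 10.6 frames/sec, 94.2 ms/frame
```

These figures apply only to this run; the GPU used is not stated in the log, and larger models or more people per frame would change them.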

- All the source files are in /client

- Preprocessed JSON files and accompanying media files are in /media

Compile TypeScript to JavaScript and start the HTTP/HTTPS dev web server:

npm run dev

Start web browser and navigate to:

- Create process server to process data on demand

- Implement avatar using inverse kinematics

- Use animation instead of updating meshes