Must-read papers on knowledge editing for large language models.

- 2023-11-18 We will provide a tutorial on Knowledge Editing for Large Language Models at COLING 2024.

- 2023-10-25 We will provide a tutorial on Knowledge Editing for Large Language Models at AAAI 2024.

- 2023-10-22 Our paper "Can We Edit Multimodal Large Language Models?" has been accepted by EMNLP 2023.

- 2023-10-08 Our paper "Editing Large Language Models: Problems, Methods, and Opportunities" has been accepted by EMNLP 2023.

- 2023-08-15 We release the paper "EasyEdit: An Easy-to-use Knowledge Editing Framework for Large Language Models."

- 2023-07 We release EasyEdit, an easy-to-use knowledge editing framework for LLMs.

- 2023-06 We will provide a tutorial on Editing Large Language Models at AACL 2023.

- 2023-05 We release a new analysis paper, "Editing Large Language Models: Problems, Methods, and Opportunities", based on this repository! We look forward to any comments or discussions on this topic :)

- 2022-12 We create this repository to maintain a paper list on Knowledge Editing.

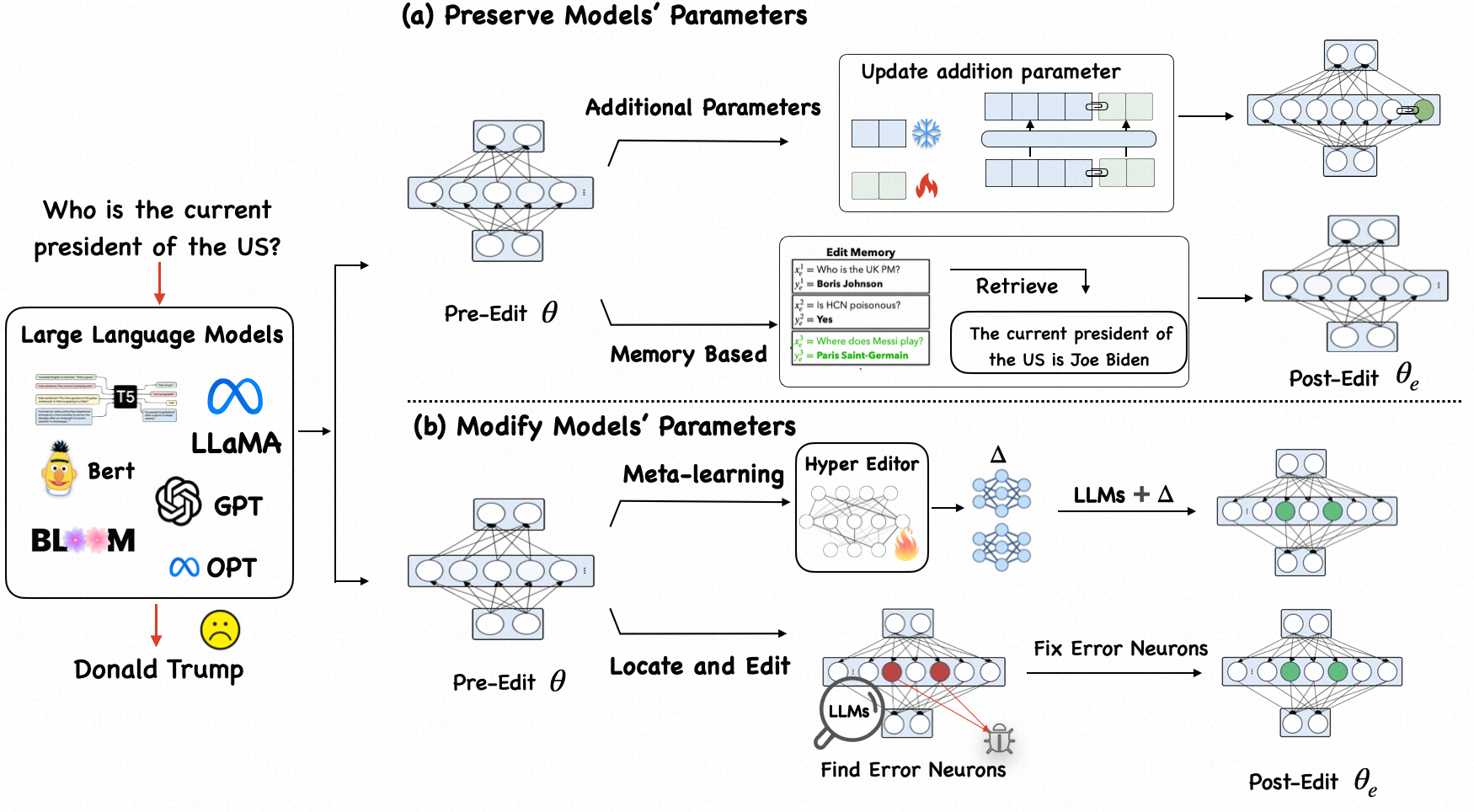

Knowledge Editing is a compelling field of research that focuses on facilitating efficient modifications to the behavior of models, particularly foundation models. The aim is to implement these changes within a specified scope of interest without negatively affecting the model's performance across a broader range of inputs.
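The two goals in this definition (change behavior inside the edit scope, leave everything else untouched) can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the "model" is a plain lookup function, `apply_edit`, `edit_success`, and `locality` are hypothetical helper names, and the facts are invented. It is not the evaluation protocol of any paper in this list.

```python
from typing import Callable, Dict, List

# A "model" here is just a function from prompt to answer (toy assumption).
Model = Callable[[str], str]


def apply_edit(model: Model, scope: Dict[str, str]) -> Model:
    """Return a model that answers in-scope prompts with the edited target
    and defers to the original model for every other prompt."""
    def edited(prompt: str) -> str:
        return scope.get(prompt, model(prompt))
    return edited


def edit_success(edited: Model, scope: Dict[str, str]) -> float:
    """Fraction of in-scope prompts that now produce the edited target."""
    hits = sum(edited(prompt) == target for prompt, target in scope.items())
    return hits / len(scope)


def locality(original: Model, edited: Model, out_of_scope: List[str]) -> float:
    """Fraction of out-of-scope prompts whose answer is unchanged by the edit."""
    same = sum(original(prompt) == edited(prompt) for prompt in out_of_scope)
    return same / len(out_of_scope)


# Invented facts standing in for a pretrained model's knowledge.
facts = {
    "Who is the CEO of ExampleCorp?": "Alice",
    "What is the capital of France?": "Paris",
}


def base_model(prompt: str) -> str:
    return facts.get(prompt, "unknown")


# The edit scope: one fact that should change.
scope = {"Who is the CEO of ExampleCorp?": "Bob"}
edited_model = apply_edit(base_model, scope)

success = edit_success(edited_model, scope)
loc = locality(
    base_model,
    edited_model,
    ["What is the capital of France?", "Who wrote Hamlet?"],
)
print(f"edit success: {success:.2f}, locality: {loc:.2f}")
```

A real editing method modifies or augments a neural model rather than wrapping a lookup table, so both scores are typically below 1.0 and have to be measured on held-out prompts.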

Knowledge Editing has strong connections with the following topics:

- Updating and fixing bugs for large language models

- Language models as knowledge bases, locating knowledge in large language models

- Lifelong learning, unlearning, etc.

- Security and privacy for large language models

This is a collection of research and review papers on Knowledge Editing. Suggestions and pull requests are welcome to help share the latest research progress.

Editing Large Language Models: Problems, Methods, and Opportunities, EMNLP 2023 Main Conference Paper. [paper]

Editing Large Language Models, AACL 2023 Tutorial. [Github] [Google Drive] [Baidu Pan]

Knowledge Editing for Large Language Models: A Survey

Song Wang, Yaochen Zhu, Haochen Liu, Zaiyi Zheng, Chen Chen, Jundong Li. [paper]

A Survey on Knowledge Editing of Neural Networks

Vittorio Mazzia, Alessandro Pedrani, Andrea Caciolai, Kay Rottmann, Davide Bernardi. [paper]

Knowledge Unlearning for LLMs: Tasks, Methods, and Challenges

Nianwen Si, Hao Zhang, Heyu Chang, Wenlin Zhang, Dan Qu, Weiqiang Zhang. [paper]

- Memory-Based Model Editing at Scale (ICML 2022)

  Eric Mitchell, Charles Lin, Antoine Bosselut, Christopher D. Manning, Chelsea Finn. [paper] [code] [demo]

- Fixing Model Bugs with Natural Language Patches. (EMNLP 2022)

  Shikhar Murty, Christopher D. Manning, Scott M. Lundberg, Marco Túlio Ribeiro. [paper] [code]

- MemPrompt: Memory-assisted Prompt Editing with User Feedback. (EMNLP 2022)

  Aman Madaan, Niket Tandon, Peter Clark, Yiming Yang. [paper] [code] [page] [video]

- Large Language Models with Controllable Working Memory.

  Daliang Li, Ankit Singh Rawat, Manzil Zaheer, Xin Wang, Michal Lukasik, Andreas Veit, Felix Yu, Sanjiv Kumar. [paper]

- Can We Edit Factual Knowledge by In-Context Learning?

  Ce Zheng, Lei Li, Qingxiu Dong, Yuxuan Fan, Zhiyong Wu, Jingjing Xu, Baobao Chang. [paper]

- Can LMs Learn New Entities from Descriptions? Challenges in Propagating Injected Knowledge

  Yasumasa Onoe, Michael J.Q. Zhang, Shankar Padmanabhan, Greg Durrett, Eunsol Choi. [paper]

- MQuAKE: Assessing Knowledge Editing in Language Models via Multi-Hop Questions

  Zexuan Zhong, Zhengxuan Wu, Christopher D. Manning, Christopher Potts, Danqi Chen. [paper]

- Calibrating Factual Knowledge in Pretrained Language Models. (EMNLP 2022)

  Qingxiu Dong, Damai Dai, Yifan Song, Jingjing Xu, Zhifang Sui, Lei Li. [paper] [code]

- Transformer-Patcher: One Mistake worth One Neuron. (ICLR 2023)

  Zeyu Huang, Yikang Shen, Xiaofeng Zhang, Jie Zhou, Wenge Rong, Zhang Xiong. [paper] [code]

- Aging with GRACE: Lifelong Model Editing with Discrete Key-Value Adaptors.

  Thomas Hartvigsen, Swami Sankaranarayanan, Hamid Palangi, Yoon Kim, Marzyeh Ghassemi. [paper] [code]

- Neural Knowledge Bank for Pretrained Transformers

  Damai Dai, Wenbin Jiang, Qingxiu Dong, Yajuan Lyu, Qiaoqiao She, Zhifang Sui. [paper]

- Rank-One Editing of Encoder-Decoder Models

  Vikas Raunak, Arul Menezes. [paper]

- Inspecting and Editing Knowledge Representations in Language Models

  Evan Hernandez, Belinda Z. Li, Jacob Andreas. [paper] [code]

- Plug-and-Play Adaptation for Continuously-updated QA. (ACL 2022 Findings)

  Kyungjae Lee, Wookje Han, Seung-won Hwang, Hwaran Lee, Joonsuk Park, Sang-Woo Lee. [paper] [code]

- Modifying Memories in Transformer Models.

  Chen Zhu, Ankit Singh Rawat, Manzil Zaheer, Srinadh Bhojanapalli, Daliang Li, Felix Yu, Sanjiv Kumar. [paper]

- Forgetting before Learning: Utilizing Parametric Arithmetic for Knowledge Updating in Large Language Models

  Shiwen Ni, Dingwei Chen, Chengming Li, Xiping Hu, Ruifeng Xu and Min Yang. [paper]

- Editing Factual Knowledge in Language Models. (EMNLP 2021)

  Nicola De Cao, Wilker Aziz, Ivan Titov. [paper] [code]

- Fast Model Editing at Scale. (ICLR 2022)

  Eric Mitchell, Charles Lin, Antoine Bosselut, Chelsea Finn, Christopher D. Manning. [paper] [code] [page]

- Editable Neural Networks. (ICLR 2020)

  Anton Sinitsin, Vsevolod Plokhotnyuk, Dmitry V. Pyrkin, Sergei Popov, Artem Babenko. [paper] [code]

- Editing a classifier by rewriting its prediction rules. (NeurIPS 2021)

  Shibani Santurkar, Dimitris Tsipras, Mahalaxmi Elango, David Bau, Antonio Torralba, Aleksander Madry. [paper] [code]

- Language Anisotropic Cross-Lingual Model Editing.

  Yang Xu, Yutai Hou, Wanxiang Che. [paper]

- Repairing Neural Networks by Leaving the Right Past Behind.

  Ryutaro Tanno, Melanie F. Pradier, Aditya Nori, Yingzhen Li. [paper]

- Locating and Editing Factual Associations in GPT. (NeurIPS 2022)

  Kevin Meng, David Bau, Alex Andonian, Yonatan Belinkov. [paper] [code] [page] [video]

- Mass-Editing Memory in a Transformer.

  Kevin Meng, Arnab Sen Sharma, Alex Andonian, Yonatan Belinkov, David Bau. [paper] [code] [page] [demo]

- Editing models with task arithmetic.

  Gabriel Ilharco, Marco Tulio Ribeiro, Mitchell Wortsman, Ludwig Schmidt, Hannaneh Hajishirzi, Ali Farhadi. [paper]

- Editing Commonsense Knowledge in GPT.

  Anshita Gupta, Debanjan Mondal, Akshay Krishna Sheshadri, Wenlong Zhao, Xiang Lorraine Li, Sarah Wiegreffe, Niket Tandon. [paper]

- Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs.

  Peter Hase, Mona Diab, Asli Celikyilmaz, Xian Li, Zornitsa Kozareva, Veselin Stoyanov, Mohit Bansal, Srinivasan Iyer. [paper] [code]

- Detecting Edit Failures In Large Language Models: An Improved Specificity Benchmark.

  Jason Hoelscher-Obermaier, Julia Persson, Esben Kran, Ioannis Konstas, Fazl Barez. [paper]

- Knowledge Neurons in Pretrained Transformers. (ACL 2022)

  Damai Dai, Li Dong, Yaru Hao, Zhifang Sui, Baobao Chang, Furu Wei. [paper] [code] [code by EleutherAI]

- LEACE: Perfect linear concept erasure in closed form.

  Nora Belrose, David Schneider-Joseph, Shauli Ravfogel, Ryan Cotterell, Edward Raff, Stella Biderman. [paper]

- Transformer Feed-Forward Layers Are Key-Value Memories. (EMNLP 2021)

  Mor Geva, Roei Schuster, Jonathan Berant, Omer Levy. [paper]

- Transformer Feed-Forward Layers Build Predictions by Promoting Concepts in the Vocabulary Space. (EMNLP 2022)

  Mor Geva, Avi Caciularu, Kevin Ro Wang, Yoav Goldberg. [paper]

- PMET: Precise Model Editing in a Transformer.

  Xiaopeng Li, Shasha Li, Shezheng Song, Jing Yang, Jun Ma, Jie Yu. [paper] [code]

- Unlearning Bias in Language Models by Partitioning Gradients. (ACL 2023 Findings)

  Charles Yu, Sullam Jeoung, Anish Kasi, Pengfei Yu, Heng Ji. [paper] [code]

- DEPN: Detecting and Editing Privacy Neurons in Pretrained Language Models (EMNLP 2023)

  Xinwei Wu, Junzhuo Li, Minghui Xu, Weilong Dong, Shuangzhi Wu, Chao Bian, Deyi Xiong. [paper]

- Untying the Reversal Curse via Bidirectional Language Model Editing

  Jun-Yu Ma, Jia-Chen Gu, Zhen-Hua Ling, Quan Liu, Cong Liu. [paper]

- FRUIT: Faithfully Reflecting Updated Information in Text. (NAACL 2022)

  Robert L. Logan IV, Alexandre Passos, Sameer Singh, Ming-Wei Chang. [paper] [code]

- Entailer: Answering Questions with Faithful and Truthful Chains of Reasoning. (EMNLP 2022)

  Oyvind Tafjord, Bhavana Dalvi Mishra, Peter Clark. [paper] [code] [video]

- Towards Tracing Factual Knowledge in Language Models Back to the Training Data. (EMNLP 2022)

  Ekin Akyürek, Tolga Bolukbasi, Frederick Liu, Binbin Xiong, Ian Tenney, Jacob Andreas, Kelvin Guu. [paper]

- Prompting GPT-3 To Be Reliable.

  Chenglei Si, Zhe Gan, Zhengyuan Yang, Shuohang Wang, Jianfeng Wang, Jordan Boyd-Graber, Lijuan Wang. [paper]

- Patching open-vocabulary models by interpolating weights. (NeurIPS 2022)

  Gabriel Ilharco, Mitchell Wortsman, Samir Yitzhak Gadre, Shuran Song, Hannaneh Hajishirzi, Simon Kornblith, Ali Farhadi, Ludwig Schmidt. [paper] [code]

- Decouple knowledge from paramters for plug-and-play language modeling (ACL 2023 Findings)

  Xin Cheng, Yankai Lin, Xiuying Chen, Dongyan Zhao, Rui Yan. [paper] [code]

- Backpack Language Models

  John Hewitt, John Thickstun, Christopher D. Manning, Percy Liang. [paper]

- Learning to Model Editing Processes. (EMNLP 2022)

  Machel Reid, Graham Neubig. [paper]

- Trends in Integration of Knowledge and Large Language Models: A Survey and Taxonomy of Methods, Benchmarks, and Applications.

  Zhangyin Feng, Weitao Ma, Weijiang Yu, Lei Huang, Haotian Wang, Qianglong Chen, Weihua Peng, Xiaocheng Feng, Bing Qin, Ting Liu. [paper]

- Does Localization Inform Editing? Surprising Differences in Causality-Based Localization vs. Knowledge Editing in Language Models.

  Peter Hase, Mohit Bansal, Been Kim, Asma Ghandeharioun. [paper] [code]

- Dissecting Recall of Factual Associations in Auto-Regressive Language Models

  Mor Geva, Jasmijn Bastings, Katja Filippova, Amir Globerson. [paper]

- Evaluating the Ripple Effects of Knowledge Editing in Language Models

  Roi Cohen, Eden Biran, Ori Yoran, Amir Globerson, Mor Geva. [paper]

- Edit at your own risk: evaluating the robustness of edited models to distribution shifts.

  Davis Brown, Charles Godfrey, Cody Nizinski, Jonathan Tu, Henry Kvinge. [paper]

- Journey to the Center of the Knowledge Neurons: Discoveries of Language-Independent Knowledge Neurons and Degenerate Knowledge Neurons.

  Yuheng Chen, Pengfei Cao, Yubo Chen, Kang Liu, Jun Zhao. [paper]

- Linearity of Relation Decoding in Transformer Language Models

  Evan Hernandez, Martin Wattenberg, Arnab Sen Sharma, Jacob Andreas, Tal Haklay, Yonatan Belinkov, Kevin Meng, David Bau. [paper]

- KLoB: a Benchmark for Assessing Knowledge Locating Methods in Language Models

  Yiming Ju, Zheng Zhang. [paper]

- Inference-Time Intervention: Eliciting Truthful Answers from a Language Model (NeurIPS 2023)

  Kenneth Li, Oam Patel, Fernanda Viégas, Hanspeter Pfister, Martin Wattenberg. [paper] [code]

- Emptying the Ocean with a Spoon: Should We Edit Models? (EMNLP 2023 Findings)

  Yuval Pinter and Michael Elhadad. [paper]

- Unveiling the Pitfalls of Knowledge Editing for Large Language Models

  Zhoubo Li, Ningyu Zhang, Yunzhi Yao, Mengru Wang, Xi Chen and Huajun Chen. [paper]

- Editing Personality for LLMs

  Shengyu Mao, Ningyu Zhang, Xiaohan Wang, Mengru Wang, Yunzhi Yao, Yong Jiang, Pengjun Xie, Fei Huang and Huajun Chen. [paper]

| Edit Type | Benchmarks & Datasets |
|---|---|
| Fact Knowledge | ZsRE, ZsRE plus, CounterFact, CounterFact plus, CounterFact+, ECBD, MQuAKE |
| Multi-Lingual | Bi-ZsRE, Eva-KELLM |
| Sentiment | Convsent |
| Bias | Bias in Bios |
| Hallucination | WikiBio |
| Commonsense | MEMITcsk |
| Reasoning | Eva-KELLM |
| Privacy Information Protection | PrivQA, Knowledge Sanitation, Enron |
| Toxic Information | RealToxicityPrompts |
| MultiModal | MMEdit |

EasyEdit: An Easy-to-use Knowledge Editing Framework for Large Language Models.

FastEdit: Editing large language models within 10 seconds

Please cite our paper if you find our work useful.

@article{DBLP:journals/corr/abs-2305-13172,
  author = {Yunzhi Yao and
            Peng Wang and
            Bozhong Tian and
            Siyuan Cheng and
            Zhoubo Li and
            Shumin Deng and
            Huajun Chen and
            Ningyu Zhang},
  title = {Editing Large Language Models: Problems, Methods, and Opportunities},
  journal = {CoRR},
  volume = {abs/2305.13172},
  year = {2023},
  url = {https://doi.org/10.48550/arXiv.2305.13172},
  doi = {10.48550/arXiv.2305.13172},
  eprinttype = {arXiv},
  eprint = {2305.13172},
  timestamp = {Tue, 30 May 2023 17:04:46 +0200},
  biburl = {https://dblp.org/rec/journals/corr/abs-2305-13172.bib},
  bibsource = {dblp computer science bibliography, https://dblp.org}
}

- We may have missed important works in this field. Please contribute to this repo! Thanks in advance for your efforts.

- We would like to express our gratitude to Longhui Yu for the kind reminder about the missing papers.