Official PyTorch implementation of "TransNeXt: Robust Foveal Visual Perception for Vision Transformers".

- ✔️ Release of model code and CUDA implementation for acceleration.
- Release of comprehensive training and inference code.
- Release of pretrained model weights.

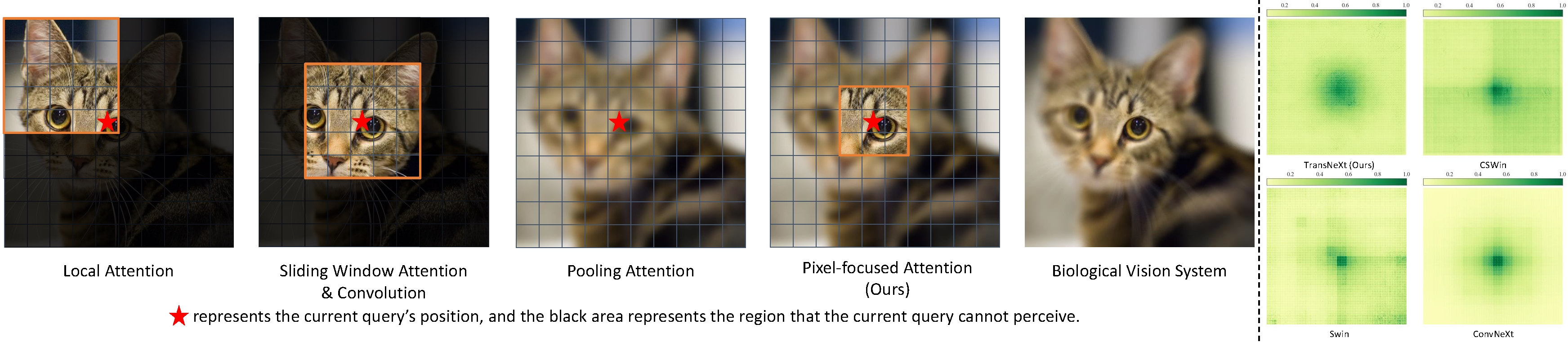

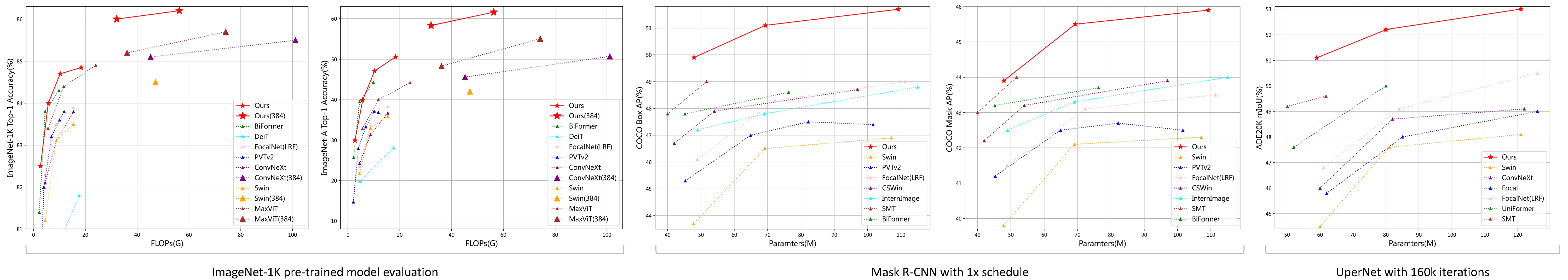

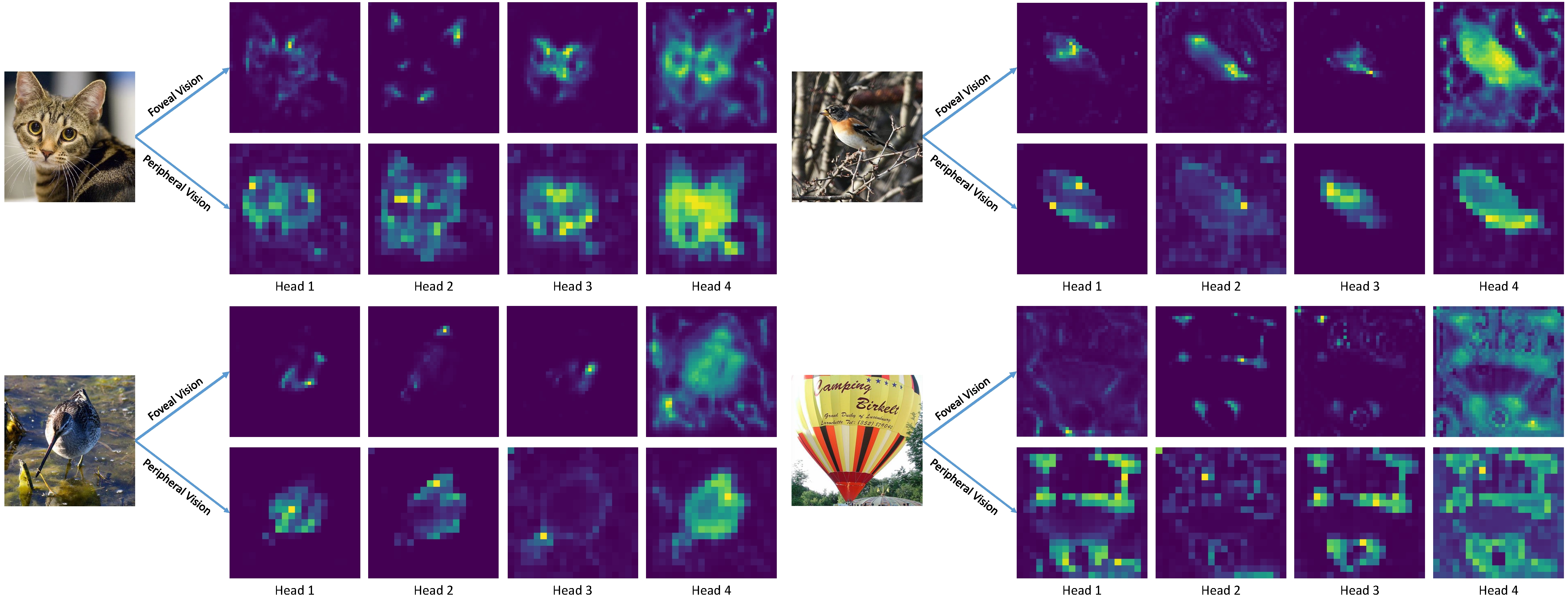

Due to the depth degradation effect in residual connections, many efficient Vision Transformer models that rely on stacking layers for information exchange often fail to achieve sufficient information mixing, leading to unnatural visual perception. To address this issue, we propose Aggregated Attention, a biomimetic token mixer that simulates biological foveal vision and continuous eye movement while enabling each token on the feature map to have global perception. Furthermore, we incorporate learnable tokens that interact with conventional queries and keys, further diversifying the generation of affinity matrices beyond merely relying on the similarity between queries and keys. Our approach does not rely on stacking for information exchange, thus effectively avoiding depth degradation and achieving natural visual perception.
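To make the idea of pairing fine-grained local perception with a coarse global view more concrete, here is a toy NumPy sketch. It is not the authors' Aggregated Attention and not the CUDA kernel in `swattention_extension`; the function name, the average-pooling coarse branch, the window and pool sizes, and the way the hypothetical `learn_tokens` enter the local scores are all our own assumptions. It shows a single-head query attending jointly, under one softmax, to its 3×3 neighborhood and to a pooled summary of the whole feature map, so every token gets a global view within a single layer.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D score vector; -inf entries get weight 0."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()


def toy_foveal_attention(x, wq, wk, wv, learn_tokens, window=3, pool=2):
    """Hypothetical single-head toy: each query attends jointly to a fine
    window x window neighborhood and to a coarse, average-pooled copy of the map.

    x: (H, W, C) feature map; wq, wk, wv: (C, C) projections;
    learn_tokens: (window * window, C) hypothetical learnable tokens.
    """
    H, W, C = x.shape
    assert H % pool == 0 and W % pool == 0, "toy pooling needs divisible sizes"
    q, k, v = x @ wq, x @ wk, x @ wv
    scale = 1.0 / np.sqrt(C)

    # Coarse branch: average-pool keys and values into (H/pool)*(W/pool) tokens,
    # so every query also sees a low-resolution summary of the whole map.
    def avg_pool(t):
        t = t.reshape(H // pool, pool, W // pool, pool, C)
        return t.mean(axis=(1, 3)).reshape(-1, C)

    k_coarse, v_coarse = avg_pool(k), avg_pool(v)

    r = window // 2
    out = np.zeros_like(v)
    for i in range(H):
        for j in range(W):
            qi = q[i, j]
            # Fine branch: keys/values inside the window; out-of-bounds slots stay -inf.
            local_scores = np.full(window * window, -np.inf)
            local_v = np.zeros((window * window, C))
            for a in range(-r, r + 1):
                for b in range(-r, r + 1):
                    ii, jj = i + a, j + b
                    if 0 <= ii < H and 0 <= jj < W:
                        slot = (a + r) * window + (b + r)
                        # Query-key similarity plus a query-to-learnable-token term
                        # that does not depend on the key content.
                        local_scores[slot] = (qi @ k[ii, jj] + qi @ learn_tokens[slot]) * scale
                        local_v[slot] = v[ii, jj]
            coarse_scores = (k_coarse @ qi) * scale
            # One softmax over both branches, so fine and coarse keys compete.
            weights = softmax(np.concatenate([local_scores, coarse_scores]))
            out[i, j] = weights @ np.concatenate([local_v, v_coarse], axis=0)
    return out
```

For example, with a 4×4 map, `window=3` and `pool=2`, each query scores up to 9 fine slots plus 4 coarse tokens; slots that fall outside the map are masked with `-inf` so they receive zero weight. The explicit loops favor readability over speed.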

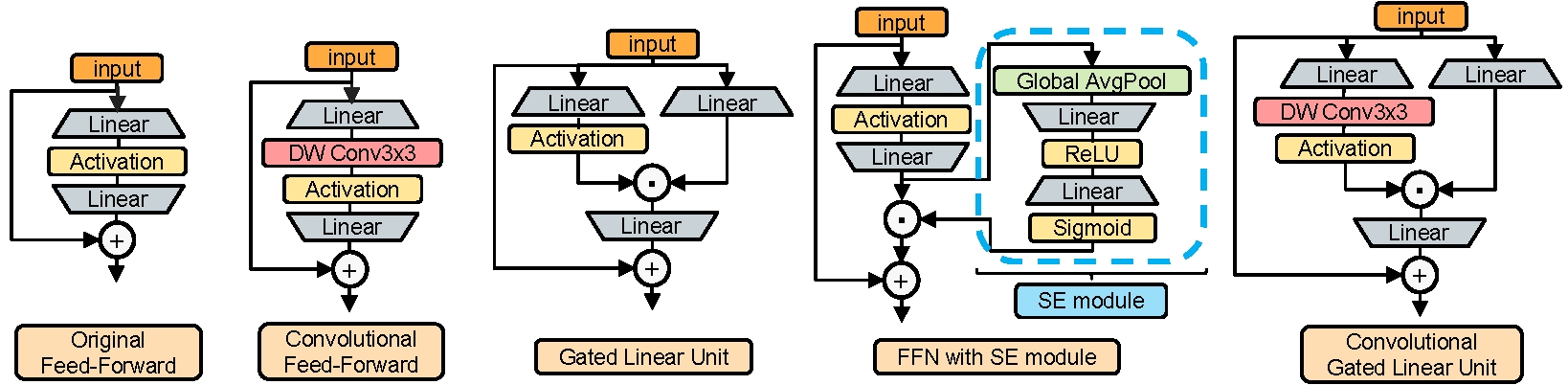

Additionally, we propose Convolutional GLU, a channel mixer that bridges the gap between GLU and the SE mechanism, empowering each token with channel attention based on the image features of its nearest neighbors, which enhances local modeling capability and model robustness. We combine Aggregated Attention and Convolutional GLU to create a new visual backbone called TransNeXt. Extensive experiments demonstrate that TransNeXt achieves state-of-the-art performance across multiple model sizes. At a resolution of
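A minimal NumPy sketch of a GLU-style channel mixer whose gate is computed from each token's 3×3 neighborhood may help illustrate the Convolutional GLU description. It assumes a 3×3 depthwise convolution followed by GELU on the gating branch; the function names, weight shapes and the tanh approximation of GELU are our own choices, and this is not the repository's module.

```python
import numpy as np


def gelu(x: np.ndarray) -> np.ndarray:
    """Tanh approximation of GELU."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def depthwise_conv3x3(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """Per-channel 3x3 cross-correlation with zero padding and stride 1.

    x: (H, W, C); kernels: (3, 3, C), one 3x3 filter per channel.
    """
    H, W, _ = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros_like(x)
    for a in range(3):
        for b in range(3):
            out += padded[a:a + H, b:b + W, :] * kernels[a, b]
    return out


def conv_glu(x, w_in, dw_kernels, w_out):
    """Hypothetical Convolutional-GLU-style channel mixer.

    x: (H, W, C); w_in: (C, 2 * hidden) projects to a gate half and a value half;
    dw_kernels: (3, 3, hidden); w_out: (hidden, C).
    """
    hidden = w_in.shape[1] // 2
    h = x @ w_in
    gate, value = h[..., :hidden], h[..., hidden:]
    # The gate is computed from each token's 3x3 neighborhood, so every token
    # receives its own channel-wise gating (local, SE-like channel attention).
    gate = gelu(depthwise_conv3x3(gate, dw_kernels))
    return (gate * value) @ w_out
```

Because the gate at each position depends only on its 3×3 neighborhood, every token receives its own channel-wise gating; a standard SE block instead pools the whole map into a single gate vector shared by all tokens.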

Before installing the CUDA extension, please ensure that the CUDA toolkit version on your machine (as reported by `nvcc --version`) matches the CUDA version your PyTorch build was compiled with (`torch.version.cuda`).

cd swattention_extension

pip install .
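As a quick pre-installation check, the following standalone Python script compares the CUDA version PyTorch was built with (`torch.version.cuda`) against the release reported by `nvcc --version`. It is not part of this repository; treating "matches" as equal major.minor versions and parsing the `release X.Y` text of the nvcc output are our assumptions.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_nvcc_release(nvcc_output: str) -> Optional[str]:
    """Extract e.g. '11.8' from the 'release 11.8, V11.8.89' line of `nvcc --version`."""
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    return f"{match.group(1)}.{match.group(2)}" if match else None


def same_major_minor(a: Optional[str], b: Optional[str]) -> bool:
    """Compare two CUDA version strings on major.minor only (assumed criterion)."""
    if a is None or b is None:
        return False
    return a.split(".")[:2] == b.split(".")[:2]


def main() -> None:
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed.")
        return
    torch_cuda = torch.version.cuda  # None for CPU-only PyTorch builds
    nvcc = shutil.which("nvcc")
    nvcc_cuda = None
    if nvcc is not None:
        result = subprocess.run([nvcc, "--version"], capture_output=True, text=True)
        nvcc_cuda = parse_nvcc_release(result.stdout)
    print(f"PyTorch built with CUDA: {torch_cuda}; nvcc reports CUDA: {nvcc_cuda}")
    if same_major_minor(torch_cuda, nvcc_cuda):
        print("Versions match.")
    else:
        print("Versions differ or were not found; align them before `pip install .`.")


if __name__ == "__main__":
    main()
```

Run it (for example as `python check_cuda.py`, a hypothetical filename) from the same environment in which you will run `pip install .`.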

2023.11.28: We have submitted the preprint of our paper to arXiv.

2023.09.21: We have submitted our paper and the model code to OpenReview, where they are publicly accessible.

If you find our work helpful, please consider citing the following BibTeX entry. We would also greatly appreciate a star for this project.

@misc{shi2023transnext,
  author = {Dai Shi},
  title = {TransNeXt: Robust Foveal Visual Perception for Vision Transformers},
  year = {2023},
  eprint = {2311.17132},
  archivePrefix = {arXiv},
  primaryClass = {cs.CV}
}