This repository contains PyTorch implementations of deep reinforcement learning algorithms. For a TensorFlow implementation, see tsallis_actor_critic_mujoco.

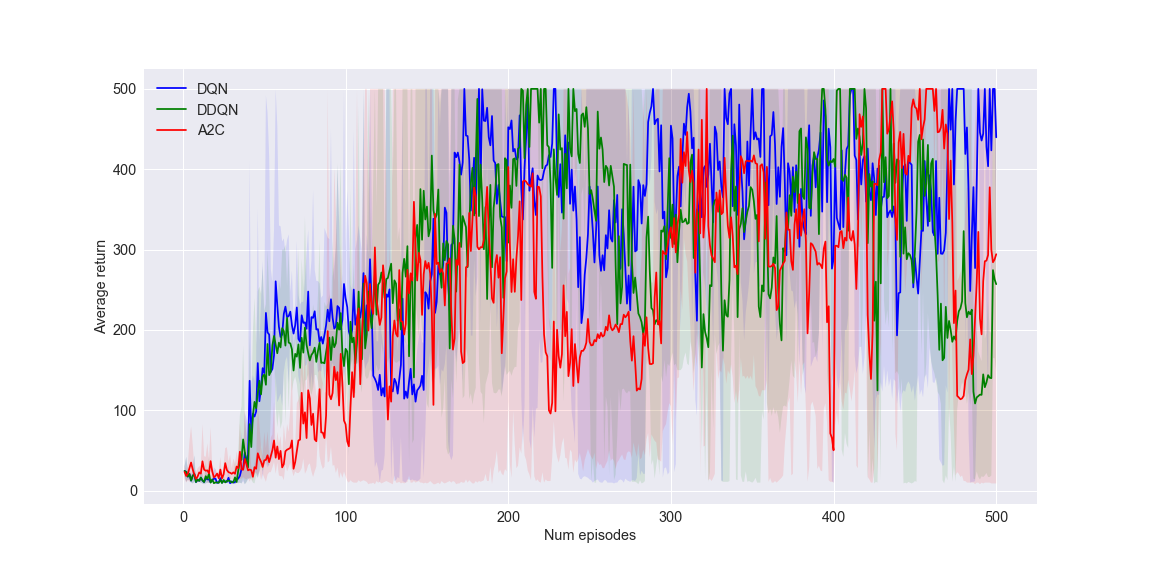

- Deep Q-Network (DQN) (V. Mnih et al. 2015)

- Double DQN (DDQN) (H. Van Hasselt et al. 2015)

- Advantage Actor Critic (A2C)

- Vanilla Policy Gradient (VPG)

- Natural Policy Gradient (NPG) (S. Kakade et al. 2002)

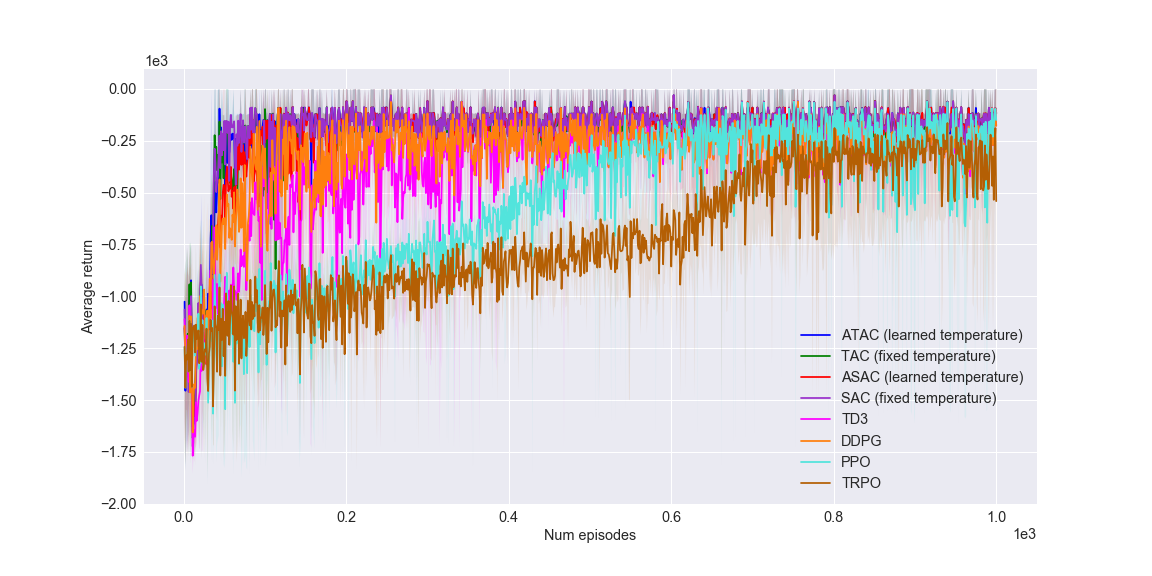

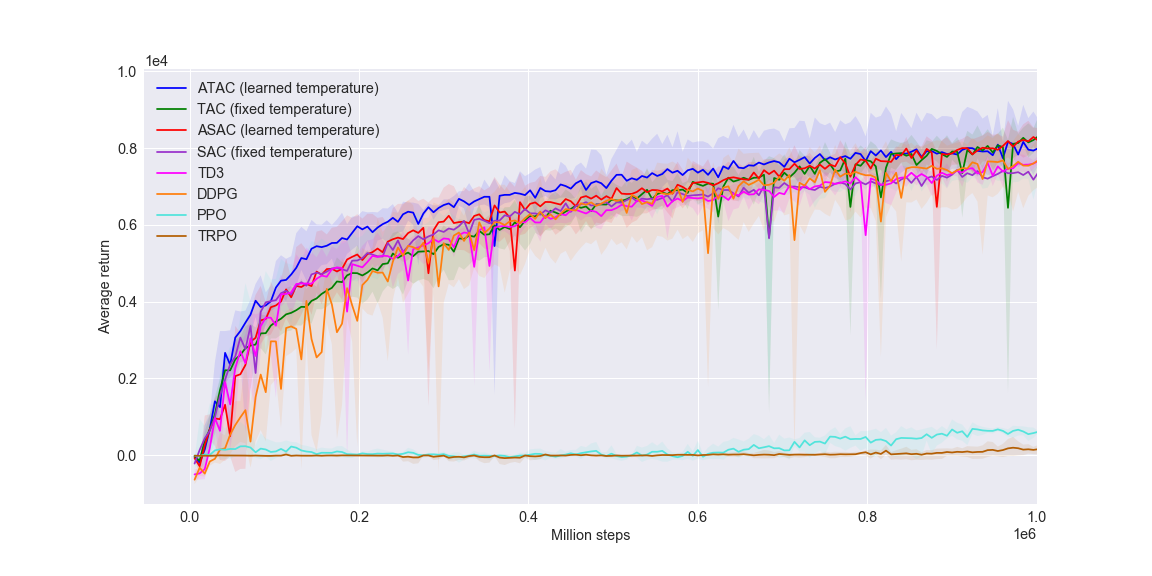

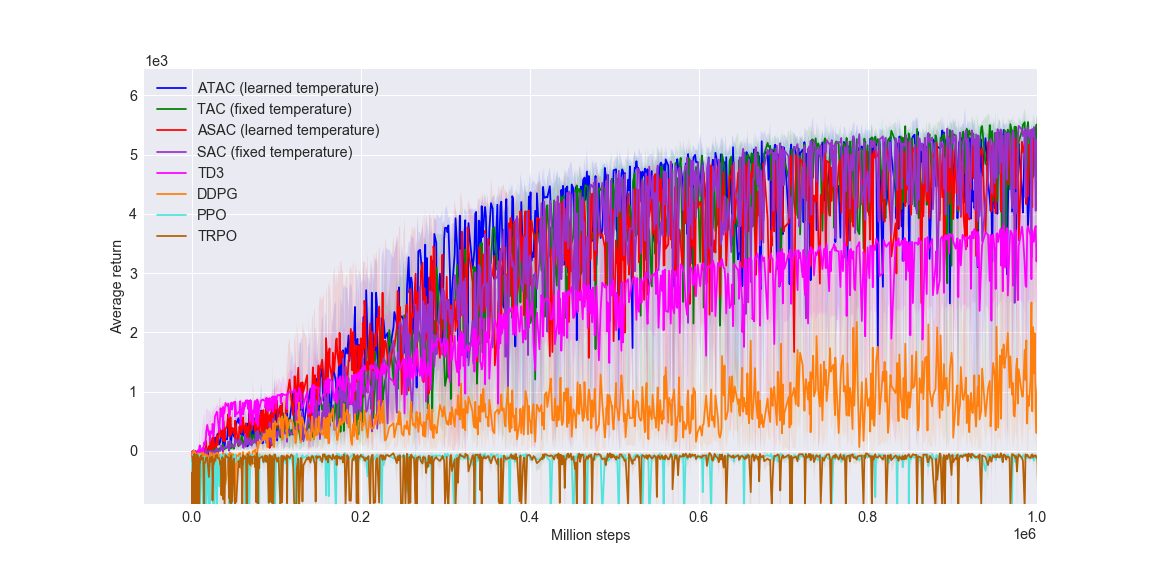

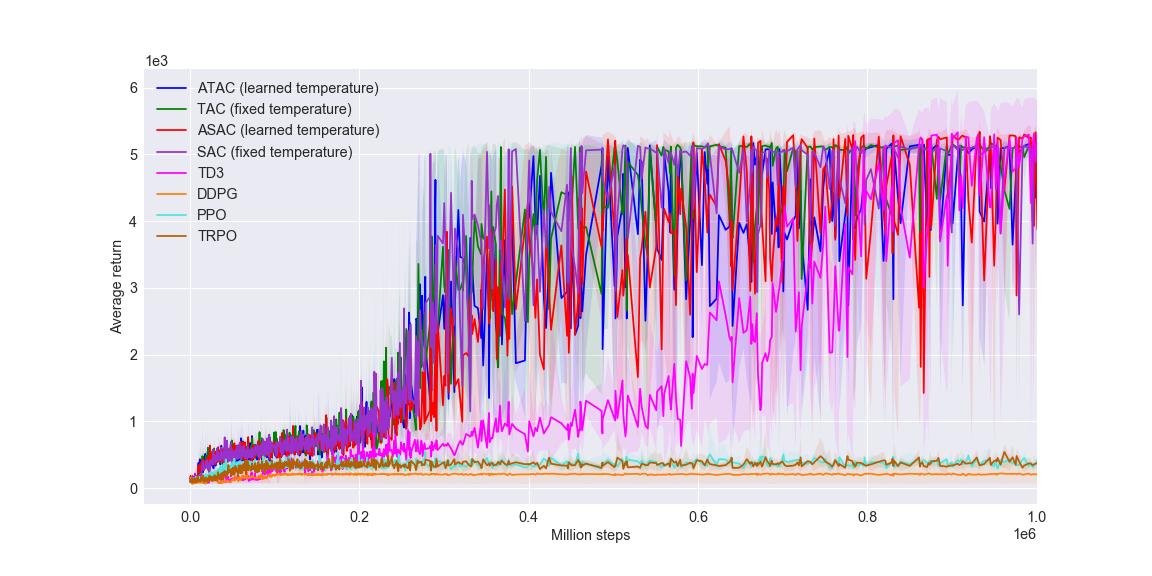

- Trust Region Policy Optimization (TRPO) (J. Schulman et al. 2015)

- Proximal Policy Optimization (PPO) (J. Schulman et al. 2017)

- Deep Deterministic Policy Gradient (DDPG) (T. Lillicrap et al. 2015)

- Twin Delayed DDPG (TD3) (S. Fujimoto et al. 2018)

- Soft Actor-Critic (SAC) (T. Haarnoja et al. 2018)

- Automating entropy adjustment on SAC (ASAC) (T. Haarnoja et al. 2018)

- Tsallis Actor-Critic (TAC) (K. Lee et al. 2019)

- Automating entropy adjustment on TAC (ATAC)

- CartPole-v1 (as described here)

- Pendulum-v0 (as described here)

- MuJoCo environments (HalfCheetah-v2, Ant-v2, Humanoid-v2, etc.) (as described here)

- CartPole-v1: observation space 4, action space 2

- Pendulum-v0: observation space 3, action space 1

- HalfCheetah-v2: observation space 17, action space 6

- Ant-v2: observation space 111, action space 8

- Humanoid-v2: observation space 376, action space 17

The repository's high-level structure is:

    ├── agents
    │   └── common
    ├── results
    │   ├── data
    │   └── graphs
    ├── tests
    │   └── save_model

To train all the different agents on MuJoCo environments, follow these steps:

    git clone https://github.com/dongminlee94/deep_rl.git
    cd deep_rl
    python run_mujoco.py

For other environments, replace run_mujoco.py in the last command with run_cartpole.py or run_pendulum.py.

To change the configuration of an agent, pass command-line options, for example:

    python run_mujoco.py \
        --env=Humanoid-v2 \
        --algo=atac \
        --seed=0 \
        --iterations=200 \
        --steps_per_iter=5000 \
        --max_step=1000
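
To repeat a run over several seeds or environments, the command above can be generated programmatically. The following is a minimal sketch, not part of the repository: the `build_commands` helper is hypothetical, and it only uses the `--env`, `--algo`, and `--seed` flags shown above. It assumes the script is launched from the repository root.

```python
import itertools
import sys


def build_commands(envs, algos, seeds, script="run_mujoco.py"):
    """Build one command (as an argument list) per (env, algo, seed) combination.

    Hypothetical helper: only the flags shown in the README are used.
    """
    commands = []
    for env, algo, seed in itertools.product(envs, algos, seeds):
        commands.append([
            sys.executable,  # the Python interpreter running this script
            script,
            f"--env={env}",
            f"--algo={algo}",
            f"--seed={seed}",
        ])
    return commands


if __name__ == "__main__":
    # Print the commands rather than running them. To launch training,
    # pass each list to subprocess.run(cmd, check=True) instead.
    for cmd in build_commands(["Humanoid-v2"], ["atac"], seeds=[0, 1, 2]):
        print(" ".join(cmd))
```

Printing first makes it easy to check the generated commands before committing to long training runs.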

To watch the trained agents on MuJoCo environments, follow these steps:

    cd tests
    python mujoco_test.py --load=envname_algoname_...

Copy the name of a saved model from tests/save_model/envname_algoname_... and paste it in place of envname_algoname_... in the command above, so that the saved model is loaded.
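
Finding the right name to paste can be scripted. The sketch below is an illustration rather than repository code: `find_saved_models` is a hypothetical helper, and it assumes only that saved model names begin with the environment name and algorithm name joined by underscores, as in the `envname_algoname_...` pattern above.

```python
from pathlib import Path


def find_saved_models(save_dir, env_name, algo_name):
    """Return the names in save_dir that start with '<env_name>_<algo_name>_'."""
    prefix = f"{env_name}_{algo_name}_"
    directory = Path(save_dir)
    if not directory.is_dir():
        # No save directory yet, so no models have been saved.
        return []
    return sorted(p.name for p in directory.iterdir() if p.name.startswith(prefix))


if __name__ == "__main__":
    # Assumes the script is run from the repository root.
    for name in find_saved_models("tests/save_model", "Humanoid-v2", "atac"):
        print(name)
```

Each printed name can then be passed to `--load=`.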