See the repositories below for implementation details. The trained files are provided by the repository linked below and are downloaded automatically when you build.

https://github.com/lewes6369/TensorRT-Yolov3

https://github.com/wang-xinyu/tensorrtx

https://github.com/tier4/AutowareArchitectureProposal.git

- trained file (608) : https://drive.google.com/drive/folders/1F3f2_CZTOIcuUhvubNaLlMoIx0_Pv6_x?usp=sharing

Please note that the above repositories are under the MIT or Apache 2.0 license.

- Ubuntu 18.04

- ROS Melodic

- CUDA 10.2

- cuDNN 7.6.5

- TensorRT 7.0.0

- Install ROS and colcon.
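Before building, it can help to confirm that the tools this README relies on are on `PATH`. The following is a minimal sketch, not part of the repository: the `check_tool` helper and the list of tool names are assumptions about a typical install and may need adjusting for your setup.

```sh
#!/bin/sh
# Hypothetical helper (not from the repository): report whether a command is on PATH.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1: found"
    else
        echo "$1: missing"
    fi
}

# Assumed tool names for Ubuntu, CUDA, ROS, and colcon respectively.
for tool in lsb_release nvcc roslaunch colcon; do
    check_tool "$tool"
done
```

This only checks for presence, not versions. On an install made from Debian packages, `dpkg -l | grep -E 'libcudnn|libnvinfer'` should list the installed cuDNN and TensorRT package versions.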

```sh
mkdir -p workspace/src
cd workspace/src
git clone https://github.com/wep21/tensorrt_yolov4_ros.git
cd tensorrt_yolov4_ros && mkdir data
```

- Place trained models under data/.

- Copy the msgs under src/ from https://github.com/tier4/AutowareArchitectureProposal/tree/master/src/common/msgs.

```sh
cd workspace
colcon build --cmake-args -DCMAKE_BUILD_TYPE=Release --packages-up-to tensorrt_yolo4
source install/setup.bash
roslaunch tensorrt_yolo4 tensorrt_yolo4.launch
```

- Publish /image_raw from a real camera or a rosbag.

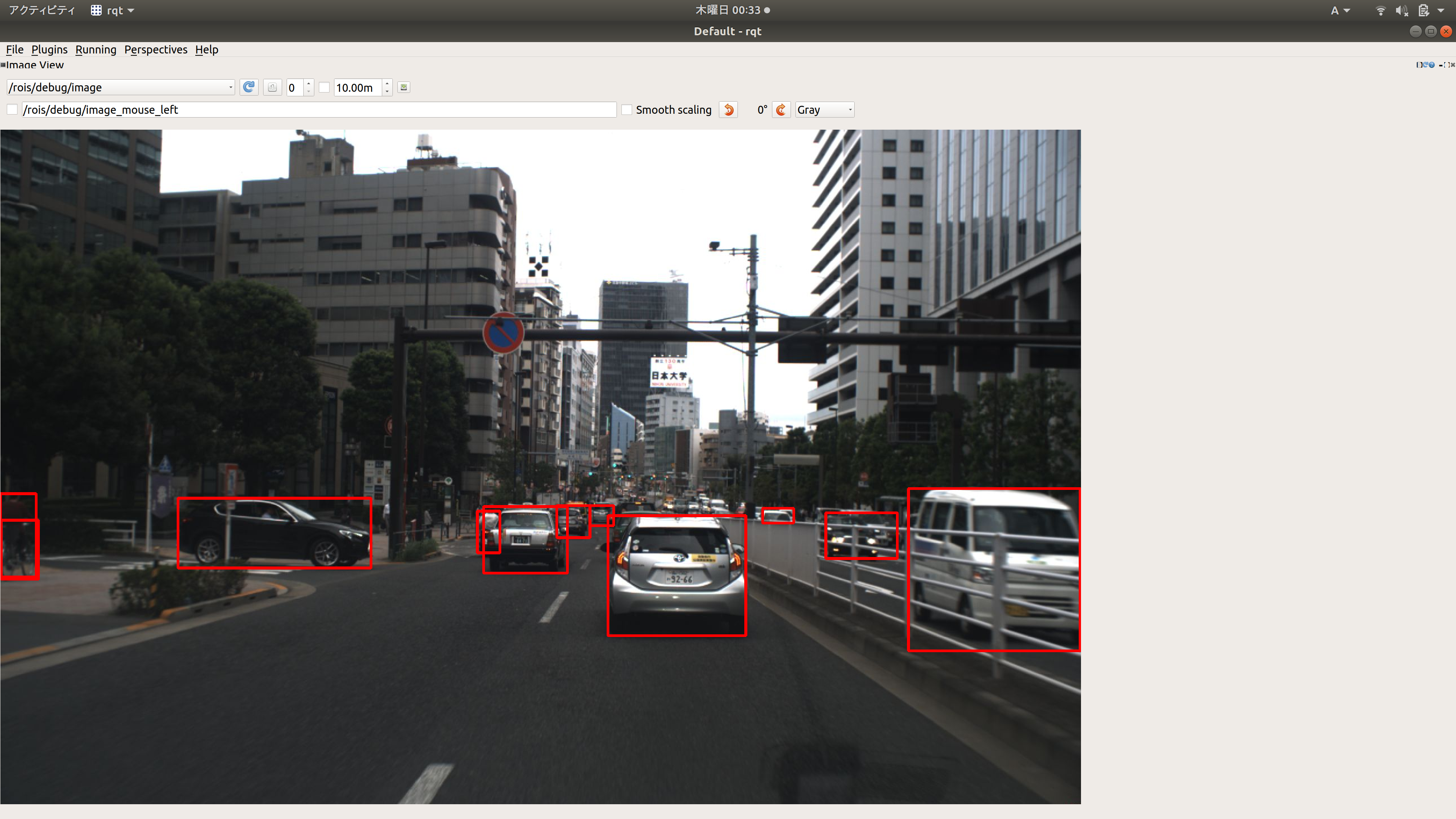

- Check /rois/debug/image with rqt_image_view.
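If the images come from a rosbag whose image topic is not named /image_raw, one option is to remap the topic at playback. The sketch below only prints the commands you would run in two separate terminals. `BAG` and `SRC_TOPIC` are hypothetical placeholders, and `play_cmd` and `view_cmd` are illustrative helpers, not part of the repository.

```sh
#!/bin/sh
# Hypothetical placeholders: substitute your own recording and its image topic.
BAG="${BAG:-sample.bag}"
SRC_TOPIC="${SRC_TOPIC:-/camera/image_raw}"

# Print the rosbag command that replays the bag with its image topic
# remapped to /image_raw, the topic named in this README.
play_cmd() {
    echo "rosbag play $1 $2:=/image_raw"
}

# Print the viewer command for the debug image topic.
view_cmd() {
    echo "rosrun rqt_image_view rqt_image_view /rois/debug/image"
}

play_cmd "$BAG" "$SRC_TOPIC"
view_cmd
```

Run the printed `rosbag play` command while the launch file is running, then start the viewer in another terminal. If the viewer does not pick up the topic from the command line, select /rois/debug/image from its drop-down list instead.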

sensor_msgs::Image

autoware_perception_msgs::DynamicObjectWithFeatureArray